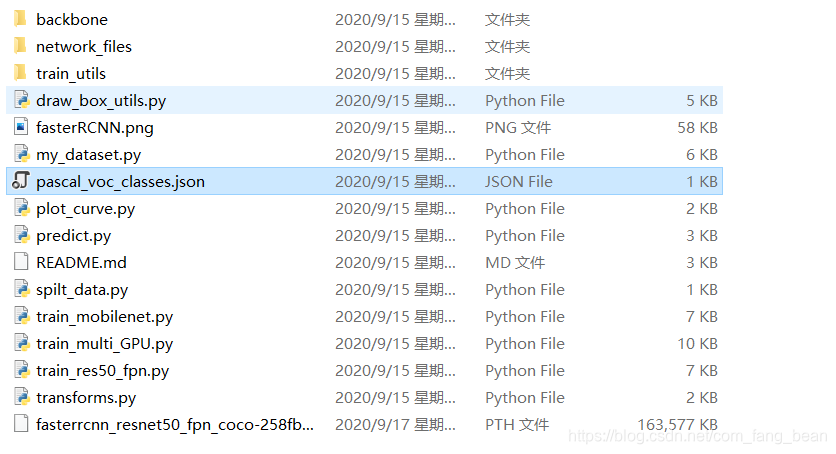

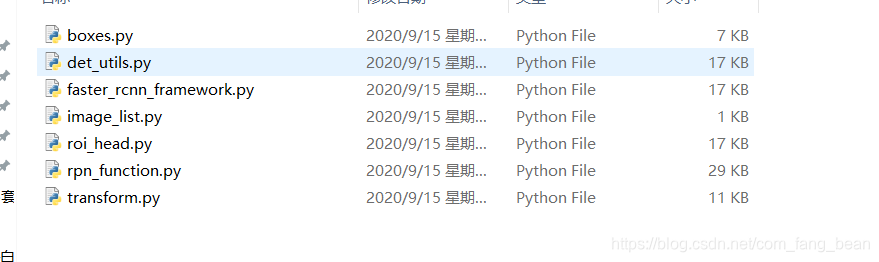

First, a look at the system architecture:

Walking through the create_model method:

1. Load the pretrained MobileNetV2 model, keep only its feature-extraction layers, and set the number of output channels:

    backbone = MobileNetV2(weights_path="./backbone/mobilenet_v2.pth").features
    backbone.out_channels = 1280

2. Create the anchor generator. AnchorsGenerator is a class rather than a function; what it does internally is covered later:

    anchor_generator = AnchorsGenerator(sizes=((32, 64, 128, 256, 512),),
                                        aspect_ratios=((0.5, 1.0, 2.0),))

3. Create the RoI pooling layer:

    roi_pooler = torchvision.ops.MultiScaleRoIAlign(featmap_names=['0'],  # feature maps on which RoI pooling is performed
                                                    output_size=[7, 7],   # size of the feature matrix produced by RoI pooling
                                                    sampling_ratio=2)     # sampling ratio

4. Build the model by calling FasterRCNN from faster_rcnn_framework:

    model = FasterRCNN(backbone=backbone,
                       num_classes=num_classes,
                       rpn_anchor_generator=anchor_generator,
                       box_roi_pool=roi_pooler)
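The sizes and aspect_ratios passed to AnchorsGenerator in step 2 combine into 15 anchor shapes per feature-map location. The sketch below reproduces only that combination step in plain Python. It assumes the torchvision convention (aspect ratio = height / width, anchor area kept at size squared); the helper name `anchor_shapes` is hypothetical and is not part of the repository.

```python
import math


def anchor_shapes(sizes, aspect_ratios):
    """Return one (width, height) pair per (aspect_ratio, size) combination.

    Assumption: aspect ratio = height / width, and every anchor keeps an
    area of size ** 2, as in torchvision's anchor generator.
    """
    shapes = []
    for ratio in aspect_ratios:
        h_ratio = math.sqrt(ratio)
        w_ratio = 1.0 / h_ratio
        for size in sizes:
            shapes.append((size * w_ratio, size * h_ratio))
    return shapes


shapes = anchor_shapes((32, 64, 128, 256, 512), (0.5, 1.0, 2.0))
for width, height in shapes:
    print("{:7.1f} x {:7.1f}".format(width, height))
```

With 5 sizes and 3 aspect ratios, the RPN scores 15 candidate boxes at each position of the single feature map produced by the MobileNetV2 backbone.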

Walking through the main method:

1. Select the device: the GPU if CUDA is available, otherwise the CPU:

    device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")
    print(device)

2. Check whether the folder for saved weights exists, and create it if it does not:

    if not os.path.exists("save_weights"):
        os.makedirs("save_weights")

3. Define the data preprocessing transforms for training and validation:

    data_transform = {
        "train": transforms.Compose([transforms.ToTensor(),
                                     transforms.RandomHorizontalFlip(0.5)]),
        "val": transforms.Compose([transforms.ToTensor()])
    }

4. Set the dataset root and check that it contains the VOCdevkit folder:

    VOC_root = "./"
    assert os.path.exists(os.path.join(VOC_root, "VOCdevkit")), "not found VOCdevkit in path:'{}'".format(VOC_root)

5. Load the training data and the validation data, and wrap each in a DataLoader:

    train_data_set = VOC2012DataSet(VOC_root, data_transform["train"], True)
    train_data_loader = torch.utils.data.DataLoader(train_data_set,
                                                    batch_size=8,
                                                    shuffle=False,
                                                    num_workers=0,
                                                    collate_fn=utils.collate_fn)

    val_data_set = VOC2012DataSet(VOC_root, data_transform["val"], False)
    val_data_set_loader = torch.utils.data.DataLoader(val_data_set,
                                                      batch_size=1,
                                                      shuffle=False,
                                                      num_workers=0,
                                                      collate_fn=utils.collate_fn)
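Both loaders pass a custom collate_fn because every sample is an (image, target) pair, and images of different sizes with different numbers of boxes cannot be stacked into one tensor by the default collation. The following is a minimal sketch of such a function in the style of the torchvision detection references. Whether utils.collate_fn in the repository is written exactly this way is an assumption, and the sample data uses plain strings and dicts as stand-ins for tensors.

```python
def collate_fn(batch):
    # batch is a list of (image, target) pairs. Transpose it into a tuple of
    # images and a tuple of targets instead of stacking into a single tensor.
    return tuple(zip(*batch))


# Stand-in samples: strings instead of image tensors, dicts as targets.
batch = [
    ("img_a", {"boxes": [[0, 0, 10, 10]]}),
    ("img_b", {"boxes": [[5, 5, 20, 20], [1, 2, 3, 4]]}),
]
images, targets = collate_fn(batch)
print(images)
print([len(t["boxes"]) for t in targets])
```

Keeping images and targets as tuples leaves the resizing and batching of differently sized images to the model itself.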

6. Freeze the weights of the feature-extraction backbone:

    for param in model.backbone.parameters():
        param.requires_grad = False

7. Set up the optimizer over the parameters that are still trainable:

    params = [p for p in model.parameters() if p.requires_grad]
    optimizer = torch.optim.SGD(params, lr=0.005,
                                momentum=0.9, weight_decay=0.0005)

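8. With the backbone frozen, train the rest of the network (the RPN and the RoI heads) for 5 epochs, evaluate on the validation set after each epoch, and save the weights at the end:

    num_epochs = 5
    for epoch in range(num_epochs):
        # train for one epoch, printing every 50 iterations
        utils.train_one_epoch(model, optimizer, train_data_loader,
                              device, epoch, print_freq=50,
                              train_loss=train_loss, train_lr=learning_rate)

        # evaluate on the validation data set
        utils.evaluate(model, val_data_set_loader, device=device, mAP_list=val_mAP)

    # save the weights
    torch.save(model.state_dict(), "./save_weights/pretrain.pth")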

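9. Unfreeze the backbone, but keep its lowest layers (blocks "0" to "3") frozen, then continue training the rest of the network. Because the optimizer in step 7 was built only from the parameters that were trainable at that point, it has to be re-created after this step for the newly unfrozen backbone layers to receive updates:

    for name, parameter in model.backbone.named_parameters():
        split_name = name.split(".")[0]
        if split_name in ["0", "1", "2", "3"]:
            parameter.requires_grad = False
        else:
            parameter.requires_grad = True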
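The selection rule in step 9 depends only on the first component of each parameter name. A small sketch of that rule on its own, using hypothetical parameter names in the style of an indexed nn.Sequential (the real names come from model.backbone.named_parameters(), and the helper `trainable_flags` is illustrative):

```python
def trainable_flags(param_names, frozen_blocks=("0", "1", "2", "3")):
    """Map each parameter name to True (trainable) or False (frozen)."""
    return {name: name.split(".")[0] not in frozen_blocks for name in param_names}


# Hypothetical names; the block index is the part before the first dot.
names = ["0.0.weight", "3.conv.0.0.weight", "4.conv.0.0.weight", "18.1.bias"]
flags = trainable_flags(names)
for name, trainable in flags.items():
    print(name, "->", "trainable" if trainable else "frozen")
```

Comparing the whole first component, rather than using a prefix test such as startswith, matters here: block "18" starts with "1" but must stay trainable.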

10. Define the learning rate scheduler, which multiplies the learning rate by 0.33 every 5 epochs:

    lr_scheduler = torch.optim.lr_scheduler.StepLR(optimizer,
                                                   step_size=5,
                                                   gamma=0.33)
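To see what step_size=5 and gamma=0.33 do to the base learning rate of 0.005 over the 20 epochs that follow, the schedule can be written in closed form. This sketch assumes lr_scheduler.step() is called exactly once per epoch and ignores any warmup applied inside train_one_epoch; the function name `step_lr` is illustrative.

```python
def step_lr(base_lr, step_size, gamma, epoch):
    # Closed form of a step decay: multiply by gamma once every step_size epochs.
    return base_lr * gamma ** (epoch // step_size)


schedule = [step_lr(0.005, 5, 0.33, epoch) for epoch in range(20)]
for epoch, lr in enumerate(schedule):
    print("epoch {:2d}: lr = {:.6g}".format(epoch, lr))
```

The learning rate stays at 0.005 for epochs 0 to 4, drops to 0.00165 for epochs 5 to 9, and is multiplied by 0.33 again at epochs 10 and 15.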

11. With the lowest backbone layers still frozen, train for 20 epochs:

    num_epochs = 20
    for epoch in range(num_epochs):
        # train for one epoch, printing every 50 iterations
        utils.train_one_epoch(model, optimizer, train_data_loader,
                              device, epoch, print_freq=50,
                              train_loss=train_loss, train_lr=learning_rate)

12. Still inside the loop, update the learning rate and evaluate on the validation data set:

        lr_scheduler.step()
        utils.evaluate(model, val_data_set_loader, device=device, mAP_list=val_mAP)

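13. Still inside the loop, save a checkpoint with the model, optimizer, and scheduler state, but only once epoch is greater than 10:

        if epoch > 10:
            save_files = {
                'model': model.state_dict(),
                'optimizer': optimizer.state_dict(),
                'lr_scheduler': lr_scheduler.state_dict(),
                'epoch': epoch}
            torch.save(save_files, "./save_weights/mobile-model-{}.pth".format(epoch))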
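Since epoch counts from 0, the condition epoch > 10 skips the first 11 epochs. A quick way to check which checkpoint files a 20-epoch run would leave behind, using the same file name pattern as step 13:

```python
num_epochs = 20

# Same condition and file name pattern as the checkpoint step above.
saved = ["./save_weights/mobile-model-{}.pth".format(epoch)
         for epoch in range(num_epochs) if epoch > 10]

print(len(saved))
print(saved[0])
print(saved[-1])
```

This yields nine checkpoints, for epochs 11 through 19.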

14. Plot the training loss and learning rate curves:

    if len(train_loss) != 0 and len(learning_rate) != 0:
        from plot_curve import plot_loss_and_lr
        plot_loss_and_lr(train_loss, learning_rate)

15. Plot how the validation mAP changes over the epochs:

    if len(val_mAP) != 0:
        from plot_curve import plot_map
        plot_map(val_mAP)

The complete training script for the ResNet50 + FPN version follows.

    import torch
    import transforms
    from network_files.faster_rcnn_framework import FasterRCNN, FastRCNNPredictor
    from backbone.resnet50_fpn_model import resnet50_fpn_backbone
    from my_dataset import VOC2012DataSet
    from train_utils import train_eval_utils as utils
    import os


    def create_model(num_classes):
        backbone = resnet50_fpn_backbone()
        # build with 91 classes first so that the COCO-pretrained weights fit
        model = FasterRCNN(backbone=backbone, num_classes=91)

        # load the pretrained model weights
        # https://download.pytorch.org/models/fasterrcnn_resnet50_fpn_coco-258fb6c6.pth
        weights_dict = torch.load("./backbone/fasterrcnn_resnet50_fpn_coco.pth")
        missing_keys, unexpected_keys = model.load_state_dict(weights_dict, strict=False)
        if len(missing_keys) != 0 or len(unexpected_keys) != 0:
            print("missing_keys: ", missing_keys)
            print("unexpected_keys: ", unexpected_keys)

        # get number of input features for the classifier
        in_features = model.roi_heads.box_predictor.cls_score.in_features
        # replace the pre-trained head with a new one
        model.roi_heads.box_predictor = FastRCNNPredictor(in_features, num_classes)

        return model

    def main(parser_data):
        device = torch.device(parser_data.device if torch.cuda.is_available() else "cpu")
        print(device)

        data_transform = {
            "train": transforms.Compose([transforms.ToTensor(),
                                         transforms.RandomHorizontalFlip(0.5)]),
            "val": transforms.Compose([transforms.ToTensor()])
        }

        VOC_root = parser_data.data_path
        assert os.path.exists(os.path.join(VOC_root, "VOCdevkit")), "not found VOCdevkit in path:'{}'".format(VOC_root)

        # load train data set
        train_data_set = VOC2012DataSet(VOC_root, data_transform["train"], True)
        # Note: collate_fn is custom here. Each sample contains an image and its
        # targets, so the default method cannot be used to assemble a batch.
        train_data_loader = torch.utils.data.DataLoader(train_data_set,
                                                        batch_size=1,
                                                        shuffle=False,
                                                        num_workers=0,
                                                        collate_fn=utils.collate_fn)

        # load validation data set
        val_data_set = VOC2012DataSet(VOC_root, data_transform["val"], False)
        val_data_set_loader = torch.utils.data.DataLoader(val_data_set,
                                                          batch_size=2,
                                                          shuffle=False,
                                                          num_workers=0,
                                                          collate_fn=utils.collate_fn)

        # create model; num_classes equals background + 20 classes
        model = create_model(num_classes=21)
        # print(model)

        model.to(device)

        # define optimizer
        params = [p for p in model.parameters() if p.requires_grad]
        optimizer = torch.optim.SGD(params, lr=0.005,
                                    momentum=0.9, weight_decay=0.0005)
        # learning rate scheduler
        lr_scheduler = torch.optim.lr_scheduler.StepLR(optimizer,
                                                       step_size=5,
                                                       gamma=0.33)

        # if a checkpoint from a previous run is given, resume training from it
        if parser_data.resume != "":
            checkpoint = torch.load(parser_data.resume)
            model.load_state_dict(checkpoint['model'])
            optimizer.load_state_dict(checkpoint['optimizer'])
            lr_scheduler.load_state_dict(checkpoint['lr_scheduler'])
            parser_data.start_epoch = checkpoint['epoch'] + 1
            print("the training process from epoch{}...".format(parser_data.start_epoch))

        train_loss = []
        learning_rate = []
        val_mAP = []

        for epoch in range(parser_data.start_epoch, parser_data.epochs):
            # train for one epoch, printing every 50 iterations
            utils.train_one_epoch(model, optimizer, train_data_loader,
                                  device, epoch, train_loss=train_loss, train_lr=learning_rate,
                                  print_freq=50, warmup=True)
            # update the learning rate
            lr_scheduler.step()

            # evaluate on the validation data set
            utils.evaluate(model, val_data_set_loader, device=device, mAP_list=val_mAP)

            # save weights into the directory given by --output-dir
            save_files = {
                'model': model.state_dict(),
                'optimizer': optimizer.state_dict(),
                'lr_scheduler': lr_scheduler.state_dict(),
                'epoch': epoch}
            torch.save(save_files,
                       os.path.join(parser_data.output_dir, "resNetFpn-model-{}.pth".format(epoch)))

        # plot loss and lr curve
        if len(train_loss) != 0 and len(learning_rate) != 0:
            from plot_curve import plot_loss_and_lr
            plot_loss_and_lr(train_loss, learning_rate)

        # plot mAP curve
        if len(val_mAP) != 0:
            from plot_curve import plot_map
            plot_map(val_mAP)

        # model.eval()
        # x = [torch.rand(3, 300, 400), torch.rand(3, 400, 400)]
        # predictions = model(x)
        # print(predictions)

    if __name__ == "__main__":
        # The official mixed-precision training used here is only supported from
        # 1.6.0 on, so the PyTorch version must be 1.6.0 or above. Compare integers
        # rather than strings: as a string, "1.10." sorts before "1.6.0".
        version = tuple(int(v) for v in torch.__version__.split("+")[0].split(".")[:2])
        if version < (1, 6):
            raise EnvironmentError("pytorch version must be 1.6.0 or above")

        import argparse

        parser = argparse.ArgumentParser(
            description=__doc__)

        # training device
        parser.add_argument('--device', default='cuda:0', help='device')
        # root directory of the training data set
        parser.add_argument('--data-path', default='./', help='dataset')
        # directory in which to save files
        parser.add_argument('--output-dir', default='./save_weights', help='path where to save')
        # to resume a previous run, give the path of its saved checkpoint
        parser.add_argument('--resume', default='', type=str, help='resume from checkpoint')
        # epoch from which to resume training
        parser.add_argument('--start_epoch', default=0, type=int, help='start epoch')
        # total number of training epochs
        parser.add_argument('--epochs', default=15, type=int, metavar='N',
                            help='number of total epochs to run')

        args = parser.parse_args()
        print(args)

        # check whether the folder for saved weights exists, and create it if not
        if not os.path.exists(args.output_dir):
            os.makedirs(args.output_dir)

        main(args)
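A note on the version check in the `__main__` block: comparing version strings character by character misorders releases such as 1.10.0 relative to 1.6.0, so integer tuples are the safer comparison. The sketch below shows the difference on plain strings. The helper `parse_version` is hypothetical and only handles the leading numeric part of strings such as "1.10.0+cu113".

```python
import re


def parse_version(version):
    """Turn '1.10.0+cu113' into (1, 10, 0); non-numeric suffixes are ignored."""
    parts = []
    for piece in version.split("+")[0].split(".")[:3]:
        match = re.match(r"\d+", piece)
        parts.append(int(match.group()) if match else 0)
    return tuple(parts)


# String comparison: '1' sorts before '6', so 1.10.0 looks older than 1.6.0.
string_says_too_old = "1.10.0"[:5] < "1.6.0"
# Tuple comparison: 10 > 6, so 1.10.0 is correctly treated as newer.
tuple_says_too_old = parse_version("1.10.0") < (1, 6, 0)

print(string_says_too_old, tuple_says_too_old)
```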

https://github.com/WZMIAOMIAO/deep-learning-for-image-processing