Full error message:

-- Process 1 terminated with the following error:

Traceback (most recent call last):

File "/home/lzk/anaconda3/lib/python3.7/site-packages/torch/multiprocessing/spawn.py", line 19, in _wrap

fn(i, *args)

File "/home/lzk/IJCAI2021/GraphWriter-DGL/train.py", line 278, in main

train_loss = train_one_epoch(model, train_dataloader, optimizer, args, epoch, rank, device, writer)

File "/home/lzk/IJCAI2021/GraphWriter-DGL/train.py", line 63, in train_one_epoch

for _i , batch in enumerate(dataloader):

File "/home/lzk/anaconda3/lib/python3.7/site-packages/torch/utils/data/dataloader.py", line 278, in __iter__

return _MultiProcessingDataLoaderIter(self)

File "/home/lzk/anaconda3/lib/python3.7/site-packages/torch/utils/data/dataloader.py", line 682, in __init__

w.start()

File "/home/lzk/anaconda3/lib/python3.7/multiprocessing/process.py", line 112, in start

self._popen = self._Popen(self)

File "/home/lzk/anaconda3/lib/python3.7/multiprocessing/context.py", line 223, in _Popen

return _default_context.get_context().Process._Popen(process_obj)

File "/home/lzk/anaconda3/lib/python3.7/multiprocessing/context.py", line 284, in _Popen

return Popen(process_obj)

File "/home/lzk/anaconda3/lib/python3.7/multiprocessing/popen_spawn_posix.py", line 32, in __init__

super().__init__(process_obj)

File "/home/lzk/anaconda3/lib/python3.7/multiprocessing/popen_fork.py", line 20, in __init__

self._launch(process_obj)

File "/home/lzk/anaconda3/lib/python3.7/multiprocessing/popen_spawn_posix.py", line 47, in _launch

reduction.dump(process_obj, fp)

File "/home/lzk/anaconda3/lib/python3.7/multiprocessing/reduction.py", line 60, in dump

ForkingPickler(file, protocol).dump(obj)

File "/home/lzk/anaconda3/lib/python3.7/site-packages/torch/multiprocessing/reductions.py", line 314, in reduce_storage

metadata = storage._share_filename_()

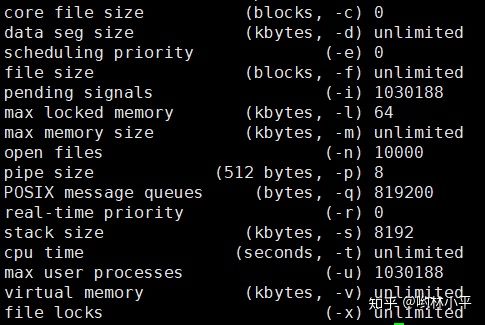

RuntimeError: unable to open shared memory object </torch_30603_1696564530> in read-write mode

Use the command ulimit -a to view the limits that apply to the current user.
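The same limits can also be read from inside the training process, which helps when the job is started by a launcher and the interactive shell's ulimit output may not match. Below is a minimal sketch using Python's standard `resource` module (Unix only). The helper name `describe_limits` and the choice of which two limits to report are my own assumptions, not something from the original script.

```python
import resource

# Two limits that commonly matter for DataLoader worker processes.
# The keys are just display labels; the values are stdlib constants.
LIMITS = {
    "open files (ulimit -n)": resource.RLIMIT_NOFILE,
    "max user processes (ulimit -u)": resource.RLIMIT_NPROC,
}


def _fmt(value):
    """Render a limit value, mapping RLIM_INFINITY to 'unlimited'."""
    return "unlimited" if value == resource.RLIM_INFINITY else str(value)


def describe_limits():
    """Return {label: (soft, hard)} for the limits above, as strings."""
    report = {}
    for label, res in LIMITS.items():
        soft, hard = resource.getrlimit(res)
        report[label] = (_fmt(soft), _fmt(hard))
    return report


if __name__ == "__main__":
    for label, (soft, hard) in describe_limits().items():
        print(f"{label}: soft={soft}, hard={hard}")
```

Calling this at the top of train.py, before the DataLoader is created, shows the limits the worker processes will inherit.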

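My problem occurred in the DataLoader. After reading through a number of blog posts, I found two main solutions:

- Raise the limit on open file descriptors: ulimit -SHn 51200;

- Set num_workers to 0 (but this costs almost as much as it gains: after doing so, each epoch took about twice as long)

- Switching to a smaller dataset also avoids the error, but that likewise treats the symptom rather than the cause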
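The first option can also be approximated from inside the script, so that it does not depend on the shell that launches training. The sketch below uses the standard `resource` module on Linux. The function name `raise_open_file_limit` is hypothetical, and unlike ulimit -SHn it only raises the soft limit, because an unprivileged process cannot raise its own hard limit.

```python
import resource


def raise_open_file_limit(target=51200):
    """Raise the soft RLIMIT_NOFILE towards `target`, capped at the hard limit.

    Returns the (soft, hard) pair in effect after the call.
    """
    soft, hard = resource.getrlimit(resource.RLIMIT_NOFILE)

    # The soft limit may only be raised as far as the hard limit allows.
    if hard == resource.RLIM_INFINITY:
        wanted = target
    else:
        wanted = min(target, hard)

    # Nothing to do if the current soft limit is already high enough.
    if soft == resource.RLIM_INFINITY or soft >= wanted:
        return soft, hard

    resource.setrlimit(resource.RLIMIT_NOFILE, (wanted, hard))
    return resource.getrlimit(resource.RLIMIT_NOFILE)


if __name__ == "__main__":
    soft, hard = raise_open_file_limit(51200)
    print(f"open files: soft={soft}, hard={hard}")
```

Child processes inherit these limits, so the call would need to run before the DataLoader workers are started. If the hard limit itself is below 51200, this sketch cannot go past it and the ulimit command (or a system-level change) is still needed. The second option corresponds to passing num_workers=0 when constructing the DataLoader, which loads batches in the main process and avoids worker processes altogether, at the speed cost noted above.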