最近在看计算机视觉:模型学习与推理这本书,书中讲了几十种算法,原书是使用matlab实现的,本人打算用c++重新实现一遍。书中作者也推荐自己实现一遍代码对于理解问题大有裨益。

本篇博客主要讲使用c++标准库生成正态分布的数据,然后使用最大似然的方法估计学习参数。并提供c++源码。

一维正态分布满足如下公式:

Pr ( x ) = 1 2 π σ 2 exp [ − 0.5 ( x − μ ) 2 / σ 2 ] \operatorname{Pr}(x)=\frac{1}{\sqrt{2 \pi \sigma^{2}}} \exp \left[-0.5(x-\mu)^{2} / \sigma^{2}\right]Pr(x)=2πσ21exp[−0.5(x−μ)2/σ2]

n个数据假设独立分布,则联合分布为:

Pr ( x 1 … I ∣ μ , σ 2 ) = ∏ i = 1 I Pr ( x i ∣ μ , σ 2 ) \operatorname{Pr}\left(x_{1 \ldots I} | \mu, \sigma^{2}\right)=\prod_{i=1}^{I} \operatorname{Pr}\left(x_{i} | \mu, \sigma^{2}\right)Pr(x1…I∣μ,σ2)=i=1∏IPr(xi∣μ,σ2)

= ∏ i = 1 I Norm x i [ μ , σ 2 ] = 1 ( 2 π σ 2 ) I / 2 exp [ − 0.5 ∑ i = 1 I ( x i − μ ) 2 σ 2 ] \begin{array}{l}{=\prod_{i=1}^{I} \operatorname{Norm}_{x_{i}}\left[\mu, \sigma^{2}\right]} \\ {=\frac{1}{\left(2 \pi \sigma^{2}\right)^{I / 2}} \exp \left[-0.5 \sum_{i=1}^{I} \frac{\left(x_{i}-\mu\right)^{2}}{\sigma^{2}}\right]}\end{array}=∏i=1INormxi[μ,σ2]=(2πσ2)I/21exp[−0.5∑i=1Iσ2(xi−μ)2]

进行极大似然估计,就是选择一组参数,使得目前观测的联合概率分布达到最大,那么这组参数就是最符合目前观测数据的。

常规方法是求对数的导数,计算极值:

μ ^ , σ ^ 2 = argmax μ , σ 2 [ ∑ i = 1 I log [ Norm x i [ μ , σ 2 ] ] ] = argmax μ , σ 2 [ − 0.5 I log [ 2 π ] − 0.5 I log σ 2 − 0.5 ∑ i = 1 I ( x i − μ ) 2 σ 2 ] \begin{aligned} \hat{\mu}, \hat{\sigma}^{2} &=\underset{\mu, \sigma^{2}}{\operatorname{argmax}}\left[\sum_{i=1}^{I} \log \left[\operatorname{Norm}_{x_{i}}\left[\mu, \sigma^{2}\right]\right]\right] \\ &=\underset{\mu, \sigma^{2}}{\operatorname{argmax}}\left[-0.5 I \log [2 \pi]-0.5 I \log \sigma^{2}-0.5 \sum_{i=1}^{I} \frac{\left(x_{i}-\mu\right)^{2}}{\sigma^{2}}\right] \end{aligned}μ^,σ^2=μ,σ2argmax[i=1∑Ilog[Normxi[μ,σ2]]]=μ,σ2argmax[−0.5Ilog[2π]−0.5Ilogσ2−0.5i=1∑Iσ2(xi−μ)2]

求极值:

∂ L ∂ μ = ∑ i = 1 I ( x i − μ ) σ 2 = ∑ i = 1 I x i σ 2 − I μ σ 2 = 0 \begin{aligned} \frac{\partial L}{\partial \mu} &=\sum_{i=1}^{I} \frac{\left(x_{i}-\mu\right)}{\sigma^{2}} \\ &=\frac{\sum_{i=1}^{I} x_{i}}{\sigma^{2}}-\frac{I \mu}{\sigma^{2}}=0 \end{aligned}∂μ∂L=i=1∑Iσ2(xi−μ)=σ2∑i=1Ixi−σ2Iμ=0

μ ^ = ∑ i = 1 I x i I \hat{\mu}=\frac{\sum_{i=1}^{I} x_{i}}{I}μ^=I∑i=1Ixi

σ ^ 2 = ∑ i = 1 I ( x i − μ ^ ) 2 I \hat{\sigma}^{2}=\sum_{i=1}^{I} \frac{\left(x_{i}-\hat{\mu}\right)^{2}}{I}σ^2=i=1∑II(xi−μ^)2

实际上我们的最终算法就是这两个公式。算法整体流程如下:

Input : Training data { x i } i = 1 I Output: Maximum likelihood estimates of parameters θ = { μ , σ 2 } begin / / Set mean parameter μ = ∑ i = 1 I x i / I / / Set variance σ 2 = ∑ i = 1 I ( x i − μ ^ ) 2 / I end \begin{array}{l}{\text { Input : Training data }\left\{x_{i}\right\}_{i=1}^{I}} \\ {\text { Output: Maximum likelihood estimates of parameters } \theta=\left\{\mu, \sigma^{2}\right\}} \\ {\text { begin }} \\ {/ / \text { Set mean parameter }} \\ {\mu=\sum_{i=1}^{I} x_{i} / I} \\ {/ / \text { Set variance }} \\ {\qquad \sigma^{2}=\sum_{i=1}^{I}\left(x_{i}-\hat{\mu}\right)^{2} / I} \\ {\text { end }}\end{array} Input : Training data {xi}i=1I Output: Maximum likelihood estimates of parameters θ={μ,σ2} begin // Set mean parameter μ=∑i=1Ixi/I// Set variance σ2=∑i=1I(xi−μ^)2/I end

数据生成的代码如下:

#include <random>

#include <iostream>

#include <vector>

using std::vector;

template <typename T>

vector<T> generate_normal_distribution_data(T mu,T sigma,int number)

{

vector<T> data;

std::random_device rd{};

std::mt19937 gen{ rd() };

std::normal_distribution<> d{ mu,sigma*sigma };

for (int n = 0; n < number; ++n) {

data.push_back(d(gen));

}

return data;

}

学习代码如下:

void learning_normal_distribution_parameters()

{

vector<double> data=generate_normal_distribution_data<double>(0, 1, 100000);

double mu = 0.0;

double sigma = 0.0;

for (int i = 0; i < data.size(); i++)

{

mu += data[i];

}

mu = mu / data.size();

for (int i = 0; i < data.size(); i++)

{

sigma += (data[i] - mu)*(data[i] - mu);

}

sigma = sigma / data.size();

double var = sigma;

cout << "mu: " << mu << endl;

cout << "var: " << var << endl;

}

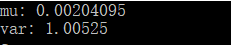

我们在模型的参数设计上,生成均值为0,方差为1的10000个数据点。

学习得到的参数如下: