I previously wrote up how to collect Java logs, but that setup did not use Kafka. This time Kafka sits between Filebeat and Logstash.

Kafka installation

Filebeat + Logstash + Elasticsearch + Kibana installation

Filebeat configuration file:

# cat java-logstash.yaml
filebeat.inputs:
- type: log
  enabled: true
  paths:
    - /usr/local/tomcat_18080/logs/catalina.out
  tail_files: true
  input_type: log   # legacy name for "type"; redundant with the line above
  fields.service: qcban-gw-1
  tags: ["qcban-gw-1"]

output.kafka:
  enabled: true
  hosts: ["172.16.105.20:9092"]
  topic: qcban-gw-log
  partition.round_robin:
    reachable_only: false
  required_acks: 1
  compression: gzip
  max_message_bytes: 1000000

Starting Filebeat:

I already have one Filebeat process on this host collecting nginx logs, so this one is started as an additional instance.

./filebeat -e -c conf/java-logstash.yaml --path.data /usr/local/filebeat-7.13.2-linux-x86_64/java_log/ -d publish

This works the same way as running multiple Logstash instances: create a separate directory and point --path.data at it.
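To make the output.kafka settings a little more concrete, here is a rough Python sketch of the kind of JSON document Filebeat publishes to the topic for one log line, together with the size check implied by max_message_bytes. The timestamp, version string, and sample log line are made up for illustration, and the top-level keys simply mirror the ones that appear in the Logstash remove_field list later on. It approximates the event shape and is not Filebeat's actual encoder.

```python
import json

MAX_MESSAGE_BYTES = 1000000  # same value as max_message_bytes in the config


def build_event(line):
    """Approximate the shape of one Filebeat event (values are illustrative)."""
    return {
        "@timestamp": "2021-07-01T08:00:00.000Z",  # hypothetical timestamp
        "@metadata": {"beat": "filebeat", "type": "_doc", "version": "7.13.2"},
        "message": line,
        "fields": {"service": "qcban-gw-1"},  # from fields.service
        "tags": ["qcban-gw-1"],               # from tags
        "log": {"file": {"path": "/usr/local/tomcat_18080/logs/catalina.out"}},
        "input": {"type": "log"},
    }


def fits_in_kafka_message(event, limit=MAX_MESSAGE_BYTES):
    """Return True if the JSON-encoded event is within the size limit."""
    encoded = json.dumps(event).encode("utf-8")
    return len(encoded) <= limit


event = build_event("INFO Server startup in 1234 ms")  # hypothetical log line
print(json.dumps(event, indent=2))
print("fits:", fits_in_kafka_message(event))
```

As far as I know from the Filebeat Kafka output documentation, events whose encoded size exceeds max_message_bytes are dropped rather than split, so unusually long log lines are worth keeping an eye on.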

Logstash configuration file

# cat qcban_java_log.conf
input {
  kafka {
    bootstrap_servers => "172.16.105.20:9092"
    client_id => "qcban_gw1"
    group_id => "qcban_gw_log"
    auto_offset_reset => "latest"
    consumer_threads => 5
    decorate_events => true
    topics => ["qcban-gw-log"]
    type => "qcban-gw-log"
    codec => json
  }
}

filter {
  if [type] == "qcban-gw-log" {
    mutate {
      remove_field => ["@metadata","log","fields","input","ecs","host","agent","[message][@metadata]","[message][input]","[message][fields]","[message][ecs]","[message][host]","[message][agent]","[message][log]"] # remove fields
    }
  }
}

output {
  stdout {
    codec => rubydebug
  }
  if [type] == 'qcban-gw-log' {
    elasticsearch {
      hosts => ["127.0.0.1:9200"]
      index => "qcban-gw-log-%{+YYYY.MM.dd}"
      template_overwrite => true
    }
  }
}

The codec => json setting is important here. Filebeat publishes each event to Kafka as a JSON document, so without this codec the whole JSON string ends up inside the message field and the output is hard to read.
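The effect of codec => json can be sketched in a few lines of Python. This is only an illustration of the idea, not how Logstash is implemented: the sample payload values are invented, and decode_plain and decode_json are hypothetical helper names standing in for the default codec and the JSON codec.

```python
import json

# A raw Kafka message value roughly as Filebeat would publish it
# (all values here are illustrative).
raw = json.dumps({
    "@timestamp": "2021-07-01T08:00:00.000Z",
    "message": "INFO Server startup in 1234 ms",
    "fields": {"service": "qcban-gw-1"},
    "tags": ["qcban-gw-1"],
})


def decode_plain(value):
    """Without a JSON codec: the whole payload becomes the message string."""
    return {"message": value}


def decode_json(value):
    """With codec => json: the payload is parsed into top-level fields."""
    return json.loads(value)


plain_event = decode_plain(raw)
json_event = decode_json(raw)

print(plain_event["message"])           # the entire JSON document as one string
print(json_event["message"])            # just the original log line
print(json_event["fields"]["service"])  # structured fields are addressable
```

With the JSON codec, the log line and the Filebeat metadata arrive as separate fields, which is what makes filtering and searching in Kibana practical.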

Also, I wrote the remove_field list to match the fields I saw before adding codec => json. It can probably be removed or trimmed now.

Start Logstash
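As a rough picture of what the remove_field list does once events are parsed as JSON, the sketch below drops the same top-level field names from a sample event. The event values are invented, remove_fields is a hypothetical helper, and the nested [message][...] references from the original list are left out because they would no longer match anything in this sample.

```python
import copy

# Top-level names taken from the remove_field list in the Logstash config.
REMOVE = ["@metadata", "log", "fields", "input", "ecs", "host", "agent"]


def remove_fields(event, names):
    """Return a copy of the event without the named top-level fields."""
    trimmed = copy.deepcopy(event)
    for name in names:
        trimmed.pop(name, None)  # fields that are absent are simply skipped
    return trimmed


# Illustrative event; real Filebeat events carry more metadata.
event = {
    "@timestamp": "2021-07-01T08:00:00.000Z",
    "message": "INFO Server startup in 1234 ms",
    "tags": ["qcban-gw-1"],
    "fields": {"service": "qcban-gw-1"},
    "agent": {"type": "filebeat"},
    "host": {"name": "example-host"},
}

trimmed = remove_fields(event, REMOVE)
print(sorted(trimmed))
```

Note that this list also removes fields, which holds fields.service from the Filebeat config, so only tags still identifies the service afterwards. That may be a reason to trim the list.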

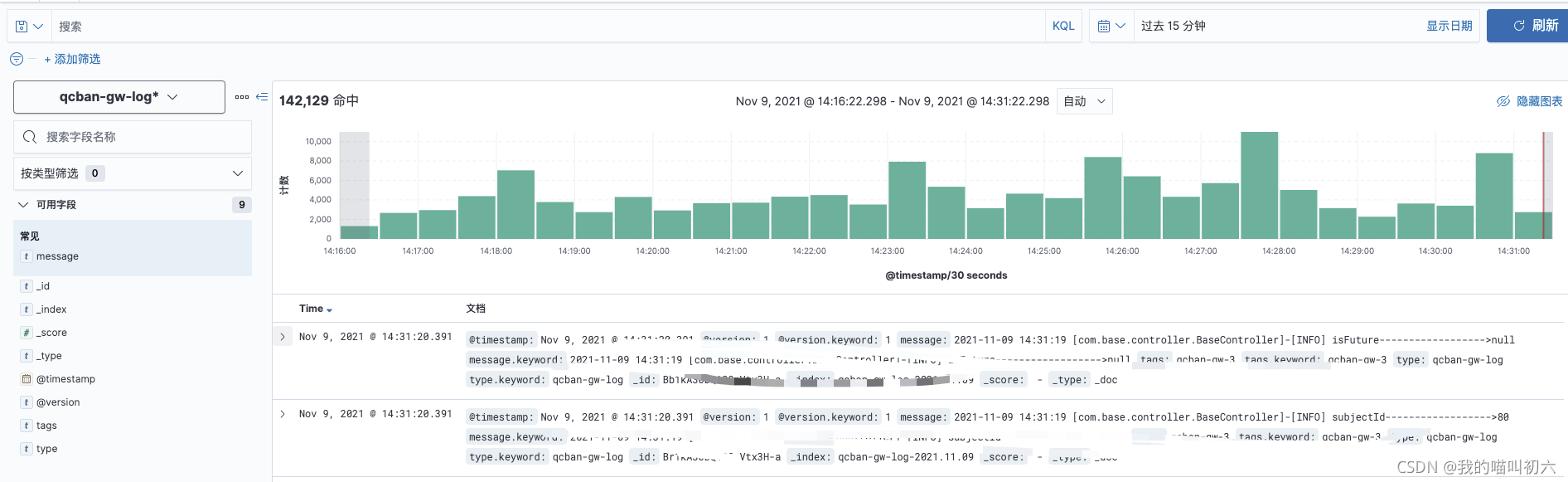

nohup bin/logstash -f config/qcban_java_log.conf --path.data=/data/qcban_log_work/ > /data/nohup_logs/qcban_logstash.log &

Then add the index pattern in Kibana and view the logs.
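When adding the index in Kibana it helps to know which index names to expect. The index setting qcban-gw-log-%{+YYYY.MM.dd} produces one index per day, and Logstash formats that date from the event's @timestamp in UTC. The Python sketch below mimics the naming only; daily_index is a hypothetical helper and the sample timestamp is invented.

```python
from datetime import datetime, timedelta, timezone


def daily_index(timestamp, prefix="qcban-gw-log"):
    """Return the daily index name for a timezone-aware event timestamp."""
    utc = timestamp.astimezone(timezone.utc)  # the date is taken in UTC
    return f"{prefix}-{utc:%Y.%m.%d}"


# Hypothetical event logged at 07:30 on 2021-07-01 in UTC+8.
local = datetime(2021, 7, 1, 7, 30, tzinfo=timezone(timedelta(hours=8)))
print(daily_index(local))  # prints qcban-gw-log-2021.06.30
```

Because of the UTC conversion, events logged in the early morning local time can land in the previous day's index. A wildcard index pattern such as qcban-gw-log-* (an assumed name, chosen to match the prefix above) would cover all of the daily indices.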

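Copyright notice: this is an original article by weixin_38367535, licensed under CC 4.0 BY-SA. When reposting, please include a link to the original source and this notice.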