机械臂手眼标定主要是为了获取机械臂与相机之间的相对位姿关系。本文主要利用opencv中的calibrateHandEye()函数进行标定。

calibrateHandEye()函数

#每个输入为多帧图像对应的变换矩阵的list->[array1,array2,...]

R_cam2gripper,T_cam2gripper = calibrateHandEye(R_gripper2base,T_gripper2base,R_target2camera,T_target2camera):

注:从机械臂中读取到的机械臂末端位姿即Ts_end_to_base

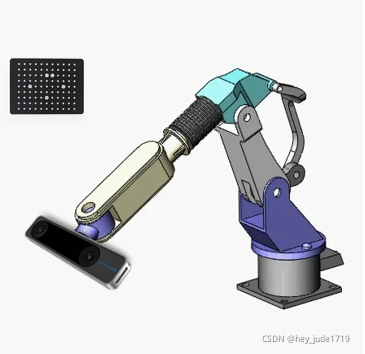

眼在手上

即相机被固定在机械臂末端,标定需要得到相机坐标系到机械臂末端坐标系的变换矩阵。

对于眼在手上的情况,calibrateHandEye()的输入输出参数为:

"""

R_cam2gripper,T_cam2gripper = calibrateHandEye(R_gripper2base,T_gripper2base,R_target2camera,T_target2camera):

R_gripper2base : R_end_to_base

T_gripper2base : T_end_to_base

R_target2camera : R_board_to_camera

T_target2camera : T_board_to_camera

R_cam2gripper : R_camera_to_end

T_cam2gripper : T_camera_to_end

"""

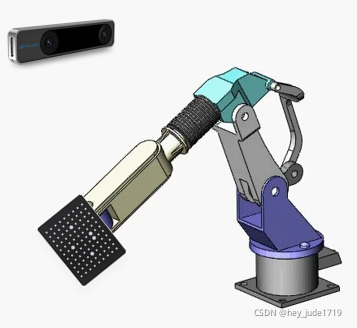

眼在手外

即相机被固定在世界坐标系中某个位置,标定需要得到相机坐标系到机械臂基座坐标系的变换矩阵。

对于眼在手上的情况,calibrateHandEye()的输入输出参数为:

"""

R_cam2gripper,T_cam2gripper = calibrateHandEye(R_gripper2base,T_gripper2base,R_target2camera,T_target2camera):

R_gripper2base : R_base_to_hand

T_gripper2base : T_base_to_hand

R_target2camera : R_board_to_camera

T_target2camera : T_board_to_camera

R_cam2gripper : R_camera_to_base

T_cam2gripper : T_camera_to_base

"""

代码实现(以眼在手外为例)

marker_detect.py

检测标定板特征点像素坐标,生成对应标定板3D坐标,并计算Ts_board_to_camera。

import numpy as np

import time

import cv2

import cv2.aruco as aruco

# 设置相机参数

mtx = np.array([

[916.28, 0, 650.7],

[0, 916.088, 352.941],

[0, 0, 1],

])

dist = np.array([0, 0, 0, 0, 0])

# 欧拉角转换为旋转矩阵

def eulerAnglesToRotationMatrix(theta):

R_x = np.array([[1,0,0],

[0,np.cos(theta[0]),-np.sin(theta[0])],

[0,np.sin(theta[0]),np.cos(theta[0])]])

R_y = np.array([[np.cos(theta[1]),0,np.sin(theta[2])],

#[0,-1,0],

[0, 1, 0],

[-np.sin(theta[1]),0,np.cos(theta[1])]])

R_z = np.array([[np.cos(theta[2]),-np.sin(theta[2]),0],

[np.sin(theta[2]),np.cos(theta[2]),0],

[0, 0,1]])

#return np.dot(np.dot(R_z,R_y),R_x)

# combined rotation matrix

R = np.dot(R_z, R_y.dot(R_x))

return R

# 标定板特征点检测 input:image

# 将检测得到的特征点按照maker的id大小排序

def aruco_detect(frame):

gray = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)

aruco_dict = aruco.Dictionary_get(aruco.DICT_6X6_250)

parameters = aruco.DetectorParameters_create()

'''

detectMarkers(...)

detectMarkers(image, dictionary[, corners[, ids[, parameters[, rejectedI

mgPoints]]]]) -> corners, ids, rejectedImgPoints

'''

# lists of ids and the corners beloning to each id

corners, ids, _ = aruco.detectMarkers(gray,

aruco_dict,

parameters=parameters)

ids = ids.flatten()

#将标定板上的标志按id排序,保证与3D点一一对应

corners_sort = np.zeros((len(corners)*4,2),np.float16)

ids_sort_idx = np.argsort(ids, -1)#xiao->da

for i, idx in enumerate(ids_sort_idx):

corner = corners[idx][0]

#print(corner[0][0], corner[0][1])

corners_sort[i * 4, 0] = corner[0][0]

corners_sort[i * 4, 1] = corner[0][1]

corners_sort[i * 4+1, 0] = corner[1][0]

corners_sort[i * 4+1, 1] = corner[1][1]

corners_sort[i * 4+2, 0] = corner[2][0]

corners_sort[i * 4+2, 1] = corner[2][1]

corners_sort[i * 4+3, 0] = corner[3][0]

corners_sort[i * 4+3, 1] = corner[3][1]

# 绘制特征点

# for i,c in enumerate(corners_sort):

# print(c)

# cv2.putText(frame, str(i+1), (int(c[0]), int(c[1])), cv2.FONT_HERSHEY_SIMPLEX, 1, (0, 255, 0),

# 1, cv2.LINE_AA)

#

# cv2.imshow("frame", frame)

# cv2.waitKey(0)

# key = cv2.waitKey(1)

return corners_sort

# 生成标定板3D坐标(marker从左到右,从上到下的顺序,每个marker左上角-右上角-右下角-左下角的顺序生成)

def generate_3D_point(grid_size,distance,size = (7,5)):

point_n = size[0]*size[1]*4

point_3d = np.zeros((point_n,3),np.float16)

#print(point_3d.shape)

for i in range(point_n):

lu_idx = int(np.floor(i/4))*4

#print(lu_idx)

if i%4==0:

point_3d[i,0] = np.floor(i/4)%5*(grid_size+distance)

point_3d[i,1] = np.floor(i/(4*size[1]))*(grid_size+distance)

if i%4==1:

point_3d[i,0] = point_3d[lu_idx,0]+grid_size

point_3d[i,1] = point_3d[lu_idx,1]

if i%4==2:

point_3d[i, 0] = point_3d[lu_idx, 0] + grid_size

point_3d[i, 1] = point_3d[lu_idx, 1] + grid_size

if i%4==3:

point_3d[i, 0] = point_3d[lu_idx, 0]

point_3d[i, 1] = point_3d[lu_idx, 1] + grid_size

return point_3d

#grid_size:每个marker矩形的长度

#distance:marker之间的距离长度

#size:标定板marker的行数,列数 eg:(7,5)

def camera_calibrate(grid_size,distance,frame,size = (7,5)):

w,h,c = frame.shape

#生成标定板的3D point

point_3d = generate_3D_point(grid_size, distance, size)

#检测图片中标定板的2D point

corners_2d = aruco_detect(frame)

#转为统一数据类型

point_3d = [point_3d.astype('float32')]

corners_2d = [corners_2d.astype('float32')]

# 利用3D和2D对应点计算变换矩阵

(success, rotation_vector, translation_v) = cv2.solvePnP(np.array(point_3d), np.array(corners_2d), mtx, dist,flags=cv2.SOLVEPNP_ITERATIVE)

rotation_v = cv2.Rodrigues(rotation_vector)[0]

return rotation_v,translation_v

# 反投影误差

def calculate_rejection_error(corners_2d,point_3d,rotation_v,translation_v,camera_matrix,distortion_coefficient):

total_error = 0

for i in range(len(point_3d[0])):

imgpoints2, _ = cv2.projectPoints(point_3d[0][i], np.array(rotation_v), np.array(translation_v), camera_matrix,

distortion_coefficient)

imgpoints2 = imgpoints2.flatten()

# cv2.circle(frame, (int(imgpoints2[0]), int(imgpoints2[1])), 2, (255, 0, 0), 2)

# cv2.circle(frame, (int(corners_2d[0][i, 0]), int(corners_2d[0][i, 1])), 2, (0, 0, 255), 2)

error = cv2.norm(corners_2d[0][i, :], imgpoints2, cv2.NORM_L2)

total_error += error

#print("total error: ", total_error / len(point_3d))

return total_error

if __name__ == '__main__':

frame = cv2.imread("aruco.jpg", -1)

rvec,tvec = camera_calibrate(10,1,frame)

calibrate.py

利用多帧的Ts_board_to_camera,Ts_hand_to_base标定得到Ts_camera_to_end

import numpy as np

import os

import cv2

import glob

import math

from marker_detect import camera_calibrate,aruco_detect,generate_3D_point,eulerAnglesToRotationMatrix

from quaternions import Quaternion as Quaternion

from scipy.spatial.transform import Rotation as R

#设置标定板尺寸信息 单位(mm)

grid_size = 12.6

offset = 1.4

# 设置相机参数

# 设置相机参数

mtx = np.array([

[916.28, 0, 650.7],

[0, 916.088, 352.941],

[0, 0, 1],

])

dist = np.array([0, 0, 0, 0, 0])

# created by Leo Ma at ZJU, 2021.10.05

def get_Ts_board_in_camera(img_name):

img = cv2.imread(img_name)

if img is None:

print("img read error!")

return -1

w,h,c = img.shape

newcameramtx, roi = cv2.getOptimalNewCameraMatrix(mtx, dist, (w, h), 0, (w, h)) # 自由比例参数

img = cv2.undistort(img, mtx, dist, None, newcameramtx)

R_board_in_camera,T_board_in_camera = camera_calibrate(grid_size,offset,img)

Ts_board_in_camera = np.zeros((4,4),np.float)

Ts_board_in_camera[:3,:3] = R_board_in_camera

Ts_board_in_camera[:3,3] = np.array(T_board_in_camera).flatten()

Ts_board_in_camera[3,3] = 1

return Ts_board_in_camera

def get_Ts_hand_in_base(file):

if not os.path.exists(file):

print("file not exist!")

return -1

with open(file,"r") as f:

line = f.readline()

Ts = line.split(" ")

Ts = [float(i) for i in Ts]

#R_hand_in_base= eulerAnglesToRotationMatrix(np.array(Ts[3:]))

R_hand_in_base= R.from_euler('xyz',np.array(Ts[3:]),degrees=True).as_matrix()

T_hand_in_base = Ts[:3]

# R T拼接

Ts_hand_in_base = np.zeros((4, 4), np.float)

Ts_hand_in_base[:3, :3] = R_hand_in_base

Ts_hand_in_base[:3, 3] = np.array(T_hand_in_base).flatten()

Ts_hand_in_base[3, 3] = 1

return Ts_hand_in_base

# 根据计算和读取到的Ts_board_to_camera,Ts_hand_to_base,利用calibrateHandEye标定得到变换矩阵

def calibrate_opencv(Ts_board_to_camera,Ts_hand_to_base):

n = len(Ts_hand_to_base)

R_base_to_hand = []

T_base_to_hand = []

R_board_to_camera = []

T_board_to_camera = []

R_hand_to_base = []

T_hand_to_base = []

R_camera_to_board = []

T_camera_to_board = []

for i in range(n):

Ts_base_to_hand = np.linalg.inv(Ts_hand_to_base[i])

R_base_to_hand.append(np.array(Ts_base_to_hand[:3,:3]))

T_base_to_hand.append(np.array(Ts_base_to_hand[:3,3]))

R_board_to_camera.append(np.array(Ts_board_to_camera[i][:3,:3]))

T_board_to_camera.append(np.array(Ts_board_to_camera[i][:3,3]))

print("R_base_to_hand:\n",R_base_to_hand)

print("T_base_to_hand:\n",T_base_to_hand)

print("R_board_to_camera:\n", R_board_to_camera)

print("T_board_to_camera:\n", T_board_to_camera)

"""

R_cam2gripper,T_cam2gripper = calibrateHandEye(R_gripper2base,T_gripper2base,R_target2camera,T_target2camera):

R_gripper2base : R_base_to_hand

T_gripper2base : T_base_to_hand

R_target2camera : R_board_to_camera

T_target2camera : T_board_to_camera

R_cam2gripper : R_camera_to_base

T_cam2gripper : T_camera_to_base

"""

R_camera_to_base,T_camera_to_base = cv2.calibrateHandEye(R_base_to_hand,T_base_to_hand,R_board_to_camera,T_board_to_camera,method=cv2.CALIB_HAND_EYE_TSAI)

#验证

# Ts_camera_to_base = np.zeros((4, 4), np.float)

# Ts_camera_to_base[:3, :3] = R_camera_to_base

# Ts_camera_to_base[:3, 3] = np.array(T_camera_to_base).flatten()

# Ts_camera_to_base[3, 3] = 1

# print("-----------------验证-------------------")

# print(np.dot(Ts_board_to_camera[0],np.dot(Ts_camera_to_base,np.linalg.inv(Ts_hand_to_base[0]))))

# print(np.dot(Ts_board_to_camera[1], np.dot(Ts_camera_to_base, np.linalg.inv(Ts_hand_to_base[1]))))

return R_camera_to_base,T_camera_to_base

# 生成Ts_board_to_camera,Ts_hand_to_base,调用calibrate_opencv

def calibrate(path):

imgs_name = glob.glob(os.path.join(path,"*-Color.png"))

files_name = glob.glob(os.path.join(path,"*.txt"))

n_files = len(imgs_name)

Ts_hand_in_base_all = []

Ts_board_in_camera_all = []

for i in range(n_files):

file_name = str(i+1)+".txt"

img_name = str(i+1)+"-Color.png"

Ts_board_in_camera_all.append(get_Ts_board_in_camera(os.path.join(path,img_name)))

Ts_hand_in_base_all.append(get_Ts_hand_in_base(os.path.join(path,file_name)))

R_camera_to_base,T_camera_to_base = calibrate_opencv(Ts_board_in_camera_all,Ts_hand_in_base_all)

return R_camera_to_base,T_camera_to_base

if __name__ == '__main__':

#图片所在路径

path = '..\\data\\'

R_camera_to_base,T_camera_to_base = calibrate(path)

print(T_camera_to_base)

Ts_camera_to_base = np.vstack((np.hstack((R_camera_to_base,T_camera_to_base)),np.array([0,0,0,1])))

#存储标定结果矩阵

np.savetxt("calibration.txt", Ts_camera_to_base)

版权声明:本文为qq_40016998原创文章,遵循CC 4.0 BY-SA版权协议,转载请附上原文出处链接和本声明。