Docker

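Document link: https://pan.baidu.com/s/1kKkXuW5uOehC3E2iylrwGA (extraction code: wy47)

Google Chrome is recommended, because its built-in translation makes the English documentation easier to read.

Official Docker documentation: https://docs.docker.com

Docker Hub (the Docker community's registry for finding and sharing container images): https://hub.docker.com/search?q=&type=image

In the docs, choose the download and installation section, then choose the system you want to install on.

This document uses Linux, specifically CentOS 7.

After choosing Linux, pick your distribution under "Installation per distro" in the left-hand menu.

You can either follow the official documentation or follow this document to install and use Docker.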

I. Installation and Startup

1. Uninstall old versions

yum remove docker \
    docker-client \
    docker-client-latest \
    docker-common \
    docker-latest \
    docker-latest-logrotate \
    docker-logrotate \
    docker-engine

2. Install the required packages

yum install -y yum-utils

(On my machine this step reported the package as already installed, because I had installed it before.)

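3. Set up the repository (use one of the two commands below)

yum-config-manager \
    --add-repo \
    https://download.docker.com/linux/centos/docker-ce.repo  # official default, hosted outside China

yum-config-manager \
    --add-repo \
    http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo  # Alibaba Cloud mirror (recommended)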

![](https://img-blog.csdnimg.cn/20200805201213707.png?x-oss-process=image/watermark,type_ZmFuZ3poZW5naGVpdGk,shadow_10,text_aHR0cHM6Ly9ibG9nLmNzZG4ubmV0L05ld19TYW50YQ==,size_16,color_FFFFFF,t_70)

4. Update the yum package index

yum makecache fast

5. Install Docker

yum install docker-ce docker-ce-cli containerd.io

# Install Docker (ce = Community Edition, ee = Enterprise Edition)
[root@localhost /]# yum install docker-ce docker-ce-cli containerd.io
Loaded plugins: fastestmirror, langpacks
Loading mirror speeds from cached hostfile
 * base: mirrors.aliyun.com
 * extras: mirrors.aliyun.com
 * updates: mirrors.aliyun.com
Package 3:docker-ce-19.03.12-3.el7.x86_64 already installed and latest version
Package 1:docker-ce-cli-19.03.12-3.el7.x86_64 already installed and latest version
Package containerd.io-1.2.13-3.2.el7.x86_64 already installed and latest version
Nothing to do

This output appears only because I had already installed Docker.

To install a specific version instead (the latest version is installed by default), use:

yum install docker-ce-<VERSION_STRING> docker-ce-cli-<VERSION_STRING> containerd.io

The format of the version string is explained in the official documentation just above this command; the available versions can be listed with yum list docker-ce --showduplicates | sort -r.
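The official docs build the version string from the second column of `yum list docker-ce --showduplicates`, starting after the first colon (the epoch, such as `3:`) and ending at the first hyphen. A minimal sketch of that extraction, assuming the three-column `yum list` layout shown in the comment; both function names are hypothetical helpers, not part of yum or Docker:

```shell
#!/usr/bin/env bash
# Hypothetical helpers (not part of yum or Docker).
# Input: one line of `yum list docker-ce --showduplicates` output, e.g.
#   docker-ce.x86_64  3:18.09.1-3.el7  docker-ce-stable

# Print the VERSION_STRING: column 2, after the epoch and up to the first hyphen.
version_string_from_yum_line() {
  local ver
  ver=$(awk '{print $2}' <<<"$1")  # 3:18.09.1-3.el7
  ver=${ver#*:}                    # drop the epoch       -> 18.09.1-3.el7
  ver=${ver%%-*}                   # cut at first hyphen  -> 18.09.1
  printf '%s\n' "$ver"
}

# Print the package arguments for `yum install` at that version.
install_args_for_version() {
  printf 'docker-ce-%s docker-ce-cli-%s containerd.io\n' "$1" "$1"
}
```

For example, `yum install -y $(install_args_for_version 18.09.1)` leaves the substitution unquoted on purpose, so the shell splits it into the three package arguments.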

6. Start Docker

systemctl start docker

# Check that Docker was installed successfully

docker version

# Show the Docker version

docker -v

7. Verify that Docker Engine is installed correctly by running the hello-world image

[root@localhost docker]# docker run hello-world

Unable to find image 'hello-world:latest' locally

latest: Pulling from library/hello-world

0e03bdcc26d7: Pull complete

Digest: sha256:49a1c8800c94df04e9658809b006fd8a686cab8028d33cfba2cc049724254202

Status: Downloaded newer image for hello-world:latest

Hello from Docker!

This message shows that your installation appears to be working correctly.

To generate this message, Docker took the following steps:

 1. The Docker client contacted the Docker daemon.
 2. The Docker daemon pulled the "hello-world" image from the Docker Hub.
    (amd64)
 3. The Docker daemon created a new container from that image which runs the
    executable that produces the output you are currently reading.
 4. The Docker daemon streamed that output to the Docker client, which sent it
    to your terminal.

To try something more ambitious, you can run an Ubuntu container with:

$ docker run -it ubuntu bash

Share images, automate workflows, and more with a free Docker ID:

https://hub.docker.com/

For more examples and ideas, visit:

https://docs.docker.com/get-started/

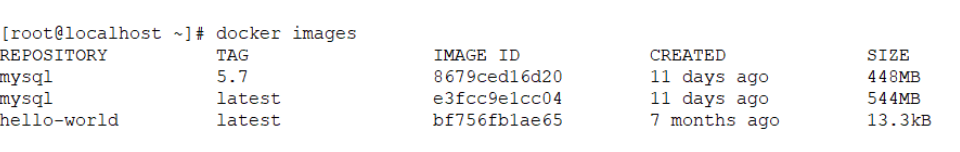

8. List the existing images

docker images

REPOSITORY TAG IMAGE ID CREATED

hello-world latest bf756fb1ae65 7 months ago

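9. For reference: uninstall Docker

# 1. Remove the packages

yum remove docker-ce docker-ce-cli containerd.io

# 2. Delete the data (images, containers, volumes)

rm -rf /var/lib/docker

# /var/lib/docker is Docker's default working directory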

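II. Configure the Alibaba Cloud Registry Mirror

The address below is the author's own accelerator; Alibaba Cloud gives each account its own accelerator address, so substitute yours.

sudo mkdir -p /etc/docker
sudo tee /etc/docker/daemon.json <<-'EOF'
{
  "registry-mirrors": ["https://x2gdib5d.mirror.aliyuncs.com"]
}
EOF
sudo systemctl daemon-reload
sudo systemctl restart docker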
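The heredoc above replaces /etc/docker/daemon.json with a single setting. To try the file contents somewhere harmless first, here is a minimal sketch with the target path as a parameter; `write_daemon_json` is a hypothetical helper name, and the mirror URL you pass it is a placeholder for your own accelerator address. Like the original command, it overwrites any existing daemon.json instead of merging into it.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write a daemon.json containing one registry mirror.
# $1 = target file (on a real host: /etc/docker/daemon.json, which needs root)
# $2 = mirror URL (placeholder; use the accelerator address of your own account)
# Note: the file is overwritten, so any other settings in it are lost.
write_daemon_json() {
  local path="$1" mirror="$2"
  mkdir -p "$(dirname "$path")"
  cat >"$path" <<EOF
{
  "registry-mirrors": ["$mirror"]
}
EOF
}
```

After writing the real file, run `systemctl daemon-reload` and `systemctl restart docker` as above; `docker info` then lists the configured mirrors under "Registry Mirrors".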

III. Starting and Stopping Docker

systemctl is the system and service manager command.

Start Docker:

systemctl start docker

Stop Docker:

systemctl stop docker

Restart Docker:

systemctl restart docker

Check Docker's status:

systemctl status docker

Start Docker at boot:

systemctl enable docker

Show Docker summary information:

docker info

Show Docker help:

docker --help

Reference documentation: https://docs.docker.com/reference/

IV. Common Docker Commands

Image commands

1. List all images on the local host

docker images

[root@localhost ~]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

hello-world latest bf756fb1ae65 7 months ago 13.3kB

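# Explanation

REPOSITORY  image name

TAG  image tag

IMAGE ID  image ID

CREATED  when the image was created (not when you pulled it)

SIZE  image size

These images are stored on the Docker host under /var/lib/docker.

# Options (see docker images --help)

-a, --all    # list all images (by default intermediate images are hidden)

-q, --quiet  # show only image IDs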
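`-q` is what makes commands such as `docker rmi -f $(docker images -aq)` work: it prints only the IMAGE ID column, one ID per line, and the shell passes those IDs on as arguments. A minimal sketch of the same extraction from the table output, assuming the default column layout (REPOSITORY and TAG contain no spaces, so IMAGE ID is always the third field); `image_ids_from_listing` is a hypothetical name, and in practice you would simply use `-q`:

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `docker images` table output on stdin and print
# the IMAGE ID column, i.e. roughly what `docker images -q` prints.
image_ids_from_listing() {
  # Skip the header row (NR > 1) and blank lines; field 3 is IMAGE ID.
  awk 'NR > 1 && NF > 0 { print $3 }'
}
```

Usage: `docker images | image_ids_from_listing`.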

2. Search for images

docker search

[root@localhost ~]# docker search mysql

NAME DESCRIPTION STARS OFFICIAL AUTOMATED

mysql MySQL is a widely used, open-source relation… 9793 [OK]

mariadb MariaDB is a community-developed fork of MyS… 3574

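# Explanation

NAME  repository name

DESCRIPTION  image description

STARS  number of stars, reflecting how popular the image is

OFFICIAL  whether it is an official image

AUTOMATED  automated build: the image was created by Docker Hub's automated build process

# Options (see docker search --help)

--filter filter

e.g.:

--filter=STARS=5000  # filter the search results: only images with at least 5000 stars are listed

[root@localhost ~]# docker search mysql --filter=STARS=5000

NAME DESCRIPTION STARS OFFICIAL AUTOMATED

mysql MySQL is a widely used, open-source relation… 9793 [OK]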

3. Pull images

docker pull

# Pull an image: docker pull IMAGE_NAME[:tag]

[root@localhost ~]# docker pull mysql

Using default tag: latest  # if no tag is given, latest is used

latest: Pulling from library/mysql

6ec8c9369e08: Pull complete  # layers are downloaded one by one; layering via a union file system is the core of Docker images

177e5de89054: Pull complete

ab6ccb86eb40: Pull complete

e1ee78841235: Pull complete

09cd86ccee56: Pull complete

78bea0594a44: Pull complete

caf5f529ae89: Pull complete

cf0fc09f046d: Pull complete

4ccd5b05a8f6: Pull complete

76d29d8de5d4: Pull complete

8077a91f5d16: Pull complete

922753e827ec: Pull complete

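Digest: sha256:fb6a6a26111ba75f9e8487db639bc5721d4431beba4cd668a4e922b8f8b14acc  # content digest, used to verify that the image has not been tampered with

Status: Downloaded newer image for mysql:latest

docker.io/library/mysql:latest  # the fully qualified image name

# These two commands are equivalent:

docker pull mysql

docker pull docker.io/library/mysql:latest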
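The short and long forms are equivalent because Docker fills in defaults: a missing tag becomes `latest`, a name without a registry goes to `docker.io`, and an official image without a namespace goes under `library/`. A simplified sketch of those rules, assuming no digests (`@sha256:...`) and treating a first path component that contains `.` or `:`, or equals `localhost`, as a registry host; `normalize_image_ref` is a hypothetical helper, not Docker's actual parser:

```shell
#!/usr/bin/env bash
# Hypothetical helper: expand a short image reference to its full form.
# Simplified: digests (@sha256:...) and name validation are not handled.
normalize_image_ref() {
  local ref="$1" name tag first last
  last="${ref##*/}"                 # last path component, e.g. mysql:5.7
  if [[ "$last" == *:* ]]; then     # a ':' here is a tag, not a registry port
    tag="${last##*:}"
    name="${ref%:*}"
  else
    tag="latest"                    # "Using default tag: latest"
    name="$ref"
  fi
  first="${name%%/*}"
  if [[ "$name" != */* ]]; then
    name="docker.io/library/$name"  # official image: mysql -> library/mysql
  elif [[ "$first" != *.* && "$first" != *:* && "$first" != localhost ]]; then
    name="docker.io/$name"          # user/repo on Docker Hub
  fi
  printf '%s:%s\n' "$name" "$tag"
}
```

For example, `normalize_image_ref mysql` prints `docker.io/library/mysql:latest`, the "real address" shown in the pull output.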

# Pull a specific version

[root@localhost ~]# docker pull mysql:5.7

5.7: Pulling from library/mysql

6ec8c9369e08: Already exists

177e5de89054: Already exists

ab6ccb86eb40: Already exists

e1ee78841235: Already exists

09cd86ccee56: Already exists

78bea0594a44: Already exists

caf5f529ae89: Already exists

4e54a8bcf566: Pull complete

50c21ba6527b: Pull complete

68e74bb27b39: Pull complete

5f13eadfe747: Pull complete

Digest: sha256:97869b42772dac5b767f4e4692434fbd5e6b86bcb8695d4feafb52b59fe9ae24

Status: Downloaded newer image for mysql:5.7

docker.io/library/mysql:5.7
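Layers marked `Already exists` were not downloaded again: Docker identifies layers by content hashes (the 12-character IDs in the output are shortened forms), so `mysql:5.7` only needed the four layers that `mysql:latest` had not already provided. A minimal sketch of that set difference, using the layer IDs from the two pulls above; `layers_to_pull` is a hypothetical helper, and the real client works with full digests from the image manifest:

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the layers an image needs that are not stored locally.
# $1 = file with the layer IDs already present, one per line
# $2 = file with the layer IDs the image needs, one per line
layers_to_pull() {
  # -v: keep non-matching lines, -x: whole-line match,
  # -F: fixed strings, -f: read the patterns from a file
  grep -vxF -f "$1" "$2"
}
```

With an empty "present" file every layer is printed, which matches a first pull where each layer shows `Pull complete`.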

4. Remove images

docker rmi

# Remove an image by its ID

docker rmi IMAGE_ID

or

docker rmi -f IMAGE_ID

e.g.:

[root@localhost ~]# docker rmi 8679ced16d20

Untagged: mysql:5.7

Untagged: mysql@sha256:97869b42772dac5b767f4e4692434fbd5e6b86bcb8695d4feafb52b59fe9ae24

Deleted: sha256:8679ced16d206961b35686895b06cfafefde87ef02b518dfc2133081ebf47cda

Deleted: sha256:355f87dc5125a32cc35898a4dde17fb067585bc0d86704b5a467c0ccc0eea484

Deleted: sha256:8299d5c38042216210125535adb2600e46268a0e2b9ec799d12ea5b770236e79

Deleted: sha256:07311a303b2c7cf2ac6992aaf68e12326fe7255985166939cbab7d18b10e0f47

Deleted: sha256:306c9bc1ce2997d000bb6f1ea4108420d9752df93ce39164b7a2f876b954afc4

[root@localhost ~]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

mysql latest e3fcc9e1cc04 11 days ago 544MB

hello-world latest bf756fb1ae65 7 months ago 13.3kB

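# Removal commands

docker rmi -f IMAGE_ID  # remove the specified image

docker rmi -f IMAGE_ID IMAGE_ID IMAGE_ID IMAGE_ID  # remove several images

docker rmi -f $(docker images -aq)  # remove all images

Container commands

Note: we need an image before we can create a container, so pull a CentOS image to experiment with.

docker pull centos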

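1. Create and start a container

docker run [OPTIONS] IMAGE

# Options

--name="Name"  container name, e.g. tomcat1, tomcat2, used to tell containers apart

-d  run in the background (detached)

-it  run interactively and open a terminal inside the container

-p (lowercase)  publish a container port, e.g. -p 8080:8080

    -p ip:hostPort:containerPort

    -p hostPort:containerPort  (most common)

    -p containerPort

-P (uppercase)  publish the exposed ports to randomly chosen host ports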
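The three `-p` forms above differ only in how many colon-separated fields they have. A minimal sketch of how each form reads; `describe_publish` is a hypothetical helper that handles only IPv4 addresses and single port numbers (no ranges, no `/udp` suffix). "all" stands for listening on every host address, and "random" for a host port that Docker picks:

```shell
#!/usr/bin/env bash
# Hypothetical helper: explain a -p value in the three forms listed above.
# Handles IPv4 and single ports only; ranges and /udp suffixes are not parsed.
describe_publish() {
  local spec="$1" a b c
  IFS=: read -r a b c <<<"$spec"
  if [[ -n "$c" ]]; then       # ip:hostPort:containerPort
    printf 'ip=%s host=%s container=%s\n' "$a" "$b" "$c"
  elif [[ -n "$b" ]]; then     # hostPort:containerPort
    printf 'ip=all host=%s container=%s\n' "$a" "$b"
  else                         # containerPort only
    printf 'ip=all host=random container=%s\n' "$a"
  fi
}
```

For example, `describe_publish 3344:80` prints `ip=all host=3344 container=80`, the mapping used for the nginx01 exercise.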

# Test: start a container and enter it

docker run -it --name=CONTAINER_NAME IMAGE_NAME:TAG /bin/bash  # create a container interactively

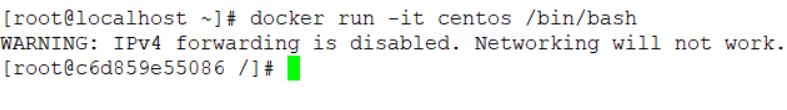

[root@localhost ~]# docker run -it centos /bin/bash

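When I started the container, I got the following warning:

WARNING: IPv4 forwarding is disabled. Networking will not work.

This means the host cannot connect to the containers.

Fix:

vi /etc/sysctl.conf

net.ipv4.ip_forward=1  # add this line

# Restart the network service and Docker

systemctl restart network && systemctl restart docker

# Check that the change took effect (a value of 1 means success)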

[root@docker-node2 ~]# sysctl net.ipv4.ip_forward

net.ipv4.ip_forward = 1
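Editing the file by hand works, but it is easy to end up with duplicate entries when the step is repeated. A minimal sketch that sets the line idempotently (it rewrites an existing `net.ipv4.ip_forward` entry instead of appending another); `ensure_ip_forward` is a hypothetical helper, it assumes GNU `sed -i` as on CentOS, and the file path is a parameter so it can be tried on a copy first. Running `sysctl -p` afterwards reloads /etc/sysctl.conf and applies the value immediately.

```shell
#!/usr/bin/env bash
# Hypothetical helper: make sure a sysctl file enables IPv4 forwarding.
# $1 = sysctl file (defaults to /etc/sysctl.conf; editing that one needs root)
ensure_ip_forward() {
  local conf="${1:-/etc/sysctl.conf}"
  local pattern='^[[:space:]]*net\.ipv4\.ip_forward[[:space:]]*='
  if grep -Eqs "$pattern" "$conf"; then
    # Rewrite the existing (uncommented) entry in place, whatever its value was.
    sed -i -E "s/${pattern}.*/net.ipv4.ip_forward=1/" "$conf"
  else
    # No entry yet (or no file): append one.
    echo 'net.ipv4.ip_forward=1' >>"$conf"
  fi
}
```

As root: `ensure_ip_forward && sysctl -p`.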

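# The container now starts normally

[root@localhost ~]# docker run -it centos /bin/bash

[root@f9727fa537a4 /]#

# Keep testing

[root@538b7de76fc5 /]# ls  # list the container's root; the CentOS base image is minimal, so many commands are missing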

bin dev etc home lib lib64 lost+found media mnt opt proc root run sbin srv sys tmp usr var

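2. Return from the container to the host

(1) exit: stop the container and leave it

(2) Ctrl + P + Q: leave the container without stopping it

# exit stops the container and leaves it

[root@538b7de76fc5 /]# exit

exit

[root@localhost ~]#

# Ctrl + P + Q leaves the container without stopping it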

[root@localhost ~]# docker run -it centos /bin/bash

[root@d5da10017431 /]# [root@localhost ~]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS

d5da10017431 centos "/bin/bash" 31 seconds ago Up 30 seconds

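3. List running containers

docker ps

# docker ps options

# (no option)  list the containers that are currently running

-a    # list running containers plus containers that ran previously

-n=?  # show the n most recently created containers

-q    # show only container IDs

# List all running containers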

[root@localhost ~]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

# List containers that ran previously as well

[root@localhost ~]# docker ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

538b7de76fc5 centos "/bin/bash" 11 minutes ago Exited (130) 5 minutes ago unruffled_nash

f9727fa537a4 centos "/bin/bash" 18 minutes ago Exited (127) 12 minutes ago charming_fermi

4c7dced39dee centos "/bin/bash" 23 minutes ago Exited (127) 23 minutes ago sad_swirles

c135f7f0035b centos "/bin/bash" 24 minutes ago Exited (1) 23 minutes ago suspicious_lehmann

c6d859e55086 centos "/bin/bash" 49 minutes ago Exited (130) 25 minutes ago bold_leavitt

fd897408a819 centos "/bin/bash" 50 minutes ago Exited (130) 49 minutes ago dazzling_hamilton

c4f5c13879b7 bf756fb1ae65 "/hello" 6 hours ago Exited (0) 6 hours ago pedantic_chatelet

# Show the 2 most recently created containers

[root@localhost ~]# docker ps -a -n=2

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

538b7de76fc5 centos "/bin/bash" 21 minutes ago Exited (130) 15 minutes ago unruffled_nash

f9727fa537a4 centos "/bin/bash" 28 minutes ago Exited (127) 22 minutes ago charming_fermi

# Show only the container IDs

[root@localhost ~]# docker ps -aq

538b7de76fc5

f9727fa537a4

4c7dced39dee

c135f7f0035b

c6d859e55086

fd897408a819

c4f5c13879b7

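4. Remove containers

docker rm CONTAINER_ID  # remove the specified container; a running container cannot be removed unless you force it with rm -f

docker rm -f $(docker ps -aq)  # remove all containers

docker ps -a -q | xargs docker rm  # remove all stopped containers (running ones are refused without -f)

5. Start and stop containers

docker start CONTAINER_ID    # start a container

docker restart CONTAINER_ID  # restart a container

docker stop CONTAINER_ID     # stop a container

docker kill CONTAINER_ID     # force-stop a container

6. Start a container in the background

docker run -d IMAGE_NAME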

[root@localhost ~]# docker run -d centos /bin/bash

df2f98023927ae83d3da95e83c7941ff83f6a4b7a73ccee8b5b42e3dd11b9c25

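# Problem: docker ps shows that the centos container has already stopped

# A common pitfall: a container running in the background must have a foreground process. A container lives only as long as its main process; here /bin/bash has no terminal attached, exits at once, and Docker stops the container

# The same goes for nginx: if the container starts and finds it has no service to run, it stops immediately, because no program is running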
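The `while true` loop used in the logs example below is one way to keep a foreground process alive. A slightly more careful version is sketched here. On `docker stop`, Docker sends SIGTERM first and SIGKILL after a timeout (10 seconds by default), and a process running as PID 1 inside a container does not get the default "terminate" action for SIGTERM, so without a trap it only dies when the timeout expires. `keepalive` is a hypothetical function name; calling it in your own interactive shell would replace that shell's TERM/INT traps.

```shell
#!/usr/bin/env bash
# Hypothetical keep-alive loop for a container's main process.
# Prints a heartbeat every $1 seconds (default 1) and exits cleanly on SIGTERM/SIGINT.
keepalive() {
  local interval="${1:-1}"
  # Without a handler, PID 1 in a container ignores SIGTERM and docker stop has to time out.
  trap 'echo "stopping"; exit 0' TERM INT
  while true; do
    echo "alive $(date +%s)"
    # Sleep in the background and wait for it, so the trap runs as soon as a signal arrives.
    sleep "$interval" &
    wait $!
  done
}
```

Saved as keepalive.sh, it could be run with something like `docker run -d -v "$PWD/keepalive.sh:/keepalive.sh" centos bash -c 'source /keepalive.sh && keepalive 1'`; `docker stop` should then return almost immediately.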

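7. View logs

docker logs

docker logs -f -t --tail [number] CONTAINER  # the container above printed nothing, so there are no logs yet

# Write a small shell loop that produces some log output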

[root@localhost ~]# docker run -d centos /bin/sh -c "while true;do echo Santa;sleep 1;done"

e9cc823b5a6732a77159e7fb8ce56e97baa01d7a4cc81e927ad4beb20c62d34d

# Check

[root@localhost ~]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

e9cc823b5a67 centos "/bin/sh -c 'while t…" 28 seconds ago Up 27 seconds brave_liskov

# View the logs

[root@localhost ~]# docker logs -ft --tail 10 e9cc823b5a67

2020-08-03T09:26:01.342380023Z Santa

2020-08-03T09:26:02.345681136Z Santa

2020-08-03T09:26:03.350124251Z Santa

2020-08-03T09:26:04.353270896Z Santa

2020-08-03T09:26:05.356494066Z Santa

2020-08-03T09:26:06.359486117Z Santa

2020-08-03T09:26:07.361925694Z Santa

2020-08-03T09:26:08.364800919Z Santa

2020-08-03T09:26:09.367819068Z Santa

2020-08-03T09:26:10.370290437Z Santa

2020-08-03T09:26:11.372604642Z Santa

2020-08-03T09:26:12.374797002Z Santa

2020-08-03T09:26:13.377226865Z Santa

2020-08-03T09:26:14.379389029Z Santa

2020-08-03T09:26:15.382546465Z Santa

2020-08-03T09:26:16.386634389Z Santa

2020-08-03T09:26:17.390531223Z Santa

2020-08-03T09:26:18.393130983Z Santa

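# Log options

-ft     # -t shows timestamps, -f follows new output

--tail  # number of log lines to show

[root@localhost ~]# docker logs -ft --tail 10 e9cc823b5a67

8. View the processes inside a container

docker top CONTAINER_ID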

[root@localhost ~]# docker top e9cc823b5a67

UID PID PPID C STIME

root 14589 14573 0 02:24

root 15180 14589 0 02:31 /usr/bin/sleep 1

9. View a container's metadata

docker inspect CONTAINER_ID

[root@localhost ~]# docker inspect e9cc823b5a67

[

{

"Id": "e9cc823b5a6732a77159e7fb8ce56e97baa01d7a4cc81e927ad4beb20c62d34d",

"Created": "2020-08-03T09:24:12.320064937Z",

"Path": "/bin/sh",

"Args": [

"-c",

"while true;do echo Santa;sleep 1;done"

],

"State": {

"Status": "running",

"Running": true,

"Paused": false,

"Restarting": false,

"OOMKilled": false,

"Dead": false,

"Pid": 14589,

"ExitCode": 0,

"Error": "",

"StartedAt": "2020-08-03T09:24:12.992021922Z",

"FinishedAt": "0001-01-01T00:00:00Z"

},

"Image": "sha256:831691599b88ad6cc2a4abbd0e89661a121aff14cfa289ad840fd3946f274f1f",

"ResolvConfPath": "/var/lib/docker/containers/e9cc823b5a6732a77159e7fb8ce56e97baa01d7a4cc81e927ad4beb20c62d34d/resolv.conf",

"HostnamePath": "/var/lib/docker/containers/e9cc823b5a6732a77159e7fb8ce56e97baa01d7a4cc81e927ad4beb20c62d34d/hostname",

"HostsPath": "/var/lib/docker/containers/e9cc823b5a6732a77159e7fb8ce56e97baa01d7a4cc81e927ad4beb20c62d34d/hosts",

"LogPath": "/var/lib/docker/containers/e9cc823b5a6732a77159e7fb8ce56e97baa01d7a4cc81e927ad4beb20c62d34d/e9cc823b5a6732a77159e7fb8ce56e97baa01d7a4cc81e927ad4beb20c62d34d-json.log",

"Name": "/brave_liskov",

"RestartCount": 0,

"Driver": "overlay2",

"Platform": "linux",

"MountLabel": "",

"ProcessLabel": "",

"AppArmorProfile": "",

"ExecIDs": null,

"HostConfig": {

"Binds": null,

"ContainerIDFile": "",

"LogConfig": {

"Type": "json-file",

"Config": {}

},

"NetworkMode": "default",

"PortBindings": {},

"RestartPolicy": {

"Name": "no",

"MaximumRetryCount": 0

},

"AutoRemove": false,

"VolumeDriver": "",

"VolumesFrom": null,

"CapAdd": null,

"CapDrop": null,

"Capabilities": null,

"Dns": [],

"DnsOptions": [],

"DnsSearch": [],

"ExtraHosts": null,

"GroupAdd": null,

"IpcMode": "private",

"Cgroup": "",

"Links": null,

"OomScoreAdj": 0,

"PidMode": "",

"Privileged": false,

"PublishAllPorts": false,

"ReadonlyRootfs": false,

"SecurityOpt": null,

"UTSMode": "",

"UsernsMode": "",

"ShmSize": 67108864,

"Runtime": "runc",

"ConsoleSize": [

0,

0

],

"Isolation": "",

"CpuShares": 0,

"Memory": 0,

"NanoCpus": 0,

"CgroupParent": "",

"BlkioWeight": 0,

"BlkioWeightDevice": [],

"BlkioDeviceReadBps": null,

"BlkioDeviceWriteBps": null,

"BlkioDeviceReadIOps": null,

"BlkioDeviceWriteIOps": null,

"CpuPeriod": 0,

"CpuQuota": 0,

"CpuRealtimePeriod": 0,

"CpuRealtimeRuntime": 0,

"CpusetCpus": "",

"CpusetMems": "",

"Devices": [],

"DeviceCgroupRules": null,

"DeviceRequests": null,

"KernelMemory": 0,

"KernelMemoryTCP": 0,

"MemoryReservation": 0,

"MemorySwap": 0,

"MemorySwappiness": null,

"OomKillDisable": false,

"PidsLimit": null,

"Ulimits": null,

"CpuCount": 0,

"CpuPercent": 0,

"IOMaximumIOps": 0,

"IOMaximumBandwidth": 0,

"MaskedPaths": [

"/proc/asound",

"/proc/acpi",

"/proc/kcore",

"/proc/keys",

"/proc/latency_stats",

"/proc/timer_list",

"/proc/timer_stats",

"/proc/sched_debug",

"/proc/scsi",

"/sys/firmware"

],

"ReadonlyPaths": [

"/proc/bus",

"/proc/fs",

"/proc/irq",

"/proc/sys",

"/proc/sysrq-trigger"

]

},

"GraphDriver": {

"Data": {

"LowerDir": "/var/lib/docker/overlay2/d4989853f64e6777170d2869c5cd3e4965a682c7ce2b6ed507606b062565f9ca-init/diff:/var/lib/docker/overlay2/cd44800523819748f37817e96637d68527ec4fe830c9f266cda590d09b0e1d81/diff",

"MergedDir": "/var/lib/docker/overlay2/d4989853f64e6777170d2869c5cd3e4965a682c7ce2b6ed507606b062565f9ca/merged",

"UpperDir": "/var/lib/docker/overlay2/d4989853f64e6777170d2869c5cd3e4965a682c7ce2b6ed507606b062565f9ca/diff",

"WorkDir": "/var/lib/docker/overlay2/d4989853f64e6777170d2869c5cd3e4965a682c7ce2b6ed507606b062565f9ca/work"

},

"Name": "overlay2"

},

"Mounts": [],

"Config": {

"Hostname": "e9cc823b5a67",

"Domainname": "",

"User": "",

"AttachStdin": false,

"AttachStdout": false,

"AttachStderr": false,

"Tty": false,

"OpenStdin": false,

"StdinOnce": false,

"Env": [

"PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin"

],

"Cmd": [

"/bin/sh",

"-c",

"while true;do echo Santa;sleep 1;done"

],

"Image": "centos",

"Volumes": null,

"WorkingDir": "",

"Entrypoint": null,

"OnBuild": null,

"Labels": {

"org.label-schema.build-date": "20200611",

"org.label-schema.license": "GPLv2",

"org.label-schema.name": "CentOS Base Image",

"org.label-schema.schema-version": "1.0",

"org.label-schema.vendor": "CentOS"

}

},

"NetworkSettings": {

"Bridge": "",

"SandboxID": "d8ad3e61ad53115924881aad65c08bc0364971af3eff1ce5737f04a1d84f262f",

"HairpinMode": false,

"LinkLocalIPv6Address": "",

"LinkLocalIPv6PrefixLen": 0,

"Ports": {},

"SandboxKey": "/var/run/docker/netns/d8ad3e61ad53",

"SecondaryIPAddresses": null,

"SecondaryIPv6Addresses": null,

"EndpointID": "4fdce70b1e5dcf2f22185f56864cd3ac24988ef95e1bfd5ba145a17ac4761367",

"Gateway": "172.17.0.1",

"GlobalIPv6Address": "",

"GlobalIPv6PrefixLen": 0,

"IPAddress": "172.17.0.2",

"IPPrefixLen": 16,

"IPv6Gateway": "",

"MacAddress": "02:42:ac:11:00:02",

"Networks": {

"bridge": {

"IPAMConfig": null,

"Links": null,

"Aliases": null,

"NetworkID": "7a2a106803348b0f0f6ee579b784b4be92d5f06fb04af61536a013c623c20f06",

"EndpointID": "4fdce70b1e5dcf2f22185f56864cd3ac24988ef95e1bfd5ba145a17ac4761367",

"Gateway": "172.17.0.1",

"IPAddress": "172.17.0.2",

"IPPrefixLen": 16,

"IPv6Gateway": "",

"GlobalIPv6Address": "",

"GlobalIPv6PrefixLen": 0,

"MacAddress": "02:42:ac:11:00:02",

"DriverOpts": null

}

}

}

}

]

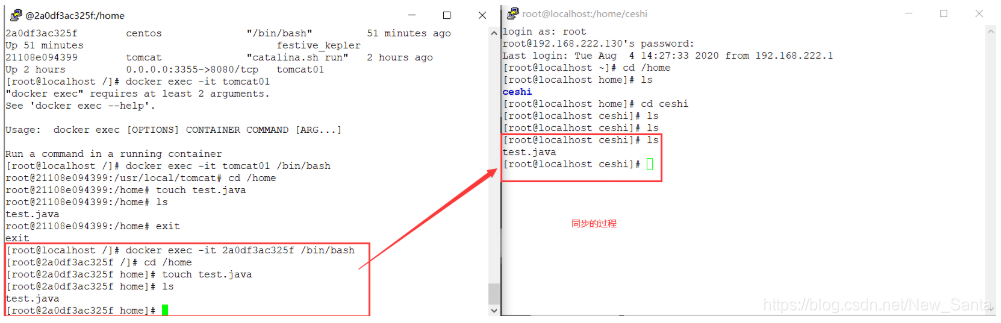

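10. Enter a running container

(1) docker exec -it CONTAINER_ID /bin/bash

(2) docker attach CONTAINER_ID

# Our containers usually run in the background, and we often need to enter one to change some configuration

# Command

docker exec -it CONTAINER_ID /bin/bash

# Test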

[root@localhost ~]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

e9cc823b5a67 centos "/bin/sh -c 'while t…" 16 minutes ago Up 16 minutes brave_liskov

# A program name that does not exist inside the container fails:

[root@localhost ~]# docker exec -it e9cc823b5a67 bashShell

OCI runtime exec failed: exec failed: container_linux.go:349: starting container process caused "exec: \"bashShell\": executable file not found in $PATH": unknown

[root@localhost ~]# docker exec -it e9cc823b5a67 /bin/bash

[root@e9cc823b5a67 /]# ls

bin etc lib lost+found mnt proc run srv tmp var

dev home lib64 media opt root sbin sys usr

[root@e9cc823b5a67 /]# ps -ef

UID PID PPID C STIME TTY TIME CMD

root 1 0 0 09:24 ? 00:00:00 /bin/sh -c while true;do echo Santa;sleep 1;

root 1126 0 0 09:42 pts/0 00:00:00 /bin/bash

root 1166 1 0 09:43 ? 00:00:00 /usr/bin/coreutils --coreutils-prog-shebang=

root 1167 1126 0 09:43 pts/0 00:00:00 ps -ef

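# Method 2

[root@localhost ~]# docker attach e9cc823b5a67

(the output of the command that is already running scrolls by...)

# docker exec    # opens a new terminal in the container, where you can work (commonly used)

# docker attach  # attaches to the terminal the container is already running; no new terminal is started!

11. Copy files from a container to the host

docker cp CONTAINER_ID:PATH_IN_CONTAINER HOST_DEST_PATH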

# List the current directory on the host

[root@localhost home]# ls

santa.java wh

[root@localhost home]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

e9cc823b5a67 centos "/bin/sh -c 'while t…" 2 hours ago Up 2 hours brave_liskov

# Enter the Docker container

[root@localhost home]# docker exec -it e9cc823b5a67 /bin/bash

[root@e9cc823b5a67 /]# cd /home

[root@e9cc823b5a67 home]# ls

# Create a file inside the container

[root@e9cc823b5a67 home]# touch test.java

[root@e9cc823b5a67 home]# ls

test.java

[root@e9cc823b5a67 home]# exit

exit

# Copy the file from the Docker container to the host

[root@localhost home]# docker cp e9cc823b5a67:/home/test.java /home

[root@localhost home]# ls

santa.java test.java wh

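# Copying is a manual process; later we will use -v volumes to synchronize files automatically

V. Exercises

1. Install Nginx with Docker

# 1. Search for the image (searching on Docker Hub is recommended, since the image page includes its documentation)

docker search nginx

# 2. Pull the image

docker pull nginx

# 3. Run and test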

[root@localhost home]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

nginx latest 8cf1bfb43ff5 12 days ago 132MB

centos latest 831691599b88 6 weeks ago 215MB

# -d  run in the background

# --name  give the container a name

# -p  hostPort:containerPort

[root@localhost home]# docker run -d --name=nginx01 -p 3344:80 nginx

1d7e0f857ebf

[root@localhost home]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

1d7e0f857ebf nginx "/docker-entrypoint.…" 7 minutes ago Up 7 minutes 0.0.0.0:3344->80/tcp nginx01

e9cc823b5a67 centos "/bin/sh -c 'while t…" 3 hours ago Up 3 hours brave_liskov

# 4. Check from the host that nginx is reachable

[root@localhost home]# curl localhost:3344

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

# 5. Enter the container

[root@localhost home]# docker exec -it nginx01 /bin/bash

root@1d7e0f857ebf:/# whereis nginx

nginx: /usr/sbin/nginx /usr/lib/nginx /etc/nginx /usr/share/nginx

root@1d7e0f857ebf:/# cd /etc/nginx

root@1d7e0f857ebf:/etc/nginx# ls

conf.d koi-utf mime.types nginx.conf uwsgi_params

fastcgi_params koi-win modules scgi_params win-utf

root@1d7e0f857ebf:/etc/nginx#

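2. Install Tomcat with Docker

# Official usage

docker run -it --rm tomcat:9.0

# Our earlier containers ran in the background and could still be listed after they stopped; docker run -it --rm is generally used for testing, and the container is deleted automatically as soon as it exits

# Pull first, then start

docker pull tomcat

# Start it

docker run -d -p 3355:8080 --name=tomcat01 tomcat

# Accessing it works fine

# Enter the container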

[root@localhost docker]# docker exec -it b03ce995a321 /bin/bash

root@b03ce995a321:/usr/local/tomcat# ls

BUILDING.txt NOTICE RUNNING.txt lib temp work

CONTRIBUTING.md README.md bin logs webapps

LICENSE RELEASE-NOTES conf native-jni-lib webapps.dist

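# Problems found: 1. many Linux commands are missing; 2. webapps is empty. This comes from the image itself: it is a minimal image with everything unnecessary stripped out, and the default web apps are kept in webapps.dist

# This keeps the smallest environment that still runs

# Deploy a project ourselves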

[root@localhost docker]# docker exec -it tomcat01 /bin/bash

root@b03ce995a321:/usr/local/tomcat# ls

BUILDING.txt NOTICE RUNNING.txt lib temp work

CONTRIBUTING.md README.md bin logs webapps

LICENSE RELEASE-NOTES conf native-jni-lib webapps.dist

root@b03ce995a321:/usr/local/tomcat# cd webapps.dist

root@b03ce995a321:/usr/local/tomcat/webapps.dist# ls

ROOT docs examples host-manager manager

root@b03ce995a321:/usr/local/tomcat/webapps.dist# cd ..

root@b03ce995a321:/usr/local/tomcat# cp -r webapps.dist/* webapps

root@b03ce995a321:/usr/local/tomcat# cd webapps

root@b03ce995a321:/usr/local/tomcat/webapps# ls

ROOT docs examples host-manager manager

root@b03ce995a321:/usr/local/tomcat/webapps#

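3. Install ES + Kibana with Docker

# ES exposes many ports

# ES uses a lot of memory

# ES data usually needs to be mounted to a safe directory on the host!

# --net somenetwork  network configuration

# Start Elasticsearch

docker run -d --name elasticsearch -p 9200:9200 -p 9300:9300 -e "discovery.type=single-node" elasticsearch:7.6.2

# After it starts, Linux becomes very sluggish

# Test whether ES is running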

[root@localhost /]# curl localhost:9200

{

"name" : "8e675e6c90e2",

"cluster_name" : "docker-cluster",

"cluster_uuid" : "cz8No8q1QtqcOnceLQHMYw",

"version" : {

"number" : "7.6.2",

"build_flavor" : "default",

"build_type" : "docker",

"build_hash" : "ef48eb35cf30adf4db14086e8aabd07ef6fb113f",

"build_date" : "2020-03-26T06:34:37.794943Z",

"build_snapshot" : false,

"lucene_version" : "8.4.0",

"minimum_wire_compatibility_version" : "6.8.0",

"minimum_index_compatibility_version" : "6.0.0-beta1"

},

"tagline" : "You Know, for Search"

}

# docker stats shows resource usage (CPU, memory, and more)

CONTAINER ID NAME CPU % MEM USAGE / LIMIT MEM % NET I/O BLOCK I/O PIDS

8e675e6c90e2 elasticsearch 0.42% 1.233GiB / 1.753GiB 70.32% 1.18kB / 942B 0B / 0B 47

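# Stop ES right away

# After stopping it, limit its memory by passing the settings as environment variables with -e

docker run -d --name elasticsearch02 -p 9200:9200 -p 9300:9300 -e "discovery.type=single-node" -e ES_JAVA_OPTS="-Xms64m -Xmx512m" elasticsearch:7.6.2

# Start a new Elasticsearch with a JVM heap of at least 64 MB and at most 512 MB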

[root@localhost /]# docker run -d --name elasticsearch02 -p 9200:9200 -p 9300:9300 -e "discovery.type=single-node" -e ES_JAVA_OPTS="-Xms64m -Xmx512m" elasticsearch:7.6.2

6e9bb2cb5daa4e31c0d81d79d85b0218abc3caeb4a7ab29f7a54d0e8004b8edf

# Check the usage again with docker stats

[root@localhost /]# docker stats 6e9bb2cb5daa4e31c0d81d79d85b0218abc3caeb4a7ab29f7a54d0e8004b8edf

CONTAINER ID NAME CPU % MEM USAGE / LIMIT MEM % NET I/O BLOCK I/O PIDS

6e9bb2cb5daa elasticsearch02 2.04% 384.5MiB / 1.753GiB 21.42% 656B / 0B 0B / 0B 45

# Test ES again

[root@localhost /]# curl localhost:9200

{

"name" : "6e9bb2cb5daa",

"cluster_name" : "docker-cluster",

"cluster_uuid" : "tFmZqnmMRHqgEHGHQ90VTg",

"version" : {

"number" : "7.6.2",

"build_flavor" : "default",

"build_type" : "docker",

"build_hash" : "ef48eb35cf30adf4db14086e8aabd07ef6fb113f",

"build_date" : "2020-03-26T06:34:37.794943Z",

"build_snapshot" : false,

"lucene_version" : "8.4.0",

"minimum_wire_compatibility_version" : "6.8.0",

"minimum_index_compatibility_version" : "6.0.0-beta1"

},

"tagline" : "You Know, for Search"

}

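VI. Committing Images

docker commit: commit a container as a new image

# The command works much like git

docker commit -m="commit message" -a="author" CONTAINER_ID TARGET_IMAGE_NAME[:TAG]

Hands-on test

# Start a default tomcat

# This tomcat has no web apps because of how the image is built: in the official image the webapps directory is empty by default

# I copied the basic files in myself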

root@21108e094399:/usr/local/tomcat# cp -r webapps.dist/* webapps

root@21108e094399:/usr/local/tomcat# cd webapps

root@21108e094399:/usr/local/tomcat/webapps# ls

ROOT docs examples host-manager manager

# Finally, commit the modified container as a new image! From now on we can use our own modified image

[root@localhost /]# docker commit -a="wuhao" -m="add webapps app" 21108e094399 tomcat02:1.0

sha256:8d36b6b2eb333b797657f9e0071724a96778689e9f7c15cd834312040d5d07ad

[root@localhost /]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

tomcat02 1.0 8d36b6b2eb33 23 seconds ago 652MB

tomcat latest 9a9ad4f631f8 6 days ago 647MB

mysql latest e3fcc9e1cc04 12 days ago 544MB

elasticsearch 7.6.2 f29a1ee41030 4 months ago 791MB

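VII. Container Data Volumes

What are container data volumes?

Containers need a way to share data: data produced inside a Docker container is synchronized to the host.

This is the volume technique (you can also think of it as mounting a directory: a directory inside the container is mounted onto the Linux host).

Summary: volumes give containers persistence and synchronization! Containers can also share data with each other!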

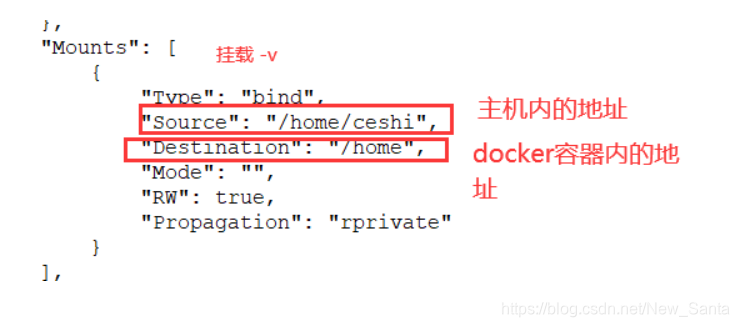

1. Using data volumes

Method 1: mount directly with the -v option

docker run -it -v HOST_DIR:CONTAINER_DIR

# Test

[root@localhost /]# docker run -it -v /home/ceshi:/home centos /bin/bash

Unable to find image 'centos:latest' locally

latest: Pulling from library/centos

6910e5a164f7: Pull complete

Digest: sha256:4062bbdd1bb0801b0aa38e0f83dece70fb7a5e9bce223423a68de2d8b784b43b

Status: Downloaded newer image for centos:latest

# Once the container is running, check the mount with docker inspect CONTAINER_ID

[root@localhost /]# docker inspect 2a0df3ac325f

"Mounts": [

{

"Type": "bind",

"Source": "/home/ceshi",

"Destination": "/home",

"Mode": "",

"RW": true,

"Propagation": "rprivate"

}

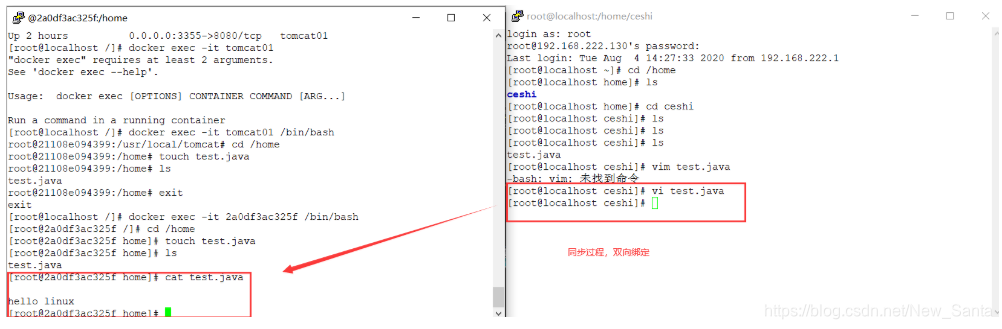

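Testing file synchronization (two-way binding)

Test again:

1. Stop the container

2. Modify the file on the host

3. Start the container

4. The data inside the container is still in sync

Hands-on: installing MySQL

Question: how do we persist MySQL's data?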

# Get the image

[root@localhost /]# docker pull mysql:5.7

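# Run the container with data mounts. Note: starting MySQL requires setting a root password

# Official example: docker run --name some-mysql -e MYSQL_ROOT_PASSWORD=my-secret-pw -d mysql:tag

# Start our own

-d  run in the background

-p  port mapping

-v  volume mount

-e  environment variable

--name  container name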

[root@localhost /]# docker run -d -p 3310:3306 -v /home/mysql/conf:/etc/mysql/conf.d -v /home/mysql/data:/var/lib/mysql -e MYSQL_ROOT_PASSWORD=123456 --name=mysql02 mysql:5.7

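# Once it has started, test the connection with SQLyog on your local machine

# Connect SQLyog to port 3310 on the server; 3310 is mapped to 3306 inside the container, so the connection succeeds

# Create a database named test from the local client and check whether the mapping works (a new test directory appears)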

[root@localhost /]# cd home

[root@localhost home]# ls

ceshi mysql

[root@localhost home]# cd mysql

[root@localhost mysql]# cd data

[root@localhost data]# ls

auto.cnf client-key.pem ib_logfile1 private_key.pem sys

ca-key.pem ib_buffer_pool ibtmp1 public_key.pem

ca.pem ibdata1 mysql server-cert.pem

client-cert.pem ib_logfile0 performance_schema server-key.pem

[root@localhost data]# ls

auto.cnf client-key.pem ib_logfile1 private_key.pem sys

ca-key.pem ib_buffer_pool ibtmp1 public_key.pem test

ca.pem ibdata1 mysql server-cert.pem

client-cert.pem ib_logfile0 performance_schema server-key.pem

# Suppose we delete the container

[root@localhost home]# docker rm -f mysql02

mysql02

[root@localhost home]# docker ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

2a0df3ac325f centos "/bin/bash" 2 hours ago Up 2 hours festive_kepler

21108e094399 tomcat "catalina.sh run" 3 hours ago Up 3 hours 0.0.0.0:3355->8080/tcp tomcat01

6e9bb2cb5daa elasticsearch:7.6.2 "/usr/local/bin/dock…" 5 hours ago Exited (143) 3 hours ago elasticsearch02

db08101b3d31 mysql "docker-entrypoint.s…" 5 hours ago Exited (0) 5 hours ago mysql01

# The data volume mounted on the host is still intact: this is how container data persistence is achieved

[root@localhost home]# cd mysql/data

[root@localhost data]# ls

auto.cnf ca.pem client-key.pem ibdata1 ib_logfile1 mysql private_key.pem server-cert.pem sys

ca-key.pem client-cert.pem ib_buffer_pool ib_logfile0 ibtmp1 performance_schema public_key.pem server-key.pem test

Named and anonymous mounts

# Anonymous mount

-v CONTAINER_PATH

docker run -d -P --name=nginx01 -v /etc/nginx nginx

# List all volumes

[root@localhost home]# docker volume ls

DRIVER VOLUME NAME

local 93281dfc1e95e7175875d8282f14f26236ee8a5eadcc8a88ffaaa6fa30a4e66e

local 3275472bde7776fda7d76cebd4283b0a081241145a73cf5c7573a01e34f81290

# These are anonymous mounts: -v was given only the path inside the container, not a path outside it

# Named mount

[root@localhost home]# docker run -d -P --name nginx02 -v juming-nginx:/etc/nginx nginx

6a01e4f83b6def5f07efb240ba0be7b1945d3a76b2bf4f6c60ccd44cd9e73323

[root@localhost home]# docker volume ls

DRIVER VOLUME NAME

local 93281dfc1e95e7175875d8282f14f26236ee8a5eadcc8a88ffaaa6fa30a4e66e

local 3275472bde7776fda7d76cebd4283b0a081241145a73cf5c7573a01e34f81290

local juming-nginx

[root@localhost home]#

# Named mounts use -v VOLUME_NAME:CONTAINER_PATH

# Inspect the volume

[root@localhost home]# docker volume inspect juming-nginx

[

{

"CreatedAt": "2020-08-04T20:03:42+08:00",

"Driver": "local",

"Labels": null,

"Mountpoint": "/var/lib/docker/volumes/juming-nginx/_data",

"Name": "juming-nginx",

"Options": null,

"Scope": "local"

}

]

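# When no host directory is specified, every volume of a Docker container is stored under /var/lib/docker/volumes/xxxxx/_data

# A named mount makes a volume easy to find, so named mounts are what we use most of the time

# How to tell an anonymous mount, a named mount, and a host-path mount apart

-v CONTAINER_PATH  # anonymous mount

-v VOLUME_NAME:CONTAINER_PATH  # named mount

-v /HOST_PATH:CONTAINER_PATH  # host-path mount

Extension:

# Append :ro or :rw to the -v argument to change the read/write permission

ro  readonly   # read-only

rw  readwrite  # read-write

# Once this permission is set, it restricts what the container can do with the mounted content

docker run -d -P --name nginx02 -v juming-nginx:/etc/nginx:ro nginx

docker run -d -P --name nginx02 -v juming-nginx:/etc/nginx:rw nginx

# ro means this path can only be changed from the host; inside the container it is read-only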
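The forms above can be told apart mechanically: a single field means an anonymous mount, a first field starting with `/` means a host path, and anything else is a volume name; an optional third field is the `ro`/`rw` mode. A minimal sketch of that rule, assuming Linux paths (a Windows drive letter would add an extra colon) and ignoring the long `--mount` syntax; `classify_volume_arg` is a hypothetical helper:

```shell
#!/usr/bin/env bash
# Hypothetical helper: classify a -v argument as anonymous, named, or bind mount.
# Assumes Linux paths; the optional third field is the access mode (ro/rw).
classify_volume_arg() {
  local spec="$1" src dst mode
  IFS=: read -r src dst mode <<<"$spec"
  if [[ -z "$dst" ]]; then
    echo "anonymous target=$src"                                 # -v /container/path
  elif [[ "$src" == /* ]]; then
    echo "bind source=$src target=$dst mode=${mode:-rw}"         # -v /host/path:/container/path
  else
    echo "named volume=$src target=$dst mode=${mode:-rw}"        # -v name:/container/path
  fi
}
```

For example, `classify_volume_arg juming-nginx:/etc/nginx:ro` prints `named volume=juming-nginx target=/etc/nginx mode=ro`.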

VIII. Docker Networking

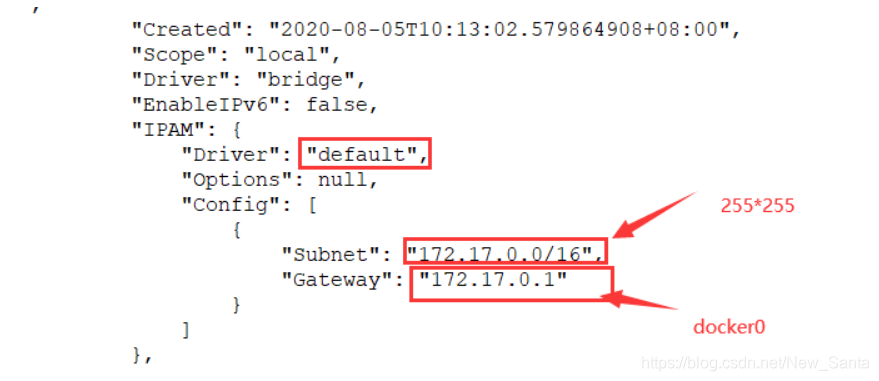

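Understanding docker0

First, clean up the whole environment

1. Test

Running ip addr on the host shows three networks

# Question: how does Docker handle network access for containers?

[root@localhost ~]# docker run -d -P --name tomcat01 tomcat

# Check the container's internal network address with ip addr: when the container starts, it gets an eth0@if5 interface with an IP address assigned by Docker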

[root@localhost ~]# docker exec -it tomcat01 ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

4: eth0@if5: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default

link/ether 02:42:ac:11:00:02 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 172.17.0.2/16 brd 172.17.255.255 scope global eth0

valid_lft forever preferred_lft forever

# Question: can the Linux host ping the inside of the container?

[root@localhost ~]# ping 172.17.0.2

PING 172.17.0.2 (172.17.0.2) 56(84) bytes of data.

64 bytes from 172.17.0.2: icmp_seq=1 ttl=64 time=0.155 ms

64 bytes from 172.17.0.2: icmp_seq=2 ttl=64 time=0.063 ms

64 bytes from 172.17.0.2: icmp_seq=3 ttl=64 time=0.065 ms

64 bytes from 172.17.0.2: icmp_seq=4 ttl=64 time=0.064 ms

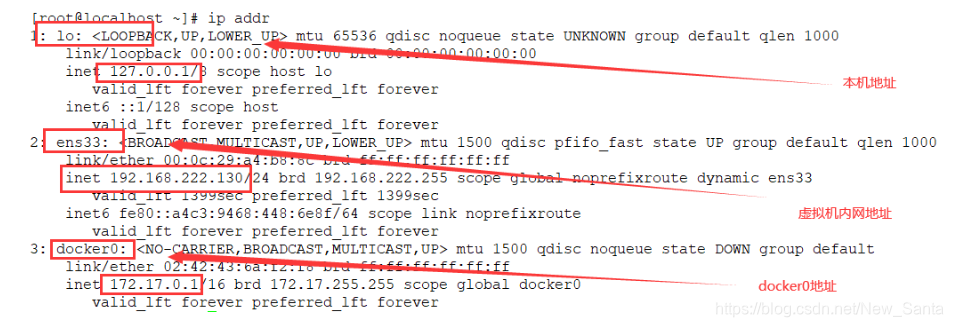

2. How it works

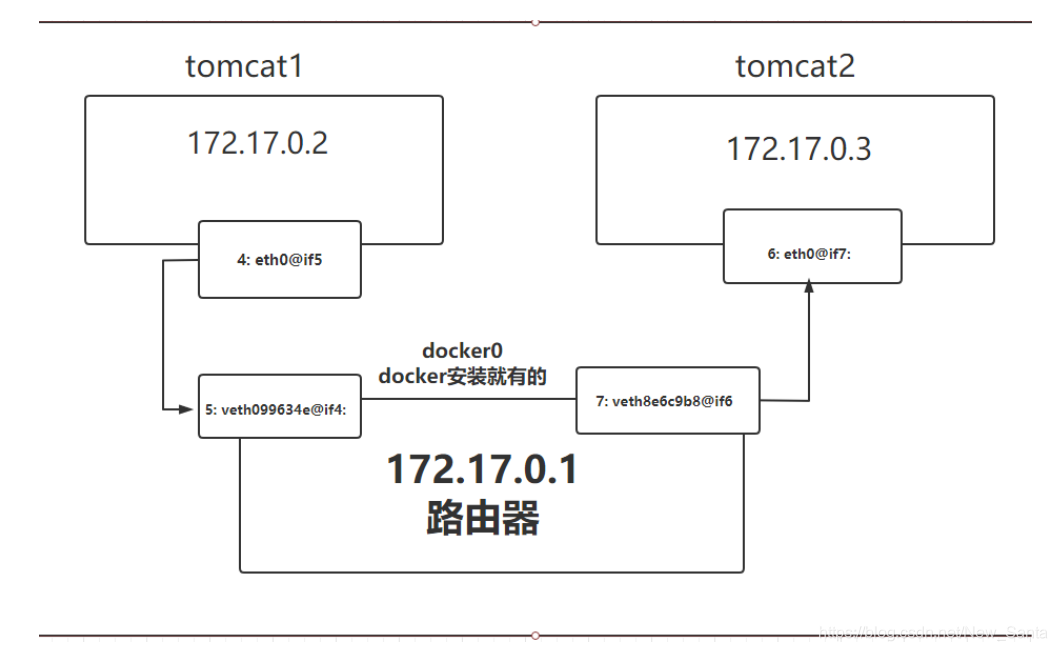

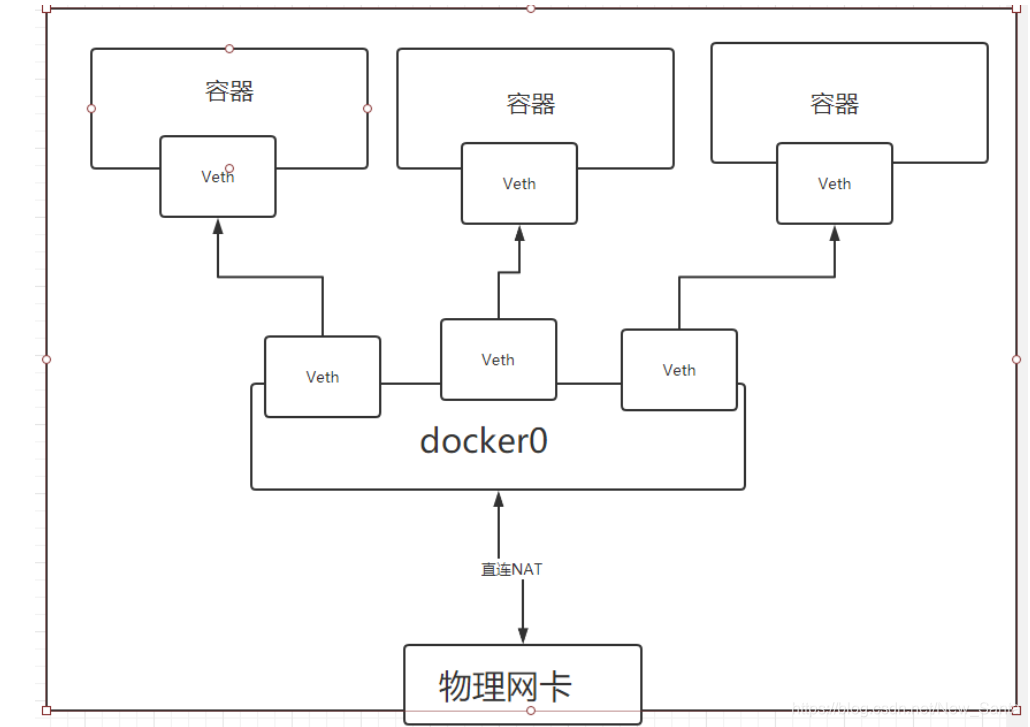

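1. Every time we start a Docker container, Docker assigns it an IP address. As soon as Docker is installed, the host has a network interface named docker0, which works in bridge mode using veth-pair technology!

Run ip addr again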

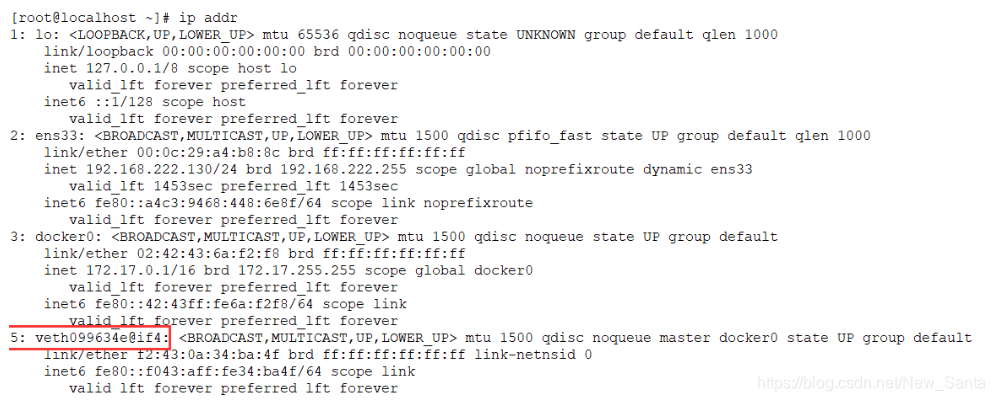

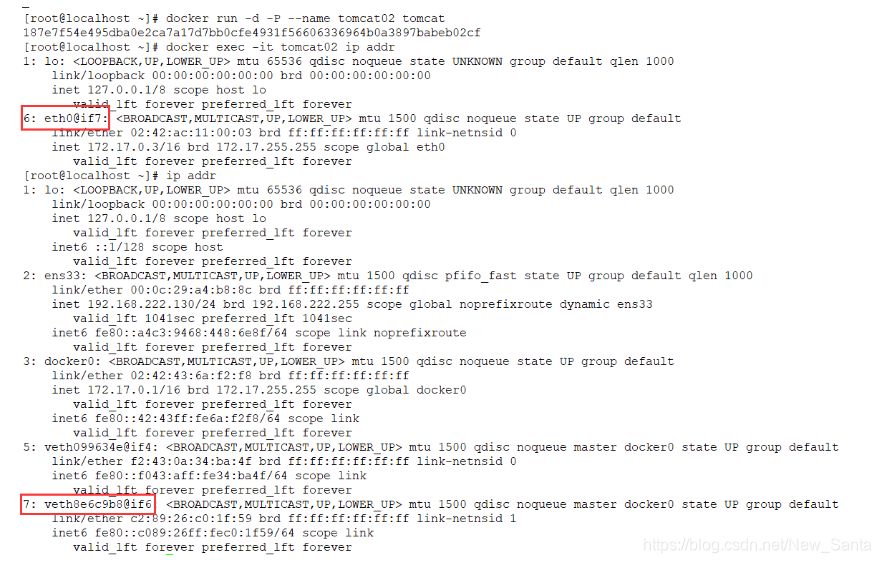

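2. Start another container, and another pair of interfaces appears

# The interfaces that containers bring always come in pairs

# A veth pair is a pair of virtual device interfaces that always appear together: one end attaches to the protocol stack, and the two ends are connected to each other

# Because of this property, a veth pair acts as a bridge connecting all kinds of virtual network devices

# OpenStack, connections between Docker containers, and OVS connections all use veth pairs

3. Test whether tomcat01 and tomcat02 can reach each other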

[root@localhost ~]# docker exec -it tomcat01 ping 172.17.0.3

PING 172.17.0.3 (172.17.0.3) 56(84) bytes of data.

64 bytes from 172.17.0.3: icmp_seq=1 ttl=64 time=0.250 ms

64 bytes from 172.17.0.3: icmp_seq=2 ttl=64 time=0.051 ms

64 bytes from 172.17.0.3: icmp_seq=3 ttl=64 time=0.043 ms

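# Conclusion: containers can ping each other

Drawing this as a network model:

Conclusion: tomcat01 and tomcat02 share the same router (docker0)

When no network is specified, all containers are routed through docker0, and Docker assigns each container an available IP address by default

3. Summary

Docker uses Linux bridging; on the host, docker0 is the bridge for the Docker containers' network

All network interfaces in Docker are virtual, and virtual interfaces forward traffic efficiently (e.g. transferring files within the internal network)

As soon as a container is deleted, its veth pair is deleted too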

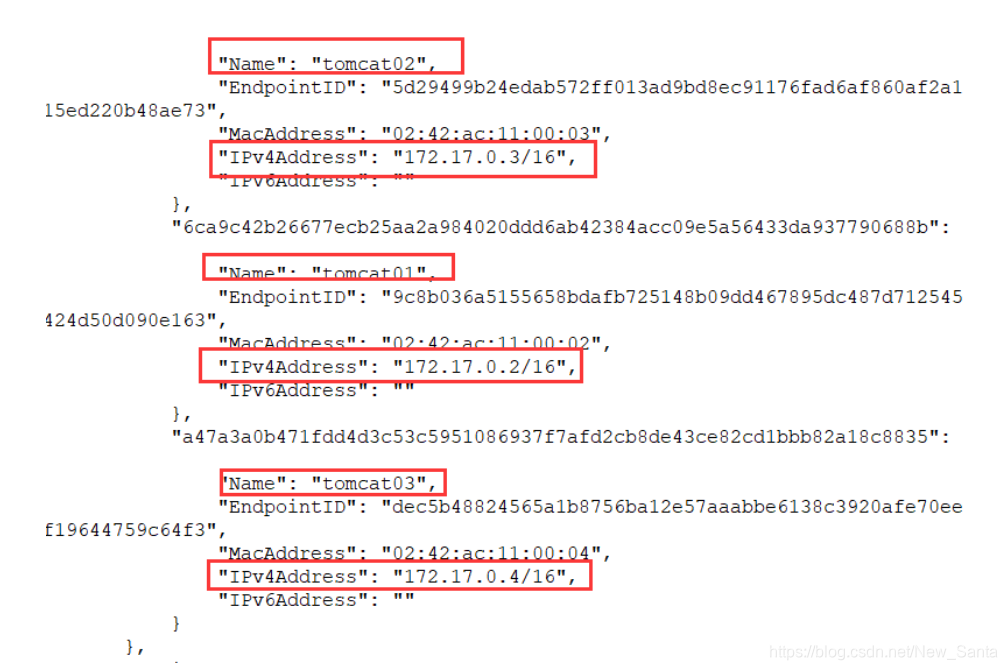

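--link

Consider a scenario: we write a microservice with database url=ip. If the database IP changes while the project keeps running, we would like to handle it by accessing containers by name instead.

[root@localhost ~]# docker exec -it tomcat01 ping tomcat02

ping: tomcat02: Name or service not known

# How can we solve this?

# --link solves this connectivity problem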

[root@localhost ~]# docker run -d -P --name tomcat03 --link tomcat02 tomcat

a47a3a0b471fdd4d3c53c5951086937f7afd2cb8de43ce82cd1bbb82a18c8835

[root@localhost ~]# docker exec -it tomcat03 ping tomcat02

PING tomcat02 (172.17.0.3) 56(84) bytes of data.

64 bytes from tomcat02 (172.17.0.3): icmp_seq=1 ttl=64 time=0.289 ms

64 bytes from tomcat02 (172.17.0.3): icmp_seq=2 ttl=64 time=0.047 ms

64 bytes from tomcat02 (172.17.0.3): icmp_seq=3 ttl=64 time=0.101 ms

# Does it work in the reverse direction?

[root@localhost ~]# docker exec -it tomcat02 ping tomcat03

ping: tomcat03: Name or service not known

1. Investigate: inspect!

[root@localhost ~]# docker network ls

NETWORK ID NAME DRIVER SCOPE

dd239eba8b50 bridge bridge local

d8fc167e18d7 host host local

4c3b8a98dd1a none null local

[root@localhost ~]# docker network inspect dd239eba8b50

In fact, tomcat03 simply has tomcat02's address configured locally.

# Look at the hosts file; this is where the mechanism shows up

[root@localhost ~]# docker exec -it tomcat03 cat /etc/hosts

127.0.0.1 localhost

::1 localhost ip6-localhost ip6-loopback

fe00::0 ip6-localnet

ff00::0 ip6-mcastprefix

ff02::1 ip6-allnodes

ff02::2 ip6-allrouters

172.17.0.3 tomcat02 187e7f54e495

172.17.0.4 a47a3a0b471f

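2. What --link really does

In essence, --link just adds the line 172.17.0.3 tomcat02 187e7f54e495 to tomcat03's /etc/hosts. Nothing is added to tomcat02's hosts file, which is why the reverse ping fails.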
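Because --link only writes a line into the container's /etc/hosts, name lookup here is a plain table lookup. This is a minimal sketch of that lookup. It assumes the standard hosts format: an IP followed by one or more names. `hosts_lookup` is a hypothetical helper; on a real system `getent hosts` does this job.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the IP mapped to name $1 in a hosts-format file ($2,
# default /etc/hosts), mimicking what the resolver does with the entry --link adds.
hosts_lookup() {
  local name=$1 file=${2:-/etc/hosts}
  awk -v n="$name" '
    /^[[:space:]]*#/ { next }            # skip comment lines
    { for (i = 2; i <= NF; i++)          # field 1 is the IP, the rest are names
        if ($i == n) { print $1; exit } }
  ' "$file"
}
```

Usage (the /tmp path is only an example): `docker exec tomcat03 cat /etc/hosts > /tmp/hosts-tomcat03` followed by `hosts_lookup tomcat02 /tmp/hosts-tomcat03` prints 172.17.0.3. The same lookup against tomcat02's hosts file for the name tomcat03 prints nothing.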

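Using --link is no longer recommended in current Docker

Use a custom network instead of docker0

The problem with docker0: it does not support access by container name!

Custom networks

1. List networks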

[root@localhost ~]# docker network ls

NETWORK ID NAME DRIVER SCOPE

dd239eba8b50 bridge bridge local

d8fc167e18d7 host host local

4c3b8a98dd1a none null local

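Network modes

bridge: bridged networking via docker0 (the default; networks you create yourself also use bridge mode by default)

none: no networking configured

host: share the host's network stack

container: share another container's network namespace (rarely used, quite limited!)

Test

# A plain docker run implicitly uses --net bridge, i.e. docker0, so the two commands below are equivalent (run only one of them, since both use the name tomcat01)

[root@localhost ~]# docker run -d -P --name tomcat01 tomcat

[root@localhost ~]# docker run -d -P --name tomcat01 --net bridge tomcat

# docker0 characteristics: it is the default, container names cannot be resolved, and --link can patch a connection through

2. Create a custom network

docker network create --driver <mode> --subnet <subnet CIDR> --gateway <gateway IP> <network name>

# We can define our own network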

#--driver bridge

#--subnet 192.168.0.0/16

#--gateway 192.168.0.1

[root@localhost ~]# docker network create --driver bridge --subnet 192.168.0.0/16 --gateway 192.168.0.1 mynet

0cbed26d0e489dde6a1a863995a7b6def10360620a5841c4289de9abee2543ff

[root@localhost ~]# docker network ls

NETWORK ID NAME DRIVER SCOPE

dd239eba8b50 bridge bridge local

d8fc167e18d7 host host local

0cbed26d0e48 mynet bridge local

4c3b8a98dd1a none null local

[root@localhost ~]#

# The custom network has been created

[root@localhost ~]# docker network inspect mynet

[

{

"Name": "mynet",

"Id": "0cbed26d0e489dde6a1a863995a7b6def10360620a5841c4289de9abee2543ff",

"Created": "2020-08-05T17:04:08.391944894+08:00",

"Scope": "local",

"Driver": "bridge",

"EnableIPv6": false,

"IPAM": {

"Driver": "default",

"Options": {},

"Config": [

{

"Subnet": "192.168.0.0/16",

"Gateway": "192.168.0.1"

}

]

},

"Internal": false,

"Attachable": false,

"Ingress": false,

"ConfigFrom": {

"Network": ""

},

"ConfigOnly": false,

"Containers": {},

"Options": {},

"Labels": {}

}

]
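The IPAM config above shows the subnet 192.168.0.0/16 and the gateway 192.168.0.1. The gateway, and any fixed `--ip` passed to `docker run` later, must fall inside that subnet. This is a minimal pure-Bash sketch of the membership check, assuming IPv4 dotted-quad input. `ip_to_int` and `ip_in_cidr` are hypothetical helper names.

```shell
#!/usr/bin/env bash
# Hypothetical helper: convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  local IFS=.
  local -a o=($1)   # split "192.168.0.1" on dots
  # 10# forces base 10 so octets such as "08" are not read as octal
  echo $(( (10#${o[0]} << 24) | (10#${o[1]} << 16) | (10#${o[2]} << 8) | 10#${o[3]} ))
}

# Hypothetical helper: succeed if IP $1 lies inside CIDR block $2 (e.g. 192.168.0.0/16).
ip_in_cidr() {
  local ip=$1 net=${2%/*} bits=${2#*/}
  local mask=$(( bits == 0 ? 0 : (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  (( ($(ip_to_int "$ip") & mask) == ($(ip_to_int "$net") & mask) ))
}
```

Usage: `ip_in_cidr 192.168.0.1 192.168.0.0/16 && echo "gateway is inside the subnet"`.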

# Run tomcat containers on the custom network

[root@localhost ~]# docker run -d -P --name tomcat-net-01 --net mynet tomcat

28288c52482ef1f02de66075c2441c85e19e82dbbb9018db930127aca8d4928d

[root@localhost ~]# docker run -d -P --name tomcat-net-02 --net mynet tomcat

189bee452f63f13a60dce43efab964b2cd2849461ac3d6039bd56c978a736d32

[root@localhost ~]# docker network inspect mynet

[

"ConfigOnly": false,

"Containers": {

"189bee452f63f13a60dce43efab964b2cd2849461ac3d6039bd56c978a736d32": {

"Name": "tomcat-net-02",

"EndpointID": "03e91dbfebddb04dd55c9e0923038f8cd78bdf845f9fe66f7dea169faf58dfa5",

"MacAddress": "02:42:c0:a8:00:03",

"IPv4Address": "192.168.0.3/16",

"IPv6Address": ""

},

"28288c52482ef1f02de66075c2441c85e19e82dbbb9018db930127aca8d4928d": {

"Name": "tomcat-net-01",

"EndpointID": "b8b0025806ea9f023830bdbeafcaaf49eaca9c96559e89b0cfab4160cc00faad",

"MacAddress": "02:42:c0:a8:00:02",

"IPv4Address": "192.168.0.2/16",

"IPv6Address": ""

}

},

"Options": {},

"Labels": {}

}

]
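The Containers section above can be printed in a compact form with a Go template. This is a minimal sketch that relies on the field names visible in that output (`Name`, `IPv4Address`). `network_members` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print "<container name> <IPv4 address>" for each container
# attached to Docker network $1, dropping the "/16" prefix length and blank lines.
network_members() {
  docker network inspect -f '{{range .Containers}}{{println .Name .IPv4Address}}{{end}}' "$1" |
    sed -e 's#/[0-9]*$##' -e '/^$/d'
}
```

Usage: `network_members mynet` should list tomcat-net-01 and tomcat-net-02 with their 192.168.0.x addresses.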

# Test ping again

[root@localhost ~]# docker exec -it tomcat-net-01 ping 192.168.0.3

PING 192.168.0.3 (192.168.0.3) 56(84) bytes of data.

64 bytes from 192.168.0.3: icmp_seq=1 ttl=64 time=5.23 ms

64 bytes from 192.168.0.3: icmp_seq=2 ttl=64 time=0.082 ms

64 bytes from 192.168.0.3: icmp_seq=3 ttl=64 time=0.053 ms

^C

--- 192.168.0.3 ping statistics ---

3 packets transmitted, 3 received, 0% packet loss, time 4ms

rtt min/avg/max/mdev = 0.053/1.788/5.230/2.433 ms

# Names can now be pinged without --link

[root@localhost ~]# docker exec -it tomcat-net-01 ping tomcat-net-02

PING tomcat-net-02 (192.168.0.3) 56(84) bytes of data.

64 bytes from tomcat-net-02.mynet (192.168.0.3): icmp_seq=1 ttl=64 time=0.055 ms

64 bytes from tomcat-net-02.mynet (192.168.0.3): icmp_seq=2 ttl=64 time=0.092 ms

64 bytes from tomcat-net-02.mynet (192.168.0.3): icmp_seq=3 ttl=64 time=0.060 ms

64 bytes from tomcat-net-02.mynet (192.168.0.3): icmp_seq=4 ttl=64 time=0.066 ms

^C

--- tomcat-net-02 ping statistics ---

4 packets transmitted, 4 received, 0% packet loss, time 4ms

rtt min/avg/max/mdev = 0.055/0.068/0.092/0.015 ms

Custom Docker networks already maintain the name-to-IP mapping for us, so this is the recommended way to use networks day to day.
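On a user-defined network, Docker's embedded DNS server (reachable at 127.0.0.11 inside the container) answers queries for container names. That is why the ping by name works without --link. This is a minimal sketch that checks name resolution without sending ICMP. It assumes the image ships `getent`, which most Debian/Ubuntu-based images do but which is not guaranteed for every image. `resolves` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: check whether container $1 can resolve name $2.
# getent prints "<ip> <name>" on success and exits non-zero otherwise.
resolves() {
  docker exec "$1" getent hosts "$2"
}
```

Usage: `resolves tomcat-net-01 tomcat-net-02` should print 192.168.0.3. On the default bridge, `resolves tomcat01 tomcat02` is expected to fail.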

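Benefits:

Redis cluster: different clusters use different networks, keeping each cluster secure and healthy

MySQL cluster: likewise, different clusters use different networks, keeping each cluster secure and healthy

Connecting networks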

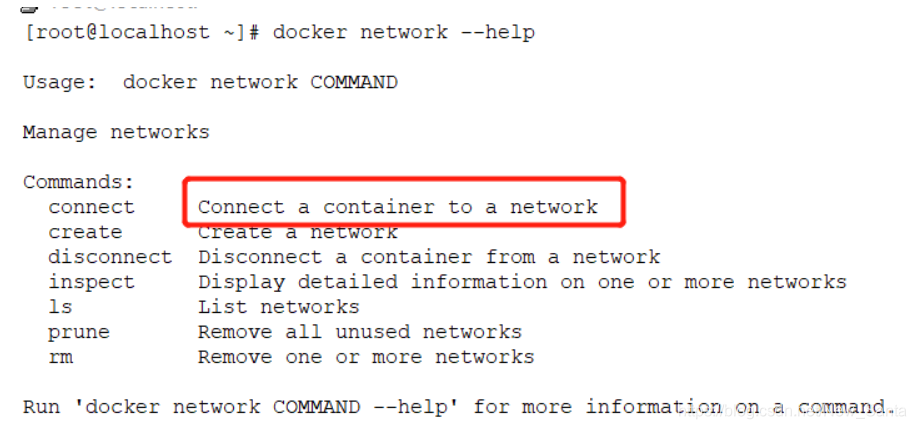

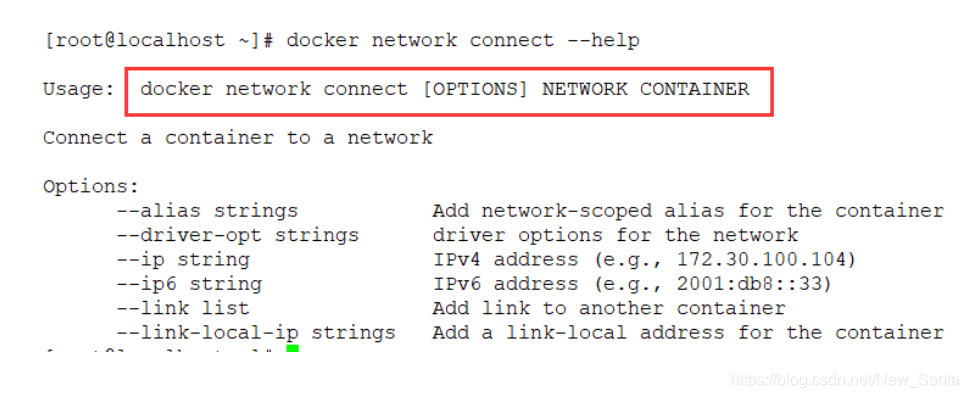

1. Look at how docker network connect is defined (docker network connect --help)

2. Connect the networks

# Test: connect tomcat01 to mynet

[root@localhost ~]# docker network connect mynet tomcat01

[root@localhost ~]# docker network inspect mynet

"Containers": {

"189bee452f63f13a60dce43efab964b2cd2849461ac3d6039bd56c978a736d32": {

"Name": "tomcat-net-02",

"EndpointID": "03e91dbfebddb04dd55c9e0923038f8cd78bdf845f9fe66f7dea169faf58dfa5",

"MacAddress": "02:42:c0:a8:00:03",

"IPv4Address": "192.168.0.3/16",

"IPv6Address": ""

},

"28288c52482ef1f02de66075c2441c85e19e82dbbb9018db930127aca8d4928d": {

"Name": "tomcat-net-01",

"EndpointID": "b8b0025806ea9f023830bdbeafcaaf49eaca9c96559e89b0cfab4160cc00faad",

"MacAddress": "02:42:c0:a8:00:02",

"IPv4Address": "192.168.0.2/16",

"IPv6Address": ""

},

"d6ac64aa21d1db40bd1a89930c641339e9246fea71825494580a9a73dffa604e": {

"Name": "tomcat01",

"EndpointID": "ecbd521c8f0b7b4747729673ab1c56e375944388f28f289dd1340dbcd3d5a1a7",

"MacAddress": "02:42:c0:a8:00:04",

"IPv4Address": "192.168.0.4/16",

"IPv6Address": ""

}

# After connecting, tomcat01 is also placed on the mynet network

# One container, two IP addresses

# Test ping

[root@localhost ~]# docker exec -it tomcat01 ping tomcat-net-01

PING tomcat-net-01 (192.168.0.2) 56(84) bytes of data.

64 bytes from tomcat-net-01.mynet (192.168.0.2): icmp_seq=1 ttl=64 time=0.209 ms

64 bytes from tomcat-net-01.mynet (192.168.0.2): icmp_seq=2 ttl=64 time=0.075 ms

64 bytes from tomcat-net-01.mynet (192.168.0.2): icmp_seq=3 ttl=64 time=0.061 ms

64 bytes from tomcat-net-01.mynet (192.168.0.2): icmp_seq=4 ttl=64 time=0.071 ms

64 bytes from tomcat-net-01.mynet (192.168.0.2): icmp_seq=5 ttl=64 time=0.070 ms

64 bytes from tomcat-net-01.mynet (192.168.0.2): icmp_seq=6 ttl=64 time=0.063 ms

64 bytes from tomcat-net-01.mynet (192.168.0.2): icmp_seq=7 ttl=64 time=0.083 ms
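To see the "one container, two IPs" state directly, list every network a container is attached to, together with its address on each. This is a minimal sketch using a Go template over `.NetworkSettings.Networks`. `networks_of` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print "<network> <IP>" for every network container $1 is on.
networks_of() {
  docker inspect -f '{{range $net, $cfg := .NetworkSettings.Networks}}{{println $net $cfg.IPAddress}}{{end}}' "$1" |
    sed '/^$/d'
}
```

Usage: after the connect, `networks_of tomcat01` should print one line for bridge and one for mynet (192.168.0.4 above). `docker network disconnect mynet tomcat01` removes the second one again.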

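3. Conclusion

Conclusion: to work across networks (e.g. reach a container on another network), connect the container to that network with docker network connect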

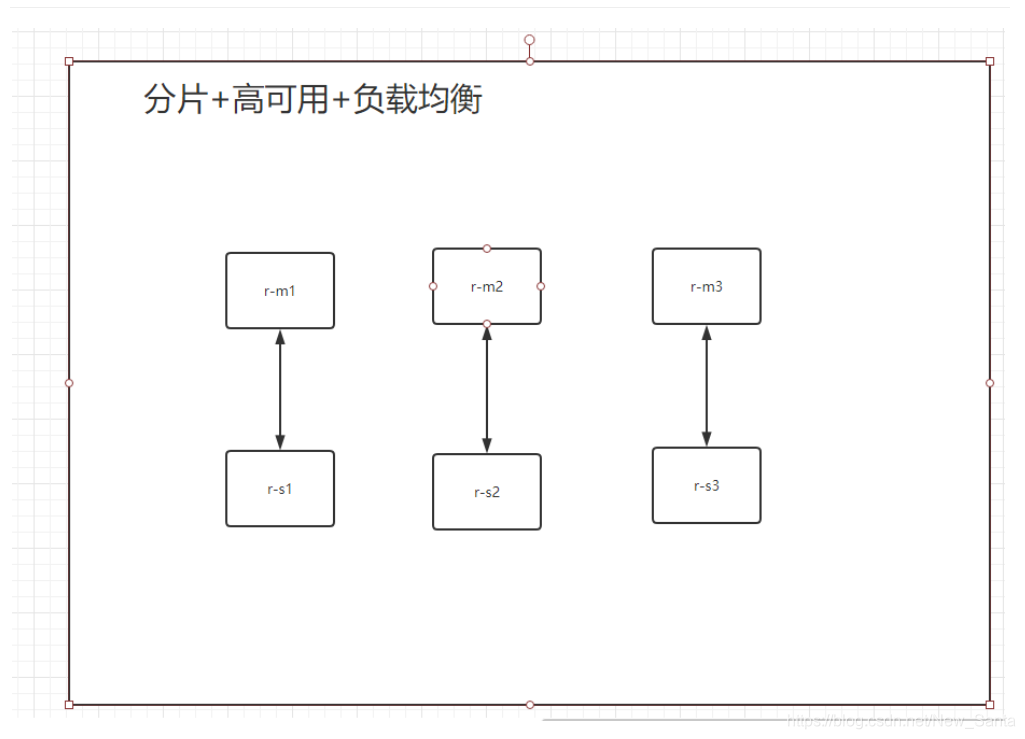

IX. Hands-on: deploying a Redis cluster

Steps

# Create the network for the Redis cluster

[root@localhost ~]# docker network create redis --subnet 172.38.0.0/16

1c90b823cc2fdd07ef05b5bfd0626376cf8d033df71866081fe5099fc58f5045

# List networks

[root@localhost ~]# docker network ls

NETWORK ID NAME DRIVER SCOPE

dd239eba8b50 bridge bridge local

d8fc167e18d7 host host local

0cbed26d0e48 mynet bridge local

4c3b8a98dd1a none null local

1c90b823cc2f redis bridge local

# Inspect the redis network

[root@localhost ~]# docker network inspect redis

[

{

"Name": "redis",

"Id": "1c90b823cc2fdd07ef05b5bfd0626376cf8d033df71866081fe5099fc58f5045",

"Created": "2020-08-05T18:20:22.384547748+08:00",

"Scope": "local",

"Driver": "bridge",

"EnableIPv6": false,

"IPAM": {

"Driver": "default",

"Options": {},

"Config": [

{

"Subnet": "172.38.0.0/16"

}

]

},

"Internal": false,

"Attachable": false,

"Ingress": false,

"ConfigFrom": {

"Network": ""

},

"ConfigOnly": false,

"Containers": {},

"Options": {},

"Labels": {}

}

]

# Create six Redis configuration files with a script

for port in $(seq 1 6);\

do \

mkdir -p /mydata/redis/node-${port}/conf

touch /mydata/redis/node-${port}/conf/redis.conf

cat <<EOF >/mydata/redis/node-${port}/conf/redis.conf

port 6379

bind 0.0.0.0

cluster-enabled yes

cluster-config-file nodes.conf

cluster-node-timeout 5000

cluster-announce-ip 172.38.0.1${port}

cluster-announce-port 6379

cluster-announce-bus-port 16379

appendonly yes

EOF

done
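The same loop can be wrapped in a function that takes the base directory and the node count as parameters, so it is easy to try in a scratch directory first. This is a minimal sketch. It assumes at most 9 nodes, because the announce IP is built as `172.38.0.1<N>` exactly as in the loop above. `gen_redis_confs` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write node-<N>/conf/redis.conf under $1 for N = 1..$2 (default 6).
# The announce IP 172.38.0.1<N> only works for N <= 9, as in the original loop.
gen_redis_confs() {
  local base=$1 count=${2:-6} n
  for n in $(seq 1 "$count"); do
    mkdir -p "$base/node-$n/conf"
    cat > "$base/node-$n/conf/redis.conf" <<EOF
port 6379
bind 0.0.0.0
cluster-enabled yes
cluster-config-file nodes.conf
cluster-node-timeout 5000
cluster-announce-ip 172.38.0.1$n
cluster-announce-port 6379
cluster-announce-bus-port 16379
appendonly yes
EOF
  done
}
```

Usage: `gen_redis_confs /mydata/redis 6` produces the same files as the loop above.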

# Check that the six configuration files were created

[root@localhost ~]# cd /mydata

[root@localhost mydata]# ls

redis

[root@localhost mydata]# cd redis

[root@localhost redis]# ls

node-1 node-2 node-3 node-4 node-5 node-6

[root@localhost redis]# cd node-1

[root@localhost node-1]# ls

conf

[root@localhost node-1]# cd conf

[root@localhost conf]# ls

redis.conf

[root@localhost conf]# cat redis.conf

port 6379

bind 0.0.0.0

cluster-enabled yes

cluster-config-file nodes.conf

cluster-node-timeout 5000

cluster-announce-ip 172.38.0.11

cluster-announce-port 6379

cluster-announce-bus-port 16379

appendonly yes

# Start one Redis container

docker run -p 6371:6379 -p 16371:16379 --name redis-1 \

-v /mydata/redis/node-1/data:/data \

-v /mydata/redis/node-1/conf/redis.conf:/etc/redis/redis.conf \

-d --net redis --ip 172.38.0.11 redis:5.0.9-alpine3.11 redis-server /etc/redis/redis.conf

# Started successfully

[root@localhost conf]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

e9cbeb4a896c redis:5.0.9-alpine3.11 "docker-entrypoint.s…" 20 seconds ago Up 17 seconds 0.0.0.0:6371->6379/tcp, 0.0.0.0:16371->16379/tcp redis-1

# Start the remaining Redis containers the same way

docker run -p 6372:6379 -p 16372:16379 --name redis-2 \

-v /mydata/redis/node-2/data:/data \

-v /mydata/redis/node-2/conf/redis.conf:/etc/redis/redis.conf \

-d --net redis --ip 172.38.0.12 redis:5.0.9-alpine3.11 redis-server /etc/redis/redis.conf

docker run -p 6373:6379 -p 16373:16379 --name redis-3 \

-v /mydata/redis/node-3/data:/data \

-v /mydata/redis/node-3/conf/redis.conf:/etc/redis/redis.conf \

-d --net redis --ip 172.38.0.13 redis:5.0.9-alpine3.11 redis-server /etc/redis/redis.conf

docker run -p 6374:6379 -p 16374:16379 --name redis-4 \

-v /mydata/redis/node-4/data:/data \

-v /mydata/redis/node-4/conf/redis.conf:/etc/redis/redis.conf \

-d --net redis --ip 172.38.0.14 redis:5.0.9-alpine3.11 redis-server /etc/redis/redis.conf

docker run -p 6375:6379 -p 16375:16379 --name redis-5 \

-v /mydata/redis/node-5/data:/data \

-v /mydata/redis/node-5/conf/redis.conf:/etc/redis/redis.conf \

-d --net redis --ip 172.38.0.15 redis:5.0.9-alpine3.11 redis-server /etc/redis/redis.conf

docker run -p 6376:6379 -p 16376:16379 --name redis-6 \

-v /mydata/redis/node-6/data:/data \

-v /mydata/redis/node-6/conf/redis.conf:/etc/redis/redis.conf \

-d --net redis --ip 172.38.0.16 redis:5.0.9-alpine3.11 redis-server /etc/redis/redis.conf
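The six `docker run` commands differ only in the node number, so they can be generated in a loop. This is a minimal sketch that keeps the same flags, paths, image and numbering scheme as above: host ports 637N/1637N and fixed IP 172.38.0.1N. Like the config loop, it only works for up to 9 nodes. `start_redis_nodes` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: start redis-1..redis-N (default 6) with the same flags as
# the docker run commands above; N must be <= 9 for the port/IP scheme to hold.
start_redis_nodes() {
  local count=${1:-6} n
  for n in $(seq 1 "$count"); do
    docker run -d --name "redis-$n" \
      -p "637$n:6379" -p "1637$n:16379" \
      -v "/mydata/redis/node-$n/data:/data" \
      -v "/mydata/redis/node-$n/conf/redis.conf:/etc/redis/redis.conf" \
      --net redis --ip "172.38.0.1$n" \
      redis:5.0.9-alpine3.11 redis-server /etc/redis/redis.conf
  done
}
```

Usage: `start_redis_nodes 6`.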

# Check that all of them are running

[root@localhost conf]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

0bc8cad03885 redis:5.0.9-alpine3.11 "docker-entrypoint.s…" 5 seconds ago Up 3 seconds 0.0.0.0:6376->6379/tcp, 0.0.0.0:16376->16379/tcp redis-6

f8a4ad0617f8 redis:5.0.9-alpine3.11 "docker-entrypoint.s…" 39 seconds ago Up 38 seconds 0.0.0.0:6375->6379/tcp, 0.0.0.0:16375->16379/tcp redis-5

8cbc974c2434 redis:5.0.9-alpine3.11 "docker-entrypoint.s…" About a minute ago Up About a minute 0.0.0.0:6374->6379/tcp, 0.0.0.0:16374->16379/tcp redis-4

6e3fb80cdf32 redis:5.0.9-alpine3.11 "docker-entrypoint.s…" 2 minutes ago Up 2 minutes 0.0.0.0:6373->6379/tcp, 0.0.0.0:16373->16379/tcp redis-3

25dd4eef0497 redis:5.0.9-alpine3.11 "docker-entrypoint.s…" 4 minutes ago Up 4 minutes 0.0.0.0:6372->6379/tcp, 0.0.0.0:16372->16379/tcp redis-2

e9cbeb4a896c redis:5.0.9-alpine3.11 "docker-entrypoint.s…" 6 minutes ago Up 6 minutes 0.0.0.0:6371->6379/tcp, 0.0.0.0:16371->16379/tcp redis-1
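The six addresses passed to `redis-cli --cluster create` follow the same `172.38.0.1<N>:6379` scheme, so the list can be generated rather than typed. This is a minimal sketch assuming those IPs and port. `cluster_node_list` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print "172.38.0.11:6379 ... 172.38.0.1N:6379" for N nodes (default 6).
cluster_node_list() {
  local count=${1:-6} n out=()
  for n in $(seq 1 "$count"); do
    out+=("172.38.0.1$n:6379")
  done
  echo "${out[*]}"
}
```

Usage from the host: `docker exec -it redis-1 redis-cli --cluster create $(cluster_node_list 6) --cluster-replicas 1`. The substitution is deliberately unquoted so that each address becomes a separate argument.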

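# Create the Redis cluster (run from a shell inside one of the nodes, e.g. after docker exec -it redis-1 /bin/sh; /data # is that container's prompt)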

/data # redis-cli --cluster create 172.38.0.11:6379 172.38.0.12:6379 172.38.0.13:6379 172.38.0.14:6379 172.38.0.15:6379 172.38.0.16:6379 --cluster-replicas 1

>>> Performing hash slots allocation on 6 nodes...

Master[0] -> Slots 0 - 5460

Master[1] -> Slots 5461 - 10922

Master[2] -> Slots 10923 - 16383

Adding replica 172.38.0.15:6379 to 172.38.0.11:6379

Adding replica 172.38.0.16:6379 to 172.38.0.12:6379

Adding replica 172.38.0.14:6379 to 172.38.0.13:6379

M: 280bda613bd48f17db047979982244a5b4a41d11 172.38.0.11:6379

slots:[0-5460] (5461 slots) master

M: e30ac384e7fc069e49d7e8688ab137d332103ebb 172.38.0.12:6379

slots:[5461-10922] (5462 slots) master

M: 4a11729acb351604f0f2d595dfbaa73e2b71b159 172.38.0.13:6379

slots:[10923-16383] (5461 slots) master

S: d3fb3593655c70206f7903731099fb8e3594116e 172.38.0.14:6379

replicates 4a11729acb351604f0f2d595dfbaa73e2b71b159

S: a4fe50c48bf4f5ef086872680605a16a8f0c3c34 172.38.0.15:6379

replicates 280bda613bd48f17db047979982244a5b4a41d11

S: 1cf7386a961028ff4f2d3df310474387329b2a2a 172.38.0.16:6379

replicates e30ac384e7fc069e49d7e8688ab137d332103ebb

Can I set the above configuration? (type 'yes' to accept): yes

>>> Nodes configuration updated

>>> Assign a different config epoch to each node

>>> Sending CLUSTER MEET messages to join the cluster

Waiting for the cluster to join

..

>>> Performing Cluster Check (using node 172.38.0.11:6379)

M: 280bda613bd48f17db047979982244a5b4a41d11 172.38.0.11:6379

slots:[0-5460] (5461 slots) master

1 additional replica(s)

M: 4a11729acb351604f0f2d595dfbaa73e2b71b159 172.38.0.13:6379

slots:[10923-16383] (5461 slots) master

1 additional replica(s)

S: 1cf7386a961028ff4f2d3df310474387329b2a2a 172.38.0.16:6379

slots: (0 slots) slave

replicates e30ac384e7fc069e49d7e8688ab137d332103ebb

S: a4fe50c48bf4f5ef086872680605a16a8f0c3c34 172.38.0.15:6379

slots: (0 slots) slave

replicates 280bda613bd48f17db047979982244a5b4a41d11

M: e30ac384e7fc069e49d7e8688ab137d332103ebb 172.38.0.12:6379

slots:[5461-10922] (5462 slots) master

1 additional replica(s)

S: d3fb3593655c70206f7903731099fb8e3594116e 172.38.0.14:6379

slots: (0 slots) slave

replicates 4a11729acb351604f0f2d595dfbaa73e2b71b159

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.
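Redis Cluster always has 16384 hash slots. With 3 masters, each gets about 16384 / 3 ≈ 5461.33 slots, and rounding the running boundary gives the ranges 0-5460, 5461-10922 and 10923-16383 reported above, i.e. 5461, 5462 and 5461 slots. This is a minimal sketch that reproduces that split with integer arithmetic. It is an illustration, not redis-cli's own code, and `slot_ranges` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: split the 16384 Redis Cluster slots among $1 masters,
# printing "first-last" per master. Boundary j ends at round(j * 16384 / n) - 1.
slot_ranges() {
  local n=$1 total=16384 j first=0 last
  for (( j = 1; j <= n; j++ )); do
    # round(a / b) with integers is (2a + b) / (2b)
    last=$(( (2 * j * total + n) / (2 * n) - 1 ))
    echo "$first-$last"
    first=$(( last + 1 ))
  done
}
```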

# Show cluster information

/data # redis-cli -c

127.0.0.1:6379> cluster info

cluster_state:ok

cluster_slots_assigned:16384

cluster_slots_ok:16384

cluster_slots_pfail:0

cluster_slots_fail:0

cluster_known_nodes:6

cluster_size:3

cluster_current_epoch:6

cluster_my_epoch:1

cluster_stats_messages_ping_sent:344

cluster_stats_messages_pong_sent:329

cluster_stats_messages_sent:673

cluster_stats_messages_ping_received:324

cluster_stats_messages_pong_received:344

cluster_stats_messages_meet_received:5

cluster_stats_messages_received:673

# Show cluster nodes

127.0.0.1:6379> cluster nodes

4a11729acb351604f0f2d595dfbaa73e2b71b159 172.38.0.13:6379@16379 master - 0 1596626009675 3 connected 10923-16383

1cf7386a961028ff4f2d3df310474387329b2a2a 172.38.0.16:6379@16379 slave e30ac384e7fc069e49d7e8688ab137d332103ebb 0 1596626009000 6 connected

a4fe50c48bf4f5ef086872680605a16a8f0c3c34 172.38.0.15:6379@16379 slave 280bda613bd48f17db047979982244a5b4a41d11 0 1596626009000 5 connected

e30ac384e7fc069e49d7e8688ab137d332103ebb 172.38.0.12:6379@16379 master - 0 1596626010000 2 connected 5461-10922

d3fb3593655c70206f7903731099fb8e3594116e 172.38.0.14:6379@16379 slave 4a11729acb351604f0f2d595dfbaa73e2b71b159 0 1596626010681 4 connected

280bda613bd48f17db047979982244a5b4a41d11 172.38.0.11:6379@16379 myself,master - 0 1596626009000 1 connected 0-5460

# Test the Redis cluster

127.0.0.1:6379> set a b

-> Redirected to slot [15495] located at 172.38.0.13:6379

OK
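The redirect happens because Redis Cluster maps every key to a slot with CRC16(key) mod 16384. Slot 15495 falls in 10923-16383, which belongs to the master at 172.38.0.13. This is a minimal pure-Bash sketch of that mapping (CRC16 XMODEM variant: polynomial 0x1021, initial value 0). It assumes ASCII keys and ignores the `{hash tag}` rule Redis applies to keys containing braces. `key_slot` is a hypothetical helper; inside redis-cli, `CLUSTER KEYSLOT a` gives the authoritative answer.

```shell
#!/usr/bin/env bash
# Hypothetical helper: compute the Redis Cluster hash slot of an ASCII key.
# CRC16 (XMODEM: poly 0x1021, init 0), then mod 16384. {hash tags} are NOT handled.
key_slot() {
  local key=$1 crc=0 i bit byte
  for (( i = 0; i < ${#key}; i++ )); do
    printf -v byte '%d' "'${key:i:1}"   # character code of the i-th character
    crc=$(( crc ^ (byte << 8) ))
    for (( bit = 0; bit < 8; bit++ )); do
      if (( crc & 0x8000 )); then
        crc=$(( ((crc << 1) ^ 0x1021) & 0xFFFF ))
      else
        crc=$(( (crc << 1) & 0xFFFF ))
      fi
    done
  done
  echo $(( crc % 16384 ))
}
```

Usage: `key_slot a` gives 15495, the slot in the redirect message above.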

# Stop the redis-3 container (172.38.0.13:6379, the master holding slot 15495) to check high availability

[root@localhost ~]# docker stop redis-3

redis-3

# High availability works: the key is still readable

127.0.0.1:6379> get a

-> Redirected to slot [15495] located at 172.38.0.14:6379

"b"

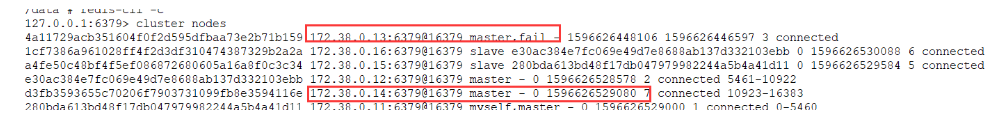

# Cluster nodes after stopping redis-3

127.0.0.1:6379> cluster nodes

4a11729acb351604f0f2d595dfbaa73e2b71b159 172.38.0.13:6379@16379 master,fail - 1596626448106 1596626446597 3 connected

1cf7386a961028ff4f2d3df310474387329b2a2a 172.38.0.16:6379@16379 slave e30ac384e7fc069e49d7e8688ab137d332103ebb 0 1596626530088 6 connected

a4fe50c48bf4f5ef086872680605a16a8f0c3c34 172.38.0.15:6379@16379 slave 280bda613bd48f17db047979982244a5b4a41d11 0 1596626529584 5 connected

e30ac384e7fc069e49d7e8688ab137d332103ebb 172.38.0.12:6379@16379 master - 0 1596626528578 2 connected 5461-10922

d3fb3593655c70206f7903731099fb8e3594116e 172.38.0.14:6379@16379 master - 0 1596626529080 7 connected 10923-16383

280bda613bd48f17db047979982244a5b4a41d11 172.38.0.11:6379@16379 myself,master - 0 1596626529000 1 connected 0-5460

redis-4 (172.38.0.14) has been promoted to master and now serves slots 10923-16383
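The failover can also be read mechanically from `cluster nodes` output. The third field holds the flags, so live masters are the lines whose flags contain `master` but neither `fail` nor `fail?`. This is a minimal sketch that assumes the field layout shown above: id, ip:port@bus, flags, master id, ping-sent, pong-recv, epoch, link state, then slot ranges. `live_masters` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `cluster nodes` output on stdin and print
# "<ip:port> <slot ranges...>" for every master not flagged as failed.
live_masters() {
  awk '{
    n = split($3, flag, ",")
    master = 0; failed = 0
    for (i = 1; i <= n; i++) {
      if (flag[i] == "master") master = 1
      if (flag[i] == "fail" || flag[i] == "fail?") failed = 1
    }
    if (master && !failed) {
      addr = $2; sub(/@.*/, "", addr)   # drop the "@16379" cluster bus port
      slots = ""
      for (i = 9; i <= NF; i++) slots = slots " " $i
      print addr slots
    }
  }'
}
```

Usage: `docker exec redis-1 redis-cli cluster nodes | live_masters`. After stopping redis-3 it should list 172.38.0.12, 172.38.0.14 and 172.38.0.11 with their slot ranges.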

The Redis cluster built with Docker is complete