对抗性攻击

As artificial intelligence(AI) and deep learning evolves to become more mainstream in software solutions, they are going to carry with them other disciplines in the technology space. Security is one of those areas that needs to quickly evolve to keep up with the advancements in deep learning technology. While we typically think about deep learning in a positive context with algorithms trying to improve the intelligence of the solution, deep learning models can also be used to orchestrates security sophisticated attacks. Even more interesting is the fact that deep learning models can be used to compromise the safety of other intelligent model.

随着人工智能(AI)和深度学习在软件解决方案中变得越来越主流,它们将与技术领域的其他学科一起发展。 安全是需要快速发展以跟上深度学习技术进步的领域之一。 尽管我们通常会在积极的环境中考虑深度学习,而算法会尝试提高解决方案的智能性,但深度学习模型也可用于组织安全性复杂的攻击。 更有趣的是,可以使用深度学习模型来损害其他智能模型的安全性。

The idea of deep neural networks attacking other neural networks seems like an inevitable fact in the evolution of the space. As software becomes more intelligent, the security techniques used to attack and defend that software are likely to natively leverage a similar level of intelligence. Deep learning posses challenges for the security space that we haven’t seen before, as we can have software that is able to rapidly adapt and generate new forms of attacks. The deep learning space includes a subdiscipline known as adversarial networks that focuses on creating neural networks that can disrupt the functionality of other models. While adversarial networks are often seen as a game theory artifact to improve the robustness of a deep learning model, they can also be used to create security attacks.

深度神经网络攻击其他神经网络的想法似乎是空间演化中不可避免的事实。 随着软件变得更加智能,用于攻击和防御该软件的安全技术很可能会自然地利用相似的智能水平。 深度学习给安全领域带来了前所未有的挑战,因为我们可以拥有能够快速适应并产生新形式攻击的软件。 深度学习空间包括一个称为对抗网络的子学科,该子学科专注于创建可以破坏其他模型功能的神经网络。 尽管对抗网络通常被视为提高深度学习模型的鲁棒性的博弈论产物,但它们也可用于制造安全攻击。

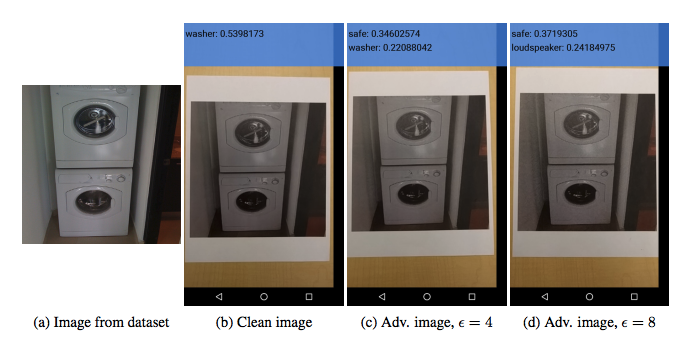

One of the most common scenarios of using adversarial examples to disrupt deep learning classifiers. Adversarial examples are inputs to deep learning models that another network has designed to induce a mistake. In the context of classification models, you can think of adversarial attacks as optical illusions for deep learning agents ? The following image shows you how a small change in the input dataset causes a model to misclassify a washing machine for a speaker.

使用对抗性示例破坏深度学习分类器的最常见场景之一。 对抗性示例是另一个网络旨在引起错误的深度学习模型的输入。 在分类模型的上下文中,您可以将对抗性攻击视为深度学习代理的错觉?下图显示了输入数据集中的微小变化如何导致模型将扬声器的洗衣机错误分类。

If all adversarial attacks were like the example above they wouldn’t be a big deal, However, imagine the same technique used to disrupt an autonomous vehicle by using stickers or paint that project the image of a stop sign. Deep learning luminary Ina Goodfellow describes that approach in a research paper titled Practical Black-Box Attacks Against Machine Learning published a few years ago.

如果所有对抗性攻击都像上面的示例那样,那么没什么大不了的。但是,请想象一下,使用粘贴停车牌图像的贴纸或油漆破坏自动驾驶汽车的相同技术。 深度学习专家Ina Goodfellow在几年前发表的名为“ 针对机器学习的实用黑匣子攻击”的研究论文中描述了这种方法。

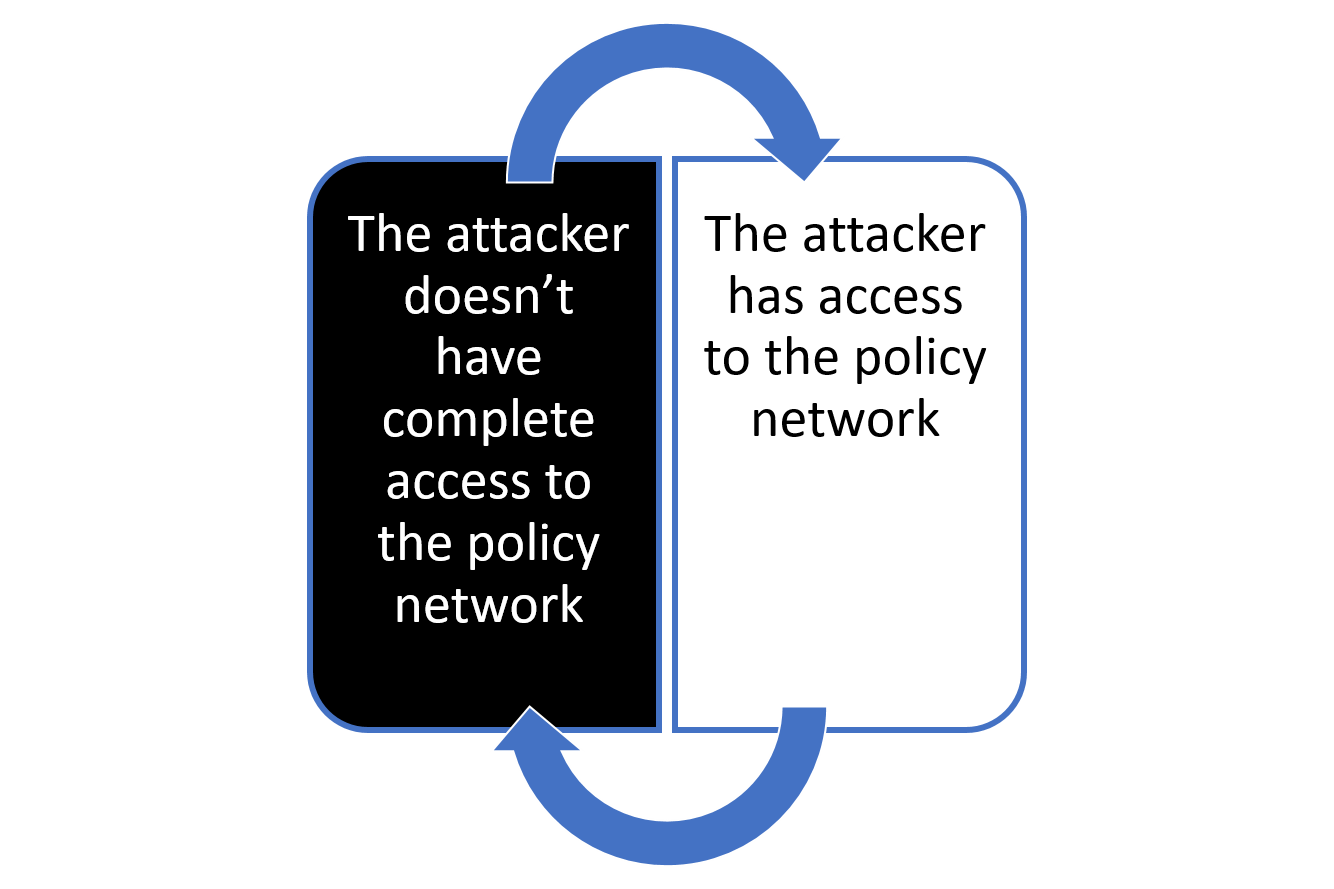

Adversarial attacks are more effective in unsupervised architectures such as reinforcement learning. Unlike supervised learning applications, where a fixed dataset of training examples is processed during learning, in reinforcement learning(RL) these examples are gathered throughout the training process. In simpler terms, an RL model trains a policy and, despite the model objectives being the same, training policies can be significantly different. From the adversarial examples perspective, we can imagine the attack techniques are very different whether it has access to the policy network than when it doesn’t. Using that criterial, deep learning researchers typically classify adversarial attacks in two main groups: black-box vs. white-box.

在无人监督的架构(例如强化学习)中,对抗性攻击更为有效。 与监督学习应用程序不同,在学习过程中会处理固定的训练示例数据集,而在强化学习(RL)中,这些示例会在整个训练过程中收集。 简而言之,RL模型训练策略,并且尽管模型目标相同,但训练策略却可能大不相同。 从对抗性示例的角度来看,我们可以想象到攻击技术是否可以访问策略网络与何时没有访问策略网络都大不相同。 使用该标准,深度学习研究人员通常将对抗性攻击分为两大类:黑盒与白盒。

In another recent research paper, Ian Goodfellow and colleagues highlight a series of white-box and black-box attacks against RL models. The researchers used adversarial attacks against a group of well-known RL models such as A3C, TRPO, and DQN which learned how to play different games such as Atari 2600, Chopper Command, Pong, Seaquest, or Space Invaders.

在最近的另一篇研究论文中 ,Ian Goodfellow及其同事强调了针对RL模型的一系列白盒和黑盒攻击。 研究人员对一组著名的RL模型(例如A3C,TRPO和DQN)进行了对抗攻击,从而学习了如何玩Atari 2600,Chopper Command,Pong,Seaquest或Space Invaders等不同的游戏。

白盒对抗攻击 (White-Box Adversarial Attacks)

The white-box adversarial attacks describe scenarios in which the attacker has access to the underlying training policy network of the target model. The research found that even introducing small pertubations in the training policy can drastically affect the performance of the model. The following video illustrates those results.

白盒对抗攻击描述了攻击者可以访问目标模型的基础训练策略网络的情况。 研究发现,即使在训练策略中引入较小的插管也会极大地影响模型的性能。 以下视频说明了这些结果。

黑匣子对抗攻击 (Black-Box Adversarial Attacks)

Black-box adversarial attacks describe scenarios in which the attacker does not have complete access to the policy network. The research referenced above classifies black-box attacks into two main groups:

黑盒对抗攻击描述了攻击者无法完全访问策略网络的情况。 上面引用的研究将黑盒攻击分为两大类:

1) The adversary has access to the training environment and knowledge of the training algorithm and hyperparameters. It knows the neural network architecture of the target policy network, but not its random initialization. They refer to this model as transferability across policies.

1)对手可以访问培训环境,并了解培训算法和超参数。 它知道目标策略网络的神经网络架构,但不知道其随机初始化。 他们将此模型称为跨策略的可转移性。

2) The adversary additionally has no knowledge of the training algorithm or hyperparameters. They refer to this model as transferability across algorithms.

2)对手还不知道训练算法或超参数。 他们将此模型称为跨算法的可传递性。

Not surprisingly, the experiments showed that we find that the less the adversary knows about the target policy, the less effective the adversarial examples are. Transferability across algorithms is less effective at decreasing agent performance than transferability across policies, which is less effective than white-box attacks.

毫不奇怪,实验表明,我们发现对手对目标政策的了解越少,对手的例子就越不有效。 跨算法的可传递性在降低代理性能方面的效率不如跨策略的可传递性,后者的效率低于白盒攻击。

对抗性攻击