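I. Lab Objectives

- Understand the role of HDFS in the Hadoop architecture;

- Become proficient with the common Shell commands for HDFS operations;

- Become familiar with the common Java API for HDFS operations.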

II. Lab Environment

- Operating system: Linux (CentOS recommended);

- Hadoop version: 2.6.1;

- JDK version: 1.7 or later;

- Java IDE: Eclipse.

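III. Lab Procedure

(1) Implement the following functions in code, and complete the same tasks with the Shell commands provided by Hadoop:

Print the contents of a specified HDFS file to the terminal;

Shell implementation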

#!/bin/bash

hadoop fs -ls /

read -p "please select file you want to output: " filename

if hadoop fs -test -e "/$filename"

then

hadoop fs -cat "/$filename"

else

echo "the file does not exist, output failed"

fi

Java API implementation

import org.apache.hadoop.conf.Configuration;

import java.io.BufferedReader;

import java.io.InputStreamReader;

import org.apache.hadoop.fs.*;

import java.util.Scanner;

public class hsfs2 {

public static void main(String[] args) {

try {

Configuration conf = new Configuration();

conf.set("fs.hdfs.impl", "org.apache.hadoop.hdfs.DistributedFileSystem");

FileSystem fs = FileSystem.get(conf);

System.out.println("please select file you want to output: ");

Scanner sc = new Scanner(System.in);

String filename = sc.nextLine();

Path filepath = new Path(filename);

if (fs.exists(filepath)) {

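FSDataInputStream inputStream = fs.open(filepath);

BufferedReader bf = new BufferedReader(new InputStreamReader(inputStream));

String line;

while ((line = bf.readLine()) != null) {

System.out.println(line);

}

// closing the reader also closes the underlying HDFS stream

bf.close();

} else {

System.out.println("File does not exist");

}

// release the scanner and the file system on both branches

sc.close();

fs.close();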

} catch (Exception e) {

e.printStackTrace();

}

}

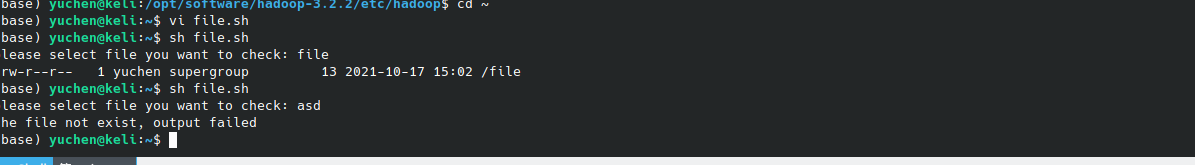

}显示HDFS中指定的文件的读写权限、大小、创建时间、路径等信息;

read -p "please select file you want to check: " filename

if hadoop fs -test -e "/$filename"

then

hadoop fs -ls -h "/$filename"

else

echo "the file does not exist, output failed"

fi

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.*;

import java.util.Scanner;

public class hsfs2 {

public static void main(String[] args) {

try {

Configuration conf = new Configuration();

conf.set("fs.hdfs.impl", "org.apache.hadoop.hdfs.DistributedFileSystem");

FileSystem fs = FileSystem.get(conf);

System.out.println("please select file you want to check: ");

Scanner sc = new Scanner(System.in);

String filename = sc.nextLine();

Path filepath = new Path(filename);

if (fs.exists(filepath)) {

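FileStatus status = fs.getFileStatus(filepath);

System.out.println("permission: " + status.getPermission());

// getLen() is the file size in bytes; getBlockSize() would report the HDFS block size instead

System.out.println("size (bytes): " + status.getLen());

// HDFS records no creation time; the modification time (ms since the epoch) is the closest field

System.out.println("modified: " + status.getModificationTime());

System.out.println("path: " + status.getPath());

} else {

System.out.println("File does not exist");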

}

sc.close();

fs.close();

} catch (Exception e) {

e.printStackTrace();

}

}

}
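The time fields of `FileStatus` (`getModificationTime()`, `getAccessTime()`) are returned as milliseconds since the Unix epoch, so the raw number printed by the program above is hard to read. A minimal JDK-only sketch of turning such a value into a readable timestamp follows. The class name `HdfsTimeFormat`, the method `format`, and the choice of UTC are illustrative assumptions and not part of the original lab code.

```java
import java.text.SimpleDateFormat;
import java.util.Date;
import java.util.TimeZone;

// Hypothetical helper, not part of the original lab code.
class HdfsTimeFormat {

    // Formats milliseconds since the Unix epoch as "yyyy-MM-dd HH:mm:ss" in UTC.
    static String format(long epochMillis) {
        SimpleDateFormat fmt = new SimpleDateFormat("yyyy-MM-dd HH:mm:ss");
        // Assumption: UTC, so the output does not depend on the machine's time zone.
        fmt.setTimeZone(TimeZone.getTimeZone("UTC"));
        return fmt.format(new Date(epochMillis));
    }

    public static void main(String[] args) {
        // In the lab program this value would come from
        // fs.getFileStatus(filepath).getModificationTime().
        long sample = 0L;
        System.out.println(format(sample)); // prints 1970-01-01 00:00:00
    }
}
```

`SimpleDateFormat` is used rather than `java.time` so that the sketch still compiles on the JDK 1.7 minimum listed in the lab environment.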

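Given a directory in HDFS, print the read/write permissions, size, creation time, path, and other information of every file under it; if an entry is itself a directory, recursively print the information for all files under that directory;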

read -p "enter a path: " path

hadoop fs -ls -R $path

import java.io.IOException;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.*;

import java.util.Scanner;

public class hsfs2 {

public static void ls(FileSystem fs, Path filepath) throws IOException{

FileStatus[] filestatuse = fs.listStatus(filepath);

for (FileStatus i : filestatuse) {

// print the permission, size, times, and path of every entry

System.out.println(i.toString());

// descend into subdirectories

if (i.isDirectory())

ls(fs, i.getPath());

}

}

public static void main(String[] args) {

try {

Configuration conf = new Configuration();

conf.set("fs.hdfs.impl", "org.apache.hadoop.hdfs.DistributedFileSystem");

FileSystem fs = FileSystem.get(conf);

System.out.println("please select file you want to check: ");

Scanner sc = new Scanner(System.in);

String filename = sc.nextLine();

Path filepath = new Path(filename);

if (fs.exists(filepath)) {

ls(fs, filepath);

} else {

System.out.println("Path does not exist");

}

sc.close();

fs.close();

} catch (Exception e) {

e.printStackTrace();

}

}

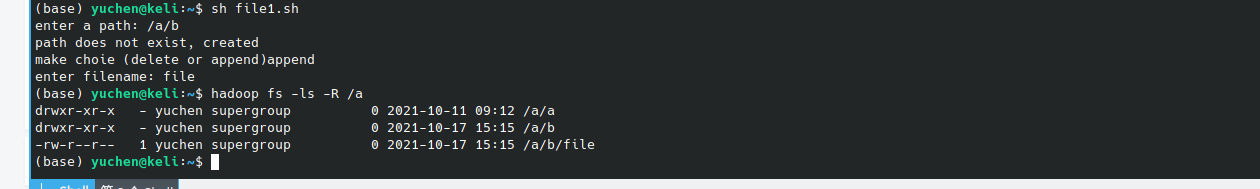

}提供一个HDFS内的文件的路径,对该文件进行创建和删除操作。如果文件所在目录不存在,则自动创建目录;

#!/bin/bash

read -p "enter a directory path: " path

if ! hadoop fs -test -d "$path"

then

echo "path does not exist, created"

# -p also creates any missing parent directories

hadoop fs -mkdir -p "$path"

fi

read -p "make choice (delete or create): " choice

read -p "enter filename: " filename

if [ "$choice" == "delete" ]

then

hadoop fs -rm "${path}/${filename}"

else

hadoop fs -touchz "${path}/${filename}"

fi

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.*;

import java.util.Scanner;

public class hsfs2 {

public static void main(String[] args) {

try {

Configuration conf = new Configuration();

conf.set("fs.hdfs.impl", "org.apache.hadoop.hdfs.DistributedFileSystem");

FileSystem fs = FileSystem.get(conf);

System.out.println("enter a path ");

Scanner sc = new Scanner(System.in);

String filename = sc.nextLine();

Path filepath = new Path(filename);

if (fs.exists(filepath)) {

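System.out.println("File already exists");

} else {

// create() also creates any missing parent directories; close the returned stream right away

fs.create(filepath).close();

System.out.println("File did not exist, created");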

}

String choice = "0";

System.out.println("please choose your option: delete ,other");

choice = sc.next();

if((choice.equals("delete")))

{

fs.delete(filepath, true);

System.out.println("File deleted");

}

sc.close();

fs.close();

} catch (Exception e) {

e.printStackTrace();

}

}

}

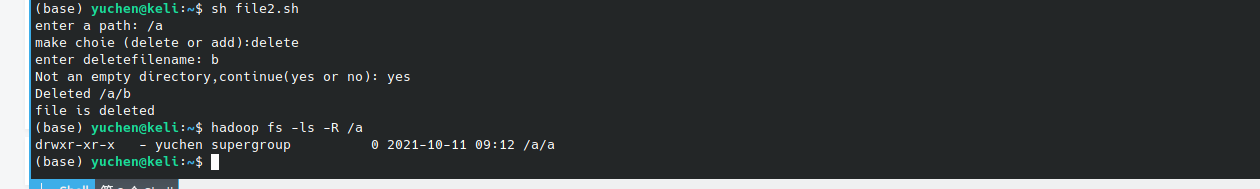

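Given the path of a directory in HDFS, create and delete that directory. When creating, automatically create any missing parent directories; when deleting, let the user decide whether the directory should still be deleted if it is not empty;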

#!/bin/bash

read -p "enter a directory path: " path

read -p "make choice (delete or create): " choice

if [ "$choice" == "create" ]

then

# -p also creates any missing parent directories

hadoop fs -mkdir -p "$path"

elif hadoop fs -test -d "$path"

then

# -count prints DIR_COUNT FILE_COUNT CONTENT_SIZE PATHNAME; DIR_COUNT includes the directory itself

entries=$(hadoop fs -count "$path" | awk '{print $1 + $2 - 1}')

if [[ $entries -eq 0 ]]

then

hadoop fs -rm -r "$path"

else

read -p "Not an empty directory, continue (yes or no): " choice2

if [ "$choice2" == "yes" ]

then

hadoop fs -rm -r "$path"

fi

fi

else

echo "directory does not exist"

fi

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.*;

import java.util.Scanner;

public class hsfs2 {

public static void main(String[] args) {

try {

Configuration conf = new Configuration();

conf.set("fs.hdfs.impl", "org.apache.hadoop.hdfs.DistributedFileSystem");

FileSystem fs = FileSystem.get(conf);

System.out.println("enter a path ");

Scanner sc = new Scanner(System.in);

String filename = sc.nextLine();

Path filepath = new Path(filename);

if (fs.exists(filepath)) {

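System.out.println("Directory already exists");

} else {

// mkdirs() also creates any missing parent directories

fs.mkdirs(filepath);

System.out.println("Directory did not exist, created");

}

System.out.println("please choose your option: delete ,other");

String choice = sc.nextLine();

if (choice.equals("delete")) {

boolean confirmed = true;

// ask for confirmation only when the directory still has entries

if (fs.listStatus(filepath).length != 0) {

System.out.println("Not an empty directory, delete anyway? (yes or no)");

confirmed = sc.nextLine().equals("yes");

}

if (confirmed) {

fs.delete(filepath, true);

System.out.println("Directory deleted");

}

}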

sc.close();

fs.close();

} catch (Exception e) {

e.printStackTrace();

}

}

}

Add content to a specified file in HDFS; the user chooses whether the content goes at the beginning or the end of the existing file;

#!/bin/bash

read -p "Enter the file path where you want to add content: " path

if ! hadoop fs -test -f "$path"

then

echo "file does not exist, created"

hadoop fs -mkdir -p "$(dirname "$path")"

hadoop fs -touchz "$path"

fi

read -p "make choice (head or end): " choice

read -p "enter local file holding the content: " filename

if [ "$choice" == "head" ]

then

# HDFS cannot prepend in place: build new + old content locally, then overwrite the file

hadoop fs -cat "$path" | cat "$filename" - > /tmp/hdfs_prepend.$$

hadoop fs -copyFromLocal -f /tmp/hdfs_prepend.$$ "$path"

rm -f /tmp/hdfs_prepend.$$

else

hadoop fs -appendToFile "$filename" "$path"

fi

import org.apache.commons.lang3.StringUtils;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.*;

import java.util.Scanner;

public class hsfs2 {

public static void main(String[] args) {

try {

Configuration conf = new Configuration();

conf.set("fs.hdfs.impl", "org.apache.hadoop.hdfs.DistributedFileSystem");

FileSystem fs = FileSystem.get(conf);

System.out.println("Enter the file path where you want to add content: ");

Scanner sc = new Scanner(System.in);

String path = sc.nextLine();

Path filepath = new Path(path);

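if (!fs.exists(filepath)) {

// close the stream so the new file is not left open for writing

fs.create(filepath).close();

System.out.println("File did not exist, created");

}

System.out.println("make choice (head or end)");

String choice = sc.nextLine();

System.out.println("enter content(end with ctrl + d):");

// collect the non-blank input lines first, then write them as UTF-8 bytes

StringBuilder content = new StringBuilder();

while (sc.hasNextLine()) {

String line = sc.nextLine();

if (!StringUtils.isBlank(line))

content.append(line).append('\n');

}

byte[] added = content.toString().getBytes("UTF-8");

if (choice.equals("head")) {

// HDFS cannot prepend in place: read the old bytes, then rewrite the file as new + old

byte[] old = new byte[(int) fs.getFileStatus(filepath).getLen()];

FSDataInputStream in = fs.open(filepath);

in.readFully(old);

in.close();

FSDataOutputStream data = fs.create(filepath, true);

data.write(added);

data.write(old);

data.close();

} else {

FSDataOutputStream data = fs.append(filepath);

data.write(added);

data.close();

}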

sc.close();

fs.close();

} catch (Exception e) {

e.printStackTrace();

}

}

}
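The program above writes user input through `FSDataOutputStream`, which extends `java.io.DataOutputStream`, so the write method chosen decides which bytes end up in the file. `writeChars` emits two bytes per character (high byte first), which shows up as text interleaved with NUL bytes when the file is printed with `hadoop fs -cat`; writing UTF-8 encoded bytes with `write` yields plain text. A small JDK-only sketch of the difference, using an in-memory stream as a stand-in for the HDFS stream; the class name `WriteCharsDemo` and its two methods are illustrative and not part of the lab code.

```java
import java.io.ByteArrayOutputStream;
import java.io.DataOutputStream;
import java.io.IOException;
import java.nio.charset.StandardCharsets;

// Hypothetical demo class; a ByteArrayOutputStream stands in for the HDFS file.
class WriteCharsDemo {

    // Bytes produced by DataOutputStream.writeChars: two bytes per char.
    static byte[] viaWriteChars(String text) {
        ByteArrayOutputStream buf = new ByteArrayOutputStream();
        try (DataOutputStream out = new DataOutputStream(buf)) {
            out.writeChars(text);
        } catch (IOException e) {
            throw new IllegalStateException(e); // not expected for an in-memory stream
        }
        return buf.toByteArray();
    }

    // Bytes produced by writing the UTF-8 encoding of the text.
    static byte[] viaUtf8(String text) {
        ByteArrayOutputStream buf = new ByteArrayOutputStream();
        try (DataOutputStream out = new DataOutputStream(buf)) {
            out.write(text.getBytes(StandardCharsets.UTF_8));
        } catch (IOException e) {
            throw new IllegalStateException(e); // not expected for an in-memory stream
        }
        return buf.toByteArray();
    }

    public static void main(String[] args) {
        System.out.println(viaWriteChars("ab\n").length); // prints 6
        System.out.println(viaUtf8("ab\n").length);       // prints 3
    }
}
```

For text that other tools will read back, the UTF-8 variant is usually the one wanted.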

Delete a specified file from HDFS;

read -p "enter a path you want to delete: " path

hadoop fs -rm -R $path

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.*;

import java.util.Scanner;

public class hsfs2 {

public static void main(String[] args) {

try {

Configuration conf = new Configuration();

conf.set("fs.hdfs.impl", "org.apache.hadoop.hdfs.DistributedFileSystem");

FileSystem fs = FileSystem.get(conf);

System.out.println("enter a path you want delete");

Scanner sc = new Scanner(System.in);

String filename = sc.nextLine();

Path filepath = new Path(filename);

if (fs.exists(filepath)) {

fs.delete(filepath,true);

System.out.println("File deleted");

} else {

System.out.println("File does not exist");

}

sc.close();

fs.close();

} catch (Exception e) {

e.printStackTrace();

}

}

}

Move a file from a source path to a destination path within HDFS.

read -p "enter sourcepath: " sourcepath

read -p "enter target path: " targetpath

hadoop fs -mv $sourcepath $targetpath

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.*;

import java.util.Scanner;

public class hsfs2 {

public static void main(String[] args) {

try {

Configuration conf = new Configuration();

conf.set("fs.hdfs.impl", "org.apache.hadoop.hdfs.DistributedFileSystem");

FileSystem fs = FileSystem.get(conf);

System.out.println("enter sourcepath ");

Scanner sc = new Scanner(System.in);

Path sourcepath = new Path(sc.nextLine());

System.out.println("enter targetpath ");

Path targetpath = new Path(sc.nextLine());

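if (!fs.exists(sourcepath)) {

System.out.println("Source path does not exist");

// rename() returns false when the move could not be performed

} else if (fs.rename(sourcepath, targetpath)) {

System.out.println("Move succeeded");

} else {

System.out.println("Move failed");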

}

sc.close();

fs.close();

} catch (Exception e) {

e.printStackTrace();

}

}

}

Optional:

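(2) Implement a class "MyFSDataInputStream" that extends "org.apache.hadoop.fs.FSDataInputStream", with the following requirement: provide a method "readLine()" that reads a specified HDFS file line by line, returning null when the end of the file is reached and otherwise the text of one line.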
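A rough sketch of how the `readLine()` logic for this task might look. To keep it runnable without a Hadoop installation, the class below wraps a plain `java.io.InputStream` instead of extending `FSDataInputStream`; in the actual exercise the same loop would sit inside `MyFSDataInputStream`. The name `LineReadingStream` is hypothetical, and the sketch assumes UTF-8 text with `\n` or `\r\n` line endings.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.nio.charset.StandardCharsets;

// Hypothetical stand-in for MyFSDataInputStream; wraps any InputStream.
class LineReadingStream {
    private final InputStream in;

    LineReadingStream(InputStream in) {
        this.in = in;
    }

    // Returns the next line without its terminator, or null at end of file.
    String readLine() throws IOException {
        int b = in.read();
        if (b == -1) {
            return null; // nothing left to read
        }
        ByteArrayOutputStream line = new ByteArrayOutputStream();
        while (b != -1 && b != '\n') {
            line.write(b);
            b = in.read();
        }
        byte[] bytes = line.toByteArray();
        int len = bytes.length;
        if (len > 0 && bytes[len - 1] == '\r') {
            len--; // drop the CR of a CRLF line ending
        }
        // Decode per line so multi-byte UTF-8 characters stay intact.
        return new String(bytes, 0, len, StandardCharsets.UTF_8);
    }

    public static void main(String[] args) throws IOException {
        byte[] data = "first\nsecond\r\n\nlast".getBytes(StandardCharsets.UTF_8);
        LineReadingStream reader = new LineReadingStream(new ByteArrayInputStream(data));
        String line;
        while ((line = reader.readLine()) != null) {
            System.out.println("[" + line + "]");
        }
    }
}
```

The sketch reads one byte at a time for clarity; against a real HDFS stream, wrapping the input in a `java.io.BufferedInputStream` would likely be worthwhile.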

(3) Consulting the Java documentation or other references, use "java.net.URL" and "org.apache.hadoop.fs.FsUrlStreamHandlerFactory" to print the text of a specified HDFS file to the terminal.

import java.net.URL;

import org.apache.hadoop.io.IOUtils;

import java.io.InputStream;

import java.io.IOException;

import org.apache.hadoop.fs.*;

import java.util.Scanner;

public class hsfs2 {

static {

URL.setURLStreamHandlerFactory(new FsUrlStreamHandlerFactory());

}

public static void cat(String FilePath){

try(InputStream in=new URL("hdfs","localhost",9000,FilePath).openStream()){

IOUtils.copyBytes(in, System.out, 4096, false);

IOUtils.closeStream(in);

}catch (IOException e) {

e.printStackTrace();

}

}

public static void main(String[] args) {

try{

System.out.println("enter path ");

Scanner sc = new Scanner(System.in);

String filepath = sc.nextLine();

System.out.println("Reading file: " + filepath);

cat(filepath);

System.out.println("\n Read complete");

}catch(Exception e){

e.printStackTrace();

}

}

}

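IV. Lab Summary and Open Issues

1. What did you learn to do, and with which tools?

I learned to use the basic HDFS shell commands and the basic HDFS API.

2. What problems came up during the lab, and how were they solved?

I had not yet mastered many of the API methods; I worked through them by consulting the official documentation, talking with classmates, and reading blog posts.

3. What problems remain unsolved, and what might be causing them?

Some functions are not fully implemented and need further debugging.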