Introduction to Prometheus

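Prometheus is an open-source systems monitoring and alerting toolkit originally built at SoundCloud. Since its inception in 2012, many companies and organizations have adopted Prometheus, and the project has a very active developer and user community.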

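It is now a standalone open-source project, maintained independently of any company. To emphasize this and to clarify the project's governance structure, Prometheus joined the Cloud Native Computing Foundation (CNCF) in 2016 as its second hosted project, after Kubernetes.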

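Prometheus collects and stores its metrics as time series data: each metric value is stored together with the timestamp at which it was recorded, plus optional key-value pairs called labels.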

Official website: https://prometheus.io/

Project repository: https://github.com/prometheus/prometheus

Introduction to Prometheus Operator

Several projects relate to the Prometheus Operator. The main differences are:

- prometheus-operator: contains only the operator itself, which manages and operates Prometheus and Alertmanager clusters.

- kube-prometheus: builds on the Prometheus Operator plus a collection of manifests to help you quickly deploy a Prometheus monitoring stack in a Kubernetes cluster.

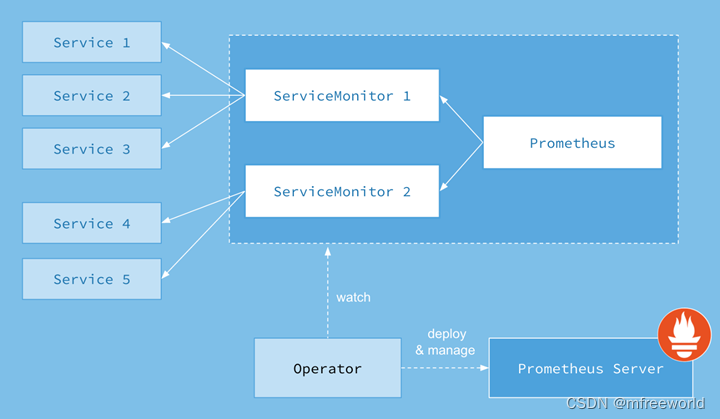

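A core feature of the Prometheus Operator is to watch the Kubernetes API server for changes to specific objects and to ensure that the running Prometheus deployments match those objects. The main components are: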

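- Prometheus: the main service. The Prometheus resource defines the desired Prometheus deployment. Prometheus stores time series data, pulls (scrapes) and stores monitoring data from exporters, and offers users a flexible query language (PromQL).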

- Alertmanager: defines the desired Alertmanager deployment. Alertmanager sends alert notifications, for example by SMS or email.

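- ServiceMonitor: declaratively specifies how a group of Kubernetes Services should be monitored. The Operator automatically generates the Prometheus scrape configuration from the current state of these objects in the API server.

- Exporters: expose metrics. An exporter collects metrics from the monitored object and serves them to Prometheus over an HTTP endpoint in a defined format. It typically runs on the host it monitors.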

- Grafana: dashboards and UI. Once the Prometheus server address is configured, custom monitoring dashboards can be built on top of it.

Deploying Prometheus with Helm

Deployment environment:

- prometheus-operator:v0.57.0

- kubernetes:v1.24.0

- OS:ubuntu 22.04 LTS

References:

https://artifacthub.io/packages/helm/prometheus-community/kube-prometheus-stack

https://github.com/prometheus-community/helm-charts/tree/main/charts/kube-prometheus-stack

1. Add the Helm repo

```shell
helm repo add prometheus-community https://prometheus-community.github.io/helm-charts
helm repo update
```

2. Install Prometheus

```shell
helm upgrade --install prometheus \
  --namespace monitoring --create-namespace \
  --set grafana.service.type=NodePort \
  --set prometheus.service.type=NodePort \
  --set alertmanager.service.type=NodePort \
  --set prometheus.prometheusSpec.serviceMonitorSelectorNilUsesHelmValues=false \
  prometheus-community/kube-prometheus-stack
```

To view the chart's configurable values:

```shell
helm show values prometheus-community/kube-prometheus-stack
```

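By default the chart also installs the following dependent charts. Any of them can be disabled during installation:

- prometheus-community/kube-state-metrics

- prometheus-community/prometheus-node-exporter

- grafana/grafana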
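As a sketch, here is the same install command as above with the bundled Grafana switched off, for example when an existing Grafana instance will be reused. The top-level switches `grafana.enabled`, `kubeStateMetrics.enabled` and `nodeExporter.enabled` exist in the chart's values.yaml. The function name is only illustrative.

```shell
# Hypothetical helper: install kube-prometheus-stack without the bundled Grafana.
# Use kubeStateMetrics.enabled=false or nodeExporter.enabled=false instead to
# drop one of the other dependent charts.
install_without_grafana() {
  helm upgrade --install prometheus \
    --namespace monitoring --create-namespace \
    --set prometheus.service.type=NodePort \
    --set alertmanager.service.type=NodePort \
    --set prometheus.prometheusSpec.serviceMonitorSelectorNilUsesHelmValues=false \
    --set grafana.enabled=false \
    prometheus-community/kube-prometheus-stack
}
```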

3. Check that all components have been deployed correctly:

```
root@ubuntu:~# kubectl -n monitoring get pods
NAME                                                     READY   STATUS    RESTARTS   AGE
alertmanager-prometheus-kube-prometheus-alertmanager-0   2/2     Running   0          3m44s
prometheus-grafana-cc56f546c-h2wzt                       3/3     Running   0          4m2s
prometheus-kube-prometheus-operator-798bfb99f8-tj5f6     1/1     Running   0          4m2s
prometheus-kube-state-metrics-6545694994-cbclj           1/1     Running   0          4m2s
prometheus-prometheus-kube-prometheus-prometheus-0       2/2     Running   0          3m43s
prometheus-prometheus-node-exporter-8vbq5                1/1     Running   0          4m2s
prometheus-prometheus-node-exporter-hv6zf                1/1     Running   0          4m2s
prometheus-prometheus-node-exporter-nmf82                1/1     Running   0          4m2s
```
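Instead of re-running `get pods` until everything shows Running, you can use `kubectl wait` to block until every Pod in the namespace is Ready. This is a minimal sketch. The function name and the 300s default timeout are assumptions.

```shell
# Block until every Pod in the monitoring namespace reports the Ready condition,
# or fail once the timeout (default 300s, overridable via $1) expires.
wait_for_monitoring() {
  kubectl -n monitoring wait --for=condition=Ready pods --all --timeout="${1:-300s}"
}
```

Usage: `wait_for_monitoring 10m`.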

Check the Prometheus resource:

```
root@ubuntu:~# kubectl -n monitoring get prometheus
NAME                                    VERSION   REPLICAS   AGE
prometheus-kube-prometheus-prometheus   v2.36.1   1          8m56s
```

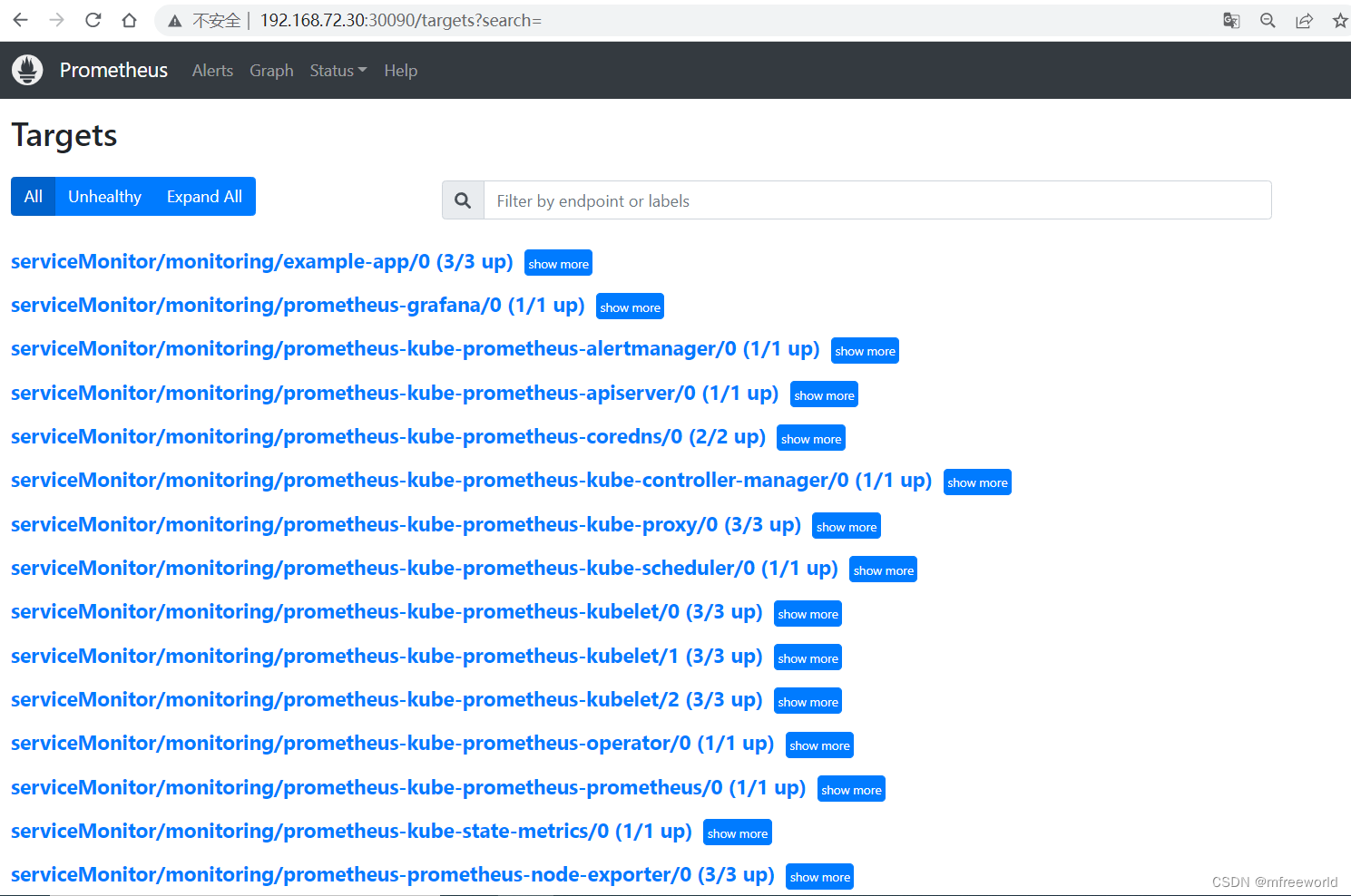

Check the ServiceMonitors:

```
root@ubuntu:~# kubectl -n monitoring get ServiceMonitor
NAME                                                 AGE
prometheus-grafana                                   7m28s
prometheus-kube-prometheus-alertmanager              7m28s
prometheus-kube-prometheus-apiserver                 7m28s
prometheus-kube-prometheus-coredns                   7m28s
prometheus-kube-prometheus-kube-controller-manager   7m28s
prometheus-kube-prometheus-kube-etcd                 7m28s
prometheus-kube-prometheus-kube-proxy                7m28s
prometheus-kube-prometheus-kube-scheduler            7m28s
prometheus-kube-prometheus-kubelet                   7m28s
prometheus-kube-prometheus-operator                  7m28s
prometheus-kube-prometheus-prometheus                7m28s
prometheus-kube-state-metrics                        7m28s
prometheus-prometheus-node-exporter                  7m28s
```

Check the CRDs:

```
root@ubuntu:~# kubectl get crds | grep monitoring
alertmanagerconfigs.monitoring.coreos.com   2022-06-27T02:54:08Z
alertmanagers.monitoring.coreos.com         2022-06-27T02:54:08Z
podmonitors.monitoring.coreos.com           2022-06-27T02:54:09Z
probes.monitoring.coreos.com                2022-06-27T02:54:09Z
prometheuses.monitoring.coreos.com          2022-06-27T02:54:09Z
prometheusrules.monitoring.coreos.com       2022-06-27T02:54:09Z
servicemonitors.monitoring.coreos.com       2022-06-27T02:54:09Z
thanosrulers.monitoring.coreos.com          2022-06-27T02:54:09Z
```

Accessing Prometheus

1. List the Services to find the NodePort numbers:

```
root@ubuntu:~# kubectl -n monitoring get svc
NAME                                      TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)                      AGE
alertmanager-operated                     ClusterIP   None            <none>        9093/TCP,9094/TCP,9094/UDP   4h41m
prometheus-grafana                        NodePort    10.233.38.101   <none>        80:32437/TCP                 4h44m
prometheus-kube-prometheus-alertmanager   NodePort    10.233.59.123   <none>        9093:30903/TCP               4h44m
prometheus-kube-prometheus-operator       ClusterIP   10.233.29.238   <none>        443/TCP                      4h44m
prometheus-kube-prometheus-prometheus     NodePort    10.233.37.90    <none>        9090:30090/TCP               4h44m
prometheus-kube-state-metrics             ClusterIP   10.233.27.45    <none>        8080/TCP                     4h44m
prometheus-operated                       ClusterIP   None            <none>        9090/TCP                     4h41m
prometheus-prometheus-node-exporter       ClusterIP   10.233.44.239   <none>        9100/TCP                     4h44m
```
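The NodePort values in the output above (30090 for Prometheus, 30903 for Alertmanager, 32437 for Grafana) can also be looked up with JSONPath instead of being read by eye. This sketch assumes that NodePorts are reachable on the first node's InternalIP and that the wanted port is the Service's first port. The helper name is hypothetical.

```shell
# Hypothetical helper: print http://<first node InternalIP>:<nodePort> for a
# Service in the monitoring namespace (assumes its first port is the one wanted).
nodeport_url() {
  svc="$1"
  ip=$(kubectl get nodes \
    -o jsonpath='{.items[0].status.addresses[?(@.type=="InternalIP")].address}')
  port=$(kubectl -n monitoring get svc "$svc" -o jsonpath='{.spec.ports[0].nodePort}')
  echo "http://${ip}:${port}"
}
```

Usage: `nodeport_url prometheus-kube-prometheus-prometheus` or `nodeport_url prometheus-grafana`.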

2. Open the Prometheus web UI

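Fixing the kube-proxy target "connection refused" error

By default kube-proxy serves its metrics only on 127.0.0.1:10249, so on the Targets page the kube-proxy target reports `connection refused`. Bind the metrics endpoint to all interfaces instead: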

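```
$ kubectl edit cm/kube-proxy -n kube-system
## Change from (an empty value means the default 127.0.0.1:10249, localhost only)
metricsBindAddress: ### <--- Too secure
## Change to
metricsBindAddress: 0.0.0.0:10249
## Then restart the kube-proxy Pods so they load the new configuration
$ kubectl delete pod -l k8s-app=kube-proxy -n kube-system
```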
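After the kube-proxy Pods have restarted, you can use the Prometheus HTTP API to confirm that no target is still down. This sketch assumes that `PROM_URL`, a variable introduced here, holds the Prometheus NodePort URL (e.g. `http://<node-ip>:30090`). It sends the query `up == 0` to `/api/v1/query` and prints the `job` label of every target whose last scrape failed.

```shell
# Hypothetical helper: list the jobs of scrape targets whose last scrape failed.
# PROM_URL is an assumption; it falls back to 127.0.0.1:30090 here.
down_jobs() {
  curl -sG "${PROM_URL:-http://127.0.0.1:30090}/api/v1/query" \
    --data-urlencode 'query=up == 0' \
    | grep -o '"job":"[^"]*"' | sort -u
}
```

Empty output means every target is up.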

3. Open the Grafana web UI

When deployed with the kube-prometheus-stack chart (Prometheus Operator), the default credentials are:

user: admin

pass: prom-operator

The password can also be read from the Grafana Secret:

```
root@ubuntu:~# kubectl -n monitoring get secret prometheus-grafana -o jsonpath="{.data.admin-password}" | base64 --decode ; echo
prom-operator
```
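The default password comes from the chart value `grafana.adminPassword`, which kube-prometheus-stack sets to `prom-operator`. You can therefore override it on a fresh install. The sketch below assumes a `GRAFANA_PASSWORD` environment variable. Grafana applies the admin password only when it first initializes its database, so changing the value later does not change an existing login.

```shell
# Hypothetical helper: same install as above, but with a custom Grafana admin password.
# helm upgrade does not keep earlier --set values by default, so the original
# flags are repeated here.
install_with_grafana_password() {
  : "${GRAFANA_PASSWORD:?export GRAFANA_PASSWORD first}"
  helm upgrade --install prometheus \
    --namespace monitoring --create-namespace \
    --set grafana.service.type=NodePort \
    --set prometheus.service.type=NodePort \
    --set alertmanager.service.type=NodePort \
    --set prometheus.prometheusSpec.serviceMonitorSelectorNilUsesHelmValues=false \
    --set grafana.adminPassword="$GRAFANA_PASSWORD" \
    prometheus-community/kube-prometheus-stack
}
```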

The login page looks like this:

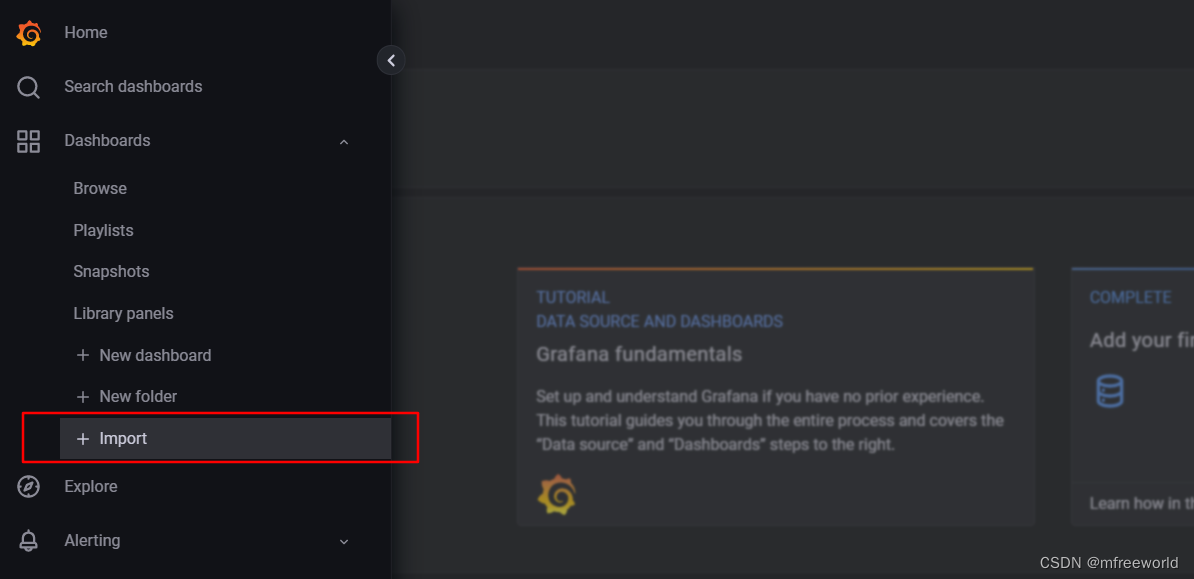

Importing a Grafana dashboard

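Import the Node Exporter dashboard with ID 1860 ("Node Exporter Full" on grafana.com): open the import page in Grafana (Dashboards → Import), enter 1860, click Load, and select the Prometheus data source.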

Configuring a ServiceMonitor for Prometheus

First, check the selector settings of the Prometheus resource:

```
root@ubuntu:~# kubectl -n monitoring get prometheus -o yaml | grep podMonitor -A2
    podMonitorNamespaceSelector: {}
    podMonitorSelector:
      matchLabels:
        release: prometheus
root@ubuntu:~# kubectl -n monitoring get prometheus -o yaml | grep serviceMonitor -A2
    serviceMonitorNamespaceSelector: {}
    serviceMonitorSelector: {}
    shards: 1
    storage:
```
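The two selectors differ because of the `--set prometheus.prometheusSpec.serviceMonitorSelectorNilUsesHelmValues=false` flag used at install time. With that flag, the chart leaves `serviceMonitorSelector` empty (`{}`), and together with the empty `serviceMonitorNamespaceSelector` this lets Prometheus pick up every ServiceMonitor in every namespace, whatever its labels. PodMonitors still need the `release: prometheus` label unless `prometheus.prometheusSpec.podMonitorSelectorNilUsesHelmValues=false` is also set. Below is a sketch of a shorter way to print just the two selectors. The resource name comes from the `get prometheus` output earlier, and the helper name is hypothetical.

```shell
# Hypothetical helper: print the ServiceMonitor and PodMonitor selectors of the
# Prometheus resource created by the chart.
show_monitor_selectors() {
  kubectl -n monitoring get prometheus prometheus-kube-prometheus-prometheus \
    -o jsonpath='serviceMonitorSelector: {.spec.serviceMonitorSelector}{"\n"}podMonitorSelector: {.spec.podMonitorSelector}{"\n"}'
}
```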

1. Create the application

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: example-app
spec:
  replicas: 3
  selector:
    matchLabels:
      app: example-app
  template:
    metadata:
      labels:
        app: example-app
    spec:
      containers:
      - name: example-app
        image: fabxc/instrumented_app
        ports:
        - name: web
          containerPort: 8080
```
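One way to deploy it is to save the manifest to a file, apply it, and then run `kubectl rollout status`, which waits until all three replicas are available. The file name `example-app-deployment.yaml` and the helper are assumptions. The manifest sets no namespace, so the app lands in the current namespace (usually `default`), which the empty `serviceMonitorNamespaceSelector` shown above still covers.

```shell
# Hypothetical helper: apply the Deployment manifest and wait for the rollout.
deploy_example_app() {
  kubectl apply -f "${1:-example-app-deployment.yaml}" &&
    kubectl rollout status deployment/example-app --timeout=120s
}
```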

2. Create the Service

```yaml
kind: Service
apiVersion: v1
metadata:
  name: example-app
  labels:
    app: example-app
spec:
  selector:
    app: example-app
  ports:
  - name: web
    port: 8080
```
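Before you wire up the ServiceMonitor, it can help to confirm that the Service really serves metrics on the `web` port. This sketch uses `kubectl port-forward` to a local port. It assumes that the app exposes the conventional `/metrics` path (the ServiceMonitor default) and that local port 8080 is free.

```shell
# Hypothetical helper: port-forward the example-app Service, print the first
# lines of its /metrics output (default 5, overridable via $1), then stop the
# port-forward again.
peek_example_metrics() {
  kubectl port-forward svc/example-app 8080:8080 >/dev/null 2>&1 &
  pf_pid=$!
  sleep 2   # give the port-forward a moment to start listening
  curl -s http://127.0.0.1:8080/metrics | head -n "${1:-5}"
  kill "$pf_pid" 2>/dev/null || true
}
```

The output should consist of Prometheus text-format lines (`# HELP`, `# TYPE`, then samples).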

3. Create the ServiceMonitor

```yaml
apiVersion: monitoring.coreos.com/v1
kind: ServiceMonitor
metadata:
  name: example-app
  labels:
    team: frontend
spec:
  selector:
    matchLabels:
      app: example-app
  endpoints:
  - port: web
```

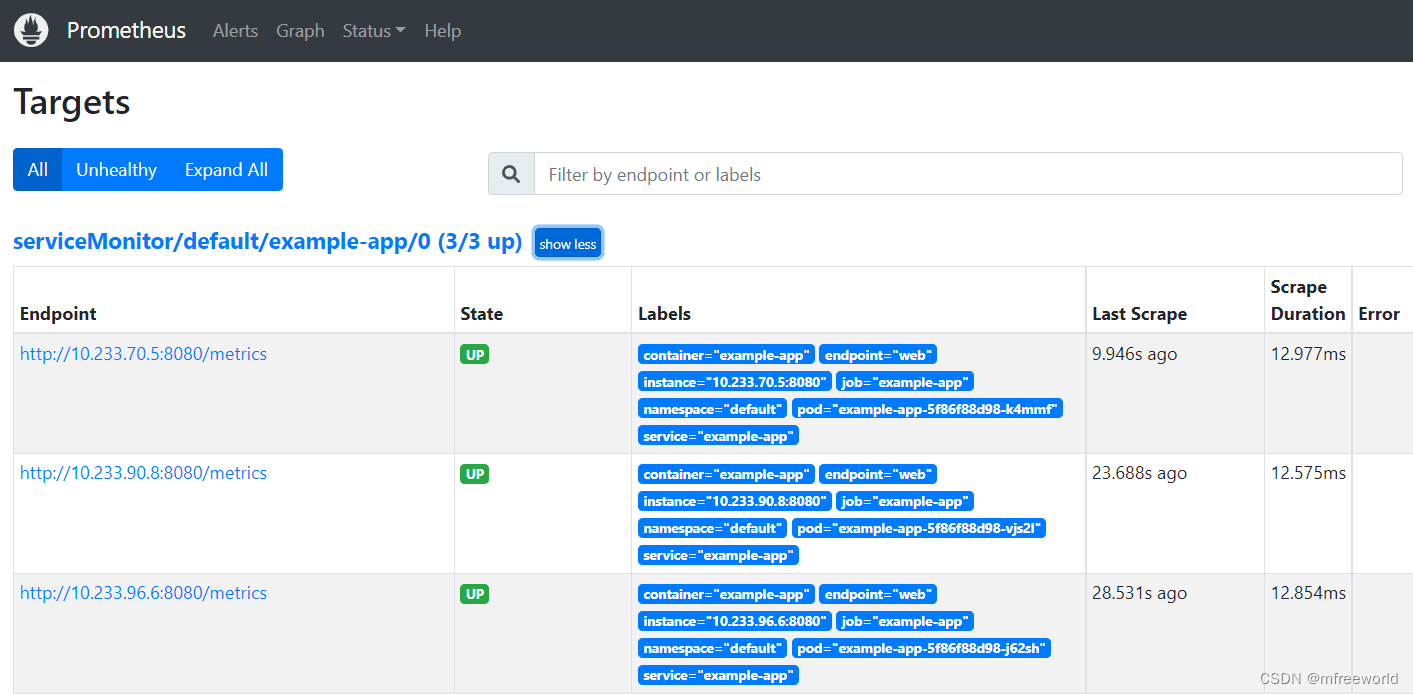

4. Check the targets
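Besides the Targets page, the HTTP endpoint `/api/v1/targets` lists the active targets. This sketch assumes the following: the app was deployed in `default`, so the operator names the scrape pool `serviceMonitor/default/example-app/0`; and `PROM_URL`, a variable introduced here, holds the Prometheus URL (e.g. `http://<node-ip>:30090`). The count should then match the three replicas.

```shell
# Hypothetical helper: count active targets in the example-app scrape pool.
# PROM_URL and the "default" namespace are assumptions.
count_example_targets() {
  curl -s "${PROM_URL:-http://127.0.0.1:30090}/api/v1/targets?state=active" \
    | grep -o '"scrapePool":"serviceMonitor/default/example-app/0"' \
    | wc -l | tr -d ' '
}
```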

Using persistent storage for Prometheus

On a node outside the cluster, run an NFS server with Docker:

```shell
docker run -d --name nfs-server \
  --privileged \
  --restart always \
  -p 2049:2049 \
  -v /nfs-share:/nfs-share \
  -e SHARED_DIRECTORY=/nfs-share \
  itsthenetwork/nfs-server-alpine:latest
```
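Every Kubernetes node also needs the NFS client utilities, because the kubelet performs the NFS mounts for the provisioner and for the volumes it creates. This sketch is for the Ubuntu 22.04 nodes used here. 192.168.72.15 is the NFS server address used in the next step, and the optional test mount assumes `/mnt` is free.

```shell
# Hypothetical helper, run on each Kubernetes node: install the NFS client and
# smoke-test the export by mounting, listing and unmounting it.
prepare_nfs_client() {
  sudo apt-get update && sudo apt-get install -y nfs-common || return 1
  sudo mount -t nfs4 "${NFS_SERVER:-192.168.72.15}:/" /mnt &&
    ls /mnt &&
    sudo umount /mnt
}
```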

Inside the cluster, deploy nfs-subdir-external-provisioner:

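```shell
helm repo add nfs-subdir-external-provisioner https://kubernetes-sigs.github.io/nfs-subdir-external-provisioner/
# image.repository points at a mirror of the provisioner image
helm install nfs-subdir-external-provisioner \
  --namespace=nfs-provisioner --create-namespace \
  --set image.repository=registry.cn-shenzhen.aliyuncs.com/cnmirror/nfs-subdir-external-provisioner \
  --set storageClass.defaultClass=true \
  --set nfs.server=192.168.72.15 \
  --set nfs.path=/ \
  nfs-subdir-external-provisioner/nfs-subdir-external-provisioner
```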
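By default the chart creates a StorageClass named `nfs-client`, the name that values.yaml refers to. With `storageClass.defaultClass=true`, that StorageClass should also carry the default-class annotation. Here is a sketch to check both. The helper name is hypothetical.

```shell
# Hypothetical helper: print "<name> default=<annotation>" for the nfs-client
# StorageClass; the annotation should be "true" when defaultClass=true was set.
check_nfs_storageclass() {
  kubectl get storageclass nfs-client \
    -o jsonpath='{.metadata.name} default={.metadata.annotations.storageclass\.kubernetes\.io/is-default-class}{"\n"}'
}
```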

Create values.yaml to set the StorageClass for the Prometheus and Alertmanager volumes:

```shell
cat <<EOF > values.yaml
alertmanager:
  alertmanagerSpec:
    storage:
      volumeClaimTemplate:
        spec:
          storageClassName: nfs-client
          accessModes: ["ReadWriteOnce"]
          resources:
            requests:
              storage: 50Gi
prometheus:
  prometheusSpec:
    storageSpec:
      volumeClaimTemplate:
        spec:
          storageClassName: nfs-client
          accessModes: ["ReadWriteOnce"]
          resources:
            requests:
              storage: 50Gi
EOF
```
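Before installing, you can run `helm template` to render the chart locally and confirm that values.yaml is picked up. Both the Prometheus and the Alertmanager resources should reference `storageClassName: nfs-client`. In this sketch the rendering happens on the client only, and the helper name is hypothetical.

```shell
# Hypothetical helper: render the chart with values.yaml (or the file given as $1)
# and show the storage class lines with their line numbers.
check_rendered_storage() {
  helm template prometheus prometheus-community/kube-prometheus-stack \
    --namespace monitoring -f "${1:-values.yaml}" \
    | grep -n 'storageClassName'
}
```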

Deploy Prometheus with Helm:

```shell
helm upgrade --install prometheus \
  --namespace monitoring --create-namespace \
  --set grafana.service.type=NodePort \
  --set prometheus.service.type=NodePort \
  --set alertmanager.service.type=NodePort \
  --set prometheus.prometheusSpec.serviceMonitorSelectorNilUsesHelmValues=false \
  --set grafana.persistence.enabled=true \
  --set grafana.persistence.storageClassName=nfs-client \
  -f values.yaml \
  prometheus-community/kube-prometheus-stack
```

Check the created PVCs and PVs:

```
root@ubuntu:~# kubectl -n monitoring get pvc
NAME   STATUS   VOLUME   CAPACITY   ACCESS MODES   STORAGECLASS   AGE
alertmanager-prometheus-kube-prometheus-alertmanager-db-alertmanager-prometheus-kube-prometheus-alertmanager-0   Bound   pvc-6cfb0e68-919f-47e0-a3c4-1eba42a8b65e   50Gi   RWO   nfs-client   3m39s
prometheus-grafana   Bound   pvc-a6e0b3dc-1104-44a3-a190-a4ff8992b366   10Gi   RWO   nfs-client   3m43s
prometheus-prometheus-kube-prometheus-prometheus-db-prometheus-prometheus-kube-prometheus-prometheus-0   Bound   pvc-323dbd51-4329-4cbe-bbf9-cd3d31fb845c   50Gi   RWO   nfs-client   3m39s

root@ubuntu:~# kubectl -n monitoring get pv
NAME   CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM   STORAGECLASS   REASON   AGE
pvc-323dbd51-4329-4cbe-bbf9-cd3d31fb845c   50Gi   RWO   Delete   Bound   monitoring/prometheus-prometheus-kube-prometheus-prometheus-db-prometheus-prometheus-kube-prometheus-prometheus-0   nfs-client      3m39s
pvc-6cfb0e68-919f-47e0-a3c4-1eba42a8b65e   50Gi   RWO   Delete   Bound   monitoring/alertmanager-prometheus-kube-prometheus-alertmanager-db-alertmanager-prometheus-kube-prometheus-alertmanager-0   nfs-client      3m40s
pvc-a6e0b3dc-1104-44a3-a190-a4ff8992b366   10Gi   RWO   Delete   Bound   monitoring/prometheus-grafana   nfs-client      3m44s
```
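On the NFS host, nfs-subdir-external-provisioner creates one sub-directory per volume under the export. By default each directory is named `${namespace}-${pvcName}-${pvName}`, so the three volumes above should appear as `monitoring-...` directories in `/nfs-share`. Here is a sketch to list them, run on the NFS host. `NFS_EXPORT` is an assumed override for the export path.

```shell
# Hypothetical helper: list the per-PV directories created for the monitoring namespace.
list_monitoring_volumes() {
  ls -1 "${NFS_EXPORT:-/nfs-share}" | grep '^monitoring-'
}
```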