This article introduces a framework called nlp-basictasks.

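nlp-basictasks is a simple library built on the PyTorch deep learning framework. It aims to make it quick to assemble models for basic NLP tasks such as classification, matching, sequence labeling, and semantic similarity.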

Below, the framework is used to build a BERT+CRF model for the NER task.

Dataset overview

Dataset source

The data comes from the CLUE named entity recognition dataset.

Importing packages

import torch,json
import sys,os
import pandas as pd
import random
import numpy as np
from nlp_basictasks.tasks import Ner
from nlp_basictasks.evaluation import nerEvaluator
from nlp_basictasks.readers.ner import InputExample

Loading the data

def _create_examples(input_path, mode):
    # Read a JSON-lines file and turn every record into a character-level
    # tagged example (B-/I- for multi-character entities, S- for single
    # characters, O for everything else).
    examples = []
    # Explicit encoding so the Chinese text is read correctly on any platform.
    with open(input_path, 'r', encoding='utf-8') as f:
        idx = 0
        for line in f:
            json_d = {}
            line = json.loads(line.strip())
            text = line['text']
            label_entities = line.get('label', None)
            words = list(text)
            labels = ['O'] * len(words)
            if label_entities is not None:
                # key: entity type; value: {entity text: [[start, end], ...]}
                for key, value in label_entities.items():
                    for sub_name, sub_index in value.items():
                        for start_index, end_index in sub_index:
                            # end_index is inclusive
                            assert ''.join(words[start_index:end_index + 1]) == sub_name
                            if start_index == end_index:
                                labels[start_index] = 'S-' + key
                            else:
                                labels[start_index] = 'B-' + key
                                labels[start_index + 1:end_index + 1] = ['I-' + key] * (len(sub_name) - 1)
            json_d['id'] = f"{mode}_{idx}"
            json_d['context'] = " ".join(words)
            json_d['tag'] = " ".join(labels)
            json_d['raw_context'] = "".join(words)
            idx += 1
            examples.append(json_d)
    return examples

data = _create_examples('/data/nfs14/nfs/aisearch/asr/xhsun/datasets/cluener/train.json', mode='train')
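To make the tagging scheme concrete, here is a minimal, self-contained sketch of the same conversion applied to a single record. The function name `tags_from_record` and the sample record are hypothetical, made up for illustration; only the record layout (a `text` string plus a `label` dictionary mapping entity type to mentions and inclusive `[start, end]` spans) follows the code above.

```python
def tags_from_record(record):
    """Convert one record into per-character tags (B-/I-/S-/O)."""
    words = list(record["text"])
    labels = ["O"] * len(words)
    for entity_type, mentions in (record.get("label") or {}).items():
        for mention, spans in mentions.items():
            for start, end in spans:  # end index is inclusive
                assert "".join(words[start:end + 1]) == mention
                if start == end:
                    labels[start] = "S-" + entity_type
                else:
                    labels[start] = "B-" + entity_type
                    labels[start + 1:end + 1] = ["I-" + entity_type] * (end - start)
    return words, labels


# Hypothetical record, invented for this example (not taken from the dataset).
sample = {
    "text": "小明在北京",
    "label": {"name": {"小明": [[0, 1]]}, "address": {"北京": [[3, 4]]}},
}
words, labels = tags_from_record(sample)
for word, label in zip(words, labels):
    print(word, label)
```

Each character gets exactly one tag, so `words` and `labels` always have the same length, which is what the later `assert len(seq_in)==len(seq_out)` check relies on.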

Building the training and validation sets

train_examples=[]
for example in data:
    seq_in=example['context'].strip().split(' ')
    seq_out=example['tag'].strip().split(' ')
    assert len(seq_in)==len(seq_out)
    train_examples.append(InputExample(seq_in=seq_in,seq_out=seq_out))

# Hold out the last 2000 examples as the validation set.
dev_examples=train_examples[-2000:]
dev_seq_in=[]
dev_seq_out=[]
for example in dev_examples:
    dev_seq_in.append(example.seq_in)
    dev_seq_out.append(example.seq_out)
train_examples=train_examples[:-2000]

# Collect every tag that occurs in the data.
label_set=set()
for examples in data:
    label_list=examples['tag'].strip().split(' ')
    for label in label_list:
        label_set.add(label)

# Map tags to integer ids, reserving id 0 for padding.
# Note: set iteration order can differ between runs, so the ids may too;
# iterating over sorted(label_set) would make the mapping reproducible.
label2id={'[PAD]':0}
for label in label_set:
    label2id[label]=len(label2id)

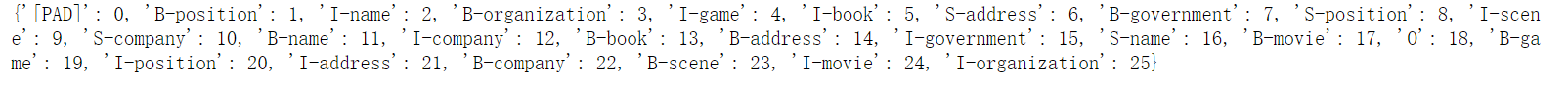

print(label2id)
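Because Python sets of strings have no stable iteration order across interpreter runs, the ids assigned to each tag can change from one run to the next. The sketch below shows one way to get a reproducible mapping by sorting the tags first. `build_label2id` is a hypothetical helper name and the toy tag sequences are made up; this is not part of nlp-basictasks.

```python
def build_label2id(tag_sequences, pad_label="[PAD]"):
    """Assign ids to tags in sorted order, reserving id 0 for padding."""
    labels = sorted({tag for tags in tag_sequences for tag in tags})
    label2id = {pad_label: 0}
    for label in labels:
        label2id[label] = len(label2id)
    return label2id


# Toy tag sequences, invented for illustration.
toy_tags = [["B-name", "I-name", "O"], ["O", "S-address"]]
toy_label2id = build_label2id(toy_tags)
print(toy_label2id)
```

A stable mapping matters mostly when a saved model is reloaded in a separate process that rebuilds `label2id` from the data instead of reading it from the checkpoint.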

Setting the paths and loading the model

model_path=''  # location of your downloaded BERT model, e.g. 'chinese-roberta-wwm/'
device='cuda'
# use_crf and use_bilstm control whether the CRF and BiLSTM layers are used.
ner_model=Ner(model_path,label2id=label2id,use_crf=True,use_bilstm=True,device=device,batch_first=True)

Training the model

from torch.utils.data import DataLoader

batch_size=32
train_dataloader = DataLoader(train_examples, shuffle=True, batch_size=batch_size)
evaluator=nerEvaluator(label2id=label2id,seq_in=dev_seq_in,seq_out=dev_seq_out)
output_path=""  # output_path is where the trained model is saved
ner_model.fit(train_dataloader=train_dataloader,evaluator=evaluator,epochs=5,output_path=output_path)

The figure above shows the precision, recall, and F1 score of each entity type during training.
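Per-entity precision, recall, and F1 are usually computed at the span level: a predicted entity counts as correct only if its type and boundaries both match a gold entity exactly. The following is a minimal sketch under that assumption. It is not `nerEvaluator`'s actual implementation, which may differ; `per_type_prf` is a hypothetical name, and spans are represented here as `(entity_type, sentence_index, start, end)` tuples chosen for illustration.

```python
from collections import Counter


def per_type_prf(gold_spans, pred_spans):
    """Exact-match precision/recall/F1 per entity type.

    Each span is a tuple (entity_type, sentence_index, start, end).
    """
    gold, pred = set(gold_spans), set(pred_spans)
    true_pos = Counter(span[0] for span in gold & pred)
    n_gold = Counter(span[0] for span in gold)
    n_pred = Counter(span[0] for span in pred)

    scores = {}
    for etype in sorted(set(n_gold) | set(n_pred)):
        p = true_pos[etype] / n_pred[etype] if n_pred[etype] else 0.0
        r = true_pos[etype] / n_gold[etype] if n_gold[etype] else 0.0
        f1 = 2 * p * r / (p + r) if p + r else 0.0
        scores[etype] = {"precision": p, "recall": r, "f1": f1}
    return scores


# Toy spans, invented for illustration.
gold = [("name", 0, 0, 1), ("address", 0, 3, 4), ("name", 1, 2, 3)]
pred = [("name", 0, 0, 1), ("address", 0, 3, 3), ("name", 1, 2, 3), ("name", 1, 5, 6)]
scores = per_type_prf(gold, pred)
for etype, s in scores.items():
    print(etype, s)
```

In the toy data the predicted address has the wrong right boundary, so it earns no credit at all, which is why span-level scores are typically lower than per-character tag accuracy.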

Model prediction
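Whatever call produces the predicted tags for a sentence, the tag sequence still has to be turned back into entity spans before it is useful. Below is a minimal sketch of that decoding step for the B-/I-/S-/O scheme used in this article. It is plain Python and does not use any nlp-basictasks API; `tags_to_entities` is a hypothetical helper name, and the sample sentence and tags are made up.

```python
def tags_to_entities(chars, tags):
    """Decode B-/I-/S-/O tags into (entity_type, start, end, text) tuples.

    The end index is inclusive, matching the dataset's span convention.
    """
    entities = []
    start, etype = None, None  # currently open B-/I- span, if any

    # A trailing sentinel "O" flushes a span that runs to the end.
    for i, tag in enumerate(list(tags) + ["O"]):
        prefix, _, label = tag.partition("-")
        continues = prefix == "I" and start is not None and label == etype
        if start is not None and not continues:
            entities.append((etype, start, i - 1, "".join(chars[start:i])))
            start, etype = None, None
        if prefix == "S":
            entities.append((label, i, i, chars[i]))
        elif prefix == "B":
            start, etype = i, label
        # A stray I- tag with no matching open span is ignored.
    return entities


# Hypothetical sentence and predicted tags, invented for illustration.
chars = list("小明在北京住")
tags = ["B-name", "I-name", "O", "B-address", "I-address", "O"]
entities = tags_to_entities(chars, tags)
for entity in entities:
    print(entity)
```

Ignoring stray I- tags is one design choice among several; another common option is to treat a stray I- tag as the start of a new entity.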

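The whole NER task takes fewer than 100 lines of code. For the related tutorial, see "Doing NER with the nlp-basictasks framework". If you find it useful, please give the project a star. Thanks!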

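Copyright notice: this is an original article by m0_45478865, released under the CC 4.0 BY-SA license. When reposting, please include a link to the original source and this notice.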