第一次将例程跑起来了,有些兴趣。

参考的是如下URL:

http://www.yidianzixun.com/article/0KNz7OX1

本来是比较Keras和Tensorflow的,我现在的水平,只能是跑通一个算一个啦。

因为要比较CPU和GPU的性能,两个DOCKER如下:

tensorflow/tensorflow:1.12.0-gpu-py3

tensorflow/tensorflow:1.12.0-py3

CIFAR-10的数据自已从网上下载,所以出现如下错误时,要自己更改成一个内网URL地址:

Traceback (most recent call last): File "train_network_tf.py", line 26, in <module> split = tf.keras.datasets.cifar10.load_data() File "/usr/local/lib/python3.5/dist-packages/tensorflow/python/keras/datasets/cifar10.py", line 40, in load_data path = get_file(dirname, origin=origin, untar=True) File "/usr/local/lib/python3.5/dist-packages/tensorflow/python/keras/utils/data_utils.py", line 251, in get_file raise Exception(error_msg.format(origin, e.errno, e.reason)) Exception: URL fetch failure on https://www.cs.toronto.edu/~kriz/cifar-10-python.tar.gz: None -- [Errno -3] Temporary failure in name resolution

/usr/local/lib/python3.5/dist-packages/tensorflow/python/keras/datasets/cifar10.py这个文件40行是一个网址,更改一下就OK了。

一,modle层实现代码

pyimagesearch/minivggnettf.py

# import the necessary packages import tensorflow as tf class MiniVGGNetTF: @staticmethod def build(width, height, depth, classes): # initialize the input shape and channel dimension, assuming # TensorFlow/channels-last ordering inputShape = (height, width, depth) chanDim = -1 # define the model input inputs = tf.keras.layers.Input(shape=inputShape) # first (CONV => RELU) * 2 => POOL layer set x = tf.keras.layers.Conv2D(32, (3, 3), padding="same")(inputs) x = tf.keras.layers.Activation("relu")(x) x = tf.keras.layers.BatchNormalization(axis=chanDim)(x) x = tf.keras.layers.Conv2D(32, (3, 3), padding="same")(x) x = tf.keras.layers.Lambda(lambda t: tf.nn.crelu(x))(x) x = tf.keras.layers.BatchNormalization(axis=chanDim)(x) x = tf.keras.layers.MaxPooling2D(pool_size=(2, 2))(x) x = tf.keras.layers.Dropout(0.25)(x) # second (CONV => RELU) * 2 => POOL layer set x = tf.keras.layers.Conv2D(64, (3, 3), padding="same")(x) x = tf.keras.layers.Lambda(lambda t: tf.nn.crelu(x))(x) x = tf.keras.layers.BatchNormalization(axis=chanDim)(x) x = tf.keras.layers.Conv2D(64, (3, 3), padding="same")(x) x = tf.keras.layers.Lambda(lambda t: tf.nn.crelu(x))(x) x = tf.keras.layers.BatchNormalization(axis=chanDim)(x) x = tf.keras.layers.MaxPooling2D(pool_size=(2, 2))(x) x = tf.keras.layers.Dropout(0.25)(x) # first (and only) set of FC => RELU layers x = tf.keras.layers.Flatten()(x) x = tf.keras.layers.Dense(512)(x) x = tf.keras.layers.Lambda(lambda t: tf.nn.crelu(x))(x) x = tf.keras.layers.BatchNormalization()(x) x = tf.keras.layers.Dropout(0.5)(x) # softmax classifier x = tf.keras.layers.Dense(classes)(x) x = tf.keras.layers.Activation("softmax")(x) # create the model model = tf.keras.models.Model(inputs, x, name="minivggnet_tf") # return the constructed network architecture return model

二,数据训练

train_network_tf.py

# USAGE # python train_network_tf.py # set the matplotlib backend so figures can be saved in the background import matplotlib matplotlib.use("Agg") # import the necessary packages from pyimagesearch.minivggnettf import MiniVGGNetTF from sklearn.preprocessing import LabelBinarizer from sklearn.metrics import classification_report import matplotlib.pyplot as plt import tensorflow as tf import numpy as np import argparse # construct the argument parser and parse the arguments ap = argparse.ArgumentParser() ap.add_argument("-p", "--plot", type=str, default="plot_tf.png", help="path to output loss/accuracy plot") args = vars(ap.parse_args()) # load the training and testing data, then scale it into the # range [0, 1] print("[INFO] loading CIFAR-10 data...") split = tf.keras.datasets.cifar10.load_data() ((trainX, trainY), (testX, testY)) = split trainX = trainX.astype("float") / 255.0 testX = testX.astype("float") / 255.0 # convert the labels from integers to vectors lb = LabelBinarizer() trainY = lb.fit_transform(trainY) testY = lb.transform(testY) # initialize the label names for the CIFAR-10 dataset labelNames = ["airplane", "automobile", "bird", "cat", "deer", "dog", "frog", "horse", "ship", "truck"] # initialize the initial learning rate, total number of epochs to # train for, and batch size INIT_LR = 0.01 EPOCHS = 5 BS = 32 # initialize the optimizer and model print("[INFO] compiling model...") opt = tf.keras.optimizers.SGD(lr=INIT_LR, decay=INIT_LR / EPOCHS) model = MiniVGGNetTF.build(width=32, height=32, depth=3, classes=len(labelNames)) model.compile(loss="categorical_crossentropy", optimizer=opt, metrics=["accuracy"]) # train the network print("[INFO] training network for {} epochs...".format(EPOCHS)) H = model.fit(trainX, trainY, validation_data=(testX, testY), batch_size=BS, epochs=EPOCHS, verbose=1) # evaluate the network print("[INFO] evaluating network...") predictions = model.predict(testX, batch_size=32) print(classification_report(testY.argmax(axis=1), predictions.argmax(axis=1), target_names=labelNames)) # plot the training loss and accuracy plt.style.use("ggplot") plt.figure() plt.plot(np.arange(0, EPOCHS), H.history["loss"], label="train_loss") plt.plot(np.arange(0, EPOCHS), H.history["val_loss"], label="val_loss") plt.plot(np.arange(0, EPOCHS), H.history["acc"], label="train_acc") plt.plot(np.arange(0, EPOCHS), H.history["val_acc"], label="val_acc") plt.title("Training Loss and Accuracy on Dataset") plt.xlabel("Epoch #") plt.ylabel("Loss/Accuracy") plt.legend(loc="lower left") plt.savefig(args["plot"])

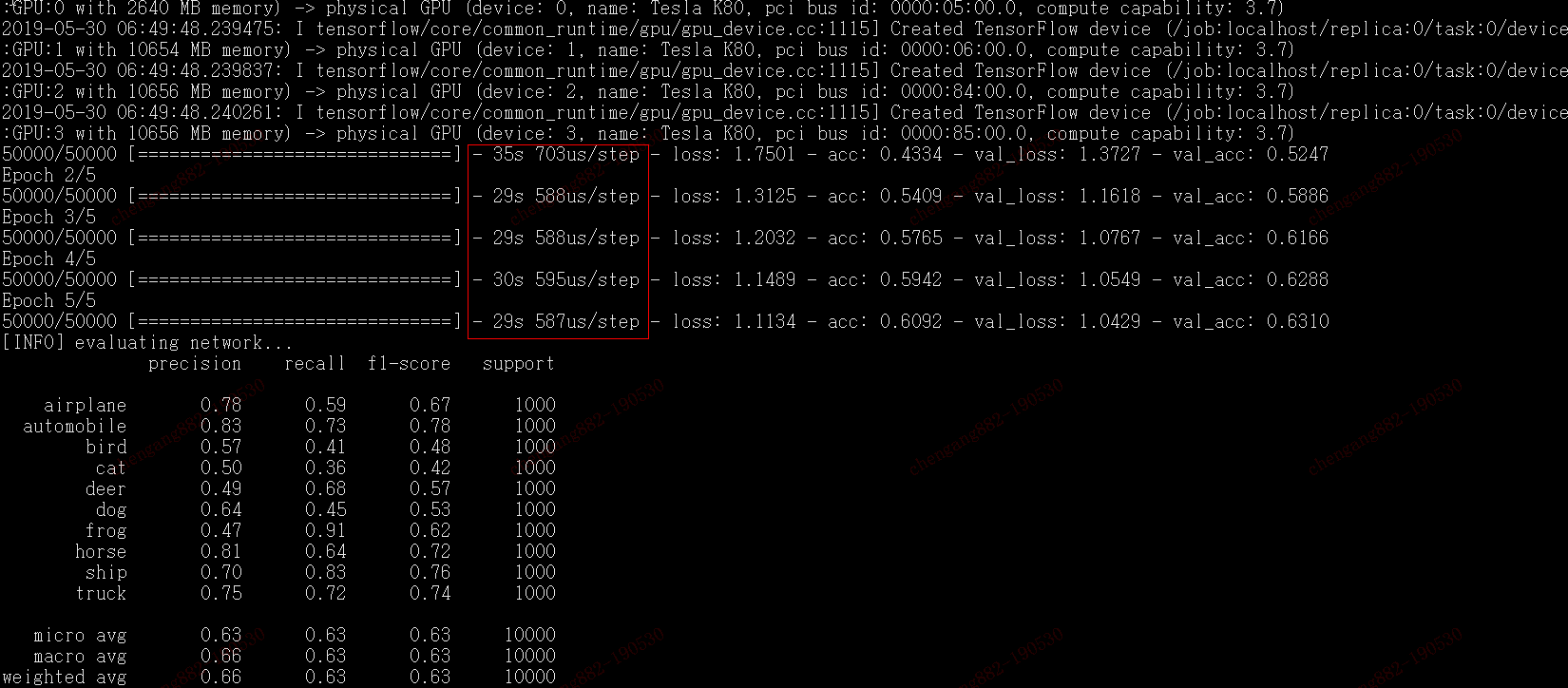

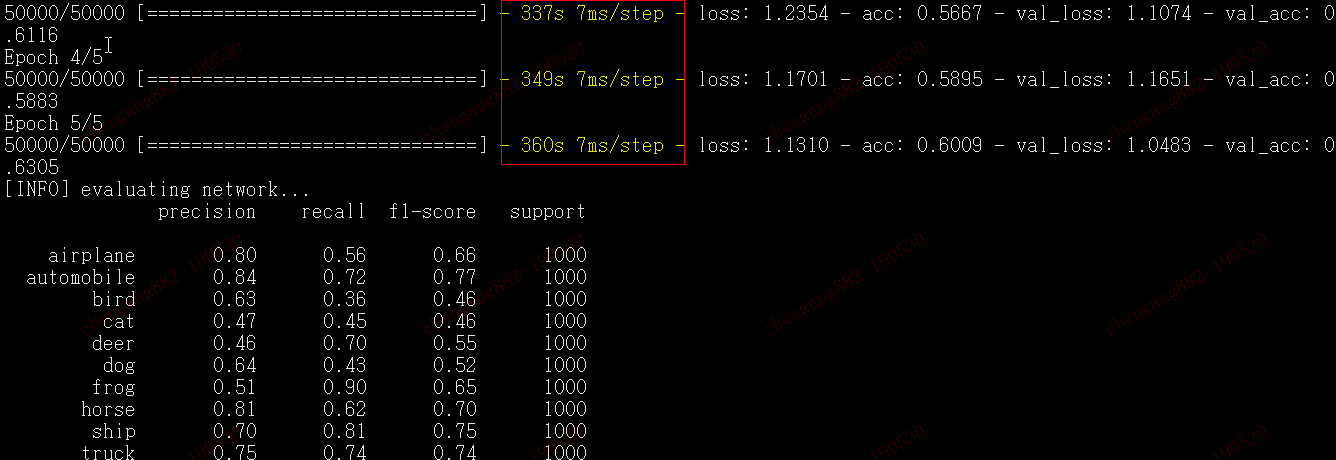

三,结果对比:

当使用GPU吧,一个批次完成需要30秒上下。

而只使用CPU的话,一个批次完成则需要330秒以上。

效率提高10倍以上啊。

转载于:https://www.cnblogs.com/aguncn/p/10949579.html