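ERNIE is reported to outperform BERT on Chinese NLP tasks. From reading the paper, my impression is that it builds on BERT and mainly adds some tricks to the training procedure.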
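As far as I understand the ERNIE paper, one of those training changes is to mask whole entities or phrases rather than single characters. The following is only a minimal sketch of that difference, not ERNIE's actual preprocessing: `mask_span` is a hypothetical helper, and the entity boundaries are given by hand here instead of coming from a real entity recognizer.

```python
MASK = "[MASK]"

def mask_span(tokens, start, end):
    """Return a copy of tokens with tokens[start:end] replaced by MASK."""
    return [MASK if start <= i < end else tok for i, tok in enumerate(tokens)]

# One token per Chinese character, as BERT-style Chinese vocabularies do.
tokens = list("西游记是中国神魔小说")

# Character-level masking: hide a single character of the title.
char_level = mask_span(tokens, 1, 2)

# Entity-level masking (assumed span 0..3): hide the whole three-character title.
entity_level = mask_span(tokens, 0, 3)

print(char_level)
print(entity_level)
```

With character-level masking the model can often guess the hidden character from its neighbours inside the same word; with entity-level masking it has to use the rest of the sentence.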

https://github.com/nghuyong/ERNIE-Pytorch is the project with the code that converts the ERNIE model for use with PyTorch.

Test code that can be run directly is attached below (it expects the converted model in ./ERNIE-converted):

#!/usr/bin/env python
# encoding: utf-8

import torch

from pytorch_transformers import BertTokenizer, BertForMaskedLM

tokenizer = BertTokenizer.from_pretrained('./ERNIE-converted')

input_tx = "[CLS] [MASK] [MASK] [MASK] 是中国神魔小说的经典之作,与《三国演义》《水浒传》《红楼梦》并称为中国古典四大名著。[SEP]"

tokenized_text = tokenizer.tokenize(input_tx)

indexed_tokens = tokenizer.convert_tokens_to_ids(tokenized_text)

tokens_tensor = torch.tensor([indexed_tokens])

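# Single sentence, so every segment id is 0; the length follows the input instead of a hard-coded 47
segments_tensors = torch.zeros_like(tokens_tensor)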

model = BertForMaskedLM.from_pretrained('./ERNIE-converted')

model.eval()  # inference mode: make sure dropout is disabled

outputs = model(tokens_tensor, token_type_ids=segments_tensors)

predictions = outputs[0]

# Most likely vocabulary id at every position
predicted_index = predictions[0].argmax(dim=-1).tolist()

# Convert the ids back to tokens, skipping [CLS] (first) and [SEP] (last)
predicted_token = tokenizer.convert_ids_to_tokens(predicted_index[1:-1])

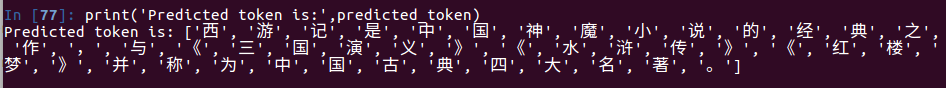

print('Predicted token is:',predicted_token)
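The script prints a prediction for every position, although only the three [MASK] slots are really of interest. Below is a minimal sketch of a helper that keeps the original tokens and substitutes a prediction only where the input was masked. `fill_masks` is a hypothetical name, and the sample predictions are made-up placeholders, not model output.

```python
MASK = "[MASK]"

def fill_masks(tokens, predicted, mask_token=MASK):
    """Return tokens with each mask_token replaced by the prediction at the same position."""
    if len(tokens) != len(predicted):
        raise ValueError("tokens and predicted must have the same length")
    return [p if t == mask_token else t for t, p in zip(tokens, predicted)]

# Made-up placeholder data for illustration only (not real model output).
sample_tokens = ["[MASK]", "[MASK]", "[MASK]", "是", "经", "典"]
sample_predicted = ["P1", "P2", "P3", "是", "的", "典"]

filled = fill_masks(sample_tokens, sample_predicted)
print("".join(filled))
```

With the script above, the call would be `fill_masks(tokenized_text[1:-1], predicted_token)`, since `predicted_token` leaves out the [CLS] and [SEP] positions.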

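Copyright notice: this is an original article by u011930705, licensed under CC 4.0 BY-SA. When reposting, please include a link to the original source and this notice.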