之前用给我们自己设计的一个3层卷积网络在CIFAR-10上进行了实验,后期发现网络参数太少,在保证泛化性能的前提下拟合能力不足,所以需要加深网络,plain网络不如res网络好,所以我们就不设计plain网络了,直接用ResNet-18来做实验。

1.ResNet简介

参考链接:https://blog.csdn.net/shwan_ma/article/details/78163921

这个现象很有趣,训练的error是会比测试的error高的,我们训练时也遇到过同样的现象,难道是因为数增强使训练集变难了?

2.网络结构

参考链接:https://blog.csdn.net/sunqiande88/article/details/80100891

import torch

import torch.nn as nn

import torch.nn.functional as F

class ResidualBlock(nn.Module):

#初始化中定义了两种网络组成部分left、shortcut

def __init__(self, inchannel, outchannel, stride=1):

super(ResidualBlock, self).__init__()

#left是正常的传播通路

self.left = nn.Sequential(

nn.Conv2d(inchannel, outchannel, kernel_size=3, stride=stride, padding=1, bias=False),

nn.BatchNorm2d(outchannel),

nn.ReLU(inplace=True),

nn.Conv2d(outchannel, outchannel, kernel_size=3, stride=1, padding=1, bias=False),

nn.BatchNorm2d(outchannel)

)

self.shortcut = nn.Sequential()

if stride != 1 or inchannel != outchannel:

self.shortcut = nn.Sequential(

nn.Conv2d(inchannel, outchannel, kernel_size=1, stride=stride, bias=False),

nn.BatchNorm2d(outchannel)

)

def forward(self, x):

out = self.left(x)

out += self.shortcut(x)

out = F.relu(out)

return out

class ResNet(nn.Module):

def __init__(self, ResidualBlock, num_classes=10):

super(ResNet, self).__init__()

self.inchannel = 64

self.conv1 = nn.Sequential(

nn.Conv2d(3, 64, kernel_size=3, stride=1, padding=1, bias=False),

nn.BatchNorm2d(64),

nn.ReLU()

)

self.layer1 = self.make_layer(ResidualBlock, 64, 2, stride=1)

self.layer2 = self.make_layer(ResidualBlock, 128, 2, stride=2)

self.layer3 = self.make_layer(ResidualBlock, 256, 2, stride=2)

self.layer4 = self.make_layer(ResidualBlock, 512, 2, stride=2)

self.fc = nn.Linear(512, num_classes)

def make_layer(self, block, channels, num_blocks, stride):

strides = [stride] + [1]*(num_blocks -1)

layers = []

for stride in strides:

layers.append(block(self.inchannel, channels, stride))

self.inchannel = channels

return nn.Sequential(*layers)

def forward(self, x):

out = self.conv1(x)

out = self.layer1(out)

out = self.layer2(out)

out = self.layer3(out)

out = self.layer4(out)

out = F.avg_pool2d(out, 4)

out = out.view(out.size(0),-1)

out = self.fc(out)

return out

def ResNet18():

return ResNet(ResidualBlock)

net = ResNet18()

print(net)通过对ResNet-18的学习,我们发现网络pytorch编写网络可以很灵活,尤其是大规模网络时,可以设计一些子模块( ResidualBlock),并通过一些规则(make_layer)来生成网络。

2.初步测试

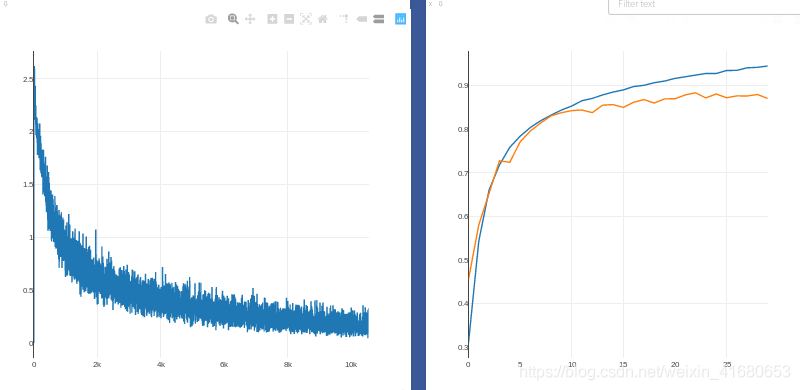

1)初次尝试

Test Loss:0.460295, Test ACC:0.849189

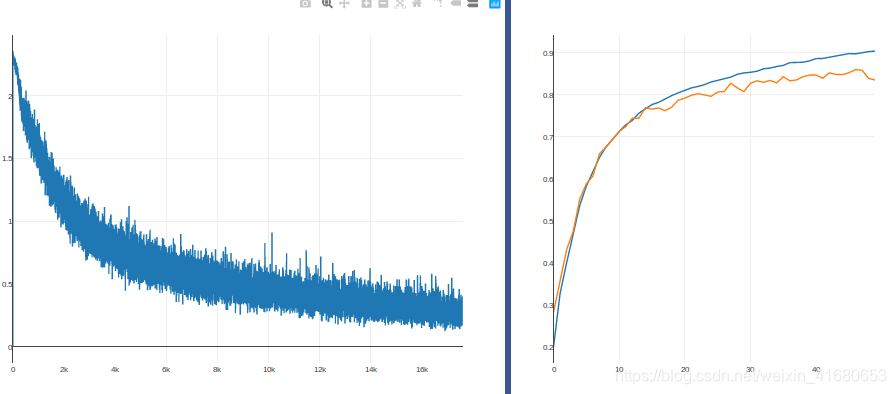

0.0005学习率,50个epoch测试集就达到了85%!loss也很低

2)改进

文章里用的学习率0.1,我们也尝试一下

optimizer = torch.optim.SGD(net.parameters(), lr=0.1, momentum=0.9, weight_decay=1e-5)

scheduler = lr_scheduler.ReduceLROnPlateau(optimizer, mode='max', factor=0.5, patience=10,

verbose=True, threshold=0.0003, threshold_mode='rel', cooldown=0, min_lr=0, eps=1e-08)

torch.save(net.state_dict(), 'my_resnet_params_2.pkl')

对比之前自己设计的浅层网络,这个学习率可以设置的很大。

3)按照论文的学习率设计

optimizer = torch.optim.SGD(net.parameters(), lr=0.1, momentum=0.9, weight_decay=1e-5)

scheduler = lr_scheduler.MultiStepLR(optimizer, [30, 60,90], 0.1)('epoch = ', 99)

('train_acc:', 0.994140625)

('val_acc:', 0.9044)

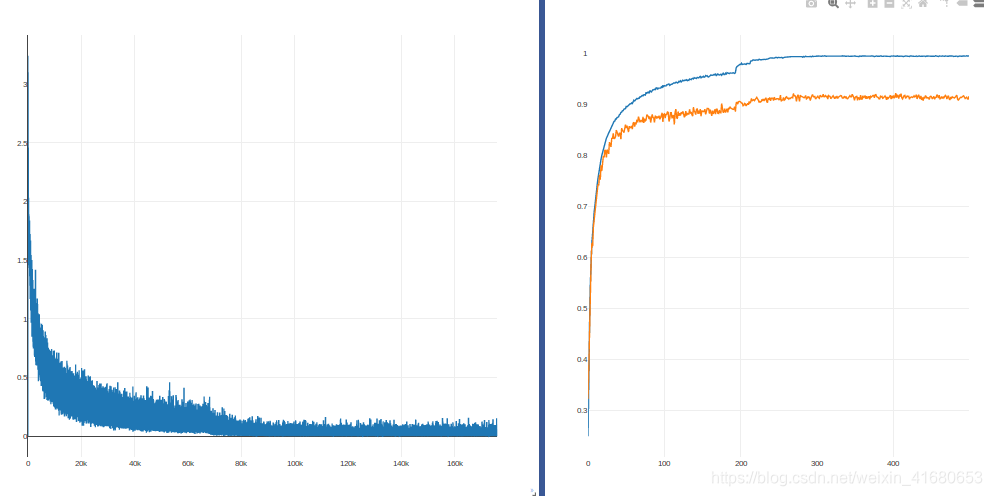

可以看到Res_net不仅拟合能力强,收敛速度也快,最终训练准确度到达 99%,验证准确度90%,测试准确度90%,但是还是有一定程度的过拟合,论文中测试集能达到93%,我们再调试一下

4)分段训练

每10个epoch保存一次参数,加载再训练

发现一个现象:每个session后期训练不动时,保存参数开始下一个session,加载再训练时训练、验证准确率都会提高一点,按理说不应该是连续的吗?训练不动了重新加载参数再训练还应该是训练不动呀,为什么会出现跳跃呢?除了修改学习率的session,学习率相同的session开头应该是一个iteration的提升

0.1~0.01

| 6898 | 716 | 7863 | 8161 | 8609 | 8618 | 8883 | 9156 | 9269 | 9344 | 9333 | 9356 | 9392 | 9389 | 9442 |

| 6834 | 706 | 7864 | 792 | 8216 | 8602 | 8604 | 9048 | 921 | 9258 | 9328 | 9329 | 9328 | 9488 | 939 |

0.001

| 9467 | 9484 | 9482 | 9502 | 9493 | 9511 |

| 9458 | 9522 | 9524 | 9480 | 5)最终测试9528 | 9528 |

0.0005

| 9507 | 9513 | 9513 | 9495 | 9507 | 9530 |

| 9484 | 9515 | 9562 | 9532 | 9516 | 949 |

0.0001

| 9721 | 9724 |

| 9766 | 9784 |

测试集准确率91%,过拟合了

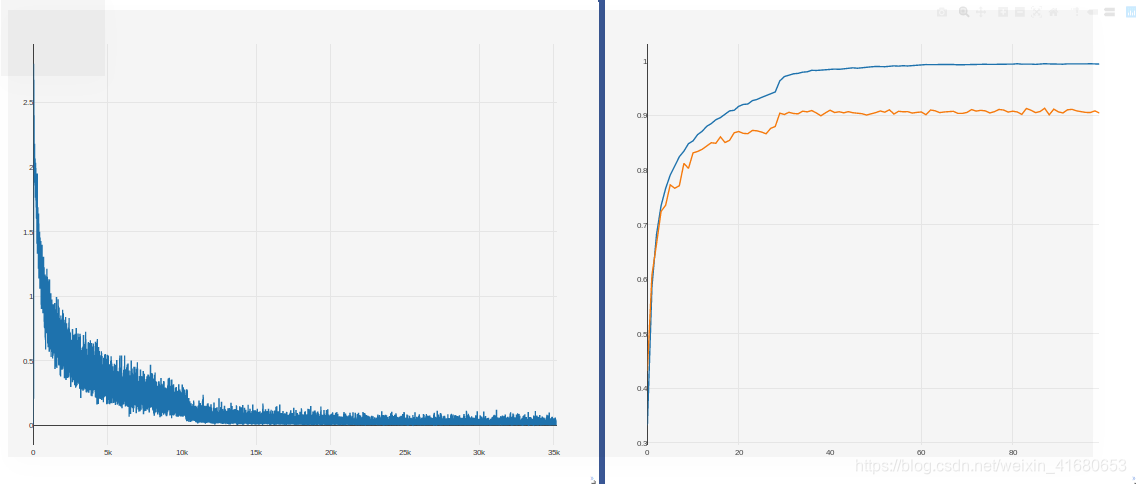

5)最终测试

数据增强只用随机剪裁和随机翻转,没有用色彩变换,因为测试集中并不包含带色彩变换的图片,尽量是训练数据分布特性与测试数据相同;

随机失活设置了0.5尽量避免过拟合;

500个epoch,保证训练次数足够长。

Test Loss:0.403510, Test ACC:0.926325