1、准备环境

虚拟机操作系统: Centos7

角色 IP

Master 192.168.150.140

Node1 192.168.150.141

Node2 192.168.150.142

2、系统初始化工作

在三台虚拟机上进行如下操作:

2、1 关闭防火墙

$ systemctl stop firewalld

$ systemctl disable firewalld2、2 关闭selinux

$ sed -i 's/enforcing/disabled/' /etc/selinux/config

$ setenforce 0 2、3 关闭swap

$ swapoff -a

$ sed -ri 's/.*swap.*/#&/' /etc/fstab 2、4 设置主机名

$ hostnamectl set-hostname <hostname>注意:设置完主机名需要重启机器

2、5 在master添加hosts

$ cat >> /etc/hosts << EOF

192.168.150.140 k8smaster

192.168.150.141 k8snode1

192.168.150.142 k8snode2

EOF2、6 将桥接的IPv4流量传递到iptables的链

$ cat > /etc/sysctl.d/k8s.conf << EOF

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

EOF

$ sysctl --system2、7 设置时间同步

$ yum install ntpdate -y

$ ntpdate time.windows.com3、所有节点安装Docker/kubeadm/kubelet

3、1 安装Docker

$ yum -y install wget

$ wget https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo -O /etc/yum.repos.d/docker-ce.repo

$ yum -y install docker-ce-18.06.1.ce-3.el7

$ systemctl enable docker && systemctl start docker验证Docker是否安装:

$ docker --version设置docker hub镜像仓库为阿里云镜像仓库加速器

tee /etc/docker/daemon.json <<-'EOF'

{

"registry-mirrors": ["https://自己的阿里云镜像加速器地址"]

}

重启Docker

$ systemctl daemon-reload

$ systemctl restart docker验证容器镜像仓库地址是否更换:

$ docker info

3、2 添加阿里云YUM软件镜像源

$ cat > /etc/yum.repos.d/kubernetes.repo << EOF

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF3、3 安装kubeadm,kubelet和kubectl

由于版本更新频繁,这里指定版本号部署:

$ yum install -y kubelet-1.18.0 kubeadm-1.18.0 kubectl-1.18.0

$ systemctl enable kubelet4、部署Master

$ kubeadm init \

--apiserver-advertise-address=自己Master节点的IP \

--image-repository registry.aliyuncs.com/google_containers \

--kubernetes-version v1.18.0 \

--service-cidr=10.96.0.0/12 \

--pod-network-cidr=10.244.0.0/16注意:

① service-cidr 和 pod-network-cidr 网段地址不能冲突

② 由于默认拉取镜像地址k8s.gcr.io国内无法访问,这里指定阿里云镜像仓库地址。

执行结果如下:

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.150.140:6443 --token 6to0ov.bek8o9giq6rdo3en \

--discovery-token-ca-cert-hash sha256:bc493beffc38c272713b70fc7e7dd4bd6da2b23aa23173296d9597fd3499dfc4

使用kubectl工具:

在Master节点执行:

$ mkdir -p $HOME/.kube

$ sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

$ sudo chown $(id -u):$(id -g) $HOME/.kube/config注意:此命令是在执行 kubeadm init 时产生的

5、部署Node:

$ kubeadm join 192.168.1.11:6443 --token esce21.q6hetwm8si29qxwn \

--discovery-token-ca-cert-hash sha256:00603a05805807501d7181c3d60b478788408cfe6cedefedb1f97569708be9c5注意:此命令也是在kubeadm init 时产生的 且默认token有效期为24小时,当过期之后,该token就不可用了。这时就需要重新创建token,操作如下:

$ kubeadm token create --print-join-command验证三个节点是否在集群中:

$ kubectl get nodes执行结果如下:

NAME STATUS ROLES AGE VERSION

k8smaster NotReady master 6m32s v1.18.0

k8snode1 NotReady <none> 26s v1.18.0

k8snode2 NotReady <none> 27s v1.18.0

可以看到三个节点已经加入到集群中,但是状态为NotReady,因为此时没有网络,需要在Master节点安装网络插件。

6、安装CNI网络插件:

kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

注意:这里可能执行不成功,因此需要进行如下的操作

kube-flannel.yml内容如下:

---

apiVersion: policy/v1beta1

kind: PodSecurityPolicy

metadata:

name: psp.flannel.unprivileged

annotations:

seccomp.security.alpha.kubernetes.io/allowedProfileNames: docker/default

seccomp.security.alpha.kubernetes.io/defaultProfileName: docker/default

apparmor.security.beta.kubernetes.io/allowedProfileNames: runtime/default

apparmor.security.beta.kubernetes.io/defaultProfileName: runtime/default

spec:

privileged: false

volumes:

- configMap

- secret

- emptyDir

- hostPath

allowedHostPaths:

- pathPrefix: "/etc/cni/net.d"

- pathPrefix: "/etc/kube-flannel"

- pathPrefix: "/run/flannel"

readOnlyRootFilesystem: false

# Users and groups

runAsUser:

rule: RunAsAny

supplementalGroups:

rule: RunAsAny

fsGroup:

rule: RunAsAny

# Privilege Escalation

allowPrivilegeEscalation: false

defaultAllowPrivilegeEscalation: false

# Capabilities

allowedCapabilities: ['NET_ADMIN']

defaultAddCapabilities: []

requiredDropCapabilities: []

# Host namespaces

hostPID: false

hostIPC: false

hostNetwork: true

hostPorts:

- min: 0

max: 65535

# SELinux

seLinux:

# SELinux is unsed in CaaSP

rule: 'RunAsAny'

---

kind: ClusterRole

apiVersion: rbac.authorization.k8s.io/v1beta1

metadata:

name: flannel

rules:

- apiGroups: ['extensions']

resources: ['podsecuritypolicies']

verbs: ['use']

resourceNames: ['psp.flannel.unprivileged']

- apiGroups:

- ""

resources:

- pods

verbs:

- get

- apiGroups:

- ""

resources:

- nodes

verbs:

- list

- watch

- apiGroups:

- ""

resources:

- nodes/status

verbs:

- patch

---

kind: ClusterRoleBinding

apiVersion: rbac.authorization.k8s.io/v1beta1

metadata:

name: flannel

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: flannel

subjects:

- kind: ServiceAccount

name: flannel

namespace: kube-system

---

apiVersion: v1

kind: ServiceAccount

metadata:

name: flannel

namespace: kube-system

---

kind: ConfigMap

apiVersion: v1

metadata:

name: kube-flannel-cfg

namespace: kube-system

labels:

tier: node

app: flannel

data:

cni-conf.json: |

{

"cniVersion": "0.2.0",

"name": "cbr0",

"plugins": [

{

"type": "flannel",

"delegate": {

"hairpinMode": true,

"isDefaultGateway": true

}

},

{

"type": "portmap",

"capabilities": {

"portMappings": true

}

}

]

}

net-conf.json: |

{

"Network": "10.244.0.0/16",

"Backend": {

"Type": "vxlan"

}

}

---

apiVersion: apps/v1

kind: DaemonSet

metadata:

name: kube-flannel-ds-amd64

namespace: kube-system

labels:

tier: node

app: flannel

spec:

selector:

matchLabels:

app: flannel

template:

metadata:

labels:

tier: node

app: flannel

spec:

affinity:

nodeAffinity:

requiredDuringSchedulingIgnoredDuringExecution:

nodeSelectorTerms:

- matchExpressions:

- key: beta.kubernetes.io/os

operator: In

values:

- linux

- key: beta.kubernetes.io/arch

operator: In

values:

- amd64

hostNetwork: true

tolerations:

- operator: Exists

effect: NoSchedule

serviceAccountName: flannel

initContainers:

- name: install-cni

image: lizhenliang/flannel:v0.11.0-amd64

command:

- cp

args:

- -f

- /etc/kube-flannel/cni-conf.json

- /etc/cni/net.d/10-flannel.conflist

volumeMounts:

- name: cni

mountPath: /etc/cni/net.d

- name: flannel-cfg

mountPath: /etc/kube-flannel/

containers:

- name: kube-flannel

image: lizhenliang/flannel:v0.11.0-amd64

command:

- /opt/bin/flanneld

args:

- --ip-masq

- --kube-subnet-mgr

resources:

requests:

cpu: "100m"

memory: "50Mi"

limits:

cpu: "100m"

memory: "50Mi"

securityContext:

privileged: false

capabilities:

add: ["NET_ADMIN"]

env:

- name: POD_NAME

valueFrom:

fieldRef:

fieldPath: metadata.name

- name: POD_NAMESPACE

valueFrom:

fieldRef:

fieldPath: metadata.namespace

volumeMounts:

- name: run

mountPath: /run/flannel

- name: flannel-cfg

mountPath: /etc/kube-flannel/

volumes:

- name: run

hostPath:

path: /run/flannel

- name: cni

hostPath:

path: /etc/cni/net.d

- name: flannel-cfg

configMap:

name: kube-flannel-cfg

将此文件上传到虚拟机,然后执行

$ kubectl apply -f kube-flannel.yml查看k8s集群状态:

$ kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8smaster Ready master 7m25s v1.18.0

k8snode1 Ready <none> 6m16s v1.18.0

k8snode2 Ready <none> 6m6s v1.18.0可以看到此时三个节点的状态为Ready。

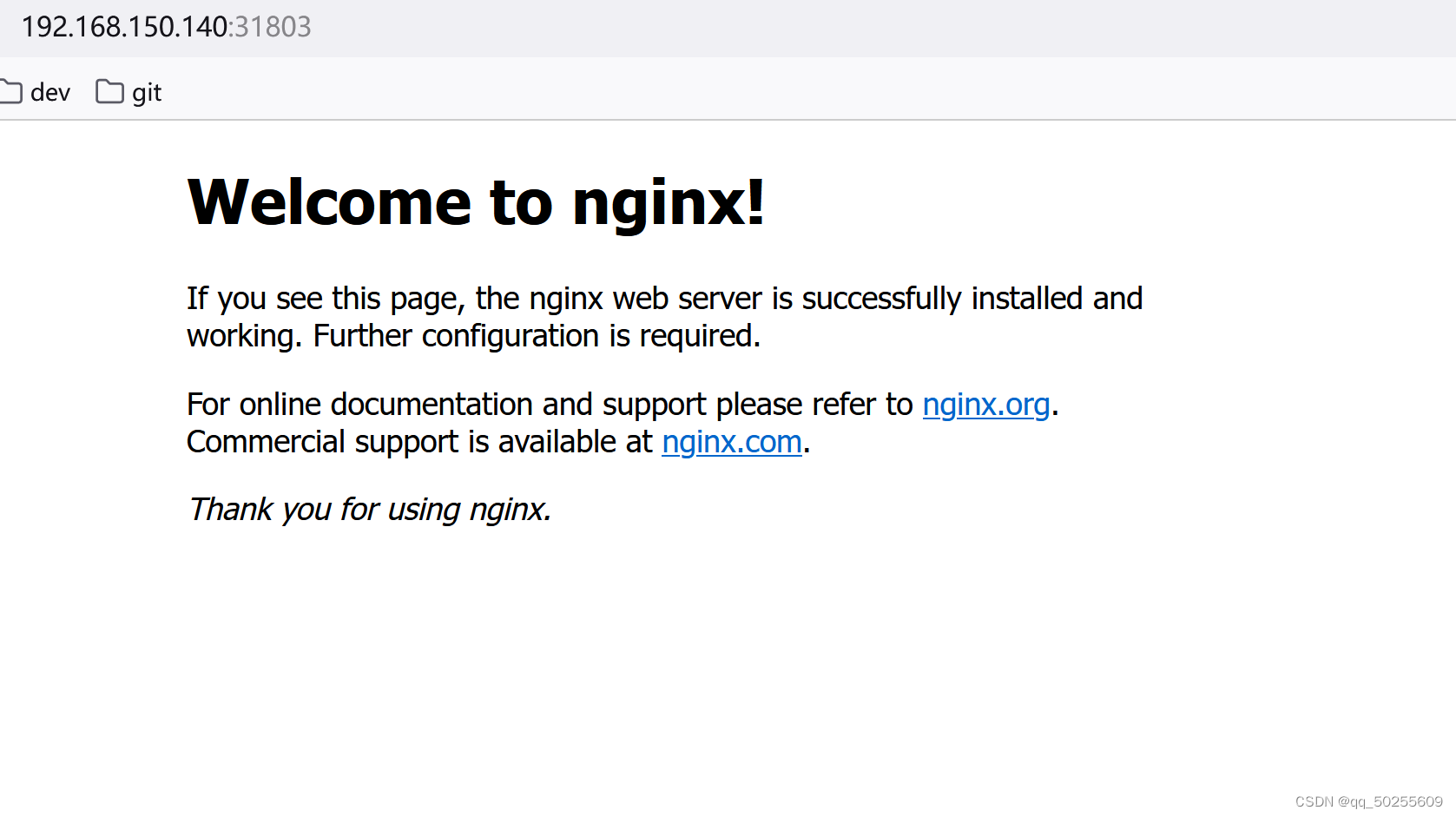

7、测试k8s集群

$ kubectl create deployment nginx --image=nginx

$ kubectl expose deployment nginx --port=80 --type=NodePort

$ kubectl get pod,svc访问nginx:http://任意节点IP:端口号

效果如下:

此时,kubeadm快速部署k8s集群已经成功。

版权声明:本文为qq_50255609原创文章,遵循CC 4.0 BY-SA版权协议,转载请附上原文出处链接和本声明。