1. Modify the build section of the pom file

<build>
  <finalName>spark-hdfs-show</finalName>
  <sourceDirectory>src/main/scala</sourceDirectory>
  <plugins>
    <!-- Compiles the Scala sources (main and test) -->
    <plugin>
      <groupId>org.scala-tools</groupId>
      <artifactId>maven-scala-plugin</artifactId>
      <version>2.15.2</version>
      <executions>
        <execution>
          <goals>
            <goal>compile</goal>
            <goal>testCompile</goal>
          </goals>
        </execution>
      </executions>
    </plugin>
    <!-- Sets the Java source and target level to 1.8 -->
    <plugin>
      <artifactId>maven-compiler-plugin</artifactId>
      <version>3.6.0</version>
      <configuration>
        <source>1.8</source>
        <target>1.8</target>
      </configuration>
    </plugin>
    <!-- Skips running the tests during the build -->
    <plugin>
      <groupId>org.apache.maven.plugins</groupId>
      <artifactId>maven-surefire-plugin</artifactId>
      <version>2.19</version>
      <configuration>
        <skip>true</skip>
      </configuration>
    </plugin>
  </plugins>
</build>

2. To also package the configuration files into the jar, add the following inside the build element

<resources>
  <resource>
    <directory>src/main/resources</directory>
    <includes>
      <include>**/*.properties</include>
    </includes>
  </resource>
</resources>
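After packaging, one way to confirm that the configuration files made it into the jar is to list its entries and keep only the `.properties` ones. This is a minimal sketch: `list_properties` is a hypothetical helper name, and the jar path in the usage comment assumes the `<finalName>` from step 1.

```shell
#!/usr/bin/env bash
# Keep only the .properties entries from a jar listing read on stdin.
list_properties() {
  grep -E '\.properties$' || true   # finding no match is not treated as an error
}

# Usage (assumes the jar has already been built):
#   jar tf target/spark-hdfs-show.jar | list_properties
```

If the command prints nothing, the `<include>` pattern or the resource directory is probably not matching any files.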

3. Run clean -> package to build the jar, then copy the resulting jar to a directory on the virtual machine
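From the command line, this step might look like the sketch below. The user, host and target directory in `VM_TARGET` are assumptions borrowed from the later steps, and the jar name assumes the `<finalName>` from step 1 (Maven writes the jar to `target/<finalName>.jar`). `DRY_RUN` and the `run` helper are hypothetical names, introduced only so the commands can be previewed before they are executed.

```shell
#!/usr/bin/env bash
# Sketch: build the jar and copy it to the VM.
JAR="target/spark-hdfs-show.jar"        # assumed: target/<finalName>.jar
VM_TARGET="root@192.168.56.21:/opt/"    # assumed user, host and directory
DRY_RUN="${DRY_RUN:-1}"                 # 1 = only print the commands

# Print the command in dry-run mode, otherwise execute it.
run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Build first; copy only if the build succeeded.
build_and_copy() {
  run mvn clean package &&
  run scp "$JAR" "$VM_TARGET"
}

build_and_copy
```

Running it with `DRY_RUN=0` executes the two commands for real; by default it only prints them.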

4. Once Spark has started successfully, go to Spark's bin directory

[root@jzy1 ~]# cd /opt/soft/spark234/bin/

5. Run the following command in that directory

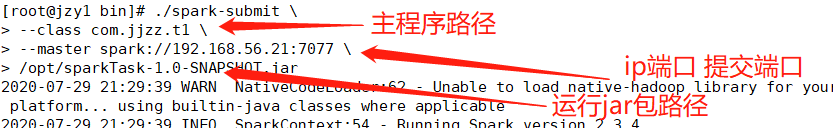

[root@jzy1 bin]# ./spark-submit \
> --class com.jjzz.t1 \
> --master spark://192.168.56.21:7077 \
> /opt/sparkTask-1.0-SNAPSHOT.jar

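Note: the command above submits to a standalone cluster. For local (single-machine) mode, --master local can be omitted, because spark-submit falls back to local mode when no master is configured. For YARN mode, --master yarn must be given explicitly; --deploy-mode client is the default, but stating it makes the intent clear.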
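The variants mentioned in the note can be compared side by side with a small sketch that only prints the resulting command line. The class name, jar path and standalone URL are taken from the command above; the function name `submit_cmd` and the mode keywords are illustrative. The local case leaves out `--master`, which assumes no master is set in `spark-defaults.conf`.

```shell
#!/usr/bin/env bash
# Sketch: print the spark-submit command for a given mode (nothing is executed).
MAIN_CLASS="com.jjzz.t1"
JAR="/opt/sparkTask-1.0-SNAPSHOT.jar"

submit_cmd() {
  local master_args
  case "$1" in
    local)      master_args="" ;;   # no --master flag at all
    standalone) master_args="--master spark://192.168.56.21:7077" ;;
    yarn)       master_args="--master yarn --deploy-mode client" ;;
    *) echo "unknown mode: $1" >&2; return 1 ;;
  esac
  # ${master_args:+...} adds the flags plus a trailing space only when non-empty
  echo "./spark-submit --class $MAIN_CLASS ${master_args:+$master_args }$JAR"
}

submit_cmd local
submit_cmd standalone
submit_cmd yarn
```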

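Copyright notice: This is an original article by weixin_42487460, licensed under CC 4.0 BY-SA. When reposting, please include a link to the original source and this notice.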