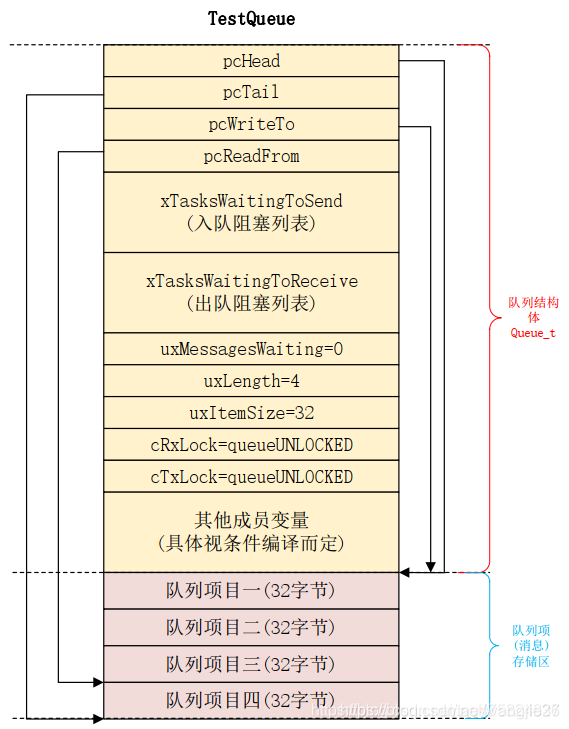

1. The key structure behind a queue: xQUEUE (Queue_t)

2. Enqueue-related APIs:

xQueueSendToFront

xQueueSendToBack

xQueueSend

xQueueOverwrite

All of these end up calling xQueueGenericSend, which in turn calls prvCopyDataToQueue to perform the actual copy.
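To make the call chain concrete, here is a minimal sketch of how the four entry points differ only in the position argument they pass down. All `demo_` names and the stub body are hypothetical stand-ins, not the kernel's code; the real constants are queueSEND_TO_BACK, queueSEND_TO_FRONT and queueOVERWRITE, and xQueueOverwrite passes a block time of 0.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical stand-ins for queueSEND_TO_BACK, queueSEND_TO_FRONT, queueOVERWRITE. */
enum demo_position { DEMO_SEND_TO_BACK = 0, DEMO_SEND_TO_FRONT = 1, DEMO_OVERWRITE = 2 };

/* The stub records its last arguments so the mapping can be inspected. */
static enum demo_position demo_last_position;
static unsigned demo_last_ticks;

/* Stub for the common send routine: it stores nothing, it only records the call. */
static int demo_generic_send( const void *item, unsigned ticks, enum demo_position pos )
{
    assert( item != NULL );
    demo_last_ticks = ticks;
    demo_last_position = pos;
    return 1;
}

/* The public names are thin macros over the one generic routine. */
#define demo_send_to_front( item, ticks ) demo_generic_send( ( item ), ( ticks ), DEMO_SEND_TO_FRONT )
#define demo_send_to_back( item, ticks )  demo_generic_send( ( item ), ( ticks ), DEMO_SEND_TO_BACK )
#define demo_send( item, ticks )          demo_generic_send( ( item ), ( ticks ), DEMO_SEND_TO_BACK )
#define demo_overwrite( item )            demo_generic_send( ( item ), 0, DEMO_OVERWRITE )
```

In this sketch, demo_send and demo_send_to_back are interchangeable, mirroring how xQueueSend and xQueueSendToBack both enqueue at the back.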

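prvCopyDataToQueue copies pvItemToQueue into a different place in the queue depending on the xPosition argument, then updates pcWriteTo or pcReadFrom. For queueSEND_TO_BACK, the normal FIFO case, the item is copied to where pcWriteTo points and pcWriteTo is advanced by uxItemSize; if pcWriteTo reaches pcTail, it is set back to pcHead, which makes the buffer circular. For queueSEND_TO_FRONT, the item is copied to where pcReadFrom points and pcReadFrom is moved back by uxItemSize; if pcReadFrom falls below pcHead, it is set to the last item slot ( pxQueue->u.xQueue.pcTail - pxQueue->uxItemSize ), again wrapping around. queueOVERWRITE takes the same branch as queueSEND_TO_FRONT.

Of the two cases, advancing pcWriteTo for queueSEND_TO_BACK is the easy one to understand.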

static BaseType_t prvCopyDataToQueue( Queue_t * const pxQueue, const void *pvItemToQueue, const BaseType_t xPosition )
{
    BaseType_t xReturn = pdFALSE;
    UBaseType_t uxMessagesWaiting;

    /* This function is called from a critical section. */

    uxMessagesWaiting = pxQueue->uxMessagesWaiting;

    if( pxQueue->uxItemSize == ( UBaseType_t ) 0 )
    {
        #if ( configUSE_MUTEXES == 1 )
        {
            if( pxQueue->uxQueueType == queueQUEUE_IS_MUTEX )
            {
                /* The mutex is no longer being held. */
                xReturn = xTaskPriorityDisinherit( pxQueue->u.xSemaphore.xMutexHolder );
                pxQueue->u.xSemaphore.xMutexHolder = NULL;
            }
            else
            {
                mtCOVERAGE_TEST_MARKER();
            }
        }
        #endif /* configUSE_MUTEXES */
    }
    else if( xPosition == queueSEND_TO_BACK )
    {
        ( void ) memcpy( ( void * ) pxQueue->pcWriteTo, pvItemToQueue, ( size_t ) pxQueue->uxItemSize ); /*lint !e961 !e418 !e9087 MISRA exception as the casts are only redundant for some ports, plus previous logic ensures a null pointer can only be passed to memcpy() if the copy size is 0. Cast to void required by function signature and safe as no alignment requirement and copy length specified in bytes. */
        pxQueue->pcWriteTo += pxQueue->uxItemSize; /*lint !e9016 Pointer arithmetic on char types ok, especially in this use case where it is the clearest way of conveying intent. */
        if( pxQueue->pcWriteTo >= pxQueue->u.xQueue.pcTail ) /*lint !e946 MISRA exception justified as comparison of pointers is the cleanest solution. */
        {
            pxQueue->pcWriteTo = pxQueue->pcHead;
        }
        else
        {
            mtCOVERAGE_TEST_MARKER();
        }
    }
    else
    {
        ( void ) memcpy( ( void * ) pxQueue->u.xQueue.pcReadFrom, pvItemToQueue, ( size_t ) pxQueue->uxItemSize ); /*lint !e961 !e9087 !e418 MISRA exception as the casts are only redundant for some ports. Cast to void required by function signature and safe as no alignment requirement and copy length specified in bytes. Assert checks null pointer only used when length is 0. */
        pxQueue->u.xQueue.pcReadFrom -= pxQueue->uxItemSize;
        if( pxQueue->u.xQueue.pcReadFrom < pxQueue->pcHead ) /*lint !e946 MISRA exception justified as comparison of pointers is the cleanest solution. */
        {
            pxQueue->u.xQueue.pcReadFrom = ( pxQueue->u.xQueue.pcTail - pxQueue->uxItemSize );
        }
        else
        {
            mtCOVERAGE_TEST_MARKER();
        }

        if( xPosition == queueOVERWRITE )
        {
            if( uxMessagesWaiting > ( UBaseType_t ) 0 )
            {
                /* An item is not being added but overwritten, so subtract
                one from the recorded number of items in the queue so when
                one is added again below the number of recorded items remains
                correct. */
                --uxMessagesWaiting;
            }
            else
            {
                mtCOVERAGE_TEST_MARKER();
            }
        }
        else
        {
            mtCOVERAGE_TEST_MARKER();
        }
    }

    pxQueue->uxMessagesWaiting = uxMessagesWaiting + ( UBaseType_t ) 1;

    return xReturn;
}

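Why does pcReadFrom move backward for queueSEND_TO_FRONT?

pcReadFrom does not point at the next item to be read; it points at the slot of the item that was read most recently. On receive, prvCopyDataFromQueue first advances pcReadFrom by uxItemSize (wrapping to pcHead when it reaches pcTail) and only then copies the item out. The slot pcReadFrom currently points to has therefore already been consumed and is free, so queueSEND_TO_FRONT writes the new item there and then moves pcReadFrom back by one item. The next receive advances pcReadFrom onto the slot that was just written, so the item sent to the front is the first one read.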
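The pointer movement is easier to follow in a small model. The sketch below is a hypothetical miniature of the queue storage (4 one-byte items, `demo_` names throughout), not the kernel code: it leaves out mutexes, overwrite and blocking, and it wraps read_from before subtracting so that no pointer below head is ever formed.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

#define DEMO_LENGTH    4 /* number of item slots (uxLength) */
#define DEMO_ITEM_SIZE 1 /* bytes per item (uxItemSize) */

typedef struct
{
    char storage[ DEMO_LENGTH * DEMO_ITEM_SIZE ];
    char *head;      /* first byte of storage (pcHead) */
    char *tail;      /* one past the last byte (pcTail) */
    char *write_to;  /* next free slot at the back (pcWriteTo) */
    char *read_from; /* slot of the item read most recently (pcReadFrom) */
    size_t waiting;  /* number of queued items (uxMessagesWaiting) */
} demo_queue_t;

/* Empty queue: write at the first slot, "last read" is the last slot. */
static void demo_reset( demo_queue_t *q )
{
    q->head = q->storage;
    q->tail = q->head + DEMO_LENGTH * DEMO_ITEM_SIZE;
    q->write_to = q->head;
    q->read_from = q->head + ( DEMO_LENGTH - 1 ) * DEMO_ITEM_SIZE;
    q->waiting = 0;
}

/* Back: copy to write_to, then advance it, wrapping at tail. */
static void demo_send_back( demo_queue_t *q, const char *item )
{
    assert( q->waiting < DEMO_LENGTH );
    memcpy( q->write_to, item, DEMO_ITEM_SIZE );
    q->write_to += DEMO_ITEM_SIZE;
    if( q->write_to >= q->tail )
    {
        q->write_to = q->head;
    }
    q->waiting++;
}

/* Front: copy into the already-consumed slot, then step read_from back. */
static void demo_send_front( demo_queue_t *q, const char *item )
{
    assert( q->waiting < DEMO_LENGTH );
    memcpy( q->read_from, item, DEMO_ITEM_SIZE );
    if( q->read_from == q->head )
    {
        q->read_from = q->tail - DEMO_ITEM_SIZE;
    }
    else
    {
        q->read_from -= DEMO_ITEM_SIZE;
    }
    q->waiting++;
}

/* Receive: advance read_from first, wrapping at tail, then copy out. */
static void demo_receive( demo_queue_t *q, char *out )
{
    assert( q->waiting > 0 );
    q->read_from += DEMO_ITEM_SIZE;
    if( q->read_from >= q->tail )
    {
        q->read_from = q->head;
    }
    memcpy( out, q->read_from, DEMO_ITEM_SIZE );
    q->waiting--;
}
```

After demo_reset, sending "A" and "B" to the back and then "Z" to the front makes three successive demo_receive calls return Z, A, B: the front item lands in the last slot and read_from steps back onto the slot before it, so the next advance lands on Z.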

Analysis of the xQueueGenericSend function:

BaseType_t xQueueGenericSend( QueueHandle_t xQueue, const void * const pvItemToQueue, TickType_t xTicksToWait, const BaseType_t xCopyPosition )
{
    BaseType_t xEntryTimeSet = pdFALSE, xYieldRequired;
    TimeOut_t xTimeOut;
    Queue_t * const pxQueue = ( Queue_t * ) xQueue;

    configASSERT( pxQueue );
    configASSERT( !( ( pvItemToQueue == NULL ) && ( pxQueue->uxItemSize != ( UBaseType_t ) 0U ) ) );
    configASSERT( !( ( xCopyPosition == queueOVERWRITE ) && ( pxQueue->uxLength != 1 ) ) );
    #if ( ( INCLUDE_xTaskGetSchedulerState == 1 ) || ( configUSE_TIMERS == 1 ) )
    {
        configASSERT( !( ( xTaskGetSchedulerState() == taskSCHEDULER_SUSPENDED ) && ( xTicksToWait != 0 ) ) );
    }
    #endif

    /* This function relaxes the coding standard somewhat to allow return
    statements within the function itself. This is done in the interest
    of execution time efficiency. */
    for( ;; )
    {
        taskENTER_CRITICAL();
        {
            /* Is there room on the queue now? The running task must be the
            highest priority task wanting to access the queue. If the head item
            in the queue is to be overwritten then it does not matter if the
            queue is full. */
            if( ( pxQueue->uxMessagesWaiting < pxQueue->uxLength ) || ( xCopyPosition == queueOVERWRITE ) )
            {
                traceQUEUE_SEND( pxQueue );
                xYieldRequired = prvCopyDataToQueue( pxQueue, pvItemToQueue, xCopyPosition );

                #if ( configUSE_QUEUE_SETS == 1 )
                {
                    if( pxQueue->pxQueueSetContainer != NULL )
                    {
                        if( prvNotifyQueueSetContainer( pxQueue, xCopyPosition ) != pdFALSE )
                        {
                            /* The queue is a member of a queue set, and posting
                            to the queue set caused a higher priority task to
                            unblock. A context switch is required. */
                            queueYIELD_IF_USING_PREEMPTION();
                        }
                        else
                        {
                            mtCOVERAGE_TEST_MARKER();
                        }
                    }
                    else
                    {
                        /* If there was a task waiting for data to arrive on the
                        queue then unblock it now. */
                        if( listLIST_IS_EMPTY( &( pxQueue->xTasksWaitingToReceive ) ) == pdFALSE )
                        {
                            if( xTaskRemoveFromEventList( &( pxQueue->xTasksWaitingToReceive ) ) != pdFALSE )
                            {
                                /* The unblocked task has a priority higher than
                                our own so yield immediately. Yes it is ok to
                                do this from within the critical section - the
                                kernel takes care of that. */
                                queueYIELD_IF_USING_PREEMPTION();
                            }
                            else
                            {
                                mtCOVERAGE_TEST_MARKER();
                            }
                        }
                        else if( xYieldRequired != pdFALSE )
                        {
                            /* This path is a special case that will only get
                            executed if the task was holding multiple mutexes
                            and the mutexes were given back in an order that is
                            different to that in which they were taken. */
                            queueYIELD_IF_USING_PREEMPTION();
                        }
                        else
                        {
                            mtCOVERAGE_TEST_MARKER();
                        }
                    }
                }
                #else /* configUSE_QUEUE_SETS */
                {
                    /* If there was a task waiting for data to arrive on the
                    queue then unblock it now. */
                    if( listLIST_IS_EMPTY( &( pxQueue->xTasksWaitingToReceive ) ) == pdFALSE )
                    {
                        if( xTaskRemoveFromEventList( &( pxQueue->xTasksWaitingToReceive ) ) != pdFALSE )
                        {
                            /* The unblocked task has a priority higher than
                            our own so yield immediately. Yes it is ok to do
                            this from within the critical section - the kernel
                            takes care of that. */
                            queueYIELD_IF_USING_PREEMPTION();
                        }
                        else
                        {
                            mtCOVERAGE_TEST_MARKER();
                        }
                    }
                    else if( xYieldRequired != pdFALSE )
                    {
                        /* This path is a special case that will only get
                        executed if the task was holding multiple mutexes and
                        the mutexes were given back in an order that is
                        different to that in which they were taken. */
                        queueYIELD_IF_USING_PREEMPTION();
                    }
                    else
                    {
                        mtCOVERAGE_TEST_MARKER();
                    }
                }
                #endif /* configUSE_QUEUE_SETS */

                taskEXIT_CRITICAL();
                return pdPASS;
            }
            else
            {
                if( xTicksToWait == ( TickType_t ) 0 )
                {
                    /* The queue was full and no block time is specified (or
                    the block time has expired) so leave now. */
                    taskEXIT_CRITICAL();

                    /* Return to the original privilege level before exiting
                    the function. */
                    traceQUEUE_SEND_FAILED( pxQueue );
                    return errQUEUE_FULL;
                }
                else if( xEntryTimeSet == pdFALSE )
                {
                    /* The queue was full and a block time was specified so
                    configure the timeout structure. */
                    vTaskSetTimeOutState( &xTimeOut );
                    xEntryTimeSet = pdTRUE;
                }
                else
                {
                    /* Entry time was already set. */
                    mtCOVERAGE_TEST_MARKER();
                }
            }
        }
        taskEXIT_CRITICAL();

        /* Interrupts and other tasks can send to and receive from the queue
        now the critical section has been exited. */

        vTaskSuspendAll();
        prvLockQueue( pxQueue );

        /* Update the timeout state to see if it has expired yet. */
        if( xTaskCheckForTimeOut( &xTimeOut, &xTicksToWait ) == pdFALSE )
        {
            if( prvIsQueueFull( pxQueue ) != pdFALSE )
            {
                traceBLOCKING_ON_QUEUE_SEND( pxQueue );
                vTaskPlaceOnEventList( &( pxQueue->xTasksWaitingToSend ), xTicksToWait );

                /* Unlocking the queue means queue events can affect the
                event list. It is possible that interrupts occurring now
                remove this task from the event list again - but as the
                scheduler is suspended the task will go onto the pending
                ready list instead of the actual ready list. */
                prvUnlockQueue( pxQueue );

                /* Resuming the scheduler will move tasks from the pending
                ready list into the ready list - so it is feasible that this
                task is already in a ready list before it yields - in which
                case the yield will not cause a context switch unless there
                is also a higher priority task in the pending ready list. */
                if( xTaskResumeAll() == pdFALSE )
                {
                    portYIELD_WITHIN_API();
                }
            }
            else
            {
                /* Try again. */
                prvUnlockQueue( pxQueue );
                ( void ) xTaskResumeAll();
            }
        }
        else
        {
            /* The timeout has expired. */
            prvUnlockQueue( pxQueue );
            ( void ) xTaskResumeAll();

            traceQUEUE_SEND_FAILED( pxQueue );
            return errQUEUE_FULL;
        }
    }
}

xQueueGenericSend code flowchart analysis:
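As a rough condensation of the flow in xQueueGenericSend, the sketch below models a single pass through the critical section at the top of the loop. It is only an illustration under simplifying assumptions: the `demo_` names are hypothetical, the queue is reduced to an item counter, and timeouts, scheduler suspension, event lists and yielding are left out.

```c
#include <assert.h>

/* Hypothetical outcomes of one pass through the critical section. */
typedef enum
{
    DEMO_SENT,       /* item stored, the real function returns pdPASS */
    DEMO_FAIL_FULL,  /* full and no block time, the real function returns errQUEUE_FULL */
    DEMO_MUST_BLOCK  /* full with a block time, the caller would wait and retry */
} demo_outcome_t;

static demo_outcome_t demo_send_attempt( unsigned *waiting, unsigned length,
                                         int overwrite, unsigned ticks_to_wait )
{
    assert( waiting != 0 );

    /* Room available, or overwriting, in which case fullness does not matter. */
    if( ( *waiting < length ) || overwrite )
    {
        /* Overwriting replaces an existing item, so the count must not grow. */
        if( overwrite && ( *waiting > 0 ) )
        {
            --( *waiting );
        }
        ++( *waiting );
        return DEMO_SENT;
    }

    /* Queue full: give up at once when no block time was requested. */
    if( ticks_to_wait == 0 )
    {
        return DEMO_FAIL_FULL;
    }

    return DEMO_MUST_BLOCK;
}
```

With a length-1 queue, a second plain send with ticks_to_wait of 0 fails at once, while an overwrite succeeds and leaves the item count at 1.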

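3. pcTail points to the byte immediately after the end of the queue storage area, so what it points to no longer belongs to the queue. pcHead points to the first item of the queue, which is also the first byte of the storage area. The assignment pxQueue->u.xQueue.pcTail = pxQueue->pcHead + ( pxQueue->uxLength * pxQueue->uxItemSize ); makes this visible. For example, take a queue whose storage starts at address 0x10, with 4 items of 2 bytes each: 0x10 and 0x11 hold the first item, 0x12 and 0x13 the second, 0x14 and 0x15 the third, and 0x16 and 0x17 the fourth. Then pcHead = 0x10 and pcTail = pcHead + 4 * 2 = 0x18, an address that lies outside the queue.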
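The address arithmetic in this example can be checked with a short sketch. The helper names are hypothetical and plain integers stand in for the pointers; only the formula head + length * item size comes from the text above.

```c
#include <assert.h>
#include <stdint.h>

/* Address one past the end of the storage: head + length * item_size. */
static uintptr_t demo_tail_address( uintptr_t head, unsigned length, unsigned item_size )
{
    assert( item_size > 0 );
    return head + ( uintptr_t ) length * item_size;
}

/* Start address of the item at a 0-based index. */
static uintptr_t demo_item_address( uintptr_t head, unsigned index, unsigned item_size )
{
    assert( item_size > 0 );
    return head + ( uintptr_t ) index * item_size;
}
```

For head 0x10, 4 items and 2 bytes per item, demo_tail_address gives 0x18, while the fourth item (index 3) starts at 0x16 and ends at 0x17, one byte before the tail.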

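4. xQueueSend is a macro that calls xQueueGenericSend with queueSEND_TO_BACK:

#define xQueueSend( xQueue, pvItemToQueue, xTicksToWait ) \
    xQueueGenericSend( ( xQueue ), ( pvItemToQueue ), ( xTicksToWait ), queueSEND_TO_BACK )

Inside xQueueGenericSend, the copy is delegated to prvCopyDataToQueue:

xYieldRequired = prvCopyDataToQueue( pxQueue, pvItemToQueue, xCopyPosition );

The listing below is the same function written with different Queue_t field names (pxQueue->pcTail, pxQueue->u.pcReadFrom, pxQueue->pxMutexHolder in place of the u.xQueue and u.xSemaphore members); the logic is identical.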

static BaseType_t prvCopyDataToQueue( Queue_t * const pxQueue, const void *pvItemToQueue, const BaseType_t xPosition )
{
    BaseType_t xReturn = pdFALSE;
    UBaseType_t uxMessagesWaiting;

    /* This function is called from a critical section. */

    uxMessagesWaiting = pxQueue->uxMessagesWaiting;

    if( pxQueue->uxItemSize == ( UBaseType_t ) 0 )
    {
        #if ( configUSE_MUTEXES == 1 )
        {
            if( pxQueue->uxQueueType == queueQUEUE_IS_MUTEX )
            {
                /* The mutex is no longer being held. */
                xReturn = xTaskPriorityDisinherit( ( void * ) pxQueue->pxMutexHolder );
                pxQueue->pxMutexHolder = NULL;
            }
            else
            {
                mtCOVERAGE_TEST_MARKER();
            }
        }
        #endif /* configUSE_MUTEXES */
    }
    else if( xPosition == queueSEND_TO_BACK )
    {
        ( void ) memcpy( ( void * ) pxQueue->pcWriteTo, pvItemToQueue, ( size_t ) pxQueue->uxItemSize ); /*lint !e961 !e418 MISRA exception as the casts are only redundant for some ports, plus previous logic ensures a null pointer can only be passed to memcpy() if the copy size is 0. */
        pxQueue->pcWriteTo += pxQueue->uxItemSize;
        if( pxQueue->pcWriteTo >= pxQueue->pcTail ) /*lint !e946 MISRA exception justified as comparison of pointers is the cleanest solution. */
        {
            pxQueue->pcWriteTo = pxQueue->pcHead;
        }
        else
        {
            mtCOVERAGE_TEST_MARKER();
        }
    }
    else
    {
        ( void ) memcpy( ( void * ) pxQueue->u.pcReadFrom, pvItemToQueue, ( size_t ) pxQueue->uxItemSize ); /*lint !e961 MISRA exception as the casts are only redundant for some ports. */
        pxQueue->u.pcReadFrom -= pxQueue->uxItemSize;
        if( pxQueue->u.pcReadFrom < pxQueue->pcHead ) /*lint !e946 MISRA exception justified as comparison of pointers is the cleanest solution. */
        {
            pxQueue->u.pcReadFrom = ( pxQueue->pcTail - pxQueue->uxItemSize );
        }
        else
        {
            mtCOVERAGE_TEST_MARKER();
        }

        if( xPosition == queueOVERWRITE )
        {
            if( uxMessagesWaiting > ( UBaseType_t ) 0 )
            {
                /* An item is not being added but overwritten, so subtract
                one from the recorded number of items in the queue so when
                one is added again below the number of recorded items remains
                correct. */
                --uxMessagesWaiting;
            }
            else
            {
                mtCOVERAGE_TEST_MARKER();
            }
        }
        else
        {
            mtCOVERAGE_TEST_MARKER();
        }
    }

    pxQueue->uxMessagesWaiting = uxMessagesWaiting + 1;

    return xReturn;
}