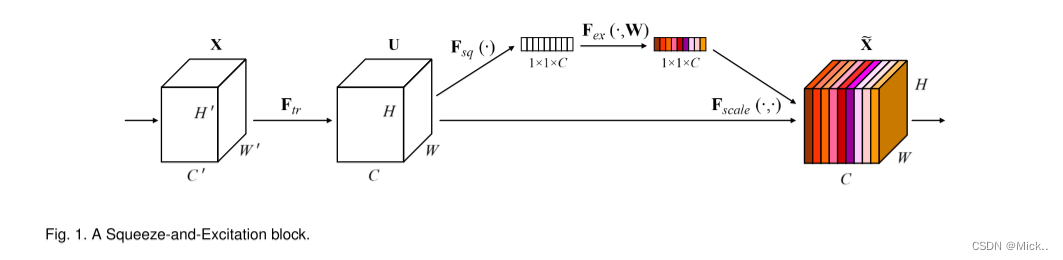

卷积神经网络的核心是卷积算子。卷积通过一组滤波器沿着通道维度获取局部空间和通道信息。通过叠加卷积层和非线性激活函数来得到全局感受野。目前研究的主题还是寻找更加高效的特征表示。最近的一些研究也集成了学习机制到cnn中来捕获特征之间的空间关系。比如inception架构,加入了多尺度处理,实现更好的效果。进一步的工作寻求更好地建模空间依赖性 [7],[8],并将空间注意力纳入网络结构 [9]。

class SELayer(nn.Module):

def __init__(self, channel, reduction=16):

super(SELayer, self).__init__()

self.avg_pool = nn.AdaptiveAvgPool2d(1)

self.fc = nn.Sequential(

nn.Linear(channel, channel // reduction, bias=False),

nn.ReLU(inplace=True),

nn.Linear(channel // reduction, channel, bias=False),

nn.Sigmoid()

)

def forward(self, x):

b, c, _, _ = x.size()

y = self.avg_pool(x).view(b, c)

y = self.fc(y).view(b, c, 1, 1)

return x * y.expand_as(x)

class SEBottleneck(nn.Module):

expansion = 4

def __init__(self, inplanes, planes, stride=1, downsample=None, reduction=16):

super(SEBottleneck, self).__init__()

self.conv1 = nn.Conv2d(inplanes, planes, kernel_size=1, bias=False)

self.bn1 = nn.BatchNorm2d(planes)

self.conv2 = nn.Conv2d(planes, planes, kernel_size=3, stride=stride,

padding=1, bias=False)

self.bn2 = nn.BatchNorm2d(planes)

self.conv3 = nn.Conv2d(planes, planes * 4, kernel_size=1, bias=False)

self.bn3 = nn.BatchNorm2d(planes * 4)

self.relu = nn.ReLU(inplace=True)

self.se = SELayer(planes * 4, reduction)

self.downsample = downsample

self.stride = stride

def forward(self, x):

residual = x

out = self.conv1(x)

out = self.bn1(out)

out = self.relu(out)

out = self.conv2(out)

out = self.bn2(out)

out = self.relu(out)

out = self.conv3(out)

out = self.bn3(out)

out = self.se(out)

if self.downsample is not None:

residual = self.downsample(x)

out += residual

out = self.relu(out)

return out参考文献

senet.pytorch/se_resnet.py at master · moskomule/senet.pytorch (github.com)

版权声明:本文为qq_40107571原创文章,遵循CC 4.0 BY-SA版权协议,转载请附上原文出处链接和本声明。