将分区表中的数据导入mysql

- 创建mysql表

create table if not exists test.test_product_id (

product_id bigint(11) comment "商品ID",

product_name varchar(255) comment "商品名称",

settle_price decimal(20, 2) comment "结算价格",

product_main_id bigint(11) comment "主商品ID",

product_brand_id bigint(11) comment "品牌ID",

p_day date comment '分区日期'

) comment '商品表';

- 导出脚本

sqoop export \

-Dmapreduce.job.queuename=crm \

-Dmapreduce.job.max.split.locations=2000 \

--mapreduce-job-name tmp_constellation \

--connect jdbc:mysql://localhost:3306/test \

--username username\

--password password\

--table test_product_id \

--columns product_id,product_name,settle_price,product_main_id,product_brand_id,p_day \

--hcatalog-database secoo_dim \

--hcatalog-table dim_product_basic_p_day \

--hcatalog-partition-keys p_day \

--hcatalog-partition-values 2020-06-09 \

-m 8

测试null-string和null-non-string参数

不使用null-string和null-non-string参数

- 准备测试环境

-- 新建hive表,并构造测试数据

create table if not exists tmp.tmp_test_sqoop_hcatalog (

user_id bigint,

user_name string,

age int,

score double

) comment ''

stored as parquet;

--构造数据

insert overwrite table tmp.tmp_test_sqoop_hcatalog

select 110, 'ray', 20, 3.14

union all select 111, 'kay', 30, 5.21

union all select 113, 'jay', 22, 3.22

union all select 114, 'hay', 22, 5.23

union all select 115, null, null, null;

--建立mysql表

create table if not exists test.tmp_test_sqoop_hcatalog (

user_id bigint(11),

user_name varchar(255),

age int(11),

score double

) comment '';

- 导数脚本

sqoop export \

-Dmapreduce.job.queuename=crm \

-Dmapreduce.job.max.split.locations=2000 \

--mapreduce-job-name tmp_constellation \

--connect jdbc:mysql://localhost:3306/test \

--username username\

--password password\

--table tmp_test_sqoop_hcatalog \

--columns user_id,user_name,age,score \

--hcatalog-database tmp \

--hcatalog-table tmp_test_sqoop_hcatalog \

-m 2

- 验证数据

mysql> select * from test.tmp_test_sqoop_hcatalog;

+---------+-----------+------+-------+

| user_id | user_name | age | score |

+---------+-----------+------+-------+

| 110 | ray | 20 | 3.14 |

| 111 | kay | 30 | 5.21 |

| 113 | jay | 22 | 3.22 |

| 114 | hay | 22 | 5.23 |

| 115 | NULL | NULL | NULL |

+---------+-----------+------+-------+

5 rows in set (0.00 sec)

- 结论,由上述数据可知,

hcatalog可以自动将hive表中的null值转换为mysql中对应的null值,不需要单独使用--input-null-string和--input-null-non-string参数了。

使用null-string和null-non-string参数

sqoop export \

-Dmapreduce.job.queuename=crm \

-Dmapreduce.job.max.split.locations=2000 \

--mapreduce-job-name tmp_constellation \

--connect jdbc:mysql://locahost:3306/test \

--username username \

--password password\

--table tmp_test_sqoop_hcatalog \

--columns user_id,user_name,age,score \

--hcatalog-database tmp \

--hcatalog-table tmp_test_sqoop_hcatalog \

--input-null-string 'world' \

--input-null-non-string 'hello' \

-m 2

- 验证数据

mysql> select * from test.tmp_test_sqoop_hcatalog;

+---------+-----------+------+-------+

| user_id | user_name | age | score |

+---------+-----------+------+-------+

| 110 | ray | 20 | 3.14 |

| 111 | kay | 30 | 5.21 |

| 113 | jay | 22 | 3.22 |

| 114 | hay | 22 | 5.23 |

| 115 | NULL | NULL | NULL |

+---------+-----------+------+-------+

- 结论,

hcatalog不支持null-string和null-non-string参数,其内部自己做了null-string和null-non-string处理。

测试-m参数

- 准备数据

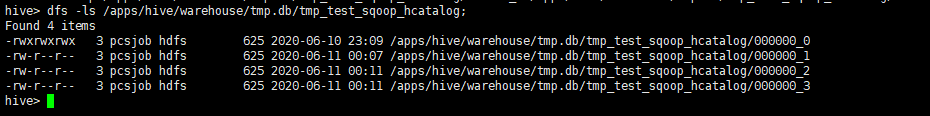

如上图所示,表tmp.tmp_test_sqoop_hcatalog下有4个文件

不使用-m参数,使用默认值

- 导数脚本

sqoop export \

-Dmapreduce.job.queuename=crm \

-Dmapreduce.job.max.split.locations=2000 \

--mapreduce-job-name tmp_constellation \

--connect jdbc:mysql://localhost:3306/test \

--username username\

--password password\

--table tmp_test_sqoop_hcatalog \

--columns user_id,user_name,age,score \

--hcatalog-database tmp \

--hcatalog-table tmp_test_sqoop_hcatalog

- 结论,由上图可知,当使用

hcatalog导出数据时,默认是根据文件的个数和文件的大小来决定map的数量的,map数量的计算公式与mr中mapper任务的计算公式相同。

使用-m参数

- 导数脚本

sqoop export \

-Dmapreduce.job.queuename=crm \

-Dmapreduce.job.max.split.locations=2000 \

--mapreduce-job-name tmp_constellation \

--connect jdbc:mysql://localhost:3306/test \

--username username\

--password password\

--table tmp_test_sqoop_hcatalog \

--columns user_id,user_name,age,score \

--hcatalog-database tmp \

--hcatalog-table tmp_test_sqoop_hcatalog \

-m 2

- 结论,由上图可知

map的数量与指定的值相同,都是2,-m参数是生效的。

测试导入源数据使用逗号分隔的数据

- 准备数据

--创建hive表

create table if not exists tmp.tmp_test_sqoop_hcatalog_01 (

user_id bigint,

user_name string,

age int,

score double

) comment ''

row format delimited

fields terminated by ','

lines terminated by '\n';

--生成数据

insert overwrite table tmp.tmp_test_sqoop_hcatalog_01

select 110, 'ray', 20, 3.14

union all select 111, 'kay', 30, 5.21

union all select 113, 'jay', 22, 3.22

union all select 114, 'hay', 22, 5.23

union all select 115, null, null, null;

--创建mysql表

create table if not exists test.tmp_test_sqoop_hcatalog_01 (

user_id bigint(11),

user_name varchar(255),

age int(11),

score double

) comment '';

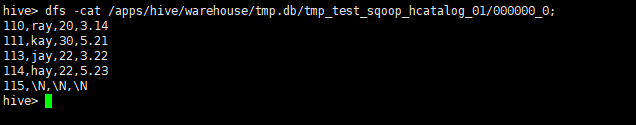

源数据是用逗号分隔的

- 导数脚本

sqoop export \

-Dmapreduce.job.queuename=crm \

-Dmapreduce.job.max.split.locations=2000 \

--mapreduce-job-name tmp_constellation \

--connect jdbc:mysql://localhost:3306/test \

--username username\

--password password\

--table tmp_test_sqoop_hcatalog_01 \

--columns user_id,user_name,age,score \

--hcatalog-database tmp \

--hcatalog-table tmp_test_sqoop_hcatalog_01 \

-m 2

- 结论,

hcatalog可以导入以逗号,或者其他分隔符分隔的数据,不需要使用input-null-non-string和input-null-string指定分隔符。

遇到的问题

导出过程中有警告信息:WARN split.JobSplitWriter: Max block location exceeded for split: org.apache.sqoop.mapreduce.hcat.SqoopHCatInputSplit@50580f9b splitsize: 22 maxsize: 10

原因分析

hive任务中间数据产生大量小文件,导致split超过了maxsize,引起了任务失败解决办法

--增加maxBlockLocations的大小,默认为10

set mapreduce.job.max.split.locations=200000;

# 在sqoop中添加参数

-Dmapreduce.job.max.split.locations=200000

为什么hadoop要设置maxBlockLocations呢?

what’s the recommended value of mapreduce.job.max.split.locations ?

This configuration is involved since MR v1. It serves as an up limit for DN locations of job split which intend to protect the JobTracker from overloaded by jobs with huge numbers of split locations. For YARN in Hadoop 2, this concern is lessened as we have per job AM instead of JT. However, it will still impact RM as RM will potentially see heavy request from the AM which tries to obtain many localities for the split. With hitting this limit, it will truncate location number to given limit with sacrifice a bit data locality but get rid of the risk to hit bottleneck of RM.

Depends on your job’s priority (I believer it is a per job configuration now), you can leave it as a default (for lower or normal priority job) or increase to a larger number. Increase this value to larger than DN number will be the same impact as set it to DN’s number.