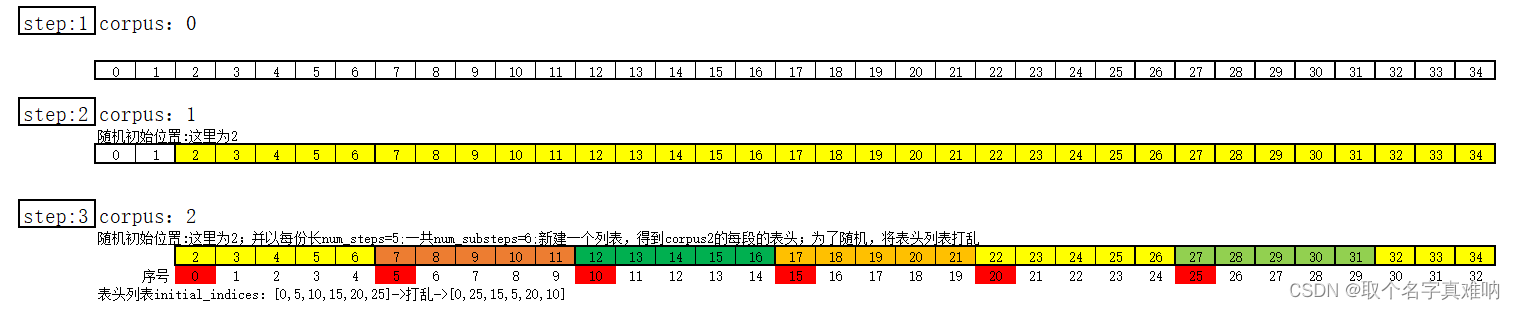

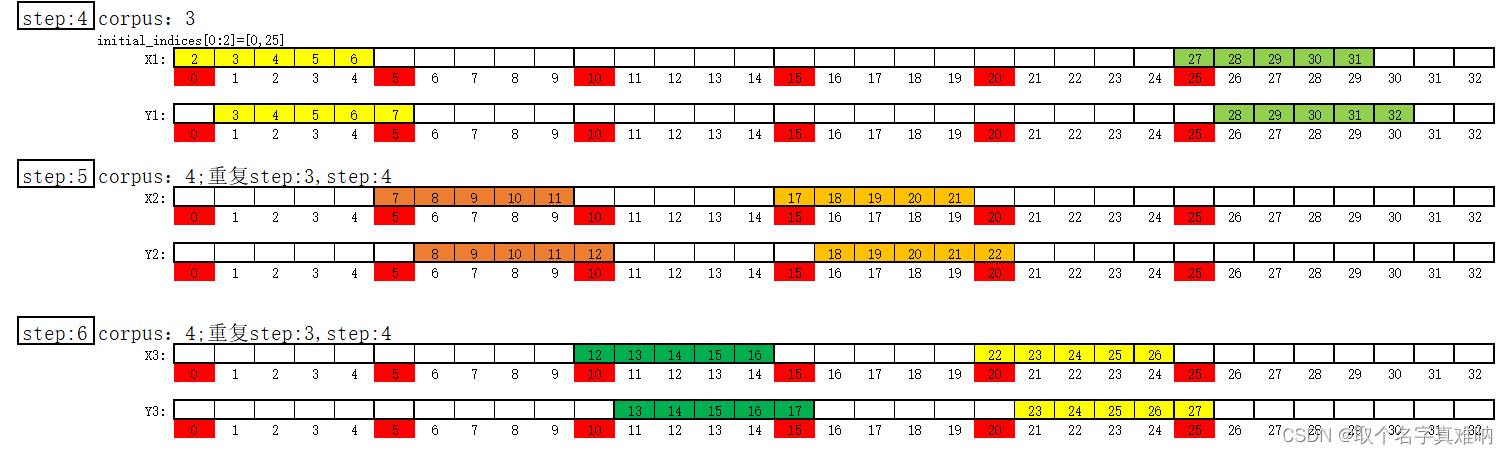

1. Random sampling

1.1 Diagram

1.2 Code

    import torch
    from d2l import torch as d2l
    import random


    def seq_data_iter_random(corpus, batch_size, num_steps):
        # Drop a random number of leading tokens (0 to num_steps - 1) so that
        # the corpus is cut at a different position on each run
        corpus = corpus[random.randint(0, num_steps - 1):]
        # Subtract 1 because every input needs a label one position later
        num_subseqs = (len(corpus) - 1) // num_steps
        # Start index of each subsequence, e.g. [0, 5, 10, 15, 20, 25]
        initial_indices = list(range(0, num_subseqs * num_steps, num_steps))
        # Shuffle the start indices, e.g. [5, 25, 0, 10, 20, 15]
        random.shuffle(initial_indices)

        def data(pos):
            # Return the num_steps tokens of corpus starting at index pos
            return corpus[pos:pos + num_steps]

        # In the example below: batch_size = 2, so num_batches = 3
        num_batches = num_subseqs // batch_size
        # i takes the values 0, 2, 4
        for i in range(0, batch_size * num_batches, batch_size):
            # Start indices of the batch_size subsequences in this minibatch
            initial_indices_per_batch = initial_indices[i:i + batch_size]
            X = [data(j) for j in initial_indices_per_batch]
            Y = [data(j + 1) for j in initial_indices_per_batch]
            yield torch.tensor(X), torch.tensor(Y)


    my_seq = list(range(35))
    for X, Y in seq_data_iter_random(my_seq, batch_size=2, num_steps=5):
        print('X:', X, '\nY', Y)

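1.3 Results

- The offset and the subsequence order are random on every run, so the output will normally differ from the diagram, but the logic is the same.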

    X: tensor([[23, 24, 25, 26, 27],
            [13, 14, 15, 16, 17]])
    Y tensor([[24, 25, 26, 27, 28],
            [14, 15, 16, 17, 18]])
    X: tensor([[ 3,  4,  5,  6,  7],
            [18, 19, 20, 21, 22]])
    Y tensor([[ 4,  5,  6,  7,  8],
            [19, 20, 21, 22, 23]])
    X: tensor([[ 8,  9, 10, 11, 12],
            [28, 29, 30, 31, 32]])
    Y tensor([[ 9, 10, 11, 12, 13],
            [29, 30, 31, 32, 33]])
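
The counts noted in the code comments (6 subsequences, 3 minibatches) can be checked by hand. The sketch below is only an illustration: `count_random_batches` is a hypothetical helper, not part of d2l, and it simply repeats the integer arithmetic of `seq_data_iter_random` for every offset the function could draw.

```python
def count_random_batches(corpus_len, offset, batch_size, num_steps):
    """Repeat the integer arithmetic of seq_data_iter_random for one offset.

    Hypothetical helper for illustration only; it is not part of d2l.
    """
    remaining = corpus_len - offset
    # One token is held back so that the last label in Y still exists.
    num_subseqs = (remaining - 1) // num_steps
    num_batches = num_subseqs // batch_size
    return num_subseqs, num_batches


# The offset is drawn from 0..num_steps-1, so try every possible value.
for offset in range(5):
    print(offset, count_random_batches(35, offset, batch_size=2, num_steps=5))
```

For a corpus of 35 tokens with `num_steps=5` and `batch_size=2`, every offset gives `(6, 3)`, which is why the run above always prints three pairs of `X` and `Y`.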

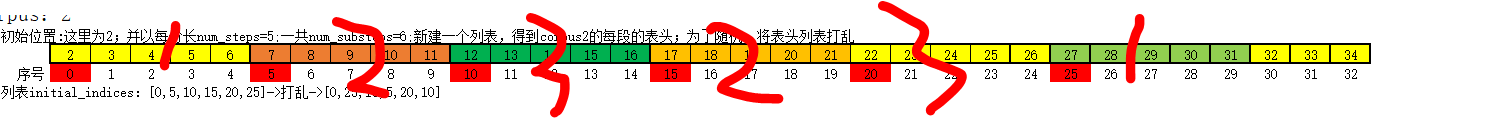

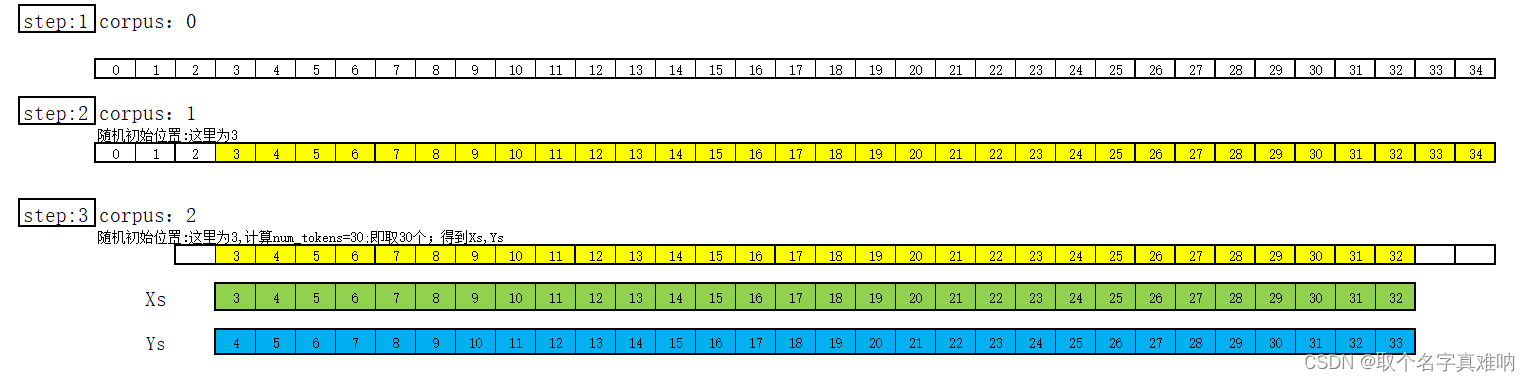

2. Sequential sampling

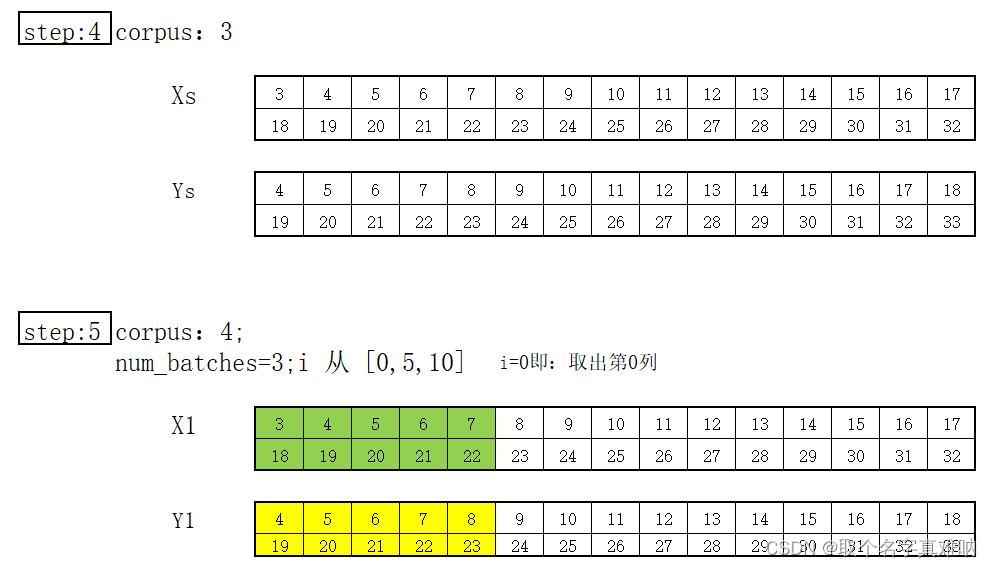

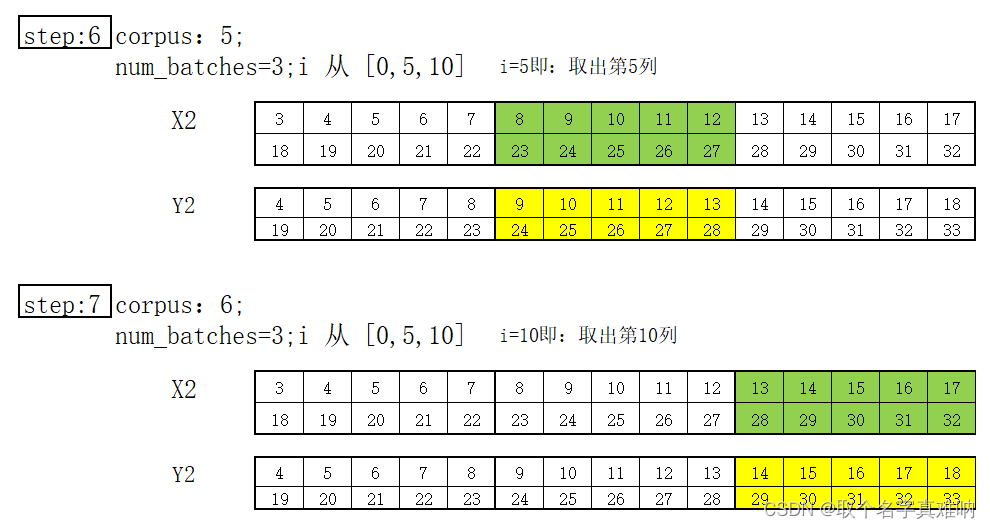

2.1 Diagram

2.2 Code

    def seq_data_iter_sequential(corpus, batch_size, num_steps):
        # Random starting offset for the split, which helps generalization.
        # The values in the comments below assume offset = 2.
        offset = random.randint(0, num_steps)
        # num_tokens = 32
        num_tokens = ((len(corpus) - offset - 1) // batch_size) * batch_size
        # Xs = [2, 3, ..., 33]; Ys = [3, 4, ..., 34]
        Xs = torch.tensor(corpus[offset:offset + num_tokens])
        Ys = torch.tensor(corpus[offset + 1:offset + 1 + num_tokens])
        # Reshape into batch_size rows:
        # Xs, shape (2, 16): [[2, 3, ..., 17], [18, 19, ..., 33]]
        # Ys, shape (2, 16): [[3, 4, ..., 18], [19, 20, ..., 34]]
        Xs, Ys = Xs.reshape(batch_size, -1), Ys.reshape(batch_size, -1)
        # Xs.shape[1] = 16 and num_steps = 5, so num_batches = 3
        num_batches = Xs.shape[1] // num_steps
        # i takes the values 0, 5, 10
        for i in range(0, num_steps * num_batches, num_steps):
            # i = 0:  X = [[ 2..6 ], [18..22]], Y = [[ 3..7 ], [19..23]]
            # i = 5:  X = [[ 7..11], [23..27]], Y = [[ 8..12], [24..28]]
            # i = 10: X = [[12..16], [28..32]], Y = [[13..17], [29..33]]
            X = Xs[:, i:i + num_steps]
            Y = Ys[:, i:i + num_steps]
            yield X, Y


    # my_seq = list(range(35)), as defined in section 1.2
    for X, Y in seq_data_iter_sequential(my_seq, batch_size=2, num_steps=5):
        print('X:', X, '\nY:', Y)

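2.3 Results

- The starting offset is random on every run, so the output will normally differ from the diagram, but the logic is the same. The run below corresponds to offset = 2.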

    X: tensor([[ 2,  3,  4,  5,  6],
            [18, 19, 20, 21, 22]])
    Y: tensor([[ 3,  4,  5,  6,  7],
            [19, 20, 21, 22, 23]])
    X: tensor([[ 7,  8,  9, 10, 11],
            [23, 24, 25, 26, 27]])
    Y: tensor([[ 8,  9, 10, 11, 12],
            [24, 25, 26, 27, 28]])
    X: tensor([[12, 13, 14, 15, 16],
            [28, 29, 30, 31, 32]])
    Y: tensor([[13, 14, 15, 16, 17],
            [29, 30, 31, 32, 33]])
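
What distinguishes sequential sampling is that each row of one minibatch continues exactly where the same row of the previous minibatch stopped. The following is a minimal sketch of that property using plain lists instead of tensors. The function name `sequential_batches` and its explicit `offset` parameter are assumptions made here so the result is reproducible; the original function draws the offset at random.

```python
def sequential_batches(corpus, batch_size, num_steps, offset):
    """List-based sketch of sequential partitioning with a fixed offset.

    Hypothetical helper for illustration; not part of d2l.
    """
    num_tokens = ((len(corpus) - offset - 1) // batch_size) * batch_size
    xs = corpus[offset:offset + num_tokens]
    ys = corpus[offset + 1:offset + 1 + num_tokens]
    # Split the flat sequences into batch_size rows of equal length,
    # which mirrors reshape(batch_size, -1) on a tensor.
    row_len = num_tokens // batch_size
    x_rows = [xs[r * row_len:(r + 1) * row_len] for r in range(batch_size)]
    y_rows = [ys[r * row_len:(r + 1) * row_len] for r in range(batch_size)]
    num_batches = row_len // num_steps
    for i in range(0, num_steps * num_batches, num_steps):
        yield ([row[i:i + num_steps] for row in x_rows],
               [row[i:i + num_steps] for row in y_rows])


batches = list(sequential_batches(list(range(35)), batch_size=2,
                                  num_steps=5, offset=2))

# Compare the last token of each row with the first token of the same row
# in the next minibatch: they are always consecutive.
for (x_prev, _), (x_next, _) in zip(batches, batches[1:]):
    print([row[-1] for row in x_prev], '->', [row[0] for row in x_next])
```

With `offset=2` the three minibatches match the output listed in the results, and the printed pairs show row 0 going from 6 to 7 and row 1 from 22 to 23, and so on. Random sampling gives no such guarantee, because the start indices are shuffled.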

3. Wrapping in a class

    class SeqDataLoader:
        def __init__(self, batch_size, num_steps, use_random_iter, max_tokens):
            # Choose the sampling strategy: random or sequential
            if use_random_iter:
                self.data_iter_fn = d2l.seq_data_iter_random
            else:
                self.data_iter_fn = d2l.seq_data_iter_sequential
            self.corpus, self.vocab = d2l.load_corpus_time_machine(max_tokens)
            self.batch_size, self.num_steps = batch_size, num_steps

        def __iter__(self):
            return self.data_iter_fn(self.corpus, self.batch_size, self.num_steps)
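
Because `__iter__` builds a new generator on every call, one loader object can be looped over once per epoch. The sketch below illustrates this with a stand-in that avoids the dataset download. `ToySeqLoader` and `toy_random_iter` are hypothetical names introduced for this example, and the corpus is a plain list of integers rather than the Time Machine text.

```python
import random


def toy_random_iter(corpus, batch_size, num_steps):
    """List-based stand-in for the random sampler (no tensors)."""
    corpus = corpus[random.randint(0, num_steps - 1):]
    num_subseqs = (len(corpus) - 1) // num_steps
    starts = list(range(0, num_subseqs * num_steps, num_steps))
    random.shuffle(starts)
    num_batches = num_subseqs // batch_size
    for i in range(0, batch_size * num_batches, batch_size):
        chunk = starts[i:i + batch_size]
        yield ([corpus[j:j + num_steps] for j in chunk],
               [corpus[j + 1:j + 1 + num_steps] for j in chunk])


class ToySeqLoader:
    """Hypothetical loader over an in-memory corpus (illustration only)."""

    def __init__(self, corpus, batch_size, num_steps, data_iter_fn):
        self.corpus = corpus
        self.batch_size, self.num_steps = batch_size, num_steps
        self.data_iter_fn = data_iter_fn

    def __iter__(self):
        # A fresh generator per call, so every epoch can iterate again.
        return self.data_iter_fn(self.corpus, self.batch_size, self.num_steps)


loader = ToySeqLoader(list(range(35)), batch_size=2, num_steps=5,
                      data_iter_fn=toy_random_iter)
epoch_sizes = [sum(1 for _ in loader) for _ in range(2)]
print(epoch_sizes)  # [3, 3]
```

Storing the generator itself on the object instead of the function would exhaust it after the first epoch, which is presumably why the class keeps `data_iter_fn` and calls it inside `__iter__`.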

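Copyright notice: this is an original article by scar2016, released under the CC 4.0 BY-SA license. When reposting, please include a link to the original source and this notice.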