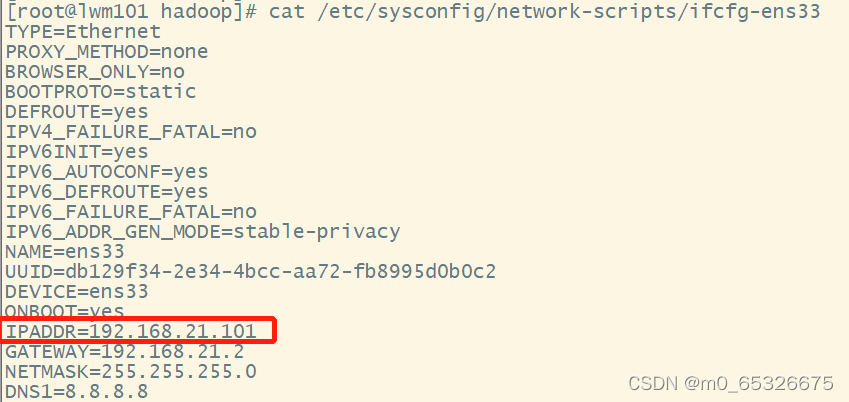

| Hostname | IP address | Subnet mask | Gateway | DNS1 |
| --- | --- | --- | --- | --- |
| lwm101 | 192.168.21.101 | 255.255.255.0 | 192.168.21.2 | 8.8.8.8 |
| lwm102 | 192.168.21.102 | 255.255.255.0 | 192.168.21.2 | 8.8.8.8 |
| lwm103 | 192.168.21.103 | 255.255.255.0 | 192.168.21.2 | 8.8.8.8 |

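Edit the network configuration: change the existing entries that need changing and add the ones that are missing, using the values in the table above.

vi /etc/sysconfig/network-scripts/ifcfg-ens33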

service network restart
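The notes do not spell out which keys to edit in ifcfg-ens33. As a minimal sketch, assuming a static-address setup on CentOS 7 with the values from the table, the relevant lines could be generated as follows. The function name `render_ifcfg` is hypothetical; `BOOTPROTO`, `ONBOOT`, `IPADDR`, `NETMASK`, `GATEWAY`, and `DNS1` are standard ifcfg keys.

```shell
# Print the static-address keys for one host; the caller supplies the IP.
# Netmask, gateway, and DNS are the values from the table above.
render_ifcfg() {
    ip="$1"
    cat <<EOF
BOOTPROTO=static
ONBOOT=yes
IPADDR=$ip
NETMASK=255.255.255.0
GATEWAY=192.168.21.2
DNS1=8.8.8.8
EOF
}

render_ifcfg 192.168.21.101
```

The output is meant to be compared against (or merged by hand into) the existing file rather than written over it, since the file also carries keys such as `NAME`, `DEVICE`, and `UUID`.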

Connect to lwm101 with SecureCRT and run the following steps from that session.

vi /etc/hosts

192.168.21.101 lwm101

192.168.21.102 lwm102

192.168.21.103 lwm103
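Because the same three entries are needed on every node, it can help to add them in a way that is safe to repeat. The sketch below is one possible approach, not part of the original steps; `add_host_entry` and `HOSTS_FILE` are hypothetical names, and the demo writes to a scratch file instead of /etc/hosts.

```shell
# Append "ip name" to a hosts file only if that hostname is not already listed.
add_host_entry() {
    file="$1"; ip="$2"; name="$3"
    if ! grep -qE "[[:space:]]$name([[:space:]]|\$)" "$file" 2>/dev/null; then
        printf '%s %s\n' "$ip" "$name" >> "$file"
    fi
}

# Demonstrated on a scratch file; set HOSTS_FILE=/etc/hosts to apply for real.
HOSTS_FILE="$(mktemp)"
add_host_entry "$HOSTS_FILE" 192.168.21.101 lwm101
add_host_entry "$HOSTS_FILE" 192.168.21.102 lwm102
add_host_entry "$HOSTS_FILE" 192.168.21.103 lwm103
add_host_entry "$HOSTS_FILE" 192.168.21.101 lwm101   # repeated call is a no-op
cat "$HOSTS_FILE"
```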

tar -zxvf /opt/w/jdk-8u161-linux-x64.tar.gz -C /opt/m/

mv /opt/m/jdk1.8.0_161 /opt/m/jdk

tar -zxvf /opt/w/zookeeper-3.4.10.tar.gz -C /opt/m/

cd /opt/m/zookeeper-3.4.10/conf/

cp zoo_sample.cfg zoo.cfg

vi zoo.cfg

dataDir=/opt/data/zookeeper/zkdata

server.1=lwm101:2888:3888

server.2=lwm102:2888:3888

server.3=lwm103:2888:3888

mkdir -p /opt/data/zookeeper/zkdata

cd /opt/data/zookeeper/zkdata

echo 1 > myid
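The number written to myid has to match the N in the `server.N` line for that host in zoo.cfg. A small sketch of deriving it from the config instead of typing it by hand; the function name `myid_for_host` is hypothetical, and the demo uses a scratch copy of the three server lines.

```shell
# Print the N from the "server.N=<host>:..." line that names the given host.
myid_for_host() {
    cfg="$1"; host="$2"
    sed -n "s/^server\.\([0-9][0-9]*\)=$host:.*/\1/p" "$cfg"
}

# Scratch copy of the three server lines from zoo.cfg.
CFG="$(mktemp)"
cat > "$CFG" <<'EOF'
server.1=lwm101:2888:3888
server.2=lwm102:2888:3888
server.3=lwm103:2888:3888
EOF

myid_for_host "$CFG" lwm102   # prints 2
```

On a real node this could be used as `myid_for_host /opt/m/zookeeper-3.4.10/conf/zoo.cfg "$(hostname)" > /opt/data/zookeeper/zkdata/myid`, assuming the hostname has already been set.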

tar -zxvf /opt/w/hadoop-2.7.4.tar.gz -C /opt/m/

cd /opt/m/hadoop-2.7.4/etc/hadoop/

vi hadoop-env.sh    # set JAVA_HOME to /opt/m/jdk

vi yarn-env.sh    # set JAVA_HOME to /opt/m/jdk

vi core-site.xml

<property>

<name>fs.defaultFS</name>

<value>hdfs://master</value>

</property>

<property>

<name>hadoop.tmp.dir</name>

<value>/opt/m/hadoop-2.7.4/tmp</value>

</property>

<property>

<name>ha.zookeeper.quorum</name>

<value>lwm101:2181,lwm102:2181,lwm103:2181</value>

</property>

vi hdfs-site.xml

<property>

<name>dfs.replication</name>

<value>3</value>

</property>

<property>

<name>dfs.namenode.name.dir</name>

<value>/opt/data/hadoop/namenode</value>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>/opt/data/hadoop/datanode</value>

</property>

<property>

<name>dfs.nameservices</name>

<value>master</value>

</property>

<property>

<name>dfs.ha.namenodes.master</name>

<value>nn1,nn2</value>

</property>

<property>

<name>dfs.namenode.rpc-address.master.nn1</name>

<value>lwm101:9000</value>

</property>

<property>

<name>dfs.namenode.rpc-address.master.nn2</name>

<value>lwm102:9000</value>

</property>

<property>

<name>dfs.namenode.http-address.master.nn1</name>

<value>lwm101:50070</value>

</property>

<property>

<name>dfs.namenode.http-address.master.nn2</name>

<value>lwm102:50070</value>

</property>

<property>

<name>dfs.namenode.shared.edits.dir</name>

<value>qjournal://lwm101:8485;lwm102:8485;lwm103:8485/ns1</value>

</property>

<property>

<name>dfs.journalnode.edits.dir</name>

<value>/opt/data/hadoop/journaldata</value>

</property>

<property>

<name>dfs.client.failover.proxy.provider.master</name>

<value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value>

</property>

<property>

<name>dfs.ha.fencing.methods</name>

<value>

sshfence

shell(/bin/true)

</value>

</property>

<property>

<name>dfs.ha.fencing.ssh.private-key-files</name>

<value>/root/.ssh/id_rsa</value>

</property>

<property>

<name>dfs.ha.automatic-failover.enabled</name>

<value>true</value>

</property>

<property>

<name>dfs.ha.fencing.ssh.connect-timeout</name>

<value>30000</value>

</property>

<property>

<name>dfs.webhdfs.enabled</name>

<value>true</value>

</property>
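The logical name `master` has to agree between `fs.defaultFS` in core-site.xml, `dfs.nameservices` in hdfs-site.xml, and the property names that end in `.master`. The following is a rough sketch of checking the first two, assuming single-line `<value>` elements; `get_prop` is a hypothetical helper, and the demo runs on scratch files rather than the real configuration.

```shell
# Print the <value> that follows <name>KEY</name> in a Hadoop *-site.xml file.
# Handles only single-line values, which is enough for these two properties.
get_prop() {
    file="$1"; key="$2"
    awk -v key="$key" '
        index($0, "<name>" key "</name>") { found = 1; next }
        found && /<value>/ {
            sub(/.*<value>/, ""); sub(/<\/value>.*/, "")
            print; exit
        }
    ' "$file"
}

# Scratch files holding just the two properties being compared.
CORE="$(mktemp)"; HDFS_SITE="$(mktemp)"
printf '<property>\n<name>fs.defaultFS</name>\n<value>hdfs://master</value>\n</property>\n' > "$CORE"
printf '<property>\n<name>dfs.nameservices</name>\n<value>master</value>\n</property>\n' > "$HDFS_SITE"

fs="$(get_prop "$CORE" fs.defaultFS)"
ns="$(get_prop "$HDFS_SITE" dfs.nameservices)"
if [ "$fs" = "hdfs://$ns" ]; then
    echo "nameservice matches: $ns"
else
    echo "mismatch: fs.defaultFS=$fs dfs.nameservices=$ns"
fi
```

Pointing the two file arguments at /opt/m/hadoop-2.7.4/etc/hadoop/core-site.xml and hdfs-site.xml runs the same comparison against the real files.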

cp mapred-site.xml.template mapred-site.xml

vi mapred-site.xml

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

vi yarn-site.xml

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<property>

<name>yarn.resourcemanager.ha.enabled</name>

<value>true</value>

</property>

<property>

<name>yarn.resourcemanager.cluster-id</name>

<value>yarncluster</value>

</property>

<property>

<name>yarn.resourcemanager.ha.rm-ids</name>

<value>rm1,rm2</value>

</property>

<property>

<name>yarn.resourcemanager.hostname.rm1</name>

<value>lwm101</value>

</property>

<property>

<name>yarn.resourcemanager.hostname.rm2</name>

<value>lwm102</value>

</property>

<property>

<name>yarn.resourcemanager.zk-address</name>

<value>lwm101:2181,lwm102:2181,lwm103:2181</value>

</property>

<property>

<name>yarn.resourcemanager.recovery.enabled</name>

<value>true</value>

</property>

<property>

<name>yarn.resourcemanager.ha.automatic-failover.enabled</name>

<value>true</value>

</property>

<property>

<name>yarn.resourcemanager.store.class</name>

<value>org.apache.hadoop.yarn.server.resourcemanager.recovery.ZKRMStateStore</value>

</property>

<property>

<name>yarn.log-aggregation-enable</name>

<value>true</value>

</property>

vi slaves

lwm101

lwm102

lwm103

vi /etc/profile

# JDK environment variables

export JAVA_HOME=/opt/m/jdk/

export PATH=$PATH:$JAVA_HOME/bin

export CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar

# ZooKeeper environment variables

export ZK_HOME=/opt/m/zookeeper-3.4.10

export PATH=$PATH:$ZK_HOME/bin

# Hadoop environment variables

export HADOOP_HOME=/opt/m/hadoop-2.7.4

export PATH=$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin

source /etc/profile

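Clone lwm101 to create lwm102 and lwm103.

The steps below use lwm102 as the example.

vi /etc/sysconfig/network-scripts/ifcfg-ens33    # change the IP address from 192.168.21.101 to 192.168.21.102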

service network restart

Connect to lwm102 with SecureCRT and run the following steps from that session.

sed -i '/UUID=/c\UUID='`uuidgen`'' /etc/sysconfig/network-scripts/ifcfg-ens33

hostnamectl set-hostname lwm102

cd /opt/data/zookeeper/zkdata

echo 2 > myid

source /etc/profile

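Repeat the clone steps above on lwm103, adjusting the host-specific values accordingly (IP address 192.168.21.103, hostname lwm103, myid 3).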

On lwm101:

rpm -qa | grep openssh

service sshd status

ssh-keygen -t rsa

ssh-copy-id lwm101

ssh-copy-id lwm102

ssh-copy-id lwm103
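Before relying on `sshfence` and the start scripts, it may be worth confirming that key-based login works to every node. A minimal sketch, assuming the OpenSSH client; `check_passwordless` and the overridable `SSH` variable are hypothetical names introduced here so the loop can be dry-run.

```shell
# SSH is overridable so the loop can be dry-run; it defaults to the real client.
SSH="${SSH:-ssh}"

# Report, per host, whether a login succeeds without any password prompt.
check_passwordless() {
    for host in "$@"; do
        # BatchMode=yes makes ssh fail instead of prompting for a password.
        if $SSH -o BatchMode=yes -o ConnectTimeout=5 "$host" true >/dev/null 2>&1; then
            echo "$host: ok"
        else
            echo "$host: FAILED"
        fi
    done
}
```

Running `check_passwordless lwm101 lwm102 lwm103` on lwm101 should then print one `ok` line per node if the keys were copied correctly.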

Run the following commands on each of lwm101, lwm102, and lwm103:

systemctl stop firewalld.service

systemctl disable firewalld.service

zkServer.sh start

hadoop-daemon.sh start journalnode
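Once ZooKeeper has been started on all three nodes, `zkServer.sh status` reports whether each node joined the quorum as leader or follower. The sketch below pulls out just that mode; `zk_mode` is a hypothetical helper, and the sample text imitates typical status output rather than being captured from this cluster.

```shell
# Read `zkServer.sh status` output on stdin and print the reported mode, if any.
zk_mode() {
    sed -n 's/^Mode: //p'
}

# Illustrative status output for a node that joined the quorum.
sample='ZooKeeper JMX enabled by default
Using config: /opt/m/zookeeper-3.4.10/bin/../conf/zoo.cfg
Mode: follower'

printf '%s\n' "$sample" | zk_mode   # prints follower
```

On a node, `zkServer.sh status 2>&1 | zk_mode` would print `leader` or `follower`; empty output suggests the quorum has not formed yet.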

On lwm101:

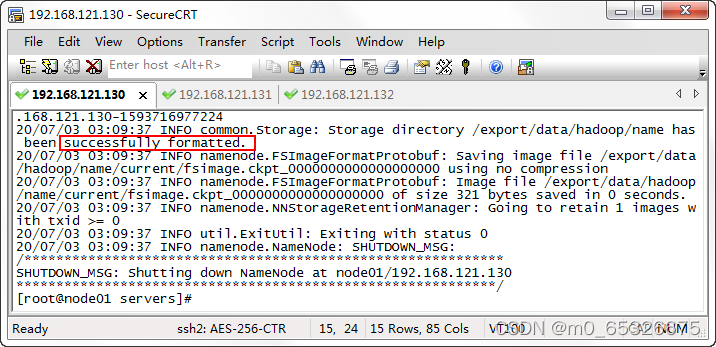

hdfs namenode -format

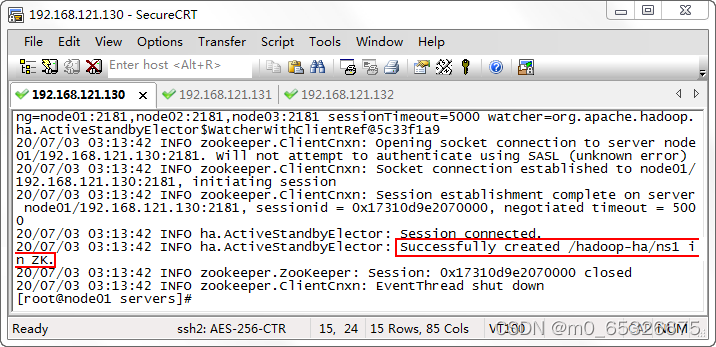

hdfs zkfc -formatZK

scp -r /opt/data/hadoop/namenode/ root@lwm102:/opt/data/hadoop/

start-dfs.sh

start-yarn.sh

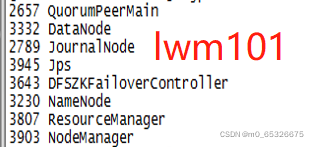

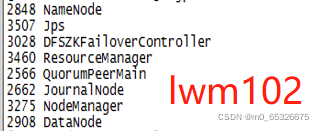

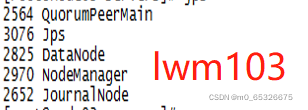

Run jps on each of lwm101, lwm102, and lwm103 to check which daemons are running.
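Reading jps output by eye on three nodes is error-prone, so a small check can list whatever is missing. This is a sketch only: `missing_daemons` is a hypothetical helper, the PIDs in the sample are made up, and the expected daemon names (QuorumPeerMain, JournalNode, NameNode, DataNode, DFSZKFailoverController) are an assumption about what lwm101 should run given the configuration above.

```shell
# Print every expected daemon name that is absent from the given jps output.
# Names are compared exactly, so NameNode does not match SecondaryNameNode.
missing_daemons() {
    jps_output="$1"; shift
    for name in "$@"; do
        if ! printf '%s\n' "$jps_output" |
            awk -v n="$name" '$2 == n { ok = 1 } END { exit !ok }'; then
            echo "$name"
        fi
    done
}

# Illustrative jps output in which the ZKFC process is missing.
sample='2101 QuorumPeerMain
2250 JournalNode
2533 NameNode
2650 DataNode
3010 Jps'

missing_daemons "$sample" QuorumPeerMain JournalNode NameNode DataNode DFSZKFailoverController
# prints DFSZKFailoverController
```

On a live node the first argument would be `"$(jps)"`, with the expected list adjusted per host.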

------------------------------------------

On lwm101:

# Install wget, a Linux command-line tool for downloading files

yum install wget -y

# Download the MySQL 5.7 yum repository package; the file is saved in the current directory

wget -c http://dev.mysql.com/get/mysql57-community-release-el7-10.noarch.rpm

# Install the MySQL 5.7 yum repository

yum -y install mysql57-community-release-el7-10.noarch.rpm

# Import the 2022 MySQL GPG key first so that the package signature check passes

rpm --import https://repo.mysql.com/RPM-GPG-KEY-mysql-2022

# Install the MySQL 5.7 server

yum -y install mysql-community-server

systemctl start mysqld.service

systemctl status mysqld.service

grep "password" /var/log/mysqld.log

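# Log in with the temporary password found above (mysql -uroot -p), then change the root password to 1qaz@WSX; the password policy requires uppercase and lowercase letters, digits, and special characters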

mysql> ALTER USER 'root'@'localhost' IDENTIFIED BY '1qaz@WSX';

# Reload the grant tables so the change takes effect

mysql> FLUSH PRIVILEGES;
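The `grep "password"` step earlier can return several lines. A narrower sketch that prints only the generated root password, assuming the usual MySQL 5.7 log wording "A temporary password is generated for root@localhost: ..."; `temp_password` is a hypothetical helper, and the password in the scratch log is made up.

```shell
# Print the last field of the most recent "temporary password" line in a mysqld log.
temp_password() {
    grep 'temporary password' "$1" | tail -n 1 | awk '{ print $NF }'
}

# Scratch log with one line in the format MySQL 5.7 writes.
LOG="$(mktemp)"
echo '2024-01-01T00:00:00.000000Z 1 [Note] A temporary password is generated for root@localhost: q1W2e3R4_tmp' > "$LOG"

temp_password "$LOG"   # prints q1W2e3R4_tmp
```

On lwm101 the argument would be /var/log/mysqld.log, and the printed value is what `mysql -uroot -p` expects at the first login.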

tar -zxvf /opt/w/apache-hive-2.3.7-bin.tar.gz -C /opt/m/

cd /opt/m/apache-hive-2.3.7-bin/conf/

cp hive-env.sh.template hive-env.sh

vi hive-env.sh

export HADOOP_HOME=/opt/m/hadoop-2.7.4

export HIVE_CONF_DIR=/opt/m/apache-hive-2.3.7-bin/conf

export HIVE_AUX_JARS_PATH=/opt/m/apache-hive-2.3.7-bin/lib

export JAVA_HOME=/opt/m/jdk

vi hive-site.xml

<configuration>

<property>

<name>hive.metastore.warehouse.dir</name>

<value>/user/hive_local/warehouse</value>

</property>

<property>

<name>hive.exec.scratchdir</name>

<value>/tmp_local/hive</value>

</property>

<property>

<name>hive.metastore.local</name>

<value>true</value>

</property>

<property>

<name>javax.jdo.option.ConnectionURL</name>

<value>jdbc:mysql://localhost:3306/hive?createDatabaseIfNotExist=true&amp;useSSL=false</value>

</property>

<property>

<name>javax.jdo.option.ConnectionDriverName</name>

<value>com.mysql.jdbc.Driver</value>

</property>

<property>

<name>javax.jdo.option.ConnectionUserName</name>

<value>root</value>

</property>

<property>

<name>javax.jdo.option.ConnectionPassword</name>

<value>1qaz@WSX</value>

</property>

<property>

<name>hive.cli.print.header</name>

<value>true</value>

</property>

<property>

<name>hive.cli.print.current.db</name>

<value>true</value>

</property>

</configuration>
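A JDBC URL with more than one parameter contains `&`, which must be written as `&amp;` inside an XML `<value>`, and the Connector/J option is spelled `useSSL`. A minimal sketch of escaping a URL before pasting it into hive-site.xml; `xml_escape` is a hypothetical helper.

```shell
# Escape the three characters that are special inside XML element text.
# The ampersand is handled first so the later replacements are not re-escaped.
xml_escape() {
    printf '%s\n' "$1" | sed -e 's/&/\&amp;/g' -e 's/</\&lt;/g' -e 's/>/\&gt;/g'
}

xml_escape 'jdbc:mysql://localhost:3306/hive?createDatabaseIfNotExist=true&useSSL=false'
# prints jdbc:mysql://localhost:3306/hive?createDatabaseIfNotExist=true&amp;useSSL=false
```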

Copy mysql-connector-java-5.1.32.jar into Hive's lib directory (/opt/m/apache-hive-2.3.7-bin/lib).

vi /etc/profile

export HIVE_HOME=/opt/m/apache-hive-2.3.7-bin

export PATH=$PATH:$HIVE_HOME/bin

source /etc/profile

scp -r /etc/profile root@lwm102:/etc/profile

schematool -initSchema -dbType mysql

# If "schemaTool completed" is printed once initialization finishes, the Hive schema was created in MySQL successfully.

hive --service hiveserver2 &

Open a new terminal window on lwm101:

cd /opt/m/apache-hive-2.3.7-bin

bin/beeline

On lwm102:

tar -zxvf /opt/w/apache-hive-2.3.7-bin.tar.gz -C /opt/m/

cd /opt/m/apache-hive-2.3.7-bin/conf/

touch hive-site.xml

<configuration>

<property>

<name>hive.metastore.warehouse.dir</name>

<value>/user/hive_local/warehouse</value>

</property>

<property>

<name>hive.exec.scratchdir</name>

<value>/tmp_local/hive</value>

</property>

<property>

<name>hive.metastore.local</name>

<value>false</value>

</property>

<property>

<name>hive.metastore.uris</name>

<value>thrift://lwm101:9083</value>

</property>

<property>

<name>hive.server2.thrift.port</name>

<value>10000</value>

</property>

<property>

<name>hive.server2.thrift.bind.host</name>

<value>localhost</value>

</property>

</configuration>

scp -r hive-site.xml root@lwm101:/opt/m/apache-hive-2.3.7-bin/conf

source /etc/profile

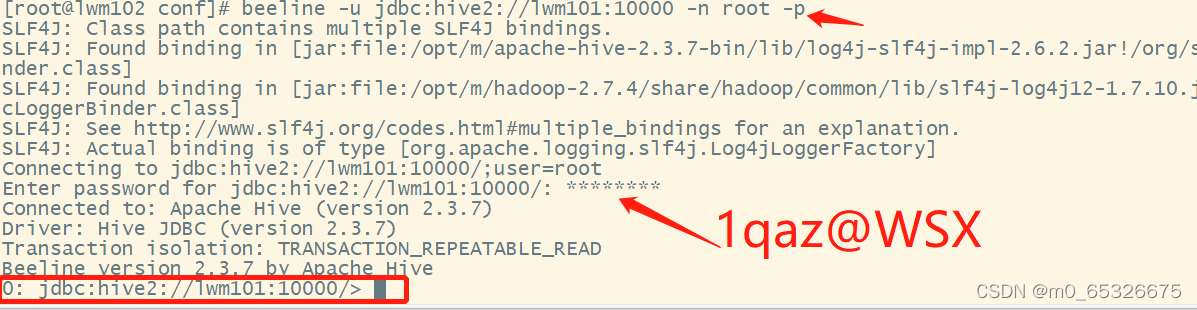

beeline -u jdbc:hive2://lwm101:10000 -n root -p

Enter 1qaz@WSX at the password prompt; if the output below appears, the connection succeeded.