Press “Alt+P” to upload the “apache-flume-1.9.0-bin.tar.gz” package, then extract it: “tar -zxvf apache-flume-1.9.0-bin.tar.gz -C app/”

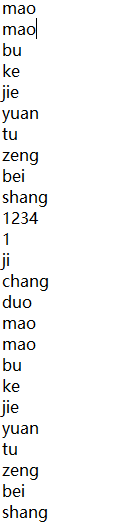

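In the home directory (“/home/hadoop”, per the path used in the configuration), create a “flumedata” directory with “mkdir flumedata”, then write data to “1.log” inside it: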

Overwrite: “echo 1 > 1.log”

Append: “echo 1 >> 1.log”
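The difference between the two redirections can be checked with a small sketch like the one below. It works in a temporary directory (the `demo_dir` variable is purely illustrative) so that the real “flumedata/1.log” is left alone.

```shell
#!/bin/sh
# Work in a throwaway directory so the real flumedata/1.log is not touched.
demo_dir=$(mktemp -d)
cd "$demo_dir" || exit 1

echo 1 > 1.log     # ">" truncates the file: it now holds the single line "1"
echo 2 > 1.log     # overwrites again: still one line, now "2"
echo 3 >> 1.log    # ">>" appends: two lines, "2" then "3"

# Count the lines to confirm that only ">>" grew the file.
line_count=$(wc -l < 1.log | tr -d ' ')
echo "lines: $line_count"
cat 1.log
```

Only appended lines are new data for “tail -F”, so “>>” is the form to use once the agent is running.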

Change to the “app/apache-flume-1.9.0-bin/conf/” directory.

Create the file “my.conf” with “vi my.conf” and enter the following:

a1.sources = r1

a1.sinks = k1

a1.channels = c1

a1.sources.r1.type = exec

a1.sources.r1.command = tail -F /home/hadoop/flumedata/1.log

a1.sinks.k1.type = logger

a1.channels.c1.type = memory

a1.channels.c1.capacity = 1000

a1.channels.c1.transactionCapacity = 100

a1.sources.r1.channels = c1

a1.sinks.k1.channel = c1
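As an alternative to typing the file in vi, the same configuration could be written in one step with a heredoc. This is a minimal sketch: the `CONF_FILE` variable is hypothetical and falls back to a temporary file, so set it to “conf/my.conf” for real use. The comments inside the file are added here for explanation only.

```shell
#!/bin/sh
# Hypothetical variable: set CONF_FILE=conf/my.conf to write the real file.
conf_file="${CONF_FILE:-$(mktemp)}"

cat > "$conf_file" <<'EOF'
# Name the source, sink and channel of agent a1
a1.sources = r1
a1.sinks = k1
a1.channels = c1

# Source: follow the log file and turn each new line into an event
a1.sources.r1.type = exec
a1.sources.r1.command = tail -F /home/hadoop/flumedata/1.log

# Sink: print events to the agent's log
a1.sinks.k1.type = logger

# Channel: buffer events in memory between source and sink
a1.channels.c1.type = memory
a1.channels.c1.capacity = 1000
a1.channels.c1.transactionCapacity = 100

# Wiring: a source may feed several channels, a sink reads from exactly one
a1.sources.r1.channels = c1
a1.sinks.k1.channel = c1
EOF

# Quick sanity check: count the property lines that were written.
grep -c '^a1\.' "$conf_file"
```

Note the asymmetry in the last two properties: the source uses the plural “channels”, while the sink uses the singular “channel”.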

Change to the “app/apache-flume-1.9.0-bin/” directory and start the agent: “bin/flume-ng agent --conf conf --conf-file conf/my.conf --name a1 -Dflume.root.logger=INFO,console”

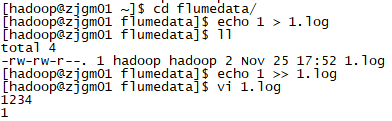

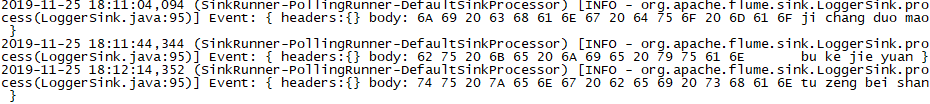

The result appears in the console output:

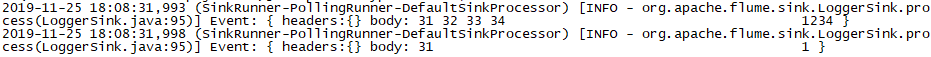

Append more data to “1.log”.

The new events then appear in the agent's log output.

Change to the “app/apache-flume-1.9.0-bin/conf/” directory.

Create the file “ks.conf” with “vi ks.conf” and enter the following:

a1.sources = r1

a1.sinks = k1

a1.channels = c1

a1.sources.r1.type = exec

a1.sources.r1.command = tail -F /home/hadoop/flumedata/1.log

a1.sinks.k1.type = org.apache.flume.sink.kafka.KafkaSink

a1.sinks.k1.brokerList = zjgm01:9092,zjgm02:9092,zjgm03:9092

a1.sinks.k1.topic = dsj

a1.channels.c1.type = memory

a1.channels.c1.capacity = 1000

a1.channels.c1.transactionCapacity = 100

a1.sources.r1.channels = c1

a1.sinks.k1.channel = c1
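The “brokerList” and “topic” properties above are accepted by Flume 1.9.0, but the Flume user guide lists them as deprecated in favor of “kafka.bootstrap.servers” and “kafka.topic”. A sketch of the sink section with the newer names follows. The `KAFKA_SINK_CONF` variable is hypothetical and defaults to a temporary file, and the rest of “ks.conf” is assumed to stay unchanged.

```shell
#!/bin/sh
# Hypothetical variable: where to write the sink fragment (temp file by default).
kafka_sink_conf="${KAFKA_SINK_CONF:-$(mktemp)}"

cat > "$kafka_sink_conf" <<'EOF'
# Kafka sink using the non-deprecated property names (Flume 1.7 and later)
a1.sinks.k1.type = org.apache.flume.sink.kafka.KafkaSink
a1.sinks.k1.kafka.bootstrap.servers = zjgm01:9092,zjgm02:9092,zjgm03:9092
a1.sinks.k1.kafka.topic = dsj
a1.sinks.k1.channel = c1
EOF

cat "$kafka_sink_conf"
```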

Change to the “app/zookeeper-3.4.5/bin/” directory and start ZooKeeper on each of the three machines: “./zkServer.sh start”

Change to the “app/kafka_2.11-0.11.0.2/” directory and start Kafka on each of the three machines: “bin/kafka-server-start.sh config/server.properties”
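Before starting Flume, it may help to confirm that the brokers are reachable by creating the “dsj” topic and attaching a console consumer. The sketch below rests on several assumptions that the original does not state: Kafka lives under “$HOME/app/kafka_2.11-0.11.0.2”, ZooKeeper listens on its default client port 2181 on “zjgm01”, and a replication factor of 3 is wanted. If Kafka is not found at that path, the script only prints the commands.

```shell
#!/bin/sh
# Assumptions (not from the original notes): install path, ZooKeeper port, replication factor.
kafka_home="${KAFKA_HOME:-$HOME/app/kafka_2.11-0.11.0.2}"
zk_connect="zjgm01:2181"
topic="dsj"

create_cmd="bin/kafka-topics.sh --create --zookeeper $zk_connect --replication-factor 3 --partitions 1 --topic $topic"
consume_cmd="bin/kafka-console-consumer.sh --bootstrap-server zjgm01:9092 --topic $topic --from-beginning"

if [ -x "$kafka_home/bin/kafka-topics.sh" ]; then
  cd "$kafka_home" || exit 1
  $create_cmd      # reports an error if the topic already exists
  $consume_cmd     # keeps running and printing messages until interrupted with Ctrl+C
else
  echo "Kafka not found under $kafka_home; the commands would be:"
  echo "$create_cmd"
  echo "$consume_cmd"
fi
```

With the consumer left running in a separate terminal, lines appended to “1.log” should show up there once the Flume agent with “ks.conf” is started.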

Change to the “app/apache-storm-0.9.2-incubating/bin/” directory and start Storm across the three machines: run “./storm nimbus” on “zjgm01”, and run “./storm supervisor” on both “zjgm02” and “zjgm03”

Change to the “app/apache-flume-1.9.0-bin/” directory and start the agent with the Kafka configuration: “bin/flume-ng agent --conf conf --conf-file conf/ks.conf --name a1 -Dflume.root.logger=INFO,console”

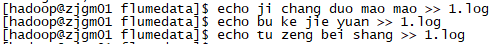

Open IDEA and run “stormkafka”; part of the contents of the files in the “storm” folder on drive D is shown in the figure below