import datetime
import torch
import torchvision.models
from torchvision import datasets
from torchvision import transforms as T
from torch.utils.data import DataLoader
import torch.nn as nn
import torch.optim as optim
import matplotlib.pyplot as plt
from PIL import Image
import xml.etree.ElementTree as ET
import os
import torch.nn.functional as F

# AlexNet
class AlexNet(nn.Module):
    def __init__(self, num_classes=1000, init_weights=False):
        super(AlexNet, self).__init__()
        self.features = nn.Sequential(
            nn.Conv2d(3, 48, kernel_size=11, stride=4, padding=2),
            nn.ReLU(inplace=True),
            nn.MaxPool2d(kernel_size=3, stride=2),
            nn.Conv2d(48, 128, kernel_size=5, padding=2),
            nn.ReLU(inplace=True),
            nn.MaxPool2d(kernel_size=3, stride=2),
            nn.Conv2d(128, 192, kernel_size=3, padding=1),
            nn.ReLU(inplace=True),
            nn.Conv2d(192, 192, kernel_size=3, padding=1),
            nn.ReLU(inplace=True),
            nn.Conv2d(192, 128, kernel_size=3, padding=1),
            nn.ReLU(inplace=True),
            nn.MaxPool2d(kernel_size=3, stride=2),
        )
        self.classifier = nn.Sequential(
            nn.Dropout(p=0.5),
            nn.Linear(128 * 6 * 6, 2048),
            nn.ReLU(inplace=True),
            nn.Dropout(p=0.5),
            nn.Linear(2048, 2048),
            nn.ReLU(inplace=True),
            nn.Linear(2048, num_classes)
        )
        if init_weights:
            self._initialize_weights()

    def forward(self, x):
        x = self.features(x)
        x = torch.flatten(x, start_dim=1)
        x = self.classifier(x)
        return x

    def _initialize_weights(self):
        for m in self.modules():
            if isinstance(m, nn.Conv2d):
                nn.init.kaiming_normal_(m.weight, mode='fan_out', nonlinearity='relu')  # He (Kaiming) initialization
                if m.bias is not None:
                    nn.init.constant_(m.bias, 0)
            elif isinstance(m, nn.Linear):
                nn.init.normal_(m.weight, 0, 0.01)  # draw weights from a normal distribution (mean 0, std 0.01)
                nn.init.constant_(m.bias, 0)
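
The `128 * 6 * 6` input size of the first `nn.Linear` in `AlexNet` can be checked by hand with the usual output-size rule for convolution and pooling layers, floor((n + 2p - k) / s) + 1. The sketch below is only an illustration: it assumes a 224×224 input (the crop size used by the transforms later in this script), and the helper name `out_size` is made up for this example.

```python
def out_size(n, kernel, stride=1, padding=0):
    """Spatial size after a conv/pool layer: floor((n + 2p - k) / s) + 1."""
    return (n + 2 * padding - kernel) // stride + 1


# (kernel, stride, padding) for each conv/pool layer of AlexNet.features, in order
alexnet_layers = [
    (11, 4, 2),  # Conv2d(3, 48)
    (3, 2, 0),   # MaxPool2d
    (5, 1, 2),   # Conv2d(48, 128)
    (3, 2, 0),   # MaxPool2d
    (3, 1, 1),   # Conv2d(128, 192)
    (3, 1, 1),   # Conv2d(192, 192)
    (3, 1, 1),   # Conv2d(192, 128)
    (3, 2, 0),   # MaxPool2d
]

size = 224  # assumed input height/width
for kernel, stride, padding in alexnet_layers:
    size = out_size(size, kernel, stride, padding)

print(size)  # 6, so the flattened feature vector has 128 * 6 * 6 entries
```

With a different input resolution the final size changes, and the first `nn.Linear` would need to be adjusted to match.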

# VGG16
class VGG16(nn.Module):
    def __init__(self, num_classes=1000, init_weights=False):
        super(VGG16, self).__init__()
        self.layer1 = nn.Sequential(
            nn.Conv2d(in_channels=3, out_channels=64, kernel_size=3, stride=1, padding=1),
            nn.BatchNorm2d(64),
            nn.ReLU(inplace=True),
            nn.Conv2d(in_channels=64, out_channels=64, kernel_size=3, stride=1, padding=1),
            nn.BatchNorm2d(64),
            nn.ReLU(inplace=True),
            nn.MaxPool2d(kernel_size=2, stride=2)
        )
        self.layer2 = nn.Sequential(
            nn.Conv2d(in_channels=64, out_channels=128, kernel_size=3, stride=1, padding=1),
            nn.BatchNorm2d(128),
            nn.ReLU(inplace=True),
            nn.Conv2d(in_channels=128, out_channels=128, kernel_size=3, stride=1, padding=1),
            nn.BatchNorm2d(128),
            nn.ReLU(inplace=True),
            nn.MaxPool2d(kernel_size=2, stride=2)
        )
        self.layer3 = nn.Sequential(
            nn.Conv2d(in_channels=128, out_channels=256, kernel_size=3, stride=1, padding=1),
            nn.BatchNorm2d(256),
            nn.ReLU(inplace=True),
            nn.Conv2d(in_channels=256, out_channels=256, kernel_size=3, stride=1, padding=1),
            nn.BatchNorm2d(256),
            nn.ReLU(inplace=True),
            nn.Conv2d(in_channels=256, out_channels=256, kernel_size=3, stride=1, padding=1),
            nn.BatchNorm2d(256),
            nn.ReLU(inplace=True),
            nn.MaxPool2d(kernel_size=2, stride=2)
        )
        self.layer4 = nn.Sequential(
            nn.Conv2d(in_channels=256, out_channels=512, kernel_size=3, stride=1, padding=1),
            nn.BatchNorm2d(512),
            nn.ReLU(inplace=True),
            nn.Conv2d(in_channels=512, out_channels=512, kernel_size=3, stride=1, padding=1),
            nn.BatchNorm2d(512),
            nn.ReLU(inplace=True),
            nn.Conv2d(in_channels=512, out_channels=512, kernel_size=3, stride=1, padding=1),
            nn.BatchNorm2d(512),
            nn.ReLU(inplace=True),
            nn.MaxPool2d(kernel_size=2, stride=2)
        )
        self.layer5 = nn.Sequential(
            nn.Conv2d(in_channels=512, out_channels=512, kernel_size=3, stride=1, padding=1),
            nn.BatchNorm2d(512),
            nn.ReLU(inplace=True),
            nn.Conv2d(in_channels=512, out_channels=512, kernel_size=3, stride=1, padding=1),
            nn.BatchNorm2d(512),
            nn.ReLU(inplace=True),
            nn.Conv2d(in_channels=512, out_channels=512, kernel_size=3, stride=1, padding=1),
            nn.BatchNorm2d(512),
            nn.ReLU(inplace=True),
            nn.MaxPool2d(kernel_size=2, stride=2)
        )
        self.conv = nn.Sequential(
            self.layer1,
            self.layer2,
            self.layer3,
            self.layer4,
            self.layer5
        )
        self.fc = nn.Sequential(
            nn.Linear(512 * 7 * 7, 4096),
            nn.ReLU(inplace=True),
            nn.Dropout(0.5),
            nn.Linear(4096, 1000),
            nn.ReLU(inplace=True),
            nn.Dropout(0.5),
            nn.Linear(1000, num_classes)
        )
        # init_weights was accepted but never used; apply it as in AlexNet.
        if init_weights:
            self._initialize_weights()

    def forward(self, x):
        x = self.conv(x)
        x = x.view(x.size(0), -1)
        x = self.fc(x)
        return x

    def _initialize_weights(self):
        for m in self.modules():
            if isinstance(m, nn.Conv2d):
                nn.init.kaiming_normal_(m.weight, mode='fan_out', nonlinearity='relu')  # He (Kaiming) initialization
                if m.bias is not None:
                    nn.init.constant_(m.bias, 0)
            elif isinstance(m, nn.Linear):
                nn.init.normal_(m.weight, 0, 0.01)  # draw weights from a normal distribution (mean 0, std 0.01)
                nn.init.constant_(m.bias, 0)

# ResNet50
class BottleNeck_1(nn.Module):
    def __init__(self, in_chans, out_chans, stride):
        super(BottleNeck_1, self).__init__()
        self.conv1 = nn.Conv2d(in_chans, out_chans, kernel_size=1, stride=stride, bias=False)
        self.batch_norm1 = nn.BatchNorm2d(out_chans)
        self.conv2 = nn.Conv2d(out_chans, out_chans, kernel_size=3, stride=1, padding=1, bias=False)
        self.batch_norm2 = nn.BatchNorm2d(out_chans)
        self.conv3 = nn.Conv2d(out_chans, out_chans * 4, kernel_size=1, stride=1, bias=False)
        self.batch_norm3 = nn.BatchNorm2d(out_chans * 4)
        # Projection shortcut: matches the channel count and stride of the main path.
        self.conv4 = nn.Conv2d(in_chans, out_chans * 4, kernel_size=1, stride=stride, bias=False)
        self.batch_norm4 = nn.BatchNorm2d(out_chans * 4)
        torch.nn.init.kaiming_normal_(self.conv1.weight, nonlinearity='relu')
        torch.nn.init.constant_(self.batch_norm1.weight, 0.5)
        torch.nn.init.zeros_(self.batch_norm1.bias)
        torch.nn.init.kaiming_normal_(self.conv2.weight, nonlinearity='relu')
        torch.nn.init.constant_(self.batch_norm2.weight, 0.5)
        torch.nn.init.zeros_(self.batch_norm2.bias)
        torch.nn.init.kaiming_normal_(self.conv3.weight, nonlinearity='relu')
        torch.nn.init.constant_(self.batch_norm3.weight, 0.5)
        torch.nn.init.zeros_(self.batch_norm3.bias)
        torch.nn.init.kaiming_normal_(self.conv4.weight, nonlinearity='relu')
        torch.nn.init.constant_(self.batch_norm4.weight, 0.5)
        torch.nn.init.zeros_(self.batch_norm4.bias)

    def forward(self, x):
        out = self.conv1(x)
        out = self.batch_norm1(out)
        out = torch.relu(out)
        out = self.conv2(out)
        out = self.batch_norm2(out)
        out = torch.relu(out)
        out = self.conv3(out)
        out = self.batch_norm3(out)
        residual = self.conv4(x)
        residual = self.batch_norm4(residual)
        return torch.relu(out + residual)

class BottleNeck_2(nn.Module):
    def __init__(self, n_chans):
        super(BottleNeck_2, self).__init__()
        self.conv1 = nn.Conv2d(n_chans, n_chans // 4, kernel_size=1, stride=1, bias=False)
        self.batch_norm1 = nn.BatchNorm2d(n_chans // 4)
        self.conv2 = nn.Conv2d(n_chans // 4, n_chans // 4, kernel_size=3, stride=1, padding=1, bias=False)
        self.batch_norm2 = nn.BatchNorm2d(n_chans // 4)
        self.conv3 = nn.Conv2d(n_chans // 4, n_chans, kernel_size=1, stride=1, bias=False)
        self.batch_norm3 = nn.BatchNorm2d(n_chans)
        torch.nn.init.kaiming_normal_(self.conv1.weight, nonlinearity='relu')
        torch.nn.init.constant_(self.batch_norm1.weight, 0.5)
        torch.nn.init.zeros_(self.batch_norm1.bias)
        torch.nn.init.kaiming_normal_(self.conv2.weight, nonlinearity='relu')
        torch.nn.init.constant_(self.batch_norm2.weight, 0.5)
        torch.nn.init.zeros_(self.batch_norm2.bias)
        torch.nn.init.kaiming_normal_(self.conv3.weight, nonlinearity='relu')
        torch.nn.init.constant_(self.batch_norm3.weight, 0.5)
        torch.nn.init.zeros_(self.batch_norm3.bias)

    def forward(self, x):
        # Identity shortcut: input and output have the same shape.
        residual = x
        out = self.conv1(x)
        out = self.batch_norm1(out)
        out = torch.relu(out)
        out = self.conv2(out)
        out = self.batch_norm2(out)
        out = torch.relu(out)
        out = self.conv3(out)
        out = self.batch_norm3(out)
        return torch.relu(out + residual)

class ResNet50(nn.Module):
    def __init__(self, num_classes):
        super().__init__()
        self.num_classes = num_classes
        self.stage0 = nn.Sequential(nn.Conv2d(3, 64, kernel_size=7, stride=2, bias=False),
                                    nn.MaxPool2d(kernel_size=3, stride=2))
        # Build every repeated block separately: `n * [block]` would put the same
        # module (and therefore the same weights) into the stage n times.
        self.stage1 = nn.Sequential(BottleNeck_1(64, 64, 1), *[BottleNeck_2(256) for _ in range(2)])
        self.stage2 = nn.Sequential(BottleNeck_1(256, 128, 2), *[BottleNeck_2(512) for _ in range(3)])
        self.stage3 = nn.Sequential(BottleNeck_1(512, 256, 2), *[BottleNeck_2(1024) for _ in range(5)])
        self.stage4 = nn.Sequential(BottleNeck_1(1024, 512, 2), *[BottleNeck_2(2048) for _ in range(2)])
        self.conv = nn.Sequential(
            self.stage0,
            self.stage1,
            self.stage2,
            self.stage3,
            self.stage4
        )
        self.fc = nn.Sequential(
            nn.Linear(2048 * 7 * 7, 4096),
            nn.ReLU(inplace=True),
            nn.Dropout(0.5),
            nn.Linear(4096, 1000),
            nn.ReLU(inplace=True),
            nn.Dropout(0.5),
            nn.Linear(1000, num_classes)
        )

    def forward(self, x):
        x = self.conv(x)
        x = x.view(x.size(0), -1)
        x = self.fc(x)
        return x
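
When a stage repeats the same block several times, it matters how the list of blocks is built. Multiplying a list (`n * [block]`) repeats references to one object, whereas a list comprehension creates a fresh object per entry. The sketch below shows the difference in plain Python; `Block` is a hypothetical stand-in for a layer with its own parameters, not a class from this script. Since `nn.Module` instances are ordinary Python objects, the same reasoning should apply to them.

```python
class Block:
    """Stand-in for a layer that owns parameters (hypothetical, illustration only)."""

    def __init__(self):
        self.weight = [0.0]


shared = 3 * [Block()]                  # one Block, referenced three times
separate = [Block() for _ in range(3)]  # three independent Blocks

# "Update" only the first entry of each list.
shared[0].weight[0] = 1.0
separate[0].weight[0] = 1.0

print([b.weight[0] for b in shared])    # [1.0, 1.0, 1.0]: every entry changed
print([b.weight[0] for b in separate])  # [1.0, 0.0, 0.0]: only the first changed
print(len({id(b) for b in shared}), len({id(b) for b in separate}))  # 1 3
```

For a residual stage this means the multiplied form would train a single block applied repeatedly, which is usually not what a ResNet definition intends.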

# ResNet18
class ResBlock(nn.Module):
    def __init__(self, in_channels, out_channels, stride):
        super(ResBlock, self).__init__()
        self.stride = stride
        self.conv1 = nn.Sequential(
            nn.Conv2d(in_channels=in_channels,
                      out_channels=out_channels,
                      stride=stride,
                      padding=1,
                      kernel_size=3,
                      bias=False),
            nn.BatchNorm2d(out_channels),
            nn.ReLU(inplace=True),
            nn.Conv2d(in_channels=out_channels,
                      out_channels=out_channels,
                      stride=1,
                      padding=1,
                      kernel_size=3,
                      bias=False),
            nn.BatchNorm2d(out_channels),
        )
        # 1x1 projection for the shortcut; only used when the block downsamples (stride 2).
        self.conv2 = nn.Conv2d(in_channels=in_channels,
                               out_channels=out_channels,
                               stride=stride,
                               kernel_size=1,
                               bias=False)

    def forward(self, x):
        out = self.conv1(x)
        if self.stride == 2:
            residual = self.conv2(x)
        else:
            residual = x
        return torch.relu(residual + out)

class ResNet18(nn.Module):
    def __init__(self, num_classes):
        super().__init__()
        self.num_classes = num_classes
        self.stage0 = nn.Sequential(nn.Conv2d(in_channels=3,
                                              out_channels=64,
                                              kernel_size=7,
                                              stride=2,
                                              padding=3),
                                    nn.MaxPool2d(kernel_size=3,
                                                 padding=1,
                                                 stride=2))
        self.stage1 = nn.Sequential(ResBlock(64, 64, 1), ResBlock(64, 64, 1))
        self.stage2 = nn.Sequential(ResBlock(64, 128, 2), ResBlock(128, 128, 1))
        self.stage3 = nn.Sequential(ResBlock(128, 256, 2), ResBlock(256, 256, 1))
        self.stage4 = nn.Sequential(ResBlock(256, 512, 2), ResBlock(512, 512, 1))
        self.conv = nn.Sequential(self.stage0, self.stage1, self.stage2, self.stage3, self.stage4,
                                  nn.AvgPool2d(kernel_size=7))
        self.fn = nn.Sequential(nn.Linear(512, 256),
                                nn.ReLU(inplace=True),
                                nn.BatchNorm1d(256),
                                nn.Linear(256, num_classes))
        self._initialize_weights()

    def forward(self, x):
        out = self.conv(x)
        out = out.view(out.size(0), -1)
        out = self.fn(out)
        return out

    def _initialize_weights(self):
        for m in self.modules():
            if isinstance(m, nn.Conv2d):
                nn.init.kaiming_normal_(m.weight, mode='fan_out', nonlinearity='relu')  # He (Kaiming) initialization
                if m.bias is not None:
                    nn.init.constant_(m.bias, 0)
            elif isinstance(m, nn.Linear):
                nn.init.normal_(m.weight, 0, 0.01)  # draw weights from a normal distribution (mean 0, std 0.01)
                nn.init.constant_(m.bias, 0)

# GoogLeNet
class Inception(nn.Module):
    def __init__(self, in_channels, ch1x1, ch3x3m, ch3x3, ch5x5m, ch5x5, ch1x1p):
        super().__init__()
        # Branch 1: 1x1 convolution
        self.passage1 = nn.Sequential(nn.Conv2d(in_channels, ch1x1, kernel_size=1),
                                      nn.BatchNorm2d(ch1x1),
                                      nn.ReLU(inplace=True))
        # Branch 2: 1x1 reduction followed by a 3x3 convolution
        # (nn.ReLU takes `inplace` as its only argument, not a channel count)
        self.passage2 = nn.Sequential(nn.Conv2d(in_channels, ch3x3m, kernel_size=1),
                                      nn.BatchNorm2d(ch3x3m),
                                      nn.ReLU(inplace=True),
                                      nn.Conv2d(ch3x3m, ch3x3, kernel_size=3, padding=1),
                                      nn.BatchNorm2d(ch3x3),
                                      nn.ReLU(inplace=True))
        # Branch 3: 1x1 reduction followed by a 5x5 convolution
        self.passage3 = nn.Sequential(nn.Conv2d(in_channels, ch5x5m, kernel_size=1),
                                      nn.BatchNorm2d(ch5x5m),
                                      nn.ReLU(inplace=True),
                                      nn.Conv2d(ch5x5m, ch5x5, kernel_size=5, padding=2),
                                      nn.BatchNorm2d(ch5x5),
                                      nn.ReLU(inplace=True))
        # Branch 4: 3x3 max pooling followed by a 1x1 convolution
        self.passage4 = nn.Sequential(nn.MaxPool2d(kernel_size=3, stride=1, padding=1),
                                      nn.Conv2d(in_channels, ch1x1p, kernel_size=1),
                                      nn.BatchNorm2d(ch1x1p),
                                      nn.ReLU(inplace=True))

    def forward(self, x):
        passage_1 = self.passage1(x)
        passage_2 = self.passage2(x)
        passage_3 = self.passage3(x)
        passage_4 = self.passage4(x)
        outputs = [passage_1, passage_2, passage_3, passage_4]
        # Concatenate the four branches along the channel dimension.
        return torch.cat(outputs, 1)

class GoogLeNet(nn.Module):
    def __init__(self, num_classes=1000, init_weights=True):
        super().__init__()
        self.num_classes = num_classes
        self.net = nn.Sequential(nn.Conv2d(3, 64, kernel_size=7, stride=2, padding=3),
                                 nn.BatchNorm2d(64),
                                 nn.ReLU(inplace=True),
                                 nn.MaxPool2d(3, stride=2, ceil_mode=True),
                                 nn.Conv2d(64, 64, kernel_size=1),
                                 nn.BatchNorm2d(64),
                                 nn.ReLU(inplace=True),
                                 nn.Conv2d(64, 192, kernel_size=3, padding=1),
                                 nn.BatchNorm2d(192),
                                 nn.ReLU(inplace=True),
                                 nn.MaxPool2d(3, stride=2, ceil_mode=True),
                                 Inception(192, 64, 96, 128, 16, 32, 32),
                                 Inception(256, 128, 128, 192, 32, 96, 64),
                                 nn.MaxPool2d(3, stride=2, ceil_mode=True),
                                 Inception(480, 192, 96, 208, 16, 48, 64),
                                 Inception(512, 160, 112, 224, 24, 64, 64),
                                 Inception(512, 128, 128, 256, 24, 64, 64),
                                 Inception(512, 112, 144, 288, 32, 64, 64),
                                 Inception(528, 256, 160, 320, 32, 128, 128),
                                 nn.MaxPool2d(3, stride=2, ceil_mode=True),
                                 Inception(832, 256, 160, 320, 32, 128, 128),
                                 Inception(832, 384, 192, 384, 48, 128, 128),
                                 nn.AdaptiveAvgPool2d((1, 1)),
                                 nn.Flatten(),
                                 nn.Linear(1024, 256),
                                 nn.BatchNorm1d(256),
                                 nn.ReLU(inplace=True),
                                 nn.Linear(256, num_classes))
        # init_weights was accepted but never used; apply it here.
        if init_weights:
            self._initialize_weights()

    def forward(self, x):
        return self.net(x)

    def _initialize_weights(self):
        for m in self.modules():
            if isinstance(m, nn.Conv2d):
                nn.init.kaiming_normal_(m.weight, mode='fan_out', nonlinearity='relu')  # He (Kaiming) initialization
                if m.bias is not None:
                    nn.init.constant_(m.bias, 0)
            elif isinstance(m, nn.Linear):
                nn.init.normal_(m.weight, 0, 0.01)  # draw weights from a normal distribution (mean 0, std 0.01)
                nn.init.constant_(m.bias, 0)
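
Because each `Inception` block concatenates its four branches along the channel dimension, its output has `ch1x1 + ch3x3 + ch5x5 + ch1x1p` channels, and that sum has to equal the `in_channels` of the next block. The following sketch checks the chain for the configuration used in `GoogLeNet`. It is plain Python with no PyTorch dependency, and the names `inception_cfgs` and `inception_out_channels` are introduced here only for illustration.

```python
# (in_channels, ch1x1, ch3x3m, ch3x3, ch5x5m, ch5x5, ch1x1p), copied from GoogLeNet
inception_cfgs = [
    (192, 64, 96, 128, 16, 32, 32),
    (256, 128, 128, 192, 32, 96, 64),
    (480, 192, 96, 208, 16, 48, 64),
    (512, 160, 112, 224, 24, 64, 64),
    (512, 128, 128, 256, 24, 64, 64),
    (512, 112, 144, 288, 32, 64, 64),
    (528, 256, 160, 320, 32, 128, 128),
    (832, 256, 160, 320, 32, 128, 128),
    (832, 384, 192, 384, 48, 128, 128),
]


def inception_out_channels(cfg):
    """Channels after concatenating the four branches (one term per branch)."""
    _, ch1x1, _, ch3x3, _, ch5x5, ch1x1p = cfg
    return ch1x1 + ch3x3 + ch5x5 + ch1x1p


outs = [inception_out_channels(cfg) for cfg in inception_cfgs]

# Each block must accept what the previous block produces; the max-pooling
# layers in between change the spatial size but not the channel count.
for prev_out, cfg in zip(outs, inception_cfgs[1:]):
    assert prev_out == cfg[0]

print(outs)  # [256, 480, 512, 512, 512, 528, 832, 832, 1024]
```

The last value, 1024, matches the input size of `nn.Linear(1024, 256)` after global average pooling and flattening.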

def GetClassName(class_name):
    # Return the <name> of the first <object> in the first annotation file found for this class.
    bbox_dir = os.path.join(path_root, 'ILSVRC2012_bbox_train_dogs', class_name)
    xml_file = os.listdir(bbox_dir)[0]
    tree = ET.ElementTree(file=os.path.join(bbox_dir, xml_file))
    return tree.find('object').find('name').text

path_root = "data/Imagenet2012/"

transform = T.Compose([
    T.RandomResizedCrop(224),
    T.RandomHorizontalFlip(),
    T.ToTensor(),
    T.Normalize([0.4777, 0.4455, 0.3886], [0.2602, 0.2534, 0.2558])])

image_net_train = datasets.ImageFolder(os.path.join(path_root, 'ILSVRC2012_img_train_t3'),
                                       transform=transform)
image_net_train_loader = DataLoader(image_net_train, batch_size=100, shuffle=True)

def train(learning_rate=1e-2, n_epochs=10, model_path=None, net=None, num_classes=120, train_offset=0):
    device = (torch.device('cuda') if torch.cuda.is_available() else torch.device('cpu'))
    print(f"Training on device {device}.")
    if net is None:
        net = AlexNet
    model = net(num_classes=num_classes).to(device=device)
    if model_path is not None:
        # Resume from a saved state_dict; map_location lets a GPU checkpoint load on a CPU-only machine.
        model.load_state_dict(torch.load(model_path, map_location=device))
    optimizer = optim.SGD(model.parameters(), learning_rate)
    loss_fn = nn.CrossEntropyLoss()
    save_dir = "model/ImageNet/" + net.__name__
    for epoch in range(1, n_epochs + 1):
        loss_train = 0.0
        for imgs, labels in image_net_train_loader:
            imgs = imgs.to(device=device)
            labels = labels.to(device=device)
            outputs = model(imgs)
            loss = loss_fn(outputs, labels)
            optimizer.zero_grad()
            loss.backward()
            optimizer.step()
            loss_train += loss.item()
        # Report the mean batch loss and save a checkpoint after every epoch.
        print('{} Epoch: {},Training loss: {}'.format(datetime.datetime.now(), epoch,
                                                      loss_train / len(image_net_train_loader)))
        os.makedirs(save_dir, exist_ok=True)
        torch.save(model.state_dict(),
                   save_dir + "/epoch_" + str(epoch + train_offset) + ".model")
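
The `train_offset` argument only affects checkpoint file names: when resuming from an earlier run, it keeps the epoch numbering continuous instead of starting again at `epoch_1`. A small sketch of the naming rule follows; `checkpoint_path` is a hypothetical helper written for this illustration and is not part of the script.

```python
import os


def checkpoint_path(net_name, epoch, train_offset=0, root=os.path.join("model", "ImageNet")):
    """Build the checkpoint path the same way train() names its files."""
    return os.path.join(root, net_name, "epoch_" + str(epoch + train_offset) + ".model")


# Resuming GoogLeNet from epoch_12.model for 50 more epochs with train_offset=12:
first = checkpoint_path("GoogLeNet", epoch=1, train_offset=12)
last = checkpoint_path("GoogLeNet", epoch=50, train_offset=12)

print(os.path.basename(first))  # epoch_13.model
print(os.path.basename(last))   # epoch_62.model
```

If `train_offset` does not match the epoch number of the checkpoint being loaded, existing files may be overwritten or the numbering may skip, so it seems worth keeping the two in sync.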

def predict(image_path, model_path=None, net=None, num_classes=120):
    img_transformer = T.Compose([
        T.Resize((224, 224)),
        T.ToTensor(),
        T.Normalize([0.4777, 0.4455, 0.3886], [0.2602, 0.2534, 0.2558])])
    device = (torch.device('cuda') if torch.cuda.is_available() else torch.device('cpu'))
    print(f"Predicting on device {device}.")
    # PNG files may carry an alpha channel; the models expect 3-channel RGB input.
    img = Image.open(image_path).convert('RGB')
    img = img_transformer(img).to(device=device)
    if net is None:
        net = AlexNet
    model = net(num_classes=num_classes).to(device=device)
    if model_path is not None:
        model.load_state_dict(torch.load(model_path, map_location=device))
    model.eval()
    # No gradients are needed for inference.
    with torch.no_grad():
        output = model(img.unsqueeze(0))
    _, predicted = torch.max(output, dim=1)
    return GetClassName(image_net_train.classes[predicted.item()])

if __name__ == "__main__":
    # predict
    # print(predict("img_16.png", "model/ImageNet/ResNet18/epoch_700.model", net=ResNet18))
    # print(predict("img_5.png", "model/ImageNet/GoogLeNet/epoch_12.model", net=GoogLeNet))

    # train
    # train(model_path="model/ImageNet/AlexNet/epoch_1120.model", n_epochs=1000)  # 4.786365736098516
    # train(model_path="model/ImageNet/ResNet50/epoch_3.model", net=ResNet50, n_epochs=10, train_offset=6)
    # train(model_path="model/ImageNet/ResNet18/epoch_700.model", net=ResNet18, n_epochs=50, train_offset=700)
    train(model_path="model/ImageNet/GoogLeNet/epoch_12.model", net=GoogLeNet, n_epochs=50, train_offset=12)


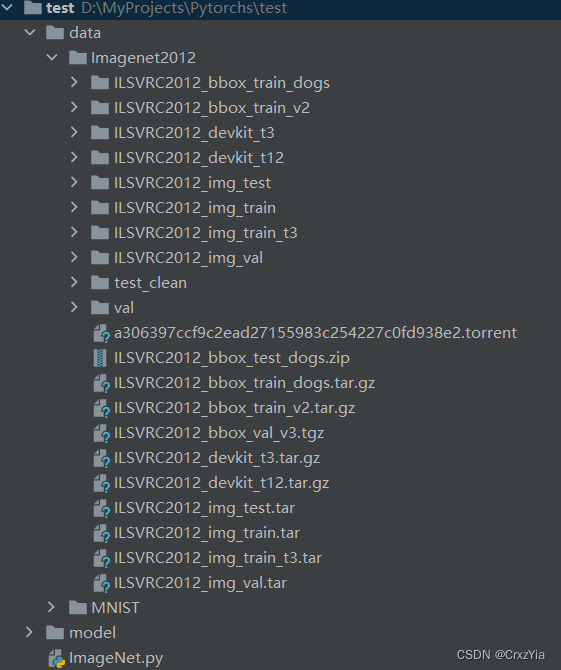

Directory structure (the dataset is ImageNet 2012)

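Copyright notice: This is an original article by weixin_41276201, licensed under CC 4.0 BY-SA. When reposting, please include a link to the original source and this notice.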