Python: Parsing Web Pages with XPath

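XPath (XML Path Language) is a query language for selecting nodes in XML and HTML documents. Here it is used from Python through the lxml library.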

Practicing XPath

```python
html_doc = """
<div>
<ul>
<li class="item-0"><a href="www.baidu.com">baidu</a>
<li class="item-1 one" name="first"><a href="https://blog.csdn.net/qq_25343557">myblog</a>
<li class="item-1 two" name="first"><a href="https://blog.csdn.net/qq_25343557">myblog2</a>
<li class="item-2"><a href="https://www.csdn.net/">csdn</a>
<li class="item-3"><a href="https://hao.360.cn/?a1004">bbb</a>
<li class="aaa"><a href="https://hao.360.cn/?a1004">aaa</a>
"""
```

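Before using XPath, the page has to be parsed (initialized) into an element tree with `etree.HTML`. The HTML parser is lenient, so the unclosed `<li>`, `<ul>` and `<div>` tags above are repaired automatically.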

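```python
from lxml import etree

# Parse (initialize) the page into an element tree
html = etree.HTML(html_doc)

print(html)  # e.g. <Element html at 0x1f841388608> (the address varies)
```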
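To see what "initializing" does to a fragment like `html_doc`, the sketch below parses a shortened, made-up fragment with the same unclosed tags and inspects the result. It uses the name `demo` rather than `html` so it does not overwrite the variable used in the rest of the walkthrough.

```python
from lxml import etree

# A shortened fragment with unclosed <li>, <ul> and <div> tags (illustrative input)
fragment = '<div><ul><li class="item-0"><a href="#">baidu</a><li class="item-1"><a href="#">myblog</a>'
demo = etree.HTML(fragment)

# Show the repaired markup: the parser wraps it in <html><body> and adds missing end tags
print(etree.tostring(demo, encoding="unicode"))

root_tag = demo.tag
# Each <li> start tag closes the previous <li>, so both end up as siblings under <ul>
li_count = len(demo.xpath("/html/body/div/ul/li"))
second = demo.xpath("//li[2]/a/text()")

print(root_tag)  # html
print(li_count)  # 2
print(second)    # ['myblog']
```

Because every `<li>` becomes a sibling of the others, the positional queries later in this article (`[2]`, `last()`, `position()`) count across all six items of `html_doc`.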

Get all `a` elements

```python
# // selects descendants at any depth, / selects direct children only
lis = html.xpath("//li/a")
print(len(lis))  # 6
```
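The difference between `/` and `//` only shows up when something sits between the two elements. A minimal sketch, using a small made-up document with a `<span>` wrapped around one link:

```python
from lxml import etree

# Hypothetical document: the second <a> is nested inside a <span>
doc = etree.HTML("""
<ul>
  <li><a href="#1">direct</a></li>
  <li><span><a href="#2">nested</a></span></li>
</ul>
""")

# / steps only to direct children, so the nested <a> is missed
children = doc.xpath("//li/a/text()")
# // steps to descendants at any depth, so both <a> elements are found
descendants = doc.xpath("//li//a/text()")

print(children)     # ['direct']
print(descendants)  # ['direct', 'nested']
```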

Filter elements by an attribute value

```python
# Filter elements by an attribute value: <li> whose class is exactly "item-3"
lis = html.xpath("//li[@class='item-3']")
print(len(lis))  # 1
```
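`[@class='...']` only filters elements; to read an attribute's value itself, end the path with `/@name`, or select the element and call lxml's `.get()`. A small sketch on a trimmed copy of the sample data:

```python
from lxml import etree

doc = etree.HTML("""
<ul>
  <li class="item-0"><a href="www.baidu.com">baidu</a></li>
  <li class="item-2"><a href="https://www.csdn.net/">csdn</a></li>
</ul>
""")

# A trailing /@href returns the attribute values as strings
hrefs = doc.xpath("//li[@class='item-2']/a/@href")

# Alternatively, select the element and read the attribute with .get()
link = doc.xpath("//li[@class='item-0']/a")[0]
href = link.get("href")

print(hrefs)  # ['https://www.csdn.net/']
print(href)   # www.baidu.com
```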

Get the values of the `class` attribute

```python
# Find the <a> elements by href, step up to the parent <li> with .., then read @class
lis = html.xpath("//a[@href='https://hao.360.cn/?a1004']/../@class")
for i in lis:
    print(i)  # item-3, then aaa
```
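`..` is shorthand for the `parent::node()` axis. The explicit form `parent::li` can additionally require the parent to be an `<li>`, and lxml elements also expose `getparent()` outside XPath. A sketch comparing the three on two items from the sample data:

```python
from lxml import etree

doc = etree.HTML("""
<ul>
  <li class="item-3"><a href="https://hao.360.cn/?a1004">bbb</a></li>
  <li class="aaa"><a href="https://hao.360.cn/?a1004">aaa</a></li>
</ul>
""")

target = "//a[@href='https://hao.360.cn/?a1004']"

# ".." abbreviates parent::node()
short = doc.xpath(target + "/../@class")
# The explicit axis also checks that the parent is an <li>
explicit = doc.xpath(target + "/parent::li/@class")
# The same result through the lxml element API
via_api = [a.getparent().get("class") for a in doc.xpath(target)]

print(short)     # ['item-3', 'aaa']
print(explicit)  # ['item-3', 'aaa']
print(via_api)   # ['item-3', 'aaa']
```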

Get the text inside `a` tags

```python
# text() returns the text nodes directly inside each <a>
lis = html.xpath("//a/text()")
for i in lis:
    print(i)  # baidu, myblog, myblog2, csdn, bbb, aaa (one per line)
```
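`text()` only returns an element's own text nodes; text inside child tags is skipped. When a link contains markup, `//text()` or the `string()` function collects everything. A sketch on a made-up link with a nested `<b>`:

```python
from lxml import etree

doc = etree.HTML('<ul><li><a href="#">my<b>blog</b></a></li></ul>')

# Only the <a> element's own text; "blog" lives inside <b> and is skipped
own_text = doc.xpath("//a/text()")
# Text nodes of the <a> and all its descendants
all_text = doc.xpath("//a//text()")
# string() concatenates all descendant text into one string
joined = doc.xpath("string(//a)")

print(own_text)  # ['my']
print(all_text)  # ['my', 'blog']
print(joined)    # myblog
```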

starts-with: match values that begin with a given prefix

```python
# starts-with: <li> whose class begins with "item-"
lis = html.xpath("//li[starts-with(@class,'item-')]/a/text()")
for a in lis:
    print(a)  # baidu, myblog, myblog2, csdn, bbb (one per line)
```
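lxml implements XPath 1.0, which has `starts-with()` but no `ends-with()` (that function arrived in XPath 2.0). A suffix test can be emulated with `substring()` and `string-length()`; the sketch below matches links whose `href` ends with `.net/`:

```python
from lxml import etree

doc = etree.HTML("""
<ul>
  <li class="item-0"><a href="www.baidu.com">baidu</a></li>
  <li class="item-2"><a href="https://www.csdn.net/">csdn</a></li>
</ul>
""")

# Prefix test: built into XPath 1.0
prefix_match = doc.xpath("//a[starts-with(@href, 'www')]/text()")

# Suffix test: compare the last len('.net/') characters of @href with '.net/'
suffix_match = doc.xpath(
    "//a[substring(@href, string-length(@href) - string-length('.net/') + 1) = '.net/']/text()"
)

print(prefix_match)  # ['baidu']
print(suffix_match)  # ['csdn']
```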

contains: match values that contain a substring (this can return several elements)

```python
# contains: <li> whose class contains the substring "1"
lis = html.xpath("//li[contains(@class,'1')]")
print(len(lis))  # 2
```
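Because `contains()` is a plain substring test, `contains(@class, 'item-1')` would also match a class such as `item-10`. A common XPath 1.0 idiom pads the class list with spaces so that only whole class names match. A sketch with made-up items:

```python
from lxml import etree

doc = etree.HTML("""
<ul>
  <li class="item-1 one">a</li>
  <li class="item-10">b</li>
  <li class="item-1 two">c</li>
</ul>
""")

# Substring match: 'item-1' is also found inside 'item-10'
loose = doc.xpath("//li[contains(@class, 'item-1')]/text()")

# Whole-class match: look for ' item-1 ' inside ' <normalized class list> '
exact = doc.xpath(
    "//li[contains(concat(' ', normalize-space(@class), ' '), ' item-1 ')]/text()"
)

print(loose)  # ['a', 'b', 'c']
print(exact)  # ['a', 'c']
```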

Match on multiple attributes

```python
# Both conditions must hold: class contains "one" AND name is "first"
lis = html.xpath("//li[contains(@class,'one') and (@name = 'first')]/a/text()")
print(lis)  # ['myblog']
```
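Besides `and`, predicates accept `or`; the parentheses around `@name = 'first'` in the query above are optional. A sketch contrasting the two operators on three of the sample items:

```python
from lxml import etree

doc = etree.HTML("""
<ul>
  <li class="item-1 one" name="first"><a href="#">myblog</a></li>
  <li class="item-1 two" name="first"><a href="#">myblog2</a></li>
  <li class="item-2"><a href="#">csdn</a></li>
</ul>
""")

# and: both conditions must hold
both = doc.xpath("//li[contains(@class, 'one') and @name = 'first']/a/text()")
# or: either condition is enough
either = doc.xpath("//li[contains(@class, 'two') or @class = 'item-2']/a/text()")

print(both)    # ['myblog']
print(either)  # ['myblog2', 'csdn']
```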

Select by position: the second one (XPath positions start at 1)

```python
# Select by position: the second <li>
lis = html.xpath("//li[2]/a/text()")
print(lis)  # ['myblog']
```

The last one

```python
# The last <li>
lis = html.xpath("//li[last()]/a/text()")
print(lis)  # ['aaa']
```

The second to last

```python
# The second-to-last <li>
lis = html.xpath("//li[last()-1]/a/text()")
print(lis)  # ['bbb']
```

Positions less than or equal to 3

```python
# The <li> elements at positions 1, 2 and 3
lis = html.xpath("//li[position()<=3]/a/text()")
print(lis)  # ['baidu', 'myblog', 'myblog2']
```
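The positional queries above behave as expected because all six `<li>` elements share one parent. A position in `//li[...]` is counted among the siblings of each parent, whereas `(//li)[...]` counts across the whole document. A sketch with two made-up lists:

```python
from lxml import etree

doc = etree.HTML("""
<div>
  <ul><li>a1</li><li>a2</li></ul>
  <ul><li>b1</li><li>b2</li></ul>
</div>
""")

# //li[2]: every <li> that is the 2nd <li> child of its own parent
per_parent = doc.xpath("//li[2]/text()")
# (//li)[2]: the 2nd <li> in the whole document
whole_doc = doc.xpath("(//li)[2]/text()")
# The same distinction applies to last()
last_per_parent = doc.xpath("//li[last()]/text()")
last_overall = doc.xpath("(//li)[last()]/text()")

print(per_parent)       # ['a2', 'b2']
print(whole_doc)        # ['a2']
print(last_per_parent)  # ['a2', 'b2']
print(last_overall)     # ['b2']
```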

Practicing XPath: the complete script

```python
"""
Case study: practicing XPath
"""

from lxml import etree

html_doc = """
<div>
<ul>
<li class="item-0"><a href="www.baidu.com">baidu</a>
<li class="item-1 one" name="first"><a href="https://blog.csdn.net/qq_25343557">myblog</a>
<li class="item-1 two" name="first"><a href="https://blog.csdn.net/qq_25343557">myblog2</a>
<li class="item-2"><a href="https://www.csdn.net/">csdn</a>
<li class="item-3"><a href="https://hao.360.cn/?a1004">bbb</a>
<li class="aaa"><a href="https://hao.360.cn/?a1004">aaa</a>
"""

# Parse (initialize) the page
html = etree.HTML(html_doc)
print(html)  # e.g. <Element html at 0x1f841388608>

# // selects descendants, / selects direct children
lis = html.xpath("//li/a")
print(len(lis))  # 6

# Filter elements by an attribute value
lis = html.xpath("//li[@class='item-3']")
print(len(lis))  # 1

# Get the class values of the parents of the matching <a> elements
lis = html.xpath("//a[@href='https://hao.360.cn/?a1004']/../@class")
for i in lis:
    print(i)  # item-3 aaa

# Get the text inside <a> tags
lis = html.xpath("//a/text()")
for i in lis:
    print(i)  # baidu myblog myblog2 csdn bbb aaa

# starts-with: class begins with a prefix
lis = html.xpath("//li[starts-with(@class,'item-')]/a/text()")
for a in lis:
    print(a)  # baidu myblog myblog2 csdn bbb

# contains: class contains a substring (several matches)
lis = html.xpath("//li[contains(@class,'1')]")
print(len(lis))  # 2

# Match on multiple attributes
lis = html.xpath("//li[contains(@class,'one') and (@name = 'first')]/a/text()")
print(lis)  # ['myblog']

# Select by position (the second one)
lis = html.xpath("//li[2]/a/text()")
print(lis)  # ['myblog']

# The last one
lis = html.xpath("//li[last()]/a/text()")
print(lis)  # ['aaa']

# The second to last
lis = html.xpath("//li[last()-1]/a/text()")
print(lis)  # ['bbb']

# Positions less than or equal to 3
lis = html.xpath("//li[position()<=3]/a/text()")
print(lis)  # ['baidu', 'myblog', 'myblog2']
```

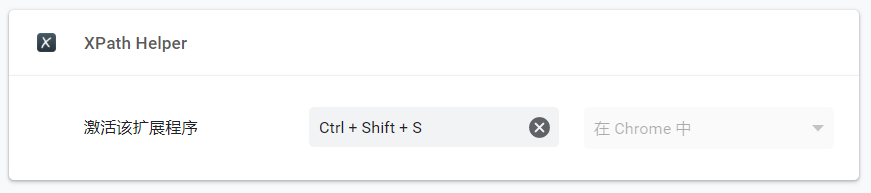

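You can also download an XPath browser extension and simply drag it into Chrome. If that does not work, unzip the extension into a folder and load that folder into Chrome instead (on the `chrome://extensions` page, turn on Developer mode and click "Load unpacked").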

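Alternatively, no extension is needed: in Chrome DevTools, right-click the element in the Elements panel and choose Copy > Copy XPath.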
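A copied path can be pasted straight into `xpath()`. Keep in mind that it reflects the browser's DOM, which can differ from the raw HTML that `requests` downloads (browsers insert `<tbody>` into tables and run JavaScript), so a copied path sometimes needs trimming. lxml can also produce an absolute path for an element it has found, via `getroottree().getpath()`. A sketch; the path below is an illustrative example of the absolute form, not one copied from a real page:

```python
from lxml import etree

page = etree.HTML(
    '<div><ul><li><a href="#1">baidu</a></li><li><a href="#2">myblog</a></li></ul></div>'
)

# An absolute path of the kind "Copy XPath" can produce (illustrative)
copied = "/html/body/div/ul/li[2]/a"
texts = page.xpath(copied + "/text()")

# Ask lxml for the absolute path of an element it has already found
link = page.xpath("//a[text()='myblog']")[0]
generated = page.getroottree().getpath(link)

print(texts)      # ['myblog']
print(generated)  # /html/body/div/ul/li[2]/a
```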

Scraping Python job listings from 51job and saving them to a CSV file

```python
"""
Scrape Python job listings from 51job and save them to a CSV file
"""

import csv
import time

import requests
from lxml import etree

headers = {
    "User-Agent": "Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/78.0.3904.87 Safari/537.36"
}


def first(results):
    """Return the first XPath result with whitespace stripped, or '' if nothing matched."""
    return results[0].strip() if results else ""


# newline="" is what the csv module expects; utf-8-sig adds a BOM so that
# Excel recognizes the (Chinese) text as UTF-8
with open("python_jobs.csv", "w", newline="", encoding="utf-8-sig") as f:
    # A writer that formats each list as one CSV row
    writer = csv.writer(f)
    # Header row
    writer.writerow(["ID", "Job title", "Company", "Salary", "Location", "Posted"])

    i = 1
    for page in range(1, 239):
        response = requests.get(f"https://search.51job.com/list/020000%252C190200%252C030200,000000,0000,00,9,99,python,2,{page}.html?lang=c&postchannel=0000&workyear=99&cotype=99&degreefrom=99&jobterm=99&companysize=99&ord_field=0&dibiaoid=0&line=&welfare=", headers=headers, timeout=10)
        # The result pages are GBK-encoded
        response.encoding = "gbk"

        if response.status_code == 200:
            html = etree.HTML(response.text)
            # Skip the first four div.el matches (presumably not job rows)
            els = html.xpath("//div[@class='el']")[4:]

            for el in els:
                # Paths are relative to el, so they have no leading /;
                # first() keeps only the first match
                jobname = first(el.xpath("p[contains(@class,'t1')]/span/a/@title"))
                jobcom = first(el.xpath("span[@class='t2']/a/@title"))
                jobaddress = first(el.xpath("span[@class='t3']/text()"))
                jobmoney = first(el.xpath("span[@class='t4']/text()"))
                jobdate = first(el.xpath("span[@class='t5']/text()"))

                # Same column order as the header: salary before location
                writer.writerow([i, jobname, jobcom, jobmoney, jobaddress, jobdate])
                i += 1

        print(f"Page {page} done")
        # Pause between requests so the server is not hammered
        time.sleep(1)
```
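Because the live page can change, and requesting 238 pages is slow, it helps to check the row-extraction XPath offline first. The sketch below wraps the selectors used by the scraper above in a hypothetical `parse_rows()` function and runs it on made-up markup shaped the way those selectors expect; the sample is not a copy of a real 51job page.

```python
from lxml import etree


def first(results):
    """Return the first XPath result with whitespace stripped, or '' if nothing matched."""
    return results[0].strip() if results else ""


def parse_rows(page_html):
    """Hypothetical helper: extract [title, company, salary, location, date] per div.el row."""
    rows = []
    # @class='el' is an exact match, so the "el title" header row is not selected
    for el in etree.HTML(page_html).xpath("//div[@class='el']"):
        rows.append([
            first(el.xpath("p[contains(@class,'t1')]/span/a/@title")),
            first(el.xpath("span[@class='t2']/a/@title")),
            first(el.xpath("span[@class='t4']/text()")),  # salary
            first(el.xpath("span[@class='t3']/text()")),  # location
            first(el.xpath("span[@class='t5']/text()")),  # posting date
        ])
    return rows


# Made-up markup for testing only
SAMPLE = """
<div class="dw_table">
  <div class="el title"><span class="t2">Company</span></div>
  <div class="el">
    <p class="t1"><span><a title="Python Developer" href="#">Python Developer</a></span></p>
    <span class="t2"><a title="Example Co." href="#">Example Co.</a></span>
    <span class="t3">Shanghai</span>
    <span class="t4">10k-15k/month</span>
    <span class="t5">11-20</span>
  </div>
</div>
"""

rows = parse_rows(SAMPLE)
print(rows)  # [['Python Developer', 'Example Co.', '10k-15k/month', 'Shanghai', '11-20']]
```

Once the selectors return the expected rows on saved or sample HTML, the same function can be fed `response.text` inside the page loop.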

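Copyright notice: this article is an original work by what_to, licensed under CC 4.0 BY-SA. When reposting, please include a link to the original source and this notice.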