The training and prediction code is as follows:

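    # Imports are assumed here; the original post did not show them.
    from keras.models import Sequential
    from keras.layers import Dense

    # One hidden layer (64 ReLU units) on flattened 28x28 inputs, then a 10-way softmax.
    model = Sequential()
    model.add(Dense(units=64,
                    input_dim=784,
                    kernel_initializer='normal',
                    activation='relu'))
    model.add(Dense(units=10,
                    kernel_initializer='normal',
                    activation='softmax'))

    model.compile(loss='categorical_crossentropy',
                  optimizer='adam',
                  metrics=['accuracy'])

    # Train on the normalized images and one-hot labels; validate on the test set.
    model.fit(x_train_normalize,
              y_trainOnehot,
              epochs=20,
              batch_size=64,
              verbose=1,
              validation_data=(x_test_normalize, y_testOnehot))

    # Predict class probabilities, then take the most likely class per sample.
    y_predict = model.predict(x_train_normalize)
    y_predictlabel = y_predict.argmax(1)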

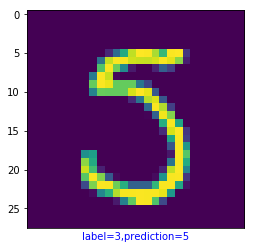

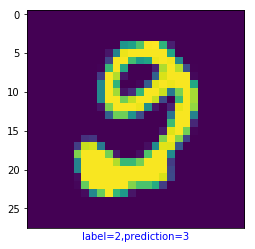

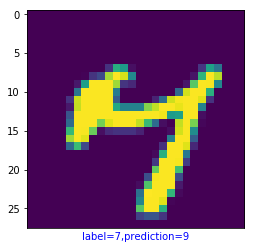

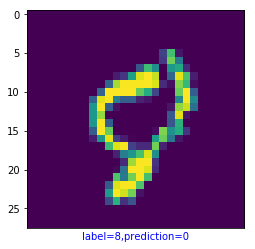

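All the other handwritten digits are classified correctly; only the digit 0 is never classified correctly, and the overall accuracy is roughly 90%.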
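A per-class breakdown makes this kind of failure visible right away. The following is a minimal sketch, assuming integer label arrays; `per_class_accuracy` and the toy arrays are hypothetical and not part of the original code. In this post's setting, `y_pred` would be `y_predictlabel` and `y_true` would be the integer labels before one-hot encoding.

```python
import numpy as np

def per_class_accuracy(y_true, y_pred, num_classes=10):
    """Return the fraction of correctly classified samples for each class."""
    y_true = np.asarray(y_true)
    y_pred = np.asarray(y_pred)
    acc = np.full(num_classes, np.nan)  # NaN marks classes with no samples
    for c in range(num_classes):
        mask = y_true == c
        if mask.any():
            acc[c] = np.mean(y_pred[mask] == c)
    return acc

# Toy data (illustrative only): every 0 is predicted as 6.
y_true = np.array([0, 0, 1, 2, 0, 1, 2])
y_pred = np.array([6, 6, 1, 2, 6, 1, 2])
acc = per_class_accuracy(y_true, y_pred, num_classes=3)
print(acc)
```

A class whose entry is 0.0 while the others are near 1.0 points to the pattern described here, where one digit is lost entirely.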

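Analysis:

1. Each time the code was re-run in the same Spyder session, Keras appeared to continue training from the parameters left by the previous run rather than starting over. Even after changing the number of nodes (`units` from 64 to 128) and retraining, the accuracy and the classification results were essentially the same as before. Restarting Spyder reinitialized the model, and training started from scratch.

2. In the earlier run, accuracy hovered just above 0.89 no matter how many epochs were run, because every 0 was misclassified (I wrote code to iterate over the images labeled 0, and none of them was classified correctly). A likely cause is that training reached a local optimum early on and gradient descent could not escape it. After retraining, the optimization path no longer passed through that local optimum, and accuracy rose sharply to 0.97 to 0.98.

3. Inspecting the misclassified images helps in evaluating the classifier and tracking down the cause of errors. A model that classifies well should be saved, because retraining will not necessarily reach the same parameters again.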
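Collecting the indices of misclassified samples is the first step toward looking at the images themselves. This is a minimal sketch under the assumption that true and predicted labels are integer arrays; `misclassified_indices` and the toy arrays are hypothetical names introduced for illustration.

```python
import numpy as np

def misclassified_indices(y_true, y_pred, label=None):
    """Return indices where the prediction differs from the true label.

    If `label` is given, only samples whose true label equals it are kept.
    """
    y_true = np.asarray(y_true)
    y_pred = np.asarray(y_pred)
    wrong = y_true != y_pred
    if label is not None:
        wrong &= (y_true == label)
    return np.flatnonzero(wrong)

# Toy data (illustrative only).
y_true = np.array([0, 4, 9, 0, 4])
y_pred = np.array([6, 4, 4, 0, 9])
all_wrong = misclassified_indices(y_true, y_pred)
wrong_fours = misclassified_indices(y_true, y_pred, label=4)
print(all_wrong, wrong_fours)
```

The returned indices can then be used to pull the corresponding images out of the dataset and display them one by one.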

Attached: the training curve.

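4. In later retraining runs, there was another case in which every 1 was misclassified; accuracy converged at about 0.86 and stayed stable at that local optimum. Another time, all 9s were misclassified, as were all 3s. One run started directly at an accuracy of 0.95, and another converged at 0.77.

One idea is to increase the number of nodes and see whether that reduces this behavior. Another is to check whether there is a parameter for the training step size (the learning rate) that could avoid it. Experiments showed that increasing the number of nodes does not solve the problem.

Or could a few mislabeled images cause this? No: if the algorithm is robust, it should still train well even with a small number of wrong labels, for example reaching 99% accuracy. Since this is only a basic algorithm, further study of more advanced methods should lead to better results.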
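The plateaus near 0.89 and 0.86 are roughly what one would expect when a single digit class is lost completely: with ten classes of similar size, each class is about a tenth of the data, so accuracy cannot exceed about 0.9. The sketch below works this out for a toy, perfectly balanced label set; `accuracy_ceiling` is a hypothetical helper, and the real MNIST class frequencies are not exactly equal.

```python
from collections import Counter

def accuracy_ceiling(labels, failed_class):
    """Best possible accuracy if every sample of `failed_class` is misclassified."""
    counts = Counter(labels)
    return 1.0 - counts[failed_class] / len(labels)

# Toy, perfectly balanced 10-class label set (illustrative only).
labels = [digit for digit in range(10) for _ in range(100)]
ceiling = accuracy_ceiling(labels, failed_class=0)
print(ceiling)
```

Passing the actual training labels instead of the toy list would give the ceiling for a specific digit in the real data.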

| Layer (type) | Output Shape | Param # |
|---|---|---|
| dense (Dense) | (None, 64) | 50240 |
| dense_1 (Dense) | (None, 10) | 650 |

Total params: 50,890

Trainable params: 50,890

Non-trainable params: 0

None
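The parameter counts in the summary can be checked by hand: a fully connected layer has one weight per input-unit pair plus one bias per unit. A small sketch of that arithmetic follows; `dense_params` is a hypothetical helper, not a Keras function.

```python
def dense_params(input_dim, units):
    """Number of trainable parameters in a dense layer: weights plus biases."""
    return input_dim * units + units

hidden = dense_params(784, 64)  # 784 inputs -> 64 hidden units
output = dense_params(64, 10)   # 64 hidden units -> 10 classes
total = hidden + output
print(hidden, output, total)
```

The three values match the `Param #` column and the `Total params` line of the summary.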

Train on 55000 samples, validate on 10000 samples

Epoch 1/40

55000/55000 [] - 5s 90us/step - loss: 8.9065 - acc: 0.4139 - val_loss: 5.2011 - val_acc: 0.6481

Epoch 2/40

55000/55000 [] - 4s 80us/step - loss: 4.3168 - acc: 0.7000 - val_loss: 3.1827 - val_acc: 0.7700

Epoch 3/40

55000/55000 [] - 5s 82us/step - loss: 3.0196 - acc: 0.7833 - val_loss: 2.8031 - val_acc: 0.7997

Epoch 4/40

55000/55000 [] - 4s 78us/step - loss: 2.7125 - acc: 0.8067 - val_loss: 2.6310 - val_acc: 0.8126

Epoch 5/40

55000/55000 [] - 5s 91us/step - loss: 2.5569 - acc: 0.8199 - val_loss: 2.5267 - val_acc: 0.8220

Epoch 6/40

55000/55000 [] - 5s 84us/step - loss: 2.4495 - acc: 0.8284 - val_loss: 2.4676 - val_acc: 0.8269

Epoch 7/40

55000/55000 [] - 4s 80us/step - loss: 2.3611 - acc: 0.8359 - val_loss: 2.4014 - val_acc: 0.8301

Epoch 8/40

55000/55000 [] - 4s 80us/step - loss: 2.2927 - acc: 0.8410 - val_loss: 2.3303 - val_acc: 0.8354

Epoch 9/40

55000/55000 [] - 4s 79us/step - loss: 2.2340 - acc: 0.8454 - val_loss: 2.3164 - val_acc: 0.8367

Epoch 10/40

55000/55000 [] - 4s 81us/step - loss: 2.1884 - acc: 0.8496 - val_loss: 2.2792 - val_acc: 0.8384

Epoch 11/40

55000/55000 [] - 4s 79us/step - loss: 2.1490 - acc: 0.8527 - val_loss: 2.2635 - val_acc: 0.8404

Epoch 12/40

55000/55000 [] - 4s 82us/step - loss: 2.1119 - acc: 0.8558 - val_loss: 2.2244 - val_acc: 0.8420

Epoch 13/40

55000/55000 [] - 4s 79us/step - loss: 2.0788 - acc: 0.8587 - val_loss: 2.2115 - val_acc: 0.8442

Epoch 14/40

55000/55000 [] - 4s 78us/step - loss: 2.0466 - acc: 0.8604 - val_loss: 2.1835 - val_acc: 0.8438

Epoch 15/40

55000/55000 [] - 4s 76us/step - loss: 2.0176 - acc: 0.8631 - val_loss: 2.1605 - val_acc: 0.8460

Epoch 16/40

55000/55000 [] - 4s 75us/step - loss: 1.5927 - acc: 0.8860 - val_loss: 0.7900 - val_acc: 0.9276

Epoch 17/40

55000/55000 [] - 4s 76us/step - loss: 0.5693 - acc: 0.9472 - val_loss: 0.6694 - val_acc: 0.9387

Epoch 18/40

55000/55000 [] - 4s 76us/step - loss: 0.4806 - acc: 0.9554 - val_loss: 0.6469 - val_acc: 0.9389

Epoch 19/40

55000/55000 [] - 4s 78us/step - loss: 0.4328 - acc: 0.9591 - val_loss: 0.6157 - val_acc: 0.9425

Epoch 20/40

55000/55000 [] - 5s 85us/step - loss: 0.3973 - acc: 0.9627 - val_loss: 0.5938 - val_acc: 0.9430

Epoch 21/40

55000/55000 [] - 4s 76us/step - loss: 0.3701 - acc: 0.9653 - val_loss: 0.5674 - val_acc: 0.9459

Epoch 22/40

55000/55000 [] - 4s 76us/step - loss: 0.3477 - acc: 0.9671 - val_loss: 0.5595 - val_acc: 0.9455

Epoch 23/40

55000/55000 [] - 4s 78us/step - loss: 0.3258 - acc: 0.9692 - val_loss: 0.5545 - val_acc: 0.9463

Epoch 24/40

55000/55000 [] - 4s 76us/step - loss: 0.3097 - acc: 0.9705 - val_loss: 0.5417 - val_acc: 0.9471

Epoch 25/40

55000/55000 [] - 4s 76us/step - loss: 0.2945 - acc: 0.9728 - val_loss: 0.5328 - val_acc: 0.9476

Epoch 26/40

55000/55000 [] - 4s 75us/step - loss: 0.2802 - acc: 0.9742 - val_loss: 0.5176 - val_acc: 0.9473

Epoch 27/40

55000/55000 [] - 4s 76us/step - loss: 0.2685 - acc: 0.9757 - val_loss: 0.5082 - val_acc: 0.9488

Epoch 28/40

55000/55000 [] - 4s 77us/step - loss: 0.2583 - acc: 0.9765 - val_loss: 0.5123 - val_acc: 0.9482

Epoch 29/40

55000/55000 [] - 4s 76us/step - loss: 0.2496 - acc: 0.9777 - val_loss: 0.4806 - val_acc: 0.9500

Epoch 30/40

55000/55000 [] - 4s 78us/step - loss: 0.2419 - acc: 0.9784 - val_loss: 0.4903 - val_acc: 0.9499

Epoch 31/40

55000/55000 [] - 4s 75us/step - loss: 0.2317 - acc: 0.9796 - val_loss: 0.4717 - val_acc: 0.9513

Epoch 32/40

55000/55000 [] - 4s 75us/step - loss: 0.2243 - acc: 0.9806 - val_loss: 0.4846 - val_acc: 0.9497

Epoch 33/40

55000/55000 [] - 4s 81us/step - loss: 0.2163 - acc: 0.9811 - val_loss: 0.4584 - val_acc: 0.9526

Epoch 34/40

55000/55000 [] - 4s 74us/step - loss: 0.2092 - acc: 0.9818 - val_loss: 0.4640 - val_acc: 0.9519

Epoch 35/40

55000/55000 [] - 5s 86us/step - loss: 0.2030 - acc: 0.9821 - val_loss: 0.4639 - val_acc: 0.9518

Epoch 36/40

55000/55000 [] - 5s 86us/step - loss: 0.1977 - acc: 0.9831 - val_loss: 0.4601 - val_acc: 0.9525

Epoch 37/40

55000/55000 [] - 5s 82us/step - loss: 0.1910 - acc: 0.9843 - val_loss: 0.4565 - val_acc: 0.9532

Epoch 38/40

55000/55000 [] - 4s 77us/step - loss: 0.1854 - acc: 0.9848 - val_loss: 0.4555 - val_acc: 0.9519

Epoch 39/40

55000/55000 [] - 4s 75us/step - loss: 0.1819 - acc: 0.9851 - val_loss: 0.4562 - val_acc: 0.9538

Epoch 40/40

55000/55000 [] - 4s 76us/step - loss: 0.1788 - acc: 0.9855 - val_loss: 0.4520 - val_acc: 0.9516

For example:

img no: 103

img no: 4433

Isn't this a 4?

img no: 4220

Is this a 9?

img no: 2080