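I have been working on MTCNN-related things lately, so I cloned a copy from a well-known GitHub repository. Unfortunately I am clumsy, and it threw errors everywhere when I tried to run it. My questions on the forums went unanswered, so all I can do is take notes as I go and hope I last until the day it finally runs = =.

Reference blog post (CSDN): https://blog.csdn.net/weixin_36474809/article/details/82752199

Code for the post: https://github.com/AITTSMD/MTCNN-Tensorflow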

README

- Download Wider Face Training part only from Official Website, unzip to replace WIDER_train and put it into prepare_data folder.
- Download landmark training data from [here](http://mmlab.ie.cuhk.edu.hk/archive/CNN_FacePoint.htm), unzip and put them into prepare_data folder.
- Run prepare_data/gen_12net_data.py to generate training data (Face Detection Part) for PNet.
- Run gen_landmark_aug_12.py to generate training data (Face Landmark Detection Part) for PNet.
- Run gen_imglist_pnet.py to merge two parts of training data.
- Run gen_PNet_tfrecords.py to generate tfrecord for PNet.
- After training PNet, run gen_hard_example to generate training data (Face Detection Part) for RNet.
- Run gen_landmark_aug_24.py to generate training data (Face Landmark Detection Part) for RNet.
- Run gen_imglist_rnet.py to merge two parts of training data.
- Run gen_RNet_tfrecords.py to generate tfrecords for RNet. (You should run this script four times to generate tfrecords of neg, pos, part and landmark respectively.)
- After training RNet, run gen_hard_example to generate training data (Face Detection Part) for ONet.
- Run gen_landmark_aug_48.py to generate training data (Face Landmark Detection Part) for ONet.
- Run gen_imglist_onet.py to merge two parts of training data.
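Since the order of the README steps matters, it can help to keep it written down as data. The following is only a sketch: the script names are copied from the README above, while `PIPELINE` and `steps_for` are hypothetical names made up for this illustration, and the training runs between stages are not included.

```python
# Data-preparation scripts in README order, grouped by the network they feed.
# Training (PNet, then RNet) happens between the groups and is not listed.
PIPELINE = [
    ("PNet", "gen_12net_data.py"),
    ("PNet", "gen_landmark_aug_12.py"),
    ("PNet", "gen_imglist_pnet.py"),
    ("PNet", "gen_PNet_tfrecords.py"),
    ("RNet", "gen_hard_example"),        # run after PNet has been trained
    ("RNet", "gen_landmark_aug_24.py"),
    ("RNet", "gen_imglist_rnet.py"),
    ("RNet", "gen_RNet_tfrecords.py"),   # README: run four times (neg, pos, part, landmark)
    ("ONet", "gen_hard_example"),        # run after RNet has been trained
    ("ONet", "gen_landmark_aug_48.py"),
    ("ONet", "gen_imglist_onet.py"),
]


def steps_for(stage):
    """Return the scripts for one stage ("PNet", "RNet" or "ONet"), in order."""
    return [script for name, script in PIPELINE if name == stage]


if __name__ == "__main__":
    for stage in ("PNet", "RNet", "ONet"):
        print(stage, "->", ", ".join(steps_for(stage)))
```

If a later step fails with missing or empty inputs, the list makes it easy to see which earlier script to rerun.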

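Let the suffering begin

Following the README, first download the datasets (they are on the official sites; if that is slow, use a download manager such as Xunlei, and check that you end up with the right number of files), then put them in the corresponding folder (prepare_data).

If needed, a trimmed-down copy is available: https://pan.baidu.com/s/1mf0hM5VqtpdMfTpf2VRELA extraction code: kuqw (thanks to whoever shared it online). I still recommend downloading the original files, even though they are large.

Alternatively (update): link: https://pan.baidu.com/s/1LIYlK5sVx4qsK9tvEuJ4cw extraction code: 2yvx

Before getting to the code-specific problems, there are a few common errors worth knowing about: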

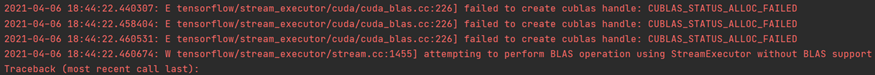

1.

This is a GPU memory problem. Manually cap the fraction of GPU memory the process is allowed to use:

import tensorflow as tf

gpu_options = tf.compat.v1.GPUOptions(per_process_gpu_memory_fraction=0.333)

sess = tf.compat.v1.Session(config=tf.compat.v1.ConfigProto(gpu_options=gpu_options))

2.

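For "no module" style errors, here is what I found after some searching:

According to the official announcement, TensorFlow 2.x changed substantially compared with 1.x. tf.contrib was removed from the core TensorFlow repository and build process, because the contrib module had grown beyond what could be maintained and supported in a single repository. Larger projects are better maintained separately, while smaller extensions are gradually folded into the TensorFlow core code.

If you want to use TensorFlow 1.x functions or methods, TensorFlow 2.x keeps a compatibility module for them.

Put simply, change code such as tf.[modulename] (where modulename is the function being used) to:

tf.compat.v1.[modulename](). This resolves a good share of the compatibility problems.

Code that uses tf.contrib.[modulename] is a different matter, and some small problems remain, for example tensorflow.compat.v1.contrib.slim and tensorflow.compat.v1.contrib.tensorboard.plugins.projector cannot be found.

That is because these were not carried over into the compat.v1 package. They have to be installed separately (slim is published on PyPI as tf-slim) and then imported like this: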
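The renaming described above can also be done mechanically. This is a minimal sketch and not the official migration tool: `to_compat_v1` is a hypothetical helper written for this post, and the list of names to rewrite is whatever your own error messages point at. It only touches plain `tf.<name>` references and leaves `tf.contrib` code alone.

```python
import re


def to_compat_v1(source, names):
    """Rewrite `tf.<name>` to `tf.compat.v1.<name>` for each name in `names`.

    Text that already reads `tf.compat.v1.<name>` is left untouched, so the
    function can safely be applied more than once.
    """
    if not names:
        return source
    alternatives = "|".join(re.escape(name) for name in names)
    # (?<![\w.]) stops matches inside longer identifiers such as `mytf.Session`;
    # the trailing \b stops `Session` from matching `SessionRunHook`.
    pattern = re.compile(r"(?<![\w.])tf\.(" + alternatives + r")\b")
    return pattern.sub(r"tf.compat.v1.\1", source)


if __name__ == "__main__":
    old = "x = tf.placeholder(tf.float32)\nsess = tf.Session()"
    print(to_compat_v1(old, ["placeholder", "Session"]))
```

Review the result by hand afterwards: a textual rewrite cannot tell whether a given function really has a compat.v1 equivalent.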

import tf_slim as slim

from tensorboard.plugins import projector

3.

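This one looks like a version mismatch between TensorFlow and CUDA. Look up the relevant posts and check whether the error comes from incompatible versions. (I am using tf 2.4.1, CUDA 11.2 and NVIDIA driver 461.33.)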

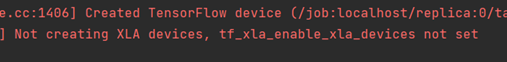

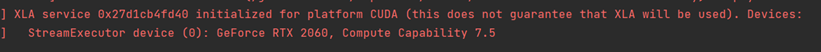
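To compare the installed versions with what TensorFlow expects, you can ask TensorFlow which CUDA and cuDNN versions it was built against. This is a small sketch assuming TensorFlow 2.3 or later, where `tf.sysconfig.get_build_info()` is available; `describe_tf_build` is just a name chosen for this example, and it returns `None` when TensorFlow is not installed.

```python
def describe_tf_build():
    """Report the TensorFlow version and the CUDA/cuDNN versions it was built with."""
    try:
        import tensorflow as tf
    except ImportError:
        return None  # TensorFlow is not installed in this environment

    build = tf.sysconfig.get_build_info()
    return {
        "tensorflow": tf.__version__,
        # CPU-only builds may not carry these keys, hence .get()
        "cuda": build.get("cuda_version"),
        "cudnn": build.get("cudnn_version"),
        "gpus": [device.name for device in tf.config.list_physical_devices("GPU")],
    }


if __name__ == "__main__":
    print(describe_tf_build())
```

If the reported CUDA version differs from the toolkit installed on the machine, or the GPU list is empty, the mismatch is the first thing to fix.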

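Reportedly, as of tensorflow-gpu 2.4.1 the XLA:CPU and XLA:GPU devices are no longer registered by default. If you really need them, you can try adding the following code after checking the versions: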

import os

os.environ['TF_XLA_FLAGS'] = '--tf_xla_enable_xla_devices'

If you then see the following, the error has probably been cleared (I honestly do not know whether it really counts as cleared = =).

4.

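Error: could not create cudnn handle: CUDNN_STATUS_NOT_INITIALIZED. The likely cause is GPU memory being fully occupied.

Fix:

a) With the TensorFlow session API, let GPU memory be allocated on demand (under TF 2.x, use tf.compat.v1.ConfigProto and tf.compat.v1.Session instead):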

import tensorflow as tf

config = tf.ConfigProto()

config.gpu_options.allow_growth = True

with tf.Session(config=config) as sess :

…

b) With Keras (TensorFlow backend), let GPU memory be allocated on demand:

import tensorflow as tf

from keras import backend as K

config = tf.ConfigProto()

config.gpu_options.allow_growth=True

sess = tf.Session(config=config)

K.set_session(sess)

c) With TensorFlow 2.0, let GPU memory be allocated on demand (there is no session):

import tensorflow as tf

gpus = tf.config.experimental.list_physical_devices('GPU')

for gpu in gpus:

    tf.config.experimental.set_memory_growth(gpu, True)

Next come the problems I ran into while running the code in the order given in the README.

1.

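Before running gen_12net_data.py: in some cases the script does not create the …/…/DATA/12 folder automatically, so you have to create DATA/12 yourself under the …/…/ directory relative to prepare_data (or change the path directly in the code). This comes down to absolute versus relative paths.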
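Creating the folder from Python avoids having to guess where a relative path resolves. A minimal sketch: `ensure_dir` is a hypothetical helper, not part of the repository, and the path you pass in is whatever save directory your copy of the script uses.

```python
import os


def ensure_dir(path):
    """Create `path` (including parent folders) if needed and return its absolute form."""
    os.makedirs(path, exist_ok=True)  # no error if the folder already exists
    return os.path.abspath(path)


if __name__ == "__main__":
    # Relative paths resolve against the current working directory, not against
    # the script's own location, so print both to see where output will land.
    print("working directory:", os.getcwd())
    print("output folder:", ensure_dir(os.path.join("DATA", "12")))
```

Printing the absolute path makes it obvious when the script was launched from a different directory than expected.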

2.

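Running gen_12net_data.py fails with 'NoneType' object has no attribute 'shape' (tracking this one down gave me a headache = =).

Posts online blame non-ASCII (Chinese) file names or a wrong path, but that is not the whole story. There are two cases:

(1) The error appears immediately after starting gen_12net_data.py. Either WIDER_train was not put in the location described above, or the path in the code is wrong. Check both carefully.

(2) The error appears partway through, after the script has been running for a while. Some files are probably corrupted or missing. If repeated attempts do not help, download the dataset again.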
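Both cases can be caught before the long run starts. `cv2.imread` returns `None` instead of raising when it cannot read a file, which is what later surfaces as the 'NoneType' error, assuming the script loads images that way. The sketch below only checks that each file exists and is not empty; `find_unreadable_images` is a hypothetical helper, and building the list of image paths from your annotation file is left to you.

```python
import os


def find_unreadable_images(image_paths):
    """Return the paths that are missing or zero bytes long."""
    bad = []
    for path in image_paths:
        if not os.path.isfile(path) or os.path.getsize(path) == 0:
            bad.append(path)
    return bad


if __name__ == "__main__":
    # Placeholder file name; replace with the paths listed in your annotation file.
    candidates = ["example_image.jpg"]
    for path in find_unreadable_images(candidates):
        print("missing or empty:", path)
```

If every path is reported, the base directory is wrong (case 1). If only a few are, those files are damaged or incomplete (case 2).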

3.

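Running gen_landmark_aug_12.py fails with: AssertionError.

Fix: change data_path in the main function to the directory where the LFW files were extracted (or try the candidate directories one by one).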

4.

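Running gen_PNet_tfrecords.py fails with: AttributeError: 'NoneType' object has no attribute 'tostring'.

Check whether some of the code still needs to be changed to tf.compat.v1. If the error persists, the output of the first step (gen_12net_data.py) is probably incomplete or broken. Rerun the earlier README steps in order. (Headache x2 XP)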

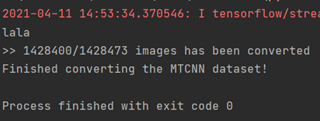

The result after the fix, once the run completes (it takes a while, so be patient).

5.

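Running train_PNet.py / read_tfrecord_v2.py fails with: RuntimeError: Input pipelines based on Queues are not supported when eager execution is enabled. Please use tf.data to ingest data into your model instead.

You can refer to the article: "Methods and ideas for migrating tf1.x contrib code to tf2.x".

If that does not help: from what I read, TF 2.x enables eager execution by default, and the queue-based input pipelines used by this code only work in graph mode. Whatever the benefits of eager execution, the goal here is to get the code running, so we turn it off and let TensorFlow 2.x fall back to 1.x behavior. Add one of the following two lines at the top of the code: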

tf.compat.v1.disable_v2_behavior()

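tf.compat.v1.disable_eager_execution()

After this change, also check that the dataset_dir path and the name of the .tfrecord_shuffle file in the code are correct.

Alternatively, do it directly in the import at the top of the file: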

import tensorflow.compat.v1 as tf

tf.disable_v2_behavior()

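I have not tried this variant, so I would rather not open another can of worms.

After that, read_tfrecord_v2.py produces output.

However, running train_PNet.py afterwards fails again. The warnings come from the disable call (the queue-based input functions are deprecated); as for the get_shape error, my guess is a path problem (?). This is where I am stuck for now, and I would be grateful for pointers from anyone who got it working.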

2021-04-13 13:43:21.220435: I tensorflow/stream_executor/platform/default/dso_loader.cc:49] Successfully opened dynamic library cudart64_110.dll

['E:\\study2\\MTCNN-Tensorflow\\train_models', 'E:\\study2\\MTCNN-Tensorflow', 'D:\\toolsware\\anaconda\\envs\\tensorflow\\python37.zip', 'D:\\toolsware\\anaconda\\envs\\tensorflow\\DLLs', 'D:\\toolsware\\anaconda\\envs\\tensorflow\\lib', 'D:\\toolsware\\anaconda\\envs\\tensorflow', 'D:\\toolsware\\anaconda\\envs\\tensorflow\\lib\\site-packages', '../prepare_data']

2021-04-13 13:43:27.077710: I tensorflow/stream_executor/platform/default/dso_loader.cc:49] Successfully opened dynamic library nvcuda.dll

2021-04-13 13:43:27.168672: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1720] Found device 0 with properties:

pciBusID: 0000:01:00.0 name: GeForce RTX 2060 computeCapability: 7.5

coreClock: 1.2GHz coreCount: 30 deviceMemorySize: 6.00GiB deviceMemoryBandwidth: 245.91GiB/s

2021-04-13 13:43:27.168851: I tensorflow/stream_executor/platform/default/dso_loader.cc:49] Successfully opened dynamic library cudart64_110.dll

2021-04-13 13:43:27.221668: I tensorflow/stream_executor/platform/default/dso_loader.cc:49] Successfully opened dynamic library cublas64_11.dll

2021-04-13 13:43:27.221762: I tensorflow/stream_executor/platform/default/dso_loader.cc:49] Successfully opened dynamic library cublasLt64_11.dll

2021-04-13 13:43:27.245849: I tensorflow/stream_executor/platform/default/dso_loader.cc:49] Successfully opened dynamic library cufft64_10.dll

2021-04-13 13:43:27.251559: I tensorflow/stream_executor/platform/default/dso_loader.cc:49] Successfully opened dynamic library curand64_10.dll

2021-04-13 13:43:27.279790: I tensorflow/stream_executor/platform/default/dso_loader.cc:49] Successfully opened dynamic library cusolver64_10.dll

2021-04-13 13:43:27.304099: I tensorflow/stream_executor/platform/default/dso_loader.cc:49] Successfully opened dynamic library cusparse64_11.dll

2021-04-13 13:43:27.307262: I tensorflow/stream_executor/platform/default/dso_loader.cc:49] Successfully opened dynamic library cudnn64_8.dll

2021-04-13 13:43:27.307604: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1862] Adding visible gpu devices: 0

E:/study2/MTCNN-Tensorflow/prepare_data/DATA/imglists/PNet\train_PNet_landmark.txt

WARNING:tensorflow:From E:\study2\MTCNN-Tensorflow\prepare_data\read_tfrecord_v2.py:19: string_input_producer (from tensorflow.python.training.input) is deprecated and will be removed in a future version.

Instructions for updating:

Queue-based input pipelines have been replaced by `tf.data`. Use `tf.data.Dataset.from_tensor_slices(string_tensor).shuffle(tf.shape(input_tensor, out_type=tf.int64)[0]).repeat(num_epochs)`. If `shuffle=False`, omit the `.shuffle(...)`.

Total size of the dataset is: 1428473

E:/study2/MTCNN-Tensorflow/data/MTCNN_model/PNet_landmark/PNet

dataset dir is: E:/study2/MTCNN-Tensorflow/prepare_data/DATA/imglists/PNet\train_PNet_landmark.tfrecord_shuffle

WARNING:tensorflow:From D:\toolsware\anaconda\envs\tensorflow\lib\site-packages\tensorflow\python\training\input.py:277: input_producer (from tensorflow.python.training.input) is deprecated and will be removed in a future version.

Instructions for updating:

Queue-based input pipelines have been replaced by `tf.data`. Use `tf.data.Dataset.from_tensor_slices(input_tensor).shuffle(tf.shape(input_tensor, out_type=tf.int64)[0]).repeat(num_epochs)`. If `shuffle=False`, omit the `.shuffle(...)`.

WARNING:tensorflow:From D:\toolsware\anaconda\envs\tensorflow\lib\site-packages\tensorflow\python\training\input.py:189: limit_epochs (from tensorflow.python.training.input) is deprecated and will be removed in a future version.

Instructions for updating:

Queue-based input pipelines have been replaced by `tf.data`. Use `tf.data.Dataset.from_tensors(tensor).repeat(num_epochs)`.

WARNING:tensorflow:From D:\toolsware\anaconda\envs\tensorflow\lib\site-packages\tensorflow\python\training\input.py:198: QueueRunner.__init__ (from tensorflow.python.training.queue_runner_impl) is deprecated and will be removed in a future version.

Instructions for updating:

To construct input pipelines, use the `tf.data` module.

WARNING:tensorflow:From D:\toolsware\anaconda\envs\tensorflow\lib\site-packages\tensorflow\python\training\input.py:198: add_queue_runner (from tensorflow.python.training.queue_runner_impl) is deprecated and will be removed in a future version.

Instructions for updating:

To construct input pipelines, use the `tf.data` module.

WARNING:tensorflow:From E:\study2\MTCNN-Tensorflow\prepare_data\read_tfrecord_v2.py:26: TFRecordReader.__init__ (from tensorflow.python.ops.io_ops) is deprecated and will be removed in a future version.

Instructions for updating:

Queue-based input pipelines have been replaced by `tf.data`. Use `tf.data.TFRecordDataset`.

WARNING:tensorflow:From E:\study2\MTCNN-Tensorflow\prepare_data\read_tfrecord_v2.py:57: batch (from tensorflow.python.training.input) is deprecated and will be removed in a future version.

Instructions for updating:

Queue-based input pipelines have been replaced by `tf.data`. Use `tf.data.Dataset.batch(batch_size)` (or `padded_batch(...)` if `dynamic_pad=True`).

Traceback (most recent call last):

File "E:/study2/MTCNN-Tensorflow/train_models/train_PNet.py", line 46, in <module>

train_PNet(base_dir, prefix, end_epoch, display, lr)

File "E:/study2/MTCNN-Tensorflow/train_models/train_PNet.py", line 31, in train_PNet

train(net_factory,prefix, end_epoch, base_dir, display=display, base_lr=lr)

File "E:\study2\MTCNN-Tensorflow\train_models\train.py", line 182, in train

cls_loss_op,bbox_loss_op,landmark_loss_op,L2_loss_op,accuracy_op = net_factory(input_image, label, bbox_target,landmark_target,training=True)

File "E:\study2\MTCNN-Tensorflow\train_models\mtcnn_model.py", line 187, in P_Net

print(inputs.get_shape())

AttributeError: 'NoneType' object has no attribute 'get_shape'

Process finished with exit code 1