# 逻辑回归

## 逻辑回归处理二元分类

%matplotlib inlineimportmatplotlib.pyplot as plt#显示中文

from matplotlib.font_manager importFontProperties

font=FontProperties(fname=r"c:\windows\fonts\msyh.ttc", size=10)

importnumpy as np

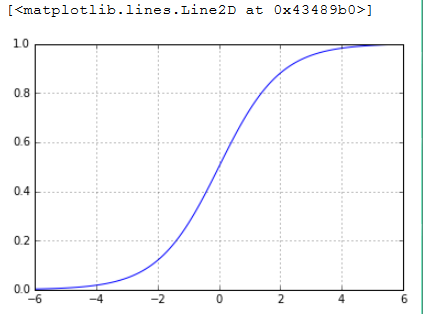

plt.figure()

plt.axis([-6,6,0,1])

plt.grid(True)

X=np.arange(-6,6,0.1)

y=1/(1+np.e**(-X))

plt.plot(X,y,'b-')

## 垃圾邮件分类

importpandas as pd

df=pd.read_csv('SMSSpamCollection',delimiter='\t',header=None)

df.head()

from sklearn.feature_extraction.text importTfidfVectorizerfrom sklearn.linear_model.logistic importLogisticRegressionfrom sklearn.cross_validation importtrain_test_split#用pandas加载数据.csv文件,然后用train_test_split分成训练集(75%)和测试集(25%):

X_train_raw, X_test_raw, y_train, y_test = train_test_split(df[1],df[0])#我们建一个TfidfVectorizer实例来计算TF-IDF权重:

vectorizer=TfidfVectorizer()

X_train=vectorizer.fit_transform(X_train_raw)

X_test=vectorizer.transform(X_test_raw)#LogisticRegression同样实现了fit()和predict()方法

classifier=LogisticRegression()

classifier.fit(X_train,y_train)

predictions=classifier.predict(X_test)for i ,prediction in enumerate(predictions[-5:]):print '预测类型:%s.信息:%s' %(prediction,X_test_raw.iloc[i])

输出结果:

预测类型:ham.信息:Waiting in e car 4 my mum lor. U leh? Reach home already?

预测类型:ham.信息:Dear got train and seat mine lower seat

预测类型:spam.信息:I just really need shit before tomorrow and I know you won't be awake before like 6

预测类型:ham.信息:What should i eat fo lunch senor

预测类型:ham.信息:645

## 二元分类效果评估方法

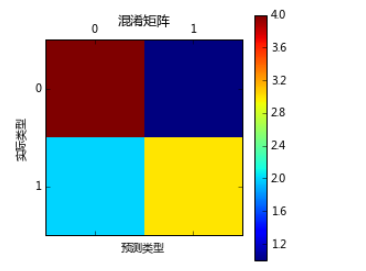

#混淆矩阵

from sklearn.metrics importconfusion_matriximportmatplotlib.pyplot as plt

y_test= [0, 0, 0, 0, 0, 1, 1, 1, 1, 1]

y_pred= [0, 1, 0, 0, 0, 0, 0, 1, 1, 1]

confusion_matrix=confusion_matrix(y_test,y_pred)print confusion_matrix

plt.matshow(confusion_matrix)

plt.title(u'混淆矩阵')

plt.colorbar()

plt.ylabel(u'实际类型')

plt.xlabel(u'预测类型')

plt.show()

## 准确率

importpandas as pdimportnumpy as npfrom sklearn.feature_extraction.text importTfidfVectorizerfrom sklearn.linear_model.logistic importLogisticRegressionfrom sklearn.cross_validation importtrain_test_split,cross_val_score

df=pd.read_csv('SMSSpamCollection',delimiter='\t',names=["label","message"])

X_train_raw, X_test_raw, y_train, y_test= train_test_split(df['message'], df['label'])

vectorizer=TfidfVectorizer()

X_train=vectorizer.fit_transform(X_train_raw)

X_test=vectorizer.transform(X_test_raw)

classifier=LogisticRegression()

classifier.fit(X_train,y_train)

scores=cross_val_score(classifier,X_train,y_train,cv=5)print '准确率',np.mean(scores),scores

输出结果:

准确率 0.954292731612 [ 0.96057348 0.96052632 0.94617225 0.95808383 0.94610778]

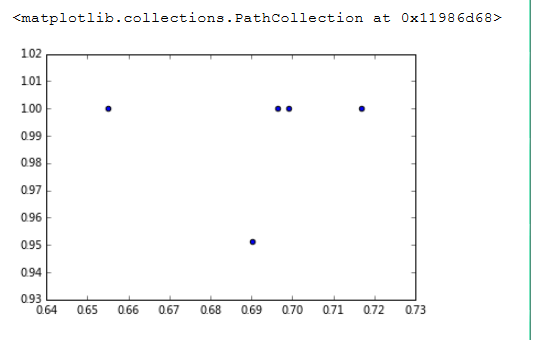

## 精确率和召回率

scikit-learn结合真实类型数据,提供了一个函数来计算一组预测值的精确率和召回率。

%matplotlib inlineimportnumpy as npimportpandas as pdfrom sklearn.feature_extraction.text importTfidfVectorizerfrom sklearn.linear_model.logistic importLogisticRegressionfrom sklearn.cross_validation importtrain_test_split, cross_val_score

df['label']=pd.factorize(df['label'])[0]

X_train_raw, X_test_raw, y_train, y_test= train_test_split(df['message'],df['label'])

vectorizer=TfidfVectorizer()

X_train=vectorizer.fit_transform(X_train_raw)

X_test=vectorizer.transform(X_test_raw)

classifier=LogisticRegression()

classifier.fit(X_train, y_train)

precisions= cross_val_score(classifier, X_train, y_train, cv=5, scoring=

'precision')print u'精确率:', np.mean(precisions), precisions

recalls= cross_val_score(classifier, X_train, y_train, cv=5, scoring='recall')print u'召回率:', np.mean(recalls), recalls

plt.scatter(recalls, precisions)

输出结果:

精确率: 0.990243902439 [ 1. 0.95121951 1. 1. 1. ]

召回率: 0.691498103666 [ 0.65486726 0.69026549 0.69911504 0.71681416 0.69642857]

## 计算综合评价指标

fls=cross_val_score(classifier,X_train,y_train,cv=5,scoring='f1')print '综合指标评价',np.mean(fls),fls

输出结果:

综合指标评价 0.791683999687 [ 0.76243094 0.79781421 0.8 0.77094972 0.82722513]

## ROC AUC

importnumpy as npimportpandas as pdimportmatplotlib.pyplot as pltfrom sklearn.feature_extraction.text importTfidfVectorizerfrom sklearn.linear_model.logistic importLogisticRegressionfrom sklearn.cross_validation importtrain_test_split,cross_val_scorefrom sklearn.metrics importroc_curve,auc

df['label']=pd.factorize(df['label'])[0]

X_train_raw, X_test_raw, y_train, y_test= train_test_split(df['message'],df['label'])

vectorizer=TfidfVectorizer()

X_train=vectorizer.fit_transform(X_train_raw)

X_test=vectorizer.transform(X_test_raw)

classifier=LogisticRegression()

classifier.fit(X_train, y_train)

predictions=classifier.predict_proba(X_test)#每一类的概率

false_positive_rate, recall, thresholds =roc_curve(y_test, predictions[:

,1])

roc_auc=auc(false_positive_rate,recall)

plt.title('Receiver Operating Characteristic')

plt.plot(false_positive_rate, recall,'b', label='AUC = %0.2f' %roc_auc)

plt.legend(loc='lower right')

plt.plot([0,1],[0,1],'r--')

plt.xlim([0.0,1.0])

plt.ylim([0.0,1.0])

plt.ylabel('Recall')

plt.xlabel('Fall-out')

plt.show()

## 网格搜索

importpandas as pdfrom sklearn.feature_extraction.text importTfidfVectorizerfrom sklearn.linear_model.logistic importLogisticRegressionfrom sklearn.grid_search importGridSearchCVfrom sklearn.pipeline importPipelinefrom sklearn.cross_validation importtrain_test_splitfrom sklearn.metrics importprecision_score, recall_score, accuracy_score

pipeline=Pipeline([

('vect', TfidfVectorizer(stop_words='english')),

('clf', LogisticRegression())

])

parameters={'vect__max_df': (0.25, 0.5, 0.75),'vect__stop_words': ('english', None),'vect__max_features': (2500, 5000, 10000, None),'vect__ngram_range': ((1, 1), (1, 2)),'vect__use_idf': (True, False),'vect__norm': ('l1', 'l2'),'clf__penalty': ('l1', 'l2'),'clf__C': (0.01, 0.1, 1, 10),

}

grid_search= GridSearchCV(pipeline, parameters, n_jobs=-1, verbose=1, scoring='accuracy', cv=3)

df=pd.read_csv('SMSSpamCollection',delimiter='\t',names=["label","message"])

df['label']=pd.factorize(df['label'])[0]

X_train, X_test, y_train, y_test= train_test_split(df['message'],df['label'])

grid_search.fit(X_train, y_train)print('最佳效果:%0.3f' % grid_search.best_score_)

输出结果;

最佳效果:0.986

print '最优参数组合'best_parameters=grid_search.best_estimator_.get_params()

for param_name insorted(parameters.keys()):print '\t%s:%r' %(param_name,best_parameters[param_name])

predictions=grid_search.predict(X_test)print '准确率:',accuracy_score(y_test,predictions)print '精确率:',precision_score(y_test,predictions)print '召回率:',recall_score(y_test,predictions)

输出结果:

clf__C:10

clf__penalty:'l2'

vect__max_df:0.25

vect__max_features:2500

vect__ngram_range:(1, 2)

vect__norm:'l2'

vect__stop_words:None

vect__use_idf:True

准确率: 0.979899497487

精确率: 0.974683544304

召回率: 0.865168539326

# logistics 多分类

importpandas as pd

df=pd.read_csv("logistic_data/train.tsv",header=0,delimiter='\t')printdf.count()printdf.head()

df.Phrase.head(10)

df.Sentiment.describe()

df.Sentiment.value_counts()

df.Sentiment.value_counts()/df.Sentiment.count()

importpandas as pdfrom sklearn.feature_extraction.text importTfidfVectorizerfrom sklearn.linear_model.logistic importLogisticRegressionfrom sklearn.cross_validation importtrain_test_splitfrom sklearn.metrics importclassification_report,accuracy_score,confusion_matrixfrom sklearn.pipeline importPipelinefrom sklearn.grid_search importGridSearchCV

pipeline=Pipeline([

('vect',TfidfVectorizer(stop_words='english')),

('clf',LogisticRegression())])

parameters={'vect__max_df':(0.25,0.5),'vect__ngram_range':((1,1),(1,2)),'vect__use_idf':(True,False),'clf__C':(0.1,1,10),

}

df=pd.read_csv("logistic_data/train.tsv",header=0,delimiter='\t')

X,y=df.Phrase,df.Sentiment.as_matrix()

X_train,X_test,y_train,y_test=train_test_split(X,y,train_size=0.5)

grid_search=GridSearchCV(pipeline,parameters,n_jobs=-1,verbose=1,scoring="accuracy")

grid_search.fit(X_train,y_train)print u'最佳效果:%0.3f'%grid_search.best_score_print u'最优参数组合:'best_parameters=grid_search.best_estimator_.get_params()for param_name insorted(parameters.keys()):print '\t%s:%r'%(param_name,best_parameters[param_name])

数据结果:

Fitting 3 folds for each of 24 candidates, totalling 72 fits

[Parallel(n_jobs=-1)]: Done 46 tasks | elapsed: 2.0min [Parallel(n_jobs=-1)]: Done 72 out of 72 | elapsed: 4.5min finished

最佳效果:0.619 最优参数组合: clf__C:10 vect__max_df:0.25 vect__ngram_range:(1, 2) vect__use_idf:False

## 多类分类效果评估

predictions=grid_search.predict(X_test)print u'准确率',accuracy_score(y_test,predictions)print u'混淆矩阵',confusion_matrix(y_test,predictions)print u'分类报告',classification_report(y_test,predictions)

数据结果:

准确率 0.636614122773

混淆矩阵 [[ 1133 1712 595 67 1]

[ 919 6136 6006 553 35]

[ 213 3212 32637 3634 138]

[ 22 420 6548 8155 1274]

[ 4 45 546 2411 1614]]

分类报告 precision recall f1-score support

0 0.49 0.32 0.39 3508

1 0.53 0.45 0.49 13649

2 0.70 0.82 0.76 39834

3 0.55 0.50 0.52 16419

4 0.53 0.35 0.42 4620

avg / total 0.62 0.64 0.62 78030