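Proposed in 2013.

ZFNet won ImageNet ILSVRC 2013. Its authors are Matthew Zeiler and Rob Fergus, hence the name ZFNet (short for Zeiler & Fergus Net).

ZFNet: where visualization began (Visualizing and Understanding Convolutional Networks)

ZFNet makes a few detail-level changes to AlexNet. The changes are small and the architecture itself is not a major breakthrough. What it mainly introduces is visualization: it uses deconvolution and unpooling to visualize every layer (max pooling cannot be inverted exactly, so the inverse is only an approximation), and it is trained on a single GPU. The paper's biggest contribution is using visualization to reveal what each layer of the network is actually doing and what role it plays.

Previously, the only way to judge a convolutional network was to keep training it. We had no way of knowing what happened at each convolution, each pooling step, or each activation, nor why the network worked so well, so finding a better model meant running experiment after experiment. The core of ZFNet is really one thing: using visualization to show what each layer is doing. Once that is known, there is a much better basis for deciding how to adjust the network and in which direction to optimize it.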

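Main contributions:

(1) A multi-layer deconvolutional network is used to visualize how features evolve during training and to spot potential problems.

(2) Parts of the input image are occluded and the effect on the classification result is measured, to find out which parts of the input matter most for classification.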
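The occlusion experiment in (2) can be sketched in a few lines. This is a minimal illustration, not the paper's code: the function name `occlusion_sensitivity`, the patch size, the stride, the gray fill value, and the tiny `toy_model` are all assumptions chosen so the example runs quickly on random data.

```python
import torch
from torch import nn


def occlusion_sensitivity(model, image, target_class, patch=8, stride=8, fill=0.5):
    """Slide a gray patch over `image` (C, H, W) and record, for each patch
    position, the probability the model still assigns to `target_class`."""
    model.eval()
    _, height, width = image.shape
    rows = (height - patch) // stride + 1
    cols = (width - patch) // stride + 1
    heatmap = torch.zeros(rows, cols)
    with torch.no_grad():
        for i in range(rows):
            for j in range(cols):
                occluded = image.clone()
                top, left = i * stride, j * stride
                # Hide one square region of the input
                occluded[:, top:top + patch, left:left + patch] = fill
                probs = torch.softmax(model(occluded.unsqueeze(0)), dim=1)
                heatmap[i, j] = probs[0, target_class]
    return heatmap


# Hypothetical stand-in classifier, used only so the sketch is runnable
toy_model = nn.Sequential(
    nn.Conv2d(3, 4, kernel_size=3, padding=1),
    nn.ReLU(),
    nn.AdaptiveAvgPool2d(1),
    nn.Flatten(),
    nn.Linear(4, 5),
)
heatmap = occlusion_sensitivity(toy_model, torch.rand(3, 32, 32), target_class=2)
print(heatmap.shape)
```

Positions where the recorded probability drops sharply are the regions the classifier relies on most. With a trained network and a real image, the heatmap would typically be upsampled and overlaid on the input.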


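Pascal VOC 2007

The PASCAL Visual Object Classes Challenge 2007 (VOC2007)

The dataset covers 20 classes and contains 9,963 images in total: 5,011 in the training/validation (trainval) set and 4,952 in the test set.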

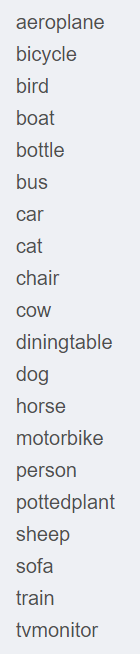

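The 20 classes are: aeroplane, bicycle, bird, boat, bottle, bus, car, cat, chair, cow, diningtable, dog, horse, motorbike, person, pottedplant, sheep, sofa, train, tvmonitor.

Directory structure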

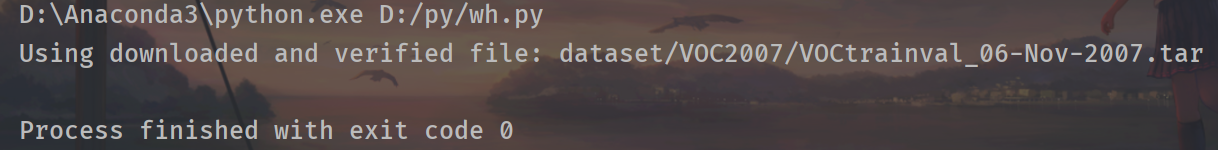

Downloading and displaying the dataset

```python
import numpy as np
import matplotlib.pyplot as plt
from torchvision import datasets

# Download the PASCAL VOC 2007 dataset (not downloaded again if already present)
dataset = datasets.VOCDetection(
    root='dataset/VOC2007/',
    year='2007',
    image_set='trainval',
    download=True,
)
# len(dataset) is 5011

# Take image 0; equivalent to dataset.__getitem__(0)
img, target = dataset[0]
# img is a <class 'PIL.Image.Image'> in RGB order; img.size is (width, height)
# target is a dict parsed from the XML annotation. In recent torchvision
# versions the fields folder, filename, source, owner, size, segmented and
# object sit under target['annotation'].

img = np.array(img)  # shape is now (height, width, 3), still RGB

# plt.imshow expects RGB, so the array can be shown as is.
# OpenCV uses BGR: to display with cv2.imshow, pass img[:, :, ::-1] instead.
plt.imshow(img)
plt.show()
```

After downloading and reading the data, the directory looks like this:

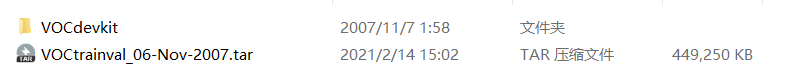

Extracting the full train/val sets and saving the annotated crops by class

```python
"""
Extract the full train/val sets and save the annotated crops by class
"""
import os

import cv2
import numpy as np
import xmltodict


def check_dir(data_dir):
    """Create data_dir if it does not exist yet."""
    if not os.path.exists(data_dir):
        os.mkdir(data_dir)


path = 'dataset/VOC2007/'
root_dir = path + 'train_val/'
train_dir = path + 'train_val/train/'
val_dir = path + 'train_val/val/'

train_txt_path = path + 'VOCdevkit/VOC2007/ImageSets/Main/train.txt'
val_txt_path = path + 'VOCdevkit/VOC2007/ImageSets/Main/val.txt'

annotation_dir = path + 'VOCdevkit/VOC2007/Annotations'
jpeg_image_dir = path + 'VOCdevkit/VOC2007/JPEGImages'

alphabets = ['aeroplane', 'bicycle', 'bird', 'boat', 'bottle', 'bus', 'car',
             'cat', 'chair', 'cow', 'diningtable', 'dog', 'horse', 'motorbike',
             'person', 'pottedplant', 'sheep', 'sofa', 'train', 'tvmonitor']


def find_all_cate_rects(annotation_dir, name_list):
    """Collect the bounding boxes of every class."""
    cate_list = [list() for _ in range(20)]

    for name in name_list:
        annotation_path = os.path.join(annotation_dir, name + ".xml")
        with open(annotation_path, 'rb') as f:
            xml_dict = xmltodict.parse(f)

        # xmltodict yields a dict for a single object and a list for several
        objects = xml_dict['annotation']['object']
        if isinstance(objects, dict):
            objects = [objects]
        if not isinstance(objects, list):
            continue

        for obj in objects:
            obj_idx = alphabets.index(obj['name'])
            # Skip objects flagged as difficult
            if int(obj['difficult']) != 1:
                bndbox = obj['bndbox']
                cate_list[obj_idx].append({
                    'img_name': name,
                    'rect': (int(bndbox['xmin']), int(bndbox['ymin']),
                             int(bndbox['xmax']), int(bndbox['ymax'])),
                })

    return cate_list


def save_cate(cate_list, image_dir, res_dir):
    """Save the cropped images."""
    # Load every image under image_dir up front for later lookup
    # (note: this keeps the whole image set in memory)
    image_dict = dict()
    for name in os.listdir(image_dir):
        image_path = os.path.join(image_dir, name)
        image_dict[name.split('.')[0]] = cv2.imread(image_path)

    # Go through every class and save the annotated crops
    for i in range(20):
        cate_dir = os.path.join(res_dir, alphabets[i])
        check_dir(cate_dir)

        for item in cate_list[i]:
            img_name = item['img_name']
            xmin, ymin, xmax, ymax = item['rect']

            rect_img = image_dict[img_name][ymin:ymax, xmin:xmax]
            img_path = os.path.join(
                cate_dir, '%s-%d-%d-%d-%d.png' % (img_name, xmin, ymin, xmax, ymax))
            cv2.imwrite(img_path, rect_img)


if __name__ == '__main__':
    check_dir(root_dir)
    check_dir(train_dir)
    check_dir(val_dir)

    # np.str was removed from NumPy; the built-in str works as the dtype
    train_name_list = np.loadtxt(train_txt_path, dtype=str)
    print(train_name_list)
    cate_list = find_all_cate_rects(annotation_dir, train_name_list)
    print([len(x) for x in cate_list])
    save_cate(cate_list, jpeg_image_dir, train_dir)

    val_name_list = np.loadtxt(val_txt_path, dtype=str)
    print(val_name_list)
    cate_list = find_all_cate_rects(annotation_dir, val_name_list)
    print([len(x) for x in cate_list])
    save_cate(cate_list, jpeg_image_dir, val_dir)

    print('done')
```

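datasets.ImageFolder

ImageFolder is a generic data loader. It expects the training, validation, or test images to be organized like this:

- root/dog/xxx.png
- root/dog/xxy.png
- root/dog/xxz.png
- root/cat/123.png
- root/cat/nsdf3.png
- root/cat/asd932_.png

ImageFolder assumes that all files are stored in folders, that each folder holds images of a single class, and that the folder name is the class name.

The folders that ImageFolder reads in this post (train_val/train and train_val/val, produced by the script above) follow exactly this layout.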

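Network implementation

The paper states a 224×224 input; in practice 227×227 is used.

The input image size is the same as AlexNet's.

ZFNet's adjustments relative to AlexNet:

- In the first convolutional layer, the kernel size is reduced from 11 to 7 and the stride from 4 to 2 (which roughly doubles the width and height of the feature map).

- To keep the sizes of the later feature maps consistent, the stride of the second convolutional layer is changed from 1 to 2.

- In the third, fourth, and fifth convolutional layers, 512, 1024, and 512 filters are used instead of 384, 384, and 256.

The first 2 changes alone already bring a gain of several points in accuracy.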
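The effect of these stride changes on feature-map size can be checked with the standard output-size formula for convolution and pooling, floor((size + 2·padding − kernel) / stride) + 1. The helper name `conv_out` is made up for this sketch, and the padding values are taken from the PyTorch implementation in this post rather than from the paper.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Spatial output size of a convolution or pooling layer."""
    return (size + 2 * padding - kernel) // stride + 1


# First layer: AlexNet (11x11, stride 4) vs. ZFNet (7x7, stride 2, padding 2)
alexnet_conv1 = conv_out(227, kernel=11, stride=4)
zfnet_conv1 = conv_out(227, kernel=7, stride=2, padding=2)
print(alexnet_conv1, zfnet_conv1)  # 55 113

# Trace the rest of the ZFNet feature extractor
size = zfnet_conv1
size = conv_out(size, kernel=3, stride=2)             # max pool  -> 56
size = conv_out(size, kernel=5, stride=2, padding=2)  # conv2     -> 28
size = conv_out(size, kernel=3, stride=2)             # max pool  -> 13
size = conv_out(size, kernel=3, padding=1)            # conv3-5 keep 13
size = conv_out(size, kernel=3, stride=2)             # max pool  -> 6
print(size)  # 6
```

The first feature map is about twice as wide and tall as AlexNet's (113 vs. 55), and the stride of 2 in the second convolution brings the later layers back to the familiar 13×13 and 6×6 sizes.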

```python
import copy
import os
import time

import matplotlib.pyplot as plt
import torch
import torch.nn as nn
import torch.optim as optim
import torchvision
import torchvision.transforms as transforms
from torch.utils.data import DataLoader
from torchvision import datasets


class ZFNet(nn.Module):
    # Channel widths (64, 192, 384, 256, 256) follow torchvision's AlexNet;
    # only conv1 (7x7, stride 2) and conv2 (stride 2) are changed.
    def __init__(self, num_classes=1000):
        super().__init__()
        self.features = nn.Sequential(
            nn.Conv2d(3, 64, kernel_size=7, stride=2, padding=2),
            nn.ReLU(inplace=True),
            nn.MaxPool2d(kernel_size=3, stride=2),
            nn.Conv2d(64, 192, kernel_size=5, stride=2, padding=2),
            nn.ReLU(inplace=True),
            nn.MaxPool2d(kernel_size=3, stride=2),
            nn.Conv2d(192, 384, kernel_size=3, padding=1),
            nn.ReLU(inplace=True),
            nn.Conv2d(384, 256, kernel_size=3, padding=1),
            nn.ReLU(inplace=True),
            nn.Conv2d(256, 256, kernel_size=3, padding=1),
            nn.ReLU(inplace=True),
            nn.MaxPool2d(kernel_size=3, stride=2),
        )
        self.avgpool = nn.AdaptiveAvgPool2d((6, 6))
        self.classifier = nn.Sequential(
            nn.Dropout(),
            nn.Linear(256 * 6 * 6, 4096),
            nn.ReLU(inplace=True),
            nn.Dropout(),
            nn.Linear(4096, 4096),
            nn.ReLU(inplace=True),
            nn.Linear(4096, num_classes),
        )

    def forward(self, x):
        x = self.features(x)
        x = self.avgpool(x)
        x = torch.flatten(x, 1)
        x = self.classifier(x)
        return x


data_root_dir = 'dataset/VOC2007/train_val'
model_dir = 'checkpoints/'


def load_data(root_dir):
    data_loaders = {}
    dataset_sizes = {}
    for phase in ['train', 'val']:
        steps = [transforms.Resize((227, 227))]
        if phase == 'train':
            # Random flipping is augmentation, so apply it to training data only
            steps.append(transforms.RandomHorizontalFlip())
        steps += [
            transforms.ToTensor(),
            transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5)),
        ]

        phase_dir = os.path.join(root_dir, phase)
        data_set = datasets.ImageFolder(
            root=phase_dir,
            transform=transforms.Compose(steps),
        )
        data_loaders[phase] = DataLoader(
            dataset=data_set,
            batch_size=64,
            shuffle=True,
            num_workers=8,
        )
        dataset_sizes[phase] = len(data_set)

    return data_loaders, dataset_sizes


def train_model(model, criterion, optimizer, scheduler, dataset_sizes,
                dataloaders, num_epochs=25, device=None):
    since = time.time()

    best_model_wts = copy.deepcopy(model.state_dict())
    best_acc = 0.0

    loss_dict = {'train': [], 'val': []}
    acc_dict = {'train': [], 'val': []}
    for epoch in range(num_epochs):
        print('Epoch {}/{}'.format(epoch, num_epochs - 1))
        print('-' * 10)

        # Each epoch has a training and a validation phase
        for phase in ['train', 'val']:
            if phase == 'train':
                model.train()  # Set model to training mode
            else:
                model.eval()   # Set model to evaluate mode

            running_loss = 0.0
            running_corrects = 0

            # Iterate over data
            for inputs, labels in dataloaders[phase]:
                inputs = inputs.to(device)
                labels = labels.to(device)

                # Zero the parameter gradients
                optimizer.zero_grad()

                # Forward pass; track history only in the training phase
                with torch.set_grad_enabled(phase == 'train'):
                    outputs = model(inputs)
                    _, preds = torch.max(outputs, 1)
                    loss = criterion(outputs, labels)

                    # Backward pass and optimization only in the training phase
                    if phase == 'train':
                        loss.backward()
                        optimizer.step()

                # Statistics
                running_loss += loss.item() * inputs.size(0)
                running_corrects += torch.sum(preds == labels).item()
            if phase == 'train':
                scheduler.step()

            epoch_loss = running_loss / dataset_sizes[phase]
            # Plain Python floats, so the history can be plotted directly
            epoch_acc = running_corrects / dataset_sizes[phase]
            loss_dict[phase].append(epoch_loss)
            acc_dict[phase].append(epoch_acc)

            print('{} Loss: {:.4f} Acc: {:.4f}'.format(
                phase, epoch_loss, epoch_acc))

            # Deep copy the best model so far
            if phase == 'val' and epoch_acc > best_acc:
                best_acc = epoch_acc
                best_model_wts = copy.deepcopy(model.state_dict())

        print()

    time_elapsed = time.time() - since
    print('Training complete in {:.0f}m {:.0f}s'.format(
        time_elapsed // 60, time_elapsed % 60))
    print('Best val Acc: {:.4f}'.format(best_acc))

    # Load the best model weights
    model.load_state_dict(best_model_wts)
    return model, loss_dict, acc_dict


def save_png(title, res_dict):
    plt.figure()
    plt.title(title)
    for name, res in res_dict.items():
        for k, v in res.items():
            plt.plot(v, label='%s-%s' % (name, k))

    plt.legend()
    plt.savefig('%s.png' % title)


if __name__ == '__main__':
    data_loaders, data_sizes = load_data(data_root_dir)
    print(data_sizes)

    res_loss = dict()
    res_acc = dict()
    # for name in ['alexnet', 'zfnet']:
    for name in ['zfnet']:
        if name == 'alexnet':
            model = torchvision.models.AlexNet(num_classes=20)
        else:
            model = ZFNet(num_classes=20)
        device = torch.device('cuda:0' if torch.cuda.is_available() else 'cpu')
        model = model.to(device)

        criterion = nn.CrossEntropyLoss()
        # optimizer = optim.SGD(model.parameters(), lr=1e-3, momentum=0.9)
        optimizer = optim.Adam(model.parameters(), lr=1e-3)
        lr_scheduler = optim.lr_scheduler.StepLR(optimizer, step_size=15, gamma=0.1)

        best_model, loss_dict, acc_dict = train_model(
            model, criterion, optimizer, lr_scheduler, data_sizes, data_loaders,
            num_epochs=5, device=device)

        # Save the best model parameters
        os.makedirs(model_dir, exist_ok=True)
        torch.save(best_model.state_dict(), os.path.join(model_dir, '%s.pth' % name))

        res_loss[name] = loss_dict
        res_acc[name] = acc_dict

        print('train %s done' % name)
        print()

    save_png('loss', res_loss)
    save_png('acc', res_acc)
```

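Visualizing the convolutional layers

Why do just 2 changes give ZFNet a gain of several points over AlexNet?

By visualizing AlexNet's features, the authors found aliasing in the second layer. In digital signal processing, aliasing is what happens when the sampling rate is too low and different signals become indistinguishable. The authors attributed it to the overly large stride of the first convolutional layer. The fix is to raise the sampling rate, so the stride is reduced from 4 to 2, and the kernel size is reduced along with it (with a smaller stride, the kernel no longer needs to cover such a large area). Comparing the features before and after the change, the first layer shows more features and more discriminative ones, and the second-layer features are cleaner, with no aliasing.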
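The signal-processing notion of aliasing can be reproduced in one dimension. The sketch below is only an analogy for the effect of a large stride, not an experiment from the paper; the two frequencies are chosen by hand so that the samples coincide at stride 4.

```python
import numpy as np

n = np.arange(64)
low = np.cos(2 * np.pi * 0.05 * n)   # slow signal: 0.05 cycles per sample
high = np.cos(2 * np.pi * 0.20 * n)  # faster signal: 0.20 cycles per sample

# Stride 4: both signals yield the same samples, so they cannot be told apart
same_at_stride_4 = np.allclose(low[::4], high[::4])

# Stride 2: the sampling rate is high enough to keep them distinct
same_at_stride_2 = np.allclose(low[::2], high[::2])

print(same_at_stride_4, same_at_stride_2)  # True False
```

At stride 4 the faster signal is sampled below its Nyquist rate and folds onto the slower one. Halving the stride removes the ambiguity, which is the intuition behind reducing the first-layer stride from 4 to 2.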

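The visualization procedure

As discussed, a convolutional network maps the raw pixel space into feature space layer by layer. Each value on a deep feature map expresses how closely the input matches some pattern, but because the value lives in feature space, the pattern is hard to inspect by eye. To make it understandable it has to be mapped back to pixel space: "from the masses, to the masses". The paper "Visualizing and Understanding Convolutional Networks" is mainly about how to do the "to the masses" part.

Visualization is applied to a trained network, or to snapshots taken during training. It does not change the network's weights. It is only used to analyze which features the network sees for a given input image and how the features change over the course of training.

Given one input image, first run a forward pass to obtain the feature maps of every layer. To visualize what layer i has learned, keep the maximum value of a feature map in that layer, set all other positions and all other feature maps to 0, and map the result back to the pixel space of the original input. In an ordinary convolutional network the forward pass repeatedly goes through input image → conv → rectification → pooling → ...; visualization starts from the feature map of some layer and goes backwards through unpooling → rectification → deconv → ... → input space. In the figure below, the top corresponds to deeper layers and the bottom to shallower ones; the forward pass runs bottom-up on the right, and feature visualization runs top-down on the left.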
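The "keep the maximum, zero everything else" step can be written as a small helper. This is a sketch under assumptions: the function name `keep_strongest_activation` and the random stand-in feature maps are made up here, and the caller chooses which channel to inspect.

```python
import torch


def keep_strongest_activation(feature_maps, channel):
    """Given feature maps of shape (C, H, W), return a tensor of the same
    shape that is zero everywhere except at the strongest activation of
    the chosen channel."""
    selected = torch.zeros_like(feature_maps)
    fmap = feature_maps[channel]
    flat_index = torch.argmax(fmap).item()
    row, col = divmod(flat_index, fmap.shape[1])
    selected[channel, row, col] = fmap[row, col]
    return selected


# Random positive values stand in for the activations of one layer
feature_maps = torch.rand(8, 13, 13) + 0.1
selected = keep_strongest_activation(feature_maps, channel=3)
print(int((selected != 0).sum()))  # a single non-zero entry remains
```

The resulting tensor is what gets passed down through the unpooling, rectification, and deconvolution steps, so the reconstruction shows the input pattern responsible for that one activation.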

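The operations at each layer during visualization are:

- Unpooling: during the forward pass, record where each maximum of the corresponding max pooling layer came from. During unpooling, the values of the map coming from the layer above are placed directly at those positions. As the figure above shows, the Max Locations "Switches" form a binary map of the same size as the pooling layer's input, marking the position of each local maximum.

- Rectification: because the activation is ReLU, the forward pass leaves positive values unchanged and sets negative values to 0. The "reverse activation" is no different from the activation itself: the map coming from the layer above is simply passed through ReLU.

- Deconvolution: transposed convolution is probably the better name. The output map of a convolution is generally no larger than its input map, and a transposed convolution can restore the size to that of the input, which amounts to upsampling. It shares the kernels of the forward convolution but flips them left-right and top-bottom (a point reflection through the center), then convolves them with the feature map coming from the layer above and passes the result further down. For a more detailed treatment of deconvolution, see the blog post 《一文搞懂 deconvolution、transposed convolution、sub-pixel or fractional convolution》.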
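The three operations above can be chained for a single conv → ReLU → pool stage using standard PyTorch modules. This is a minimal sketch on random data with an untrained layer, not the paper's deconvnet code; the layer sizes are arbitrary. `F.conv_transpose2d` applied with the forward layer's own weights is used here as the transposed convolution, which corresponds to convolving with the flipped kernels.

```python
import torch
import torch.nn.functional as F
from torch import nn

torch.manual_seed(0)
conv = nn.Conv2d(3, 8, kernel_size=3, padding=1, bias=False)
pool = nn.MaxPool2d(kernel_size=2, stride=2, return_indices=True)
unpool = nn.MaxUnpool2d(kernel_size=2, stride=2)

image = torch.rand(1, 3, 16, 16)
with torch.no_grad():
    # Forward pass: conv -> rectification -> pooling, remembering the switches
    rectified = F.relu(conv(image))
    pooled, switches = pool(rectified)

    # Backward mapping: unpooling -> rectification -> transposed convolution
    unpooled = unpool(pooled, switches, output_size=rectified.shape)
    re_rectified = F.relu(unpooled)
    reconstruction = F.conv_transpose2d(re_rectified, conv.weight, padding=1)

print(pooled.shape)          # torch.Size([1, 8, 8, 8])
print(unpooled.shape)        # torch.Size([1, 8, 16, 16])
print(reconstruction.shape)  # torch.Size([1, 3, 16, 16])
```

`return_indices=True` is what records the switches. `MaxUnpool2d` puts each pooled value back at its recorded position and leaves every other position at zero, which is why unpooling only approximates the inverse of pooling.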

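Repeating these steps maps the features back to the pixel space of the input, where they appear in a form the human eye can interpret. Given different input images, we can then look at the most salient feature each layer responds to, as shown in the figure below: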