1. GC overhead limit exceeded

1.1 Cause of GC overhead limit exceeded

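This error occurs when the JVM spends more than 98% of its time in garbage collection yet recovers less than 2% of the heap, and keeps collecting this way repeatedly (at least 5 consecutive collections). The JVM then throws java.lang.OutOfMemoryError: GC overhead limit exceeded.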

For details, see https://www.cnblogs.com/airnew/p/11756450.html
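The rule described above can be sketched as a small predicate. This is only an illustration of the 98% / 2% / 5-collections description in the text, not HotSpot's actual implementation; the class and method names are hypothetical. On HotSpot the two percentages correspond to the `-XX:GCTimeLimit` and `-XX:GCHeapFreeLimit` options, and the check can be switched off with `-XX:-UseGCOverheadLimit`.

```java
/** Illustrative model of the "GC overhead limit" rule; not the JVM's real code. */
final class GcOverheadRule {
    // Thresholds taken from the description in the text.
    static final double GC_TIME_LIMIT = 0.98;       // more than 98% of time in GC
    static final double HEAP_RECOVERED_LIMIT = 0.02; // less than 2% of heap recovered
    static final int CONSECUTIVE_COLLECTIONS = 5;    // at least 5 collections in a row

    /** True if a single collection violates both thresholds. */
    static boolean collectionOverLimit(double gcTimeFraction, double heapRecoveredFraction) {
        return gcTimeFraction > GC_TIME_LIMIT && heapRecoveredFraction < HEAP_RECOVERED_LIMIT;
    }

    /** True if some run of CONSECUTIVE_COLLECTIONS collections all violate the thresholds. */
    static boolean wouldThrow(double[] gcTimeFractions, double[] heapRecoveredFractions) {
        int n = Math.min(gcTimeFractions.length, heapRecoveredFractions.length);
        int streak = 0;
        for (int i = 0; i < n; i++) {
            boolean over = collectionOverLimit(gcTimeFractions[i], heapRecoveredFractions[i]);
            streak = over ? streak + 1 : 0; // any healthy collection resets the streak
            if (streak >= CONSECUTIVE_COLLECTIONS) {
                return true;
            }
        }
        return false;
    }
}
```

The point of the streak counter is that one bad collection is not enough: the error signals a heap that stays almost full no matter how often the collector runs.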

1.2 Case study

1.2.1 DataX fails while syncing MySQL data to ES

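DataX's automatic diagnosis reported the following as the most likely cause of the task failure (the description in the log reads "DataX plugin runtime error; for the specific cause, see the error diagnostics printed when DataX finishes"):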

com.alibaba.datax.common.exception.DataXException: Code:[Framework-13], Description:[DataX插件运行时出错, 具体原因请参看DataX运行结束时的错误诊断信息 .]. - java.lang.OutOfMemoryError: GC overhead limit exceeded

at java.util.LinkedList.linkLast(LinkedList.java:142)

at java.util.LinkedList.add(LinkedList.java:338)

at io.searchbox.core.Bulk$Builder.addAction(Bulk.java:175)

at com.alibaba.datax.plugin.writer.elasticsearchwriter.ESWriter$Task.doBatchInsert(ESWriter.java:461)

at com.alibaba.datax.plugin.writer.elasticsearchwriter.ESWriter$Task.startWrite(ESWriter.java:293)

at com.alibaba.datax.core.taskgroup.runner.WriterRunner.run(WriterRunner.java:56)

at java.lang.Thread.run(Thread.java:745)

- java.lang.OutOfMemoryError: GC overhead limit exceeded

at java.util.LinkedList.linkLast(LinkedList.java:142)

at java.util.LinkedList.add(LinkedList.java:338)

at io.searchbox.core.Bulk$Builder.addAction(Bulk.java:175)

at com.alibaba.datax.plugin.writer.elasticsearchwriter.ESWriter$Task.doBatchInsert(ESWriter.java:461)

at com.alibaba.datax.plugin.writer.elasticsearchwriter.ESWriter$Task.startWrite(ESWriter.java:293)

at com.alibaba.datax.core.taskgroup.runner.WriterRunner.run(WriterRunner.java:56)

at java.lang.Thread.run(Thread.java:745)

at com.alibaba.datax.common.exception.DataXException.asDataXException(DataXException.java:40)

at com.alibaba.datax.core.job.scheduler.processinner.ProcessInnerScheduler.dealFailedStat(ProcessInnerScheduler.java:39)

at com.alibaba.datax.core.job.scheduler.AbstractScheduler.schedule(AbstractScheduler.java:99)

at com.alibaba.datax.core.job.JobContainer.schedule(JobContainer.java:535)

at com.alibaba.datax.core.job.JobContainer.start(JobContainer.java:119)

at com.alibaba.datax.core.Engine.start(Engine.java:92)

at com.alibaba.datax.core.Engine.entry(Engine.java:171)

at com.alibaba.datax.core.Engine.main(Engine.java:204)
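The trace shows the error surfacing while records are appended to a list inside the bulk request builder (Bulk$Builder.addAction called from doBatchInsert), so the heap is filling up with buffered records. A minimal sketch of bounded batching follows. It is not the ESWriter code; `BoundedBatcher` and its sink callback are hypothetical names used only to show how a flush threshold caps how many records are held at once.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Consumer;

/** Buffers records and hands them to a sink in batches of at most batchSize. */
final class BoundedBatcher<T> {
    private final int batchSize;
    private final Consumer<List<T>> sink; // hypothetical: e.g. "send one bulk request"
    private List<T> buffer = new ArrayList<>();

    BoundedBatcher(int batchSize, Consumer<List<T>> sink) {
        if (batchSize <= 0) {
            throw new IllegalArgumentException("batchSize must be positive");
        }
        this.batchSize = batchSize;
        this.sink = sink;
    }

    /** Adds one record and flushes as soon as the buffer reaches batchSize. */
    void add(T record) {
        buffer.add(record);
        if (buffer.size() >= batchSize) {
            flush();
        }
    }

    /** Sends whatever is buffered; the old list becomes garbage once the sink returns. */
    void flush() {
        if (buffer.isEmpty()) {
            return;
        }
        List<T> full = buffer;
        buffer = new ArrayList<>();
        sink.accept(full);
    }
}
```

With this shape, peak memory per writer is roughly proportional to batchSize times the size of one record, which is why a smaller batchSize relieves heap pressure.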

1.2.2 Check the memory used by the JVM process

Read the process's memory usage from /proc:

[root@hadoop 12735]# cat /proc/12735/

attr/ cmdline environ io mem ns/ pagemap sched stack task/

autogroup comm exe limits mountinfo numa_maps patch_state schedstat stat timers

auxv coredump_filter fd/ loginuid mounts oom_adj personality sessionid statm uid_map

cgroup cpuset fdinfo/ map_files/ mountstats oom_score projid_map setgroups status wchan

clear_refs cwd/ gid_map maps net/ oom_score_adj root/ smaps syscall

[root@hadoop 12735]# cat /proc/12735/sta

stack stat statm status

[root@hadoop 12735]# cat /proc/12735/status

VmRSS: 1220900 kB

The process is using about 1.2 GB of resident memory (VmRSS).

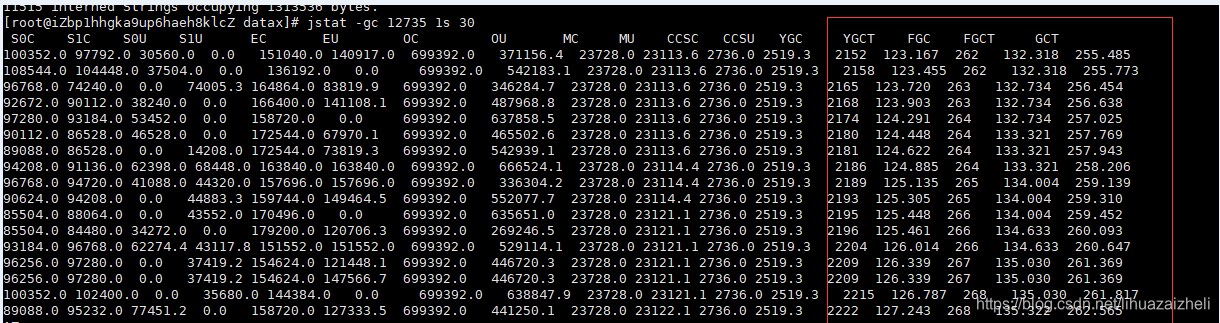
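The same value can be pulled out programmatically. The sketch below parses the text of a /proc/<pid>/status file and converts the VmRSS figure from kB to GiB; `ProcStatus` is a hypothetical helper name, and the parsing assumes the usual "VmRSS: <number> kB" layout shown above.

```java
import java.util.OptionalLong;

/** Extracts VmRSS from the contents of /proc/<pid>/status (Linux only). */
final class ProcStatus {
    /** Returns the VmRSS value in kB, or empty if the field is missing. */
    static OptionalLong vmRssKb(String statusText) {
        for (String line : statusText.split("\n")) {
            if (line.startsWith("VmRSS:")) {
                // The remainder looks like "   1220900 kB"; the first token is the number.
                String[] parts = line.substring("VmRSS:".length()).trim().split("\\s+");
                return OptionalLong.of(Long.parseLong(parts[0]));
            }
        }
        return OptionalLong.empty();
    }

    /** Converts kB (as reported by the kernel, 1024-byte units) to GiB. */
    static double kbToGib(long kb) {
        return kb / (1024.0 * 1024.0);
    }
}
```

On Java 11 or later the input could come from `Files.readString(Path.of("/proc/12735/status"))`. For the value above, 1220900 kB is about 1.16 GiB, consistent with the rounded 1.2 GB figure. Note that VmRSS covers the whole process (heap, metaspace, thread stacks, native buffers), not just the Java heap.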

1.2.3 Check the process's GC activity

Sample at a 1-second interval, 30 times:

jstat -gc 53345 1s 30

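YGC: number of young-generation GC events

YGCT: total time spent in young-generation GC, in seconds

FGC: number of full GC events

FGCT: total time spent in full GC, in seconds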

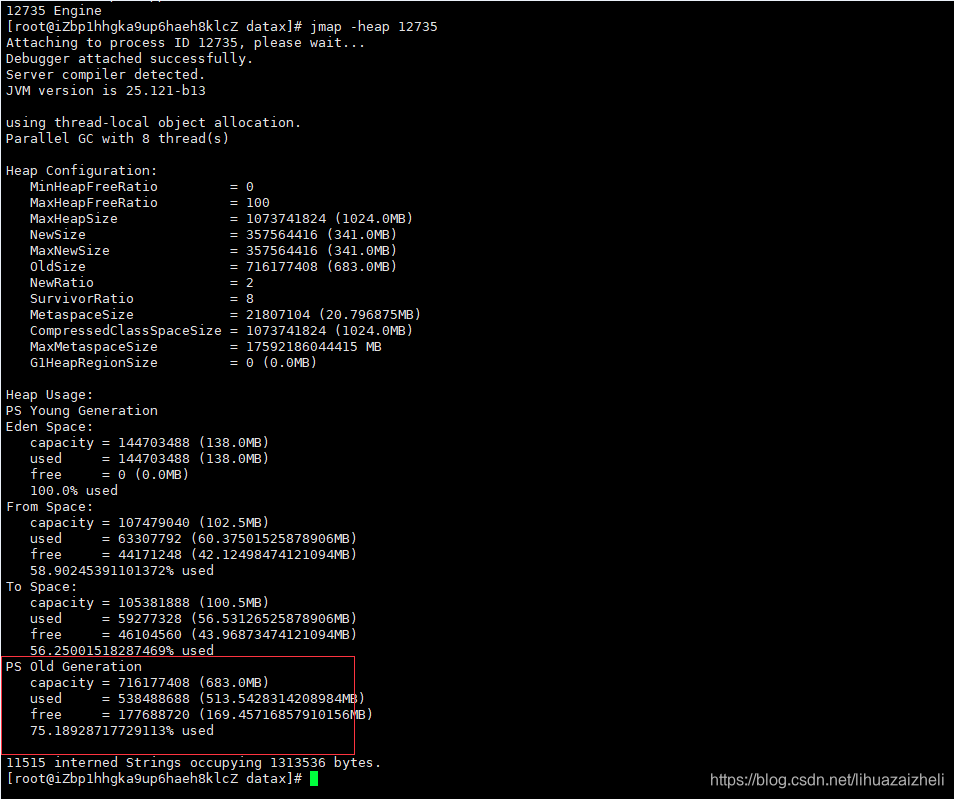
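Because these counters are cumulative, the useful signal is how much they grow between two samples. The sketch below parses a jstat header and data row into a map and computes the fraction of the sampling interval spent in GC from the GCT column (total GC time, which jstat -gc also prints). `JstatSample` is a hypothetical helper, and any sample values fed to it are illustrative rather than taken from the run above.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** Parses one jstat data row against its header and compares consecutive samples. */
final class JstatSample {
    /** Maps each column name to its numeric value; non-numeric cells such as "-" become NaN. */
    static Map<String, Double> parse(String header, String row) {
        String[] names = header.trim().split("\\s+");
        String[] values = row.trim().split("\\s+");
        if (names.length != values.length) {
            throw new IllegalArgumentException("header and row have different column counts");
        }
        Map<String, Double> out = new LinkedHashMap<>();
        for (int i = 0; i < names.length; i++) {
            double parsed;
            try {
                parsed = Double.parseDouble(values[i]);
            } catch (NumberFormatException e) {
                parsed = Double.NaN;
            }
            out.put(names[i], parsed);
        }
        return out;
    }

    /** Fraction of the interval spent in GC, using the cumulative GCT column (seconds). */
    static double gcTimeFraction(Map<String, Double> earlier, Map<String, Double> later,
                                 double intervalSeconds) {
        Double before = earlier.get("GCT");
        Double after = later.get("GCT");
        if (before == null || after == null) {
            throw new IllegalArgumentException("GCT column not present");
        }
        return (after - before) / intervalSeconds;
    }
}
```

A value close to 1.0 across many consecutive samples, together with FGC climbing on every line, is the pattern that precedes GC overhead limit exceeded.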

1.2.4 Inspect the heap

jmap -heap 55534
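jmap inspects another process from the outside. When the code can be changed or a diagnostic class can be run inside the same JVM, similar numbers are available through the standard java.lang.management API. The sketch below is an in-process alternative, not a reimplementation of jmap; `HeapSnapshot` is a hypothetical class name.

```java
import java.lang.management.GarbageCollectorMXBean;
import java.lang.management.ManagementFactory;
import java.lang.management.MemoryUsage;

/** Reads heap usage and accumulated GC time of the current JVM. */
final class HeapSnapshot {
    /** Current heap usage (used, committed, max) of this JVM. */
    static MemoryUsage heap() {
        return ManagementFactory.getMemoryMXBean().getHeapMemoryUsage();
    }

    /** Total approximate GC time in milliseconds, summed over all collectors. */
    static long totalGcMillis() {
        long total = 0;
        for (GarbageCollectorMXBean gc : ManagementFactory.getGarbageCollectorMXBeans()) {
            long millis = gc.getCollectionTime(); // -1 when the collector does not report it
            if (millis > 0) {
                total += millis;
            }
        }
        return total;
    }

    public static void main(String[] args) {
        MemoryUsage usage = heap();
        System.out.println("heap used (bytes):      " + usage.getUsed());
        System.out.println("heap committed (bytes): " + usage.getCommitted());
        System.out.println("heap max (bytes):       " + usage.getMax()); // -1 if undefined
        System.out.println("total GC time (ms):     " + totalGcMillis());
    }
}
```

Comparing used against max shows how close the heap is to the -Xmx ceiling, which is the figure that matters when deciding whether to raise it.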

1.2.5 Solution

1. Increase the JVM heap: "jvm": "-Xms2048m -Xmx2048m"

2. Or reduce the batch size written to ES: "batchSize": 10000

{

"job": {

"entry": {

"jvm": "-Xms1024m -Xmx1024m"

},

"setting": {

"speed": {

"channel": 8,

"record":-1,

"byte":-1

},

"errorLimit": {

"record": 0,

"percentage": 0.02

}

},

"content": [

{

"reader": {

......

},

"writer":{

"name": "elasticsearchwriter",

"parameter": {

......

"batchSize": 20000,

......

}

}

}

]

}

}
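A rough back-of-the-envelope estimate shows why both knobs help. The sketch below assumes that each channel buffers up to one full batch at the same time and that a buffered record occupies some average number of bytes on the heap. Both are assumptions for illustration: the 2048-byte record size is hypothetical, and `BatchMemoryEstimate` is not part of DataX.

```java
/** Rough estimate of heap held by buffered records across concurrent channels. */
final class BatchMemoryEstimate {
    /** Bytes buffered if every channel holds one full batch at once. */
    static long bufferedBytes(int channels, int batchSize, long avgRecordBytes) {
        long records = Math.multiplyExact((long) channels, (long) batchSize);
        return Math.multiplyExact(records, avgRecordBytes);
    }

    /** Largest batchSize that keeps the buffers within budgetBytes. */
    static int maxBatchSize(long budgetBytes, int channels, long avgRecordBytes) {
        long perChannel = budgetBytes / channels / avgRecordBytes;
        return (int) Math.min(Integer.MAX_VALUE, perChannel);
    }
}
```

Under these assumptions, 8 channels with batchSize 20000 and 2048-byte records buffer 8 × 20000 × 2048 = 327,680,000 bytes (about 312 MiB), a large share of a 1024 MiB heap before counting the reader side and the serialized bulk request. Halving batchSize to 10000 halves that figure, and doubling the heap to 2048 MiB leaves the same buffers with twice the headroom.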

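Copyright notice: This is an original article by lihuazaizheli, licensed under CC 4.0 BY-SA. When reposting, please include a link to the original source and this notice.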