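A complete image-classification program consists of the following parts: importing the required libraries, preprocessing the data, building the neural network model, training and testing, and reporting the loss and accuracy.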

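- Import libraries

import os  # helpers for working with file paths and directories
import time

import matplotlib.pyplot as plt
import torch
import torchvision
from torch.autograd import Variable  # deprecated since PyTorch 0.4; tensors track gradients directly
from torchvision import datasets, transforms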

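- Data preprocessing: this part has three steps (define the transform, read the images, wrap them in a loader). The data is already organized in a single folder: the Data folder contains a training folder and a test folder (named `train` and `valid` in the code), and inside each of them the images are placed in one sub-folder per class. ImageFolder and DataLoader are therefore used to load the data.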

# Read the data

data_dir = 'C:/Users/17865/Desktop/Sort/Data'

# Preprocessing: resize every image to 64x64 (a resize, not a crop) and convert it to a tensor.
# transforms.Resize is the current name of the former transforms.Scale.
data_transform = {x: transforms.Compose([transforms.Resize([64, 64]),
                                         transforms.ToTensor()])
                  for x in ['train', 'valid']}

# Read the images. ImageFolder returns a dataset of (image, label) tuples that supports
# indexing and iteration and can be passed to torch.utils.data.DataLoader.
image_datasets = {x: datasets.ImageFolder(root=os.path.join(data_dir, x),
                                          transform=data_transform[x])
                  for x in ['train', 'valid']}

# Once the data is read, wrap it in loaders that yield shuffled batches of 4 images
dataloader = {x: torch.utils.data.DataLoader(dataset=image_datasets[x],
                                             batch_size=4,
                                             shuffle=True)
              for x in ['train', 'valid']}

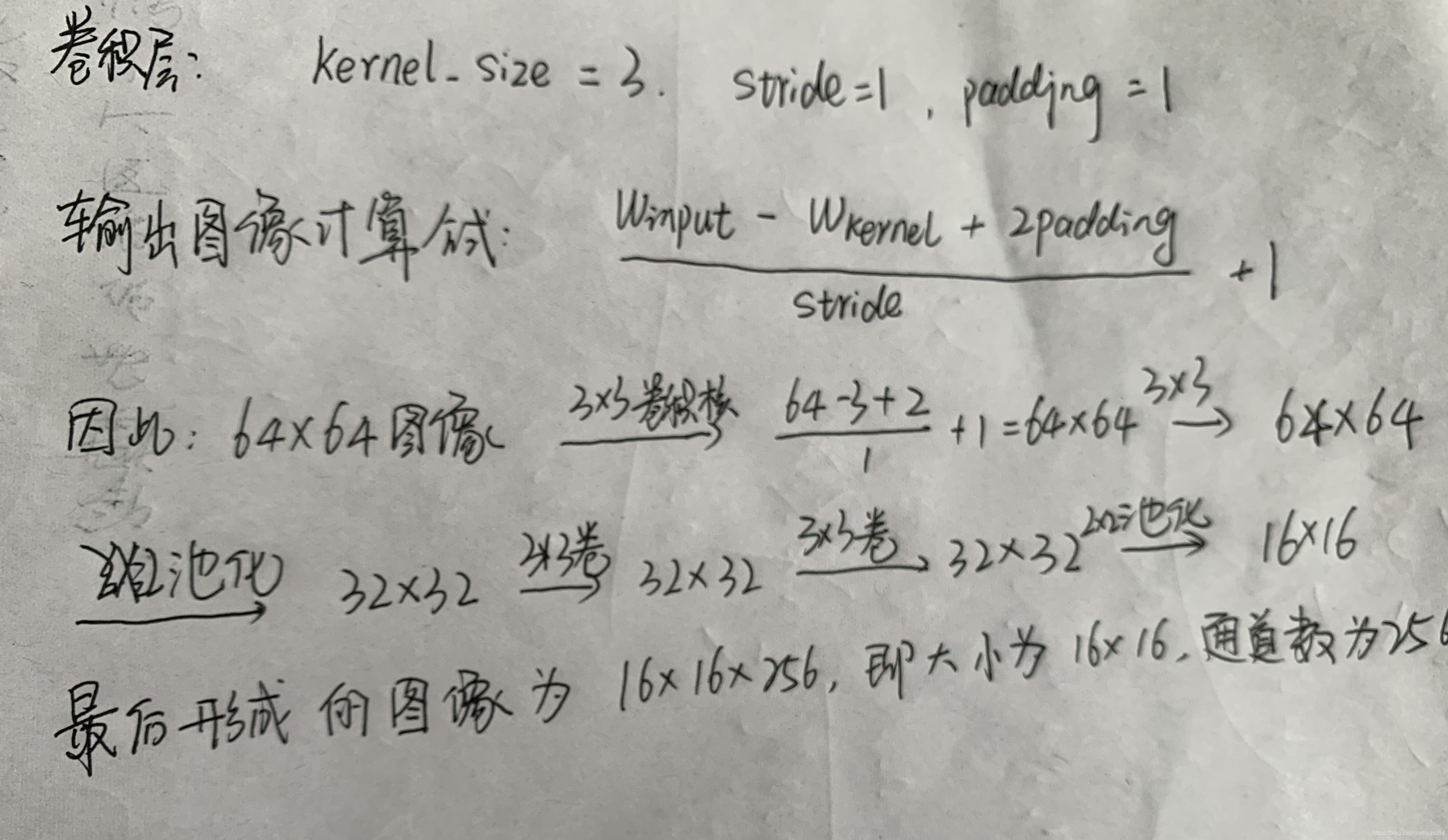
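ImageFolder derives the labels from the sub-folder names: it sorts the class folder names and assigns the indices 0, 1, 2, ... in that order. The sketch below mimics that mapping with the standard library only. The class names `dog`, `cat`, `bird` and the temporary directory are hypothetical stand-ins, not the dataset used in this post.

```python
import os
import tempfile


def class_to_idx(root):
    """Map each class sub-folder of `root` to an index, in sorted name order."""
    classes = sorted(d for d in os.listdir(root)
                     if os.path.isdir(os.path.join(root, d)))
    return {name: idx for idx, name in enumerate(classes)}


def make_demo_tree(class_names=("dog", "cat", "bird")):
    """Create a throwaway Data/train/<class>/ layout (hypothetical class names)."""
    base = tempfile.mkdtemp()
    train_dir = os.path.join(base, "Data", "train")
    for name in class_names:
        os.makedirs(os.path.join(train_dir, name))
    return train_dir


demo_train_dir = make_demo_tree()
print(class_to_idx(demo_train_dir))  # {'bird': 0, 'cat': 1, 'dog': 2}
```

On the real dataset, `image_datasets['train'].class_to_idx` exposes the mapping that ImageFolder actually built, which is worth printing once to know which index means which class.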

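- Build the network model: a simple model is assembled fairly freely, but the input and output image sizes, kernel size, padding and stride still have to fit together. The input channel count of 3 stands for the three RGB channels; the intermediate channel counts are chosen freely. The final output size of 3 stands for the 3 classes; the other settings are also fairly arbitrary. The network has four convolutions, two poolings and one dropout. The 16 * 16 * 256 input size of the classifier follows from this: every 3x3 convolution with stride 1 and padding 1 keeps the spatial size, every 2x2 max pooling halves it, so a 64x64 input shrinks to 32x32 and then to 16x16, with 256 channels after the last convolution.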

class Models(torch.nn.Module):
    def __init__(self):
        super(Models, self).__init__()
        # Feature extractor: four 3x3 convolutions and two 2x2 max poolings
        self.Conv = torch.nn.Sequential(
            torch.nn.Conv2d(3, 64, kernel_size=3, stride=1, padding=1),
            torch.nn.ReLU(),
            torch.nn.Conv2d(64, 128, kernel_size=3, stride=1, padding=1),
            torch.nn.ReLU(),
            torch.nn.MaxPool2d(kernel_size=2, stride=2),
            torch.nn.Conv2d(128, 128, kernel_size=3, stride=1, padding=1),
            torch.nn.ReLU(),
            torch.nn.Conv2d(128, 256, kernel_size=3, stride=1, padding=1),
            torch.nn.ReLU(),
            torch.nn.MaxPool2d(kernel_size=2, stride=2))
        # Classifier: flattened features -> 512 hidden units -> 3 classes
        self.Classes = torch.nn.Sequential(
            torch.nn.Linear(16 * 16 * 256, 512),
            torch.nn.ReLU(),
            torch.nn.Dropout(p=0.5),
            torch.nn.Linear(512, 3))

    def forward(self, inputs):
        x = self.Conv(inputs)
        # Flatten each 256x16x16 feature map into one vector per image
        x = x.view(-1, 16 * 16 * 256)
        x = self.Classes(x)
        return x

model = Models()
print(model)
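The value 16 * 16 * 256 in the first Linear layer can be checked with the standard output-size formula for convolution and pooling layers, floor((size + 2 * padding - kernel) / stride) + 1. The following is a small sketch of that arithmetic in plain Python; the helper `conv_out` and the `layers` table are illustrative names, not part of the model code.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Spatial output size of a convolution or pooling layer."""
    return (size + 2 * padding - kernel) // stride + 1


# (kind, kernel, stride, padding, out_channels), mirroring the layers in Models.Conv
layers = [
    ("conv", 3, 1, 1, 64),
    ("conv", 3, 1, 1, 128),
    ("pool", 2, 2, 0, None),
    ("conv", 3, 1, 1, 128),
    ("conv", 3, 1, 1, 256),
    ("pool", 2, 2, 0, None),
]

size, channels = 64, 3  # 64x64 RGB input
for kind, kernel, stride, padding, out_channels in layers:
    size = conv_out(size, kernel, stride, padding)
    if out_channels is not None:  # pooling keeps the channel count
        channels = out_channels
    print(kind, size, channels)

flat_features = size * size * channels
print(flat_features)  # 65536 = 16 * 16 * 256
```

If the input resolution or any kernel, stride or padding value changes, rerunning this calculation gives the new size to use in both `torch.nn.Linear(...)` and `x.view(...)`.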

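- Define the loss and the optimizer: cross-entropy loss (suited to multi-class classification) and the adaptive Adam optimizer (a common first choice).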

loss_f = torch.nn.CrossEntropyLoss()
optimizer = torch.optim.Adam(model.parameters(), lr=0.00001)

# Train on the GPU when one is available
Use_gpu = torch.cuda.is_available()

# Move the model to the GPU
if Use_gpu:
    model = model.cuda()
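To make the loss concrete: CrossEntropyLoss takes the raw class scores (logits) of the network, applies a log-softmax, and returns the negative log-probability of the true class, averaged over the batch by default. Below is a plain-Python sketch of that computation; the logits and targets are made-up numbers for a batch of 2 images and 3 classes.

```python
import math


def cross_entropy(logits, target):
    """Cross-entropy for one sample: -log(softmax(logits)[target])."""
    m = max(logits)  # subtract the max for numerical stability
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[target]


def batch_cross_entropy(batch_logits, targets):
    """Mean over the batch, as with the default reduction='mean'."""
    losses = [cross_entropy(l, t) for l, t in zip(batch_logits, targets)]
    return sum(losses) / len(losses)


# Hypothetical logits: the first sample is confidently class 0, the second is undecided
batch_logits = [[2.0, 0.5, -1.0], [0.0, 0.0, 0.0]]
targets = [0, 2]
print(batch_cross_entropy(batch_logits, targets))
```

An undecided prediction over 3 classes costs log(3), about 1.10, so a training loss that stays near that value suggests the network has not learned anything yet.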

- Train and validate the network

# Train for 5 epochs
epoch_n = 5
time_open = time.time()

# Report once per epoch
for epoch in range(epoch_n):
    print('epoch {}/{}'.format(epoch, epoch_n - 1))
    print('-' * 10)

    # Each epoch runs a training phase and then a validation phase
    for phase in ['train', 'valid']:
        if phase == 'train':
            # train(True): Dropout is active; batch-norm layers, if any, use batch mean and variance
            print('training...')
            model.train(True)
        else:
            # train(False): Dropout is off; batch-norm layers, if any, use running mean and variance
            print('validating...')
            model.train(False)

        # Reset the running loss and the count of correct predictions
        running_loss = 0.0
        running_corrects = 0

        # enumerate(..., 1) numbers the batches starting from 1
        for batch, data in enumerate(dataloader[phase], 1):
            X, Y = data
            # Move the batch to the GPU only when one is available
            if Use_gpu:
                X, Y = X.cuda(), Y.cuda()

            # Reset the gradients
            optimizer.zero_grad()

            # Track gradients only in the training phase
            with torch.set_grad_enabled(phase == 'train'):
                # Raw class scores (logits), one row per image
                y_pred = model(X)
                # Index of the largest score along dim 1 (the class dimension) is the prediction
                _, pred = torch.max(y_pred, 1)
                # Compute the loss
                loss = loss_f(y_pred, Y)

                # Training also needs backpropagation and a parameter update
                if phase == 'train':
                    loss.backward()
                    optimizer.step()

            # Sum of the per-batch mean losses
            running_loss += loss.item()
            # Number of correctly classified images
            running_corrects += torch.sum(pred == Y).item()

            # During training, print the running loss and accuracy every 500 batches
            if batch % 500 == 0 and phase == 'train':
                print('batch{},trainLoss:{:.4f},trainAcc:{:.4f}'.format(
                    batch, running_loss / batch, 100 * running_corrects / (4 * batch)))

        # Loss and accuracy for the whole epoch
        epoch_loss = running_loss * 4 / len(image_datasets[phase])
        epoch_acc = 100 * running_corrects / len(image_datasets[phase])
        print('{} Loss:{:.4f} Acc:{:.4f}%'.format(phase, epoch_loss, epoch_acc))

time_end = time.time() - time_open
print(time_end)
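One detail of the epoch statistics is worth spelling out. `running_loss * 4 / len(image_datasets[phase])` treats every batch as holding exactly 4 images, which is only exact when the dataset size is a multiple of the batch size. A minimal sketch of the difference, using made-up per-batch losses for a hypothetical 10-image dataset:

```python
def epoch_average(batch_means, batch_sizes):
    """Per-sample average loss from per-batch mean losses, weighted by batch size."""
    total = sum(m * n for m, n in zip(batch_means, batch_sizes))
    return total / sum(batch_sizes)


# Hypothetical epoch: 10 images with batch_size=4, so the last batch has only 2
batch_means = [0.9, 0.7, 0.2]
batch_sizes = [4, 4, 2]

approx = sum(batch_means) * 4 / sum(batch_sizes)  # formula used in the loop
exact = epoch_average(batch_means, batch_sizes)   # sample-weighted version
print(approx, exact)
```

In the training loop, the weighted version would correspond to accumulating `loss.item() * X.size(0)` per batch and dividing by the dataset size at the end; for large datasets the two numbers are nearly identical.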

- Save the model and its parameters

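# Save and load the entire model
torch.save(model, 'model.pth')
# From PyTorch 2.6 on, torch.load defaults to weights_only=True, so loading
# a whole pickled model needs torch.load('model.pth', weights_only=False)
model_1 = torch.load('model.pth')
print(model_1)

# Save and load only the model parameters
torch.save(model.state_dict(), 'params.pth')
dic = torch.load('params.pth')
model.load_state_dict(dic)
print(dic)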
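Saving only the parameters is usually the more portable option, because the file then contains tensors rather than a pickled class. The sketch below shows the round trip into a fresh model instance followed by inference. The tiny `make_model` network and the file name `params_demo.pth` are hypothetical stand-ins so that the example runs quickly; the same steps should apply to `Models()`.

```python
import os
import tempfile

import torch


def make_model():
    """A tiny stand-in network (hypothetical), with Dropout like the real model."""
    return torch.nn.Sequential(
        torch.nn.Linear(8, 4),
        torch.nn.ReLU(),
        torch.nn.Dropout(p=0.5),
        torch.nn.Linear(4, 3))


model_a = make_model()
path = os.path.join(tempfile.mkdtemp(), 'params_demo.pth')
torch.save(model_a.state_dict(), path)

model_b = make_model()                     # fresh instance with random weights
model_b.load_state_dict(torch.load(path))  # restore the saved parameters
model_a.eval()                             # eval mode disables Dropout
model_b.eval()

x = torch.randn(2, 8)                      # a batch of 2 made-up inputs
with torch.no_grad():                      # no gradients needed for inference
    same = torch.equal(model_a(x), model_b(x))
    pred = model_b(x).argmax(dim=1)        # predicted class index per input
print(same, pred.shape)
```

Calling `eval()` before inference matters here: with Dropout still active, two forward passes over the same input would generally give different outputs.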

Complete code

import os  # helpers for working with file paths and directories
import time

import matplotlib.pyplot as plt
import torch
import torchvision
from torchvision import datasets, transforms

# Read the data
data_dir = 'C:/Users/17865/Desktop/超声医疗/sjb的数据集/Data'

# Preprocessing: resize to 64x64 and convert to a tensor
# (transforms.Resize is the current name of the former transforms.Scale)
data_transform = {x: transforms.Compose([transforms.Resize([64, 64]),
                                         transforms.ToTensor()])
                  for x in ['train', 'valid']}

# Read the images as (image, label) pairs
image_datasets = {x: datasets.ImageFolder(root=os.path.join(data_dir, x),
                                          transform=data_transform[x])
                  for x in ['train', 'valid']}

# Once the data is read, wrap it in loaders
dataloader = {x: torch.utils.data.DataLoader(dataset=image_datasets[x],
                                             batch_size=4,
                                             shuffle=True)
              for x in ['train', 'valid']}

# Build the model
class Models(torch.nn.Module):
    def __init__(self):
        super(Models, self).__init__()
        self.Conv = torch.nn.Sequential(
            torch.nn.Conv2d(3, 64, kernel_size=3, stride=1, padding=1),
            torch.nn.ReLU(),
            torch.nn.Conv2d(64, 128, kernel_size=3, stride=1, padding=1),
            torch.nn.ReLU(),
            torch.nn.MaxPool2d(kernel_size=2, stride=2),
            torch.nn.Conv2d(128, 128, kernel_size=3, stride=1, padding=1),
            torch.nn.ReLU(),
            torch.nn.Conv2d(128, 256, kernel_size=3, stride=1, padding=1),
            torch.nn.ReLU(),
            torch.nn.MaxPool2d(kernel_size=2, stride=2))
        self.Classes = torch.nn.Sequential(
            torch.nn.Linear(16 * 16 * 256, 512),
            torch.nn.ReLU(),
            torch.nn.Dropout(p=0.5),
            torch.nn.Linear(512, 3))

    def forward(self, inputs):
        x = self.Conv(inputs)
        x = x.view(-1, 16 * 16 * 256)
        x = self.Classes(x)
        return x

model = Models()
print(model)

'''
# Save and load the entire model
torch.save(model, 'model.pth')
model_1 = torch.load('model.pth')
print(model_1)

# Save and load only the model parameters
torch.save(model.state_dict(), 'params.pth')
dic = torch.load('params.pth')
model.load_state_dict(dic)
print(dic)
'''

loss_f = torch.nn.CrossEntropyLoss()
optimizer = torch.optim.Adam(model.parameters(), lr=0.00001)

Use_gpu = torch.cuda.is_available()
if Use_gpu:
    model = model.cuda()

epoch_n = 5
time_open = time.time()

for epoch in range(epoch_n):
    print('epoch {}/{}'.format(epoch, epoch_n - 1))
    print('-' * 10)

    for phase in ['train', 'valid']:
        if phase == 'train':
            # train(True): Dropout is active; batch-norm layers, if any, use batch mean and variance
            print('training...')
            model.train(True)
        else:
            # train(False): Dropout is off; batch-norm layers, if any, use running mean and variance
            print('validating...')
            model.train(False)

        running_loss = 0.0
        running_corrects = 0

        # enumerate(..., 1) numbers the batches starting from 1
        for batch, data in enumerate(dataloader[phase], 1):
            X, Y = data
            # Move the batch to the GPU only when one is available
            if Use_gpu:
                X, Y = X.cuda(), Y.cuda()

            # Reset the gradients
            optimizer.zero_grad()

            # Track gradients only in the training phase
            with torch.set_grad_enabled(phase == 'train'):
                # Raw class scores (logits), one row per image
                y_pred = model(X)
                # Index of the largest score along dim 1 (the class dimension) is the prediction
                _, pred = torch.max(y_pred, 1)
                # Compute the loss
                loss = loss_f(y_pred, Y)

                # Training also needs backpropagation and a parameter update
                if phase == 'train':
                    loss.backward()
                    optimizer.step()

            # Sum of the per-batch mean losses
            running_loss += loss.item()
            # Number of correctly classified images
            running_corrects += torch.sum(pred == Y).item()

            # During training, print the running loss and accuracy every 500 batches
            if batch % 500 == 0 and phase == 'train':
                print('batch{},trainLoss:{:.4f},trainAcc:{:.4f}'.format(
                    batch, running_loss / batch, 100 * running_corrects / (4 * batch)))

        # Loss and accuracy for the whole epoch
        epoch_loss = running_loss * 4 / len(image_datasets[phase])
        epoch_acc = 100 * running_corrects / len(image_datasets[phase])
        print('{} Loss:{:.4f} Acc:{:.4f}%'.format(phase, epoch_loss, epoch_acc))

time_end = time.time() - time_open
print(time_end)

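Copyright notice: this is an original article by aass6d, released under the CC 4.0 BY-SA license. When reposting, please include a link to the original source and this notice.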