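Ceph is a unified distributed storage system that offers good performance, reliability, and scalability. Its main design goal is a POSIX-compatible distributed file system with no single point of failure, in which data is fault tolerant and replicated seamlessly.

I. Prepare the servers

1. Passwordless SSH login

[root@ceph-1 ~]# ssh-keygen

[root@ceph-1 ~]# ssh-copy-id root@ceph-2

[root@ceph-1 ~]# ssh-copy-id root@ceph-3

2. Time synchronization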

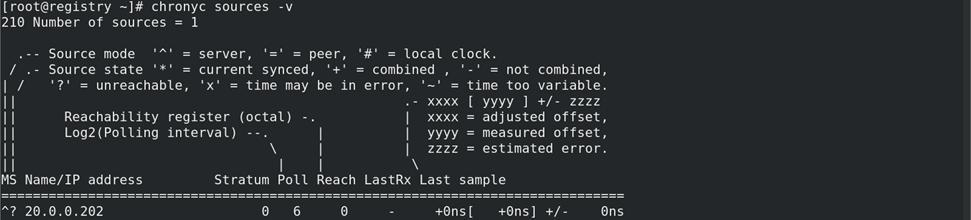

[root@ceph-1 ~]# vim /etc/chrony.conf

Add:

    server 20.0.0.202 iburst

[root@ceph-1 ~]# scp /etc/chrony.conf root@ceph-2:/etc/

[root@ceph-1 ~]# scp /etc/chrony.conf root@ceph-3:/etc/

Note: the chronyd service must be restarted on ceph-1, ceph-2, and ceph-3.

[root@ceph-1 ~]# systemctl restart chronyd

[root@ceph-1 ~]# chronyc sources -v
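The copy and restart steps repeat for every node, so they can be wrapped in a small loop. The following is a minimal sketch, assuming the node names used in this article and working passwordless SSH. The `sync_chrony` helper and its dry-run default are illustrative names, not part of chrony or Ceph. ceph-1 itself is still restarted locally.

```shell
# Hypothetical helper: print (or run) the commands that push chrony.conf
# to the other nodes and restart chronyd there.
NODES="ceph-2 ceph-3"

sync_chrony() {
    # $1 = "run" to execute; anything else only prints the commands.
    mode="${1:-dry-run}"
    for node in $NODES; do
        for cmd in \
            "scp /etc/chrony.conf root@${node}:/etc/" \
            "ssh root@${node} systemctl restart chronyd"; do
            if [ "$mode" = "run" ]; then
                $cmd || return 1
            else
                echo "$cmd"
            fi
        done
    done
}

# Dry run: shows the four commands without touching any host.
sync_chrony dry-run
```

Calling `sync_chrony run` executes the same commands instead of printing them.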

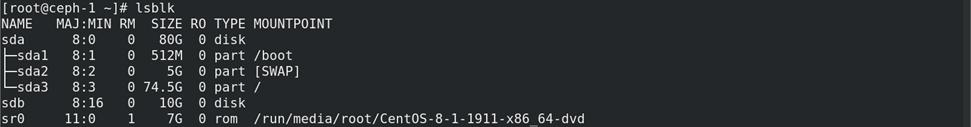

3. Add disks

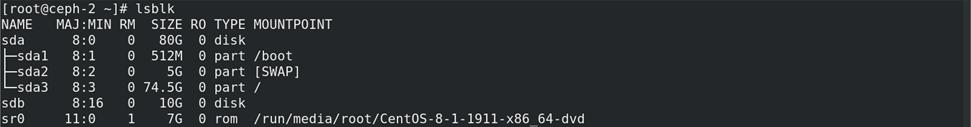

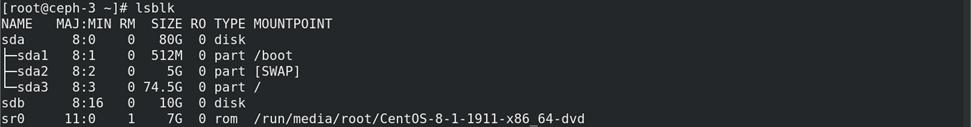

(1) Add one disk to each of the ceph-1, ceph-2, and ceph-3 servers.

[root@ceph-1 ~]# lsblk

[root@ceph-2 ~]# lsblk

[root@ceph-3 ~]# lsblk

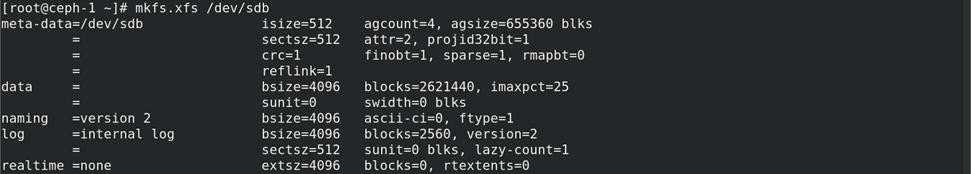

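(2) Format the disks

[root@ceph-1 ~]# mkfs.xfs /dev/sdb

[root@ceph-2 ~]# mkfs.xfs /dev/sdb

[root@ceph-3 ~]# mkfs.xfs /dev/sdb

Note: cephadm only treats a device as available for an OSD when it carries no partitions and no file system. If ceph orch device ls later reports /dev/sdb as unavailable, the disk may need to be wiped first.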

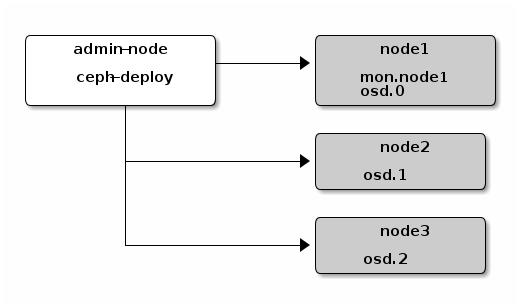

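II. Deploy Ceph

At the time of writing, Ceph officially offers three ways to deploy a cluster: ceph-deploy, cephadm, and manual installation:

- ceph-deploy: an automated cluster deployment tool that has been in use for a long time, is mature and stable, is integrated into many automation tools, and can be used for production deployments;

- cephadm: a new cluster deployment tool available since Octopus that supports adding nodes through a graphical interface or the command line; it is not yet recommended for production, but is worth trying if you are interested;

- manual: step-by-step manual deployment of a Ceph cluster; it allows more customization and is harder to carry out, but gives a clear understanding of the deployment details.

1. Install the tools on all nodes (run from ceph-1)

(1) Prepare the yum repository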

[root@ceph-1 ~]# vim /etc/yum.repos.d/ceph.repo

Add:

    [Ceph]
    name=Ceph packages for $basearch
    baseurl=https://mirrors.aliyun.com/ceph/rpm-octopus/el8/x86_64/
    enabled=1
    gpgcheck=1
    type=rpm-md
    gpgkey=https://mirrors.aliyun.com/ceph/keys/release.asc

    [Ceph-noarch]
    name=Ceph noarch packages
    baseurl=https://mirrors.aliyun.com/ceph/rpm-octopus/el8/noarch/
    enabled=1
    gpgcheck=1
    type=rpm-md
    gpgkey=https://mirrors.aliyun.com/ceph/keys/release.asc

    [ceph-source]
    name=Ceph source packages
    baseurl=https://mirrors.aliyun.com/ceph/rpm-octopus/el8/SRPMS/
    enabled=1
    gpgcheck=1
    type=rpm-md
    gpgkey=https://mirrors.aliyun.com/ceph/keys/release.asc

[root@ceph-1 ~]# scp /etc/yum.repos.d/ceph.repo root@ceph-2:/etc/yum.repos.d/

[root@ceph-1 ~]# scp /etc/yum.repos.d/ceph.repo root@ceph-3:/etc/yum.repos.d/

[root@ceph-1 ~]# yum install epel-release -y

[root@ceph-1 ~]# yum clean all && yum makecache

(2) Deploy the Ceph packages on all nodes

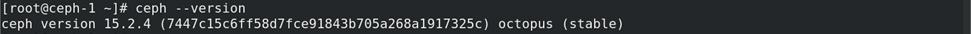

[root@ceph-1 ~]# yum -y install ceph

[root@ceph-1 ~]# yum install -y ceph-common

[root@ceph-1 ~]# ceph --version

2. Create the management node

(2) Create the management node on ceph-1

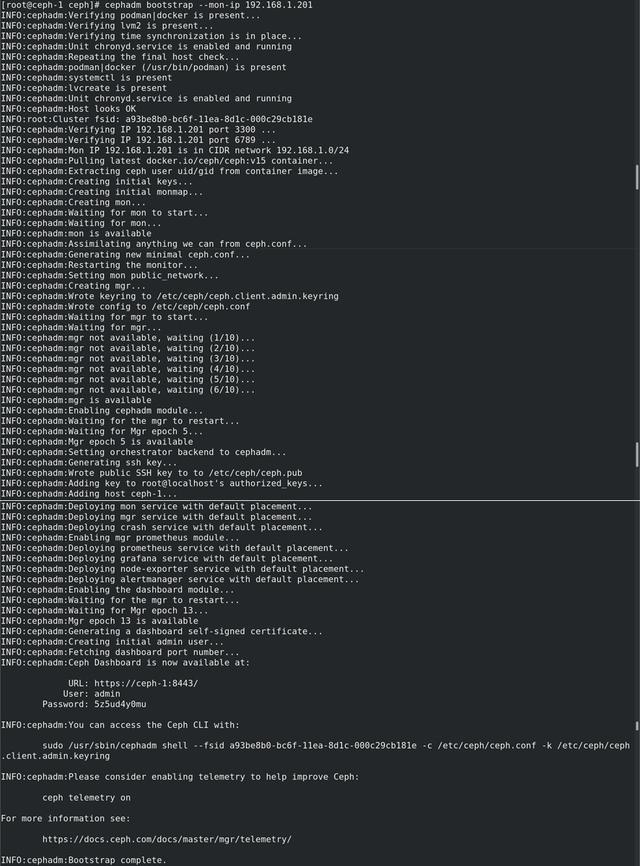

[root@ceph-1 ~]# yum install -y cephadm

[root@ceph-1 ~]# cd /etc/ceph/

[root@ceph-1 ceph]# cephadm bootstrap --mon-ip 192.168.1.201

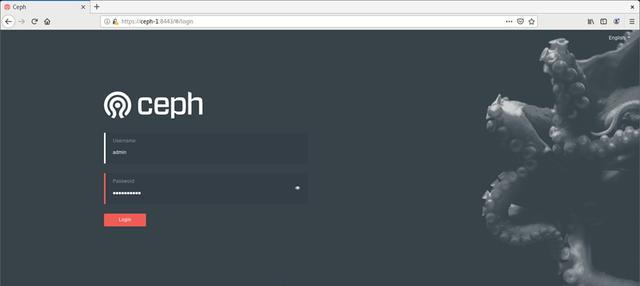

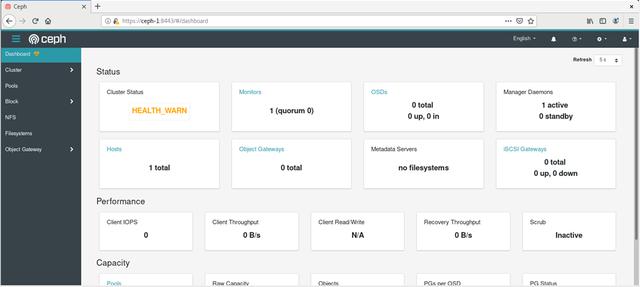

(3) Access the Ceph dashboard UI. Note: on first login you are required to change the password.

[root@ceph-1 ~]# podman images

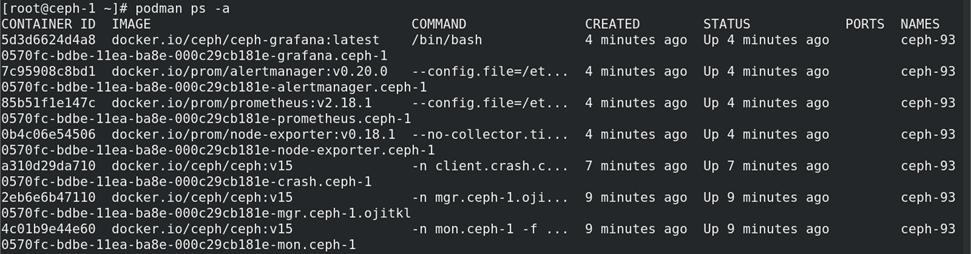

[root@ceph-1 ~]# podman ps -a

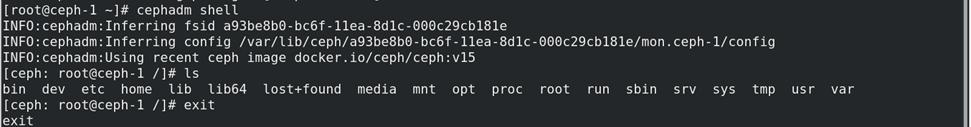

The cephadm shell command starts a bash shell inside a container that has all the Ceph packages installed.

[root@ceph-1 ~]# cephadm shell

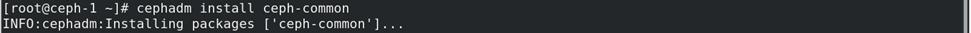

[root@ceph-1 ~]# cephadm install ceph-common

or

[root@ceph-1 ~]# yum install -y ceph-common

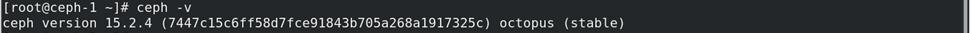

[root@ceph-1 ~]# ceph -v

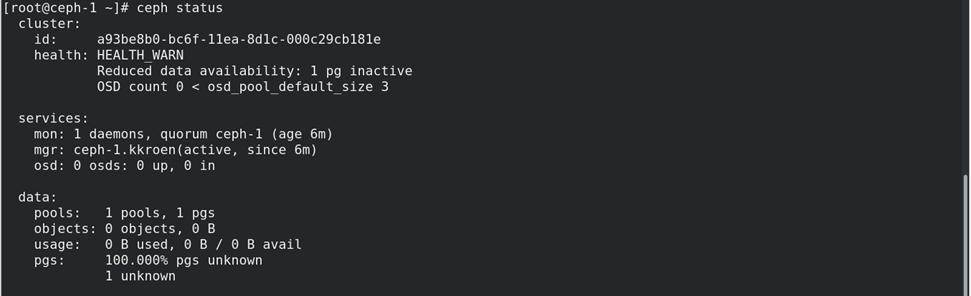

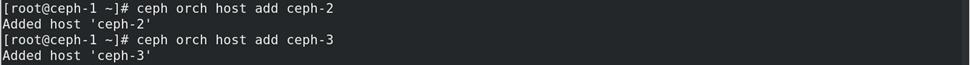

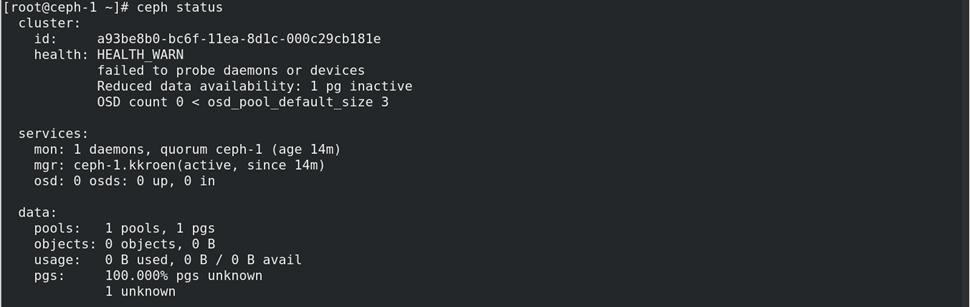

[root@ceph-1 ~]# ceph status

[root@ceph-1 ~]# ceph health

3. Create the Ceph cluster

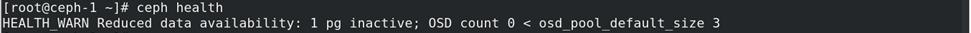

[root@ceph-1 ~]# ssh-copy-id -f -i /etc/ceph/ceph.pub root@ceph-2

[root@ceph-1 ~]# ssh-copy-id -f -i /etc/ceph/ceph.pub root@ceph-3

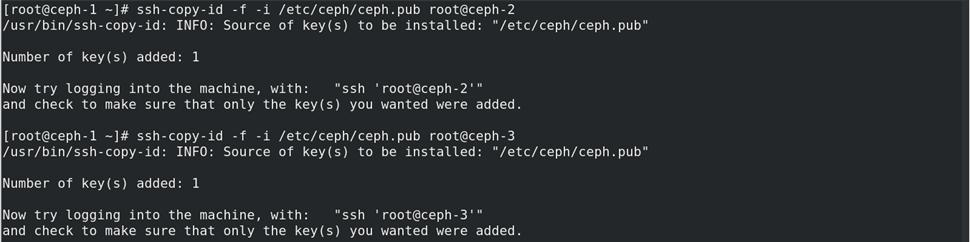

[root@ceph-1 ~]# ceph orch host add ceph-2

[root@ceph-1 ~]# ceph orch host add ceph-3

[root@ceph-1 ~]# ceph status

4. Deploy and add monitors

(1) Set the public network so that clients can reach the cluster

[root@ceph-1 ~]# ceph config set mon public_network 192.168.1.0/24

(2) Label the monitor nodes

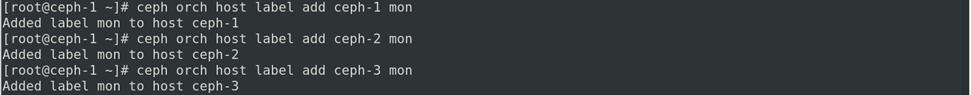

[root@ceph-1 ~]# ceph orch host label add ceph-1 mon

[root@ceph-1 ~]# ceph orch host label add ceph-2 mon

[root@ceph-1 ~]# ceph orch host label add ceph-3 mon

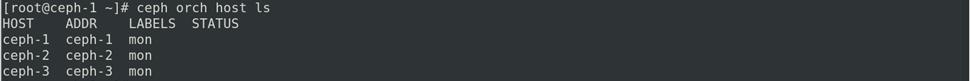

[root@ceph-1 ~]# ceph orch host ls

(3) Pull the images and start the containers on each node

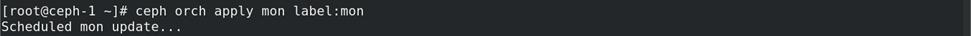

[root@ceph-1 ~]# ceph orch apply mon label:mon

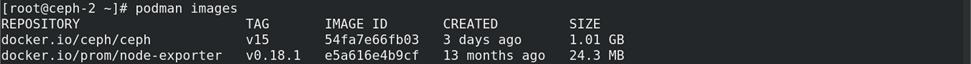

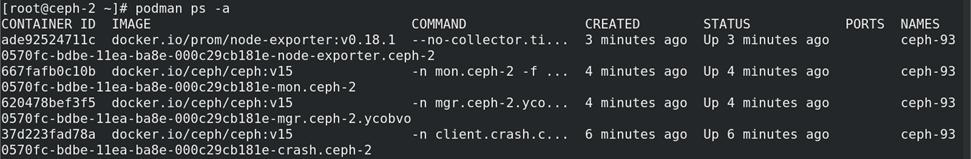

Check the images and running containers on ceph-2

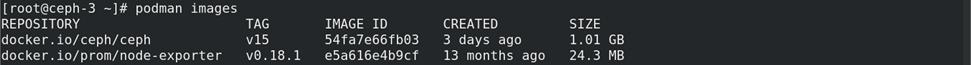

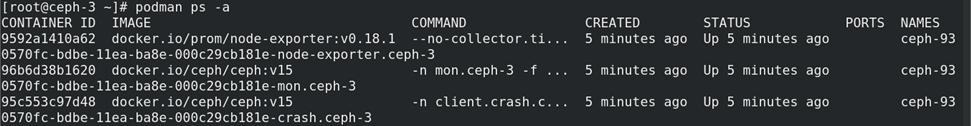

Check the images and running containers on ceph-3

(4) Check the health of the Ceph cluster

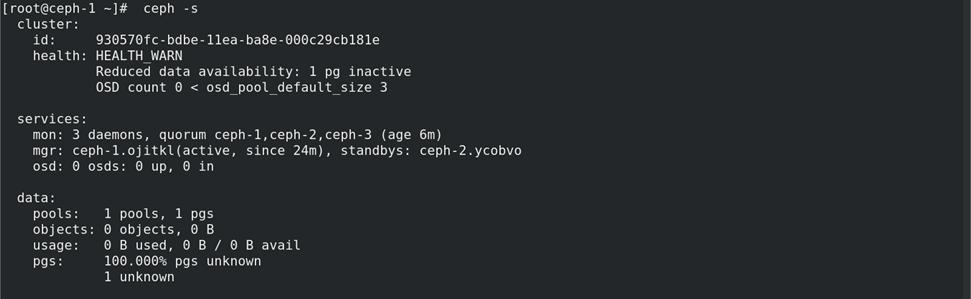

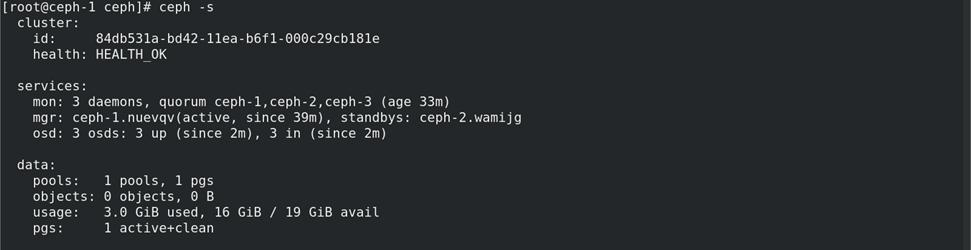

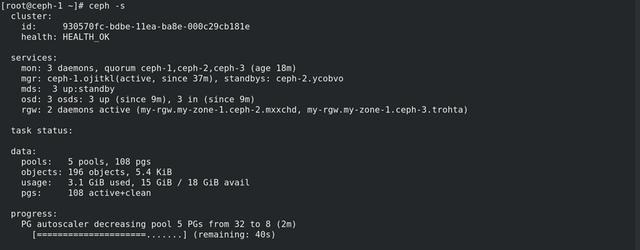

[root@ceph-1 ~]# ceph -s

5. Deploy OSDs

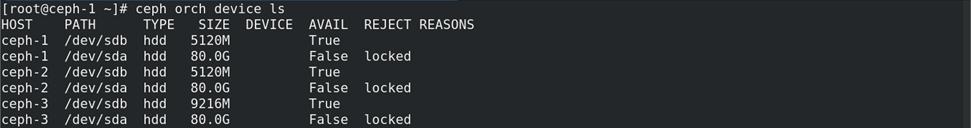

(1) List the disks

[root@ceph-1 ~]# ceph orch device ls

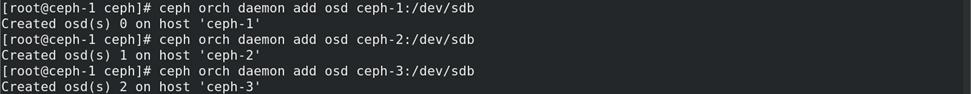

(2) Add the disks

[root@ceph-1 ceph]# ceph orch daemon add osd ceph-1:/dev/sdb

[root@ceph-1 ceph]# ceph orch daemon add osd ceph-2:/dev/sdb

[root@ceph-1 ceph]# ceph orch daemon add osd ceph-3:/dev/sdb

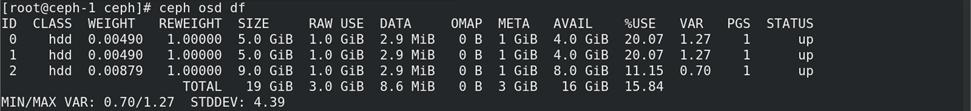

(3) Check the added disks

[root@ceph-1 ceph]# ceph osd df

(4) Check cluster health

[root@ceph-1 ceph]# ceph -s
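Right after OSDs are added the cluster may take a while to settle, so a single status check can be misleading. The sketch below polls `ceph health` until it reports HEALTH_OK. The `wait_for_health_ok` function and the `HEALTH_CMD` override are assumptions of this example, introduced so the loop can also be tried without a cluster.

```shell
# HEALTH_CMD is a hypothetical override; it defaults to the real
# "ceph health" command, whose output starts with the health state.
HEALTH_CMD="${HEALTH_CMD:-ceph health}"

wait_for_health_ok() {
    # $1 = maximum number of checks, $2 = seconds to wait between checks
    attempts="${1:-30}"
    delay="${2:-10}"
    i=1
    while [ "$i" -le "$attempts" ]; do
        status="$($HEALTH_CMD 2>/dev/null)"
        case "$status" in
            HEALTH_OK*)
                echo "cluster healthy after $i check(s)"
                return 0
                ;;
        esac
        echo "check $i: ${status:-no response}"
        sleep "$delay"
        i=$((i + 1))
    done
    return 1
}
```

For example, `wait_for_health_ok 30 10` checks up to 30 times at 10-second intervals and returns a non-zero status if the cluster never reaches HEALTH_OK.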

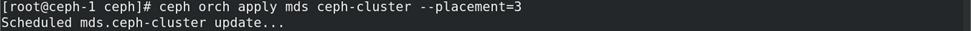

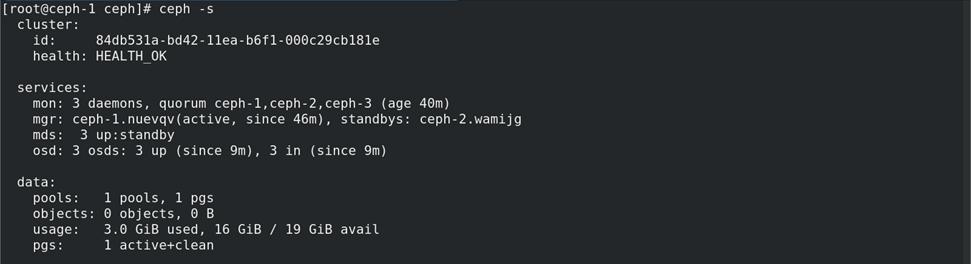

6. Deploy MDS

[root@ceph-1 ceph]# ceph orch apply mds ceph-cluster --placement=3

[root@ceph-1 ceph]# ceph -s

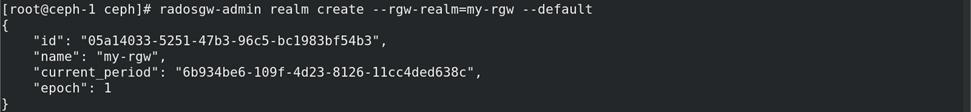

7. Deploy RGW

(1) Create a realm

[root@ceph-1 ceph]# radosgw-admin realm create --rgw-realm=my-rgw --default

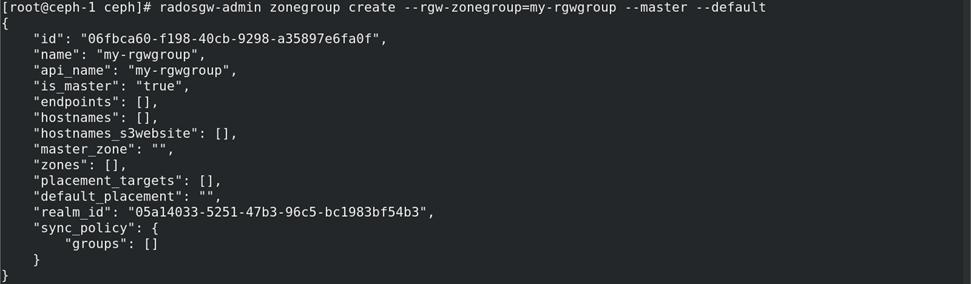

(2) Create a zonegroup

[root@ceph-1 ~]# radosgw-admin zonegroup create --rgw-zonegroup=my-rgwgroup --master --default

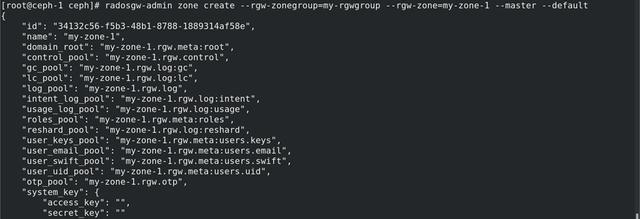

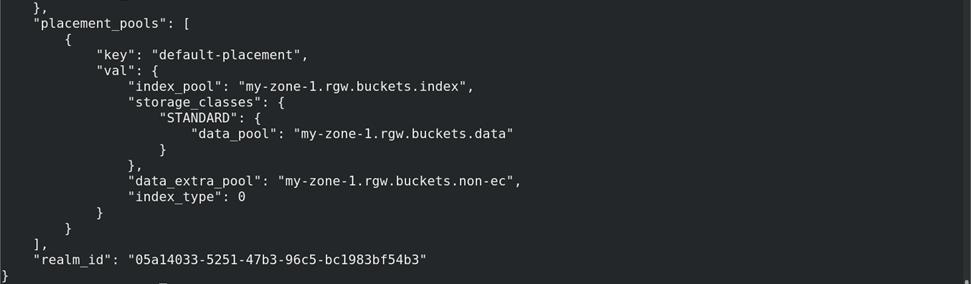

(3) Create a zone

[root@ceph-1 ceph]# radosgw-admin zone create --rgw-zonegroup=my-rgwgroup --rgw-zone=my-zone-1 --master --default

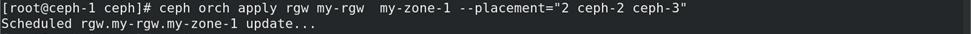

(4) Deploy a set of radosgw daemons

[root@ceph-1 ceph]# ceph orch apply rgw my-rgw my-zone-1 --placement="2 ceph-2 ceph-3"

(5) Check the RGW deployment

[root@ceph-1 ceph]# ceph -s

(6) Create an admin user so that the dashboard can manage RGW

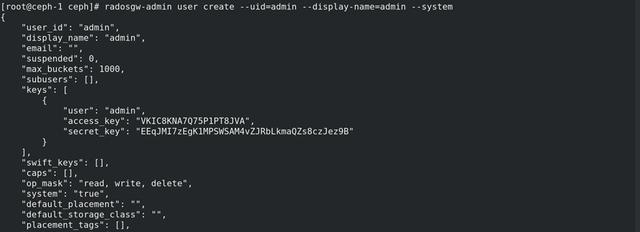

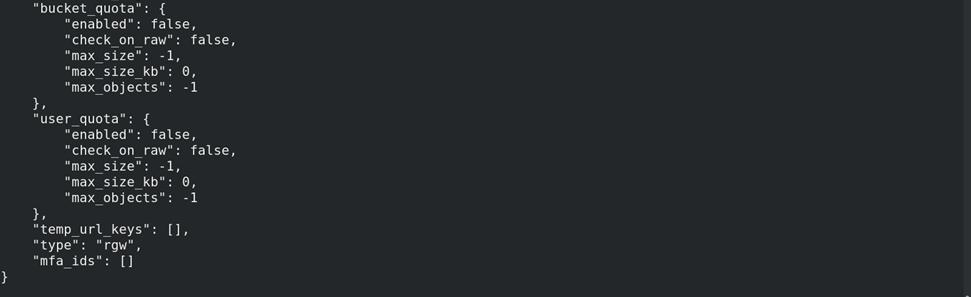

[root@ceph-1 ceph]# radosgw-admin user create --uid=admin --display-name=admin --system

(7) Set the dashboard credentials

Note: use the access key and secret key of the RGW admin user.

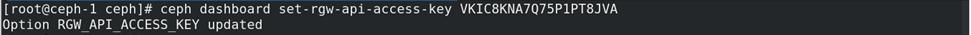

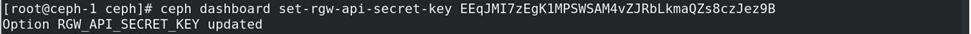

[root@ceph-1 ceph]# ceph dashboard set-rgw-api-access-key VKIC8KNA7Q75P1PT8JVA

[root@ceph-1 ceph]# ceph dashboard set-rgw-api-secret-key EEqJMI7zEgK1MPSWSAM4vZJRbLkmaQZs8czJez9B

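Set the remaining options

[root@ceph-1 ceph]# ceph dashboard set-rgw-api-ssl-verify False

[root@ceph-1 ceph]# ceph dashboard set-rgw-api-scheme http

[root@ceph-1 ceph]# ceph dashboard set-rgw-api-host 192.168.1.0/24

[root@ceph-1 ceph]# ceph dashboard set-rgw-api-port 80

[root@ceph-1 ceph]# ceph dashboard set-rgw-api-user-id admin

Note: set-rgw-api-host expects the hostname or IP address of a node running RGW (ceph-2 or ceph-3 in this setup), not a network range, so replace the value shown above accordingly.

(8) Restart RGW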

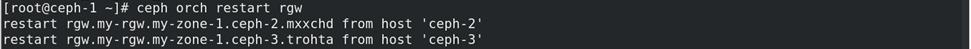

[root@ceph-1 ceph]# ceph orch restart rgw

(4) Modify the Ceph configuration file

[root@ceph-1 ~]# vim /etc/ceph/ceph.conf

Add:

    public network = 192.168.1.0/24
    cluster network = 192.168.1.0/24
    osd_pool_default_size = 2
    mon_clock_drift_allowed = 2    ## increase the clock drift allowed between monitors

    [mon]
    mon allow pool delete = true    ## allow pools to be deleted

Mount and use

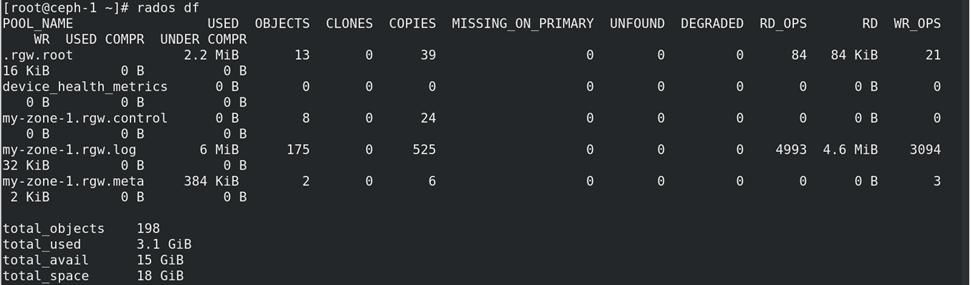

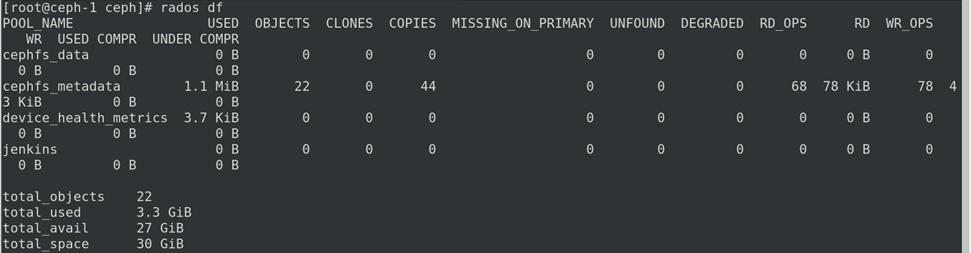

[root@ceph-1 ~]# rados df

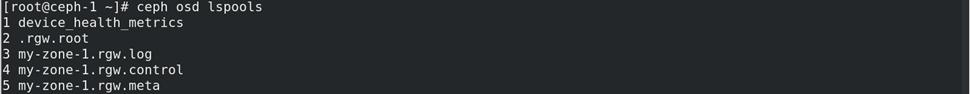

[root@ceph-1 ~]# ceph osd lspools

III. Create the Ceph file system

1. Create the storage pools

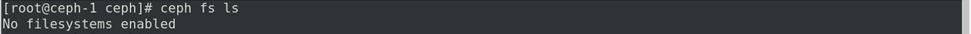

[root@ceph-1 ceph]# ceph fs ls

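A Ceph file system requires at least two RADOS pools, one for data and one for metadata. When configuring these pools, consider the following:

Use a higher replication level for the metadata pool, because any data loss in this pool can render the whole file system unusable.

Put the metadata pool on low-latency storage (such as SSDs), because it directly affects the latency of client operations.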

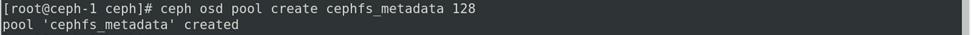

[root@ceph-1 ceph]# ceph osd pool create cephfs_data 128

[root@ceph-1 ceph]# ceph osd pool create cephfs_metadata 128

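Note on creating storage pools

The command above requires an explicit pg_num value, because it is not calculated for you at creation time (newer Ceph releases also include a PG autoscaler that can adjust it afterwards). Some commonly used values:

* Fewer than 5 OSDs: set pg_num to 128

* 5 to 10 OSDs: set pg_num to 512

* 10 to 50 OSDs: set pg_num to 4096

* More than 50 OSDs: you need to understand the trade-offs and calculate pg_num yourself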
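The rule of thumb above can be written as a small lookup function. This is only a sketch of the listed values; the function name `pg_num_for_osds` is hypothetical, and because the list leaves the boundary at exactly 10 OSDs ambiguous, this version assumes 10 falls into the 512 bucket.

```shell
pg_num_for_osds() {
    # $1 = number of OSDs in the cluster
    osds="$1"
    if [ "$osds" -lt 5 ]; then
        echo 128
    elif [ "$osds" -le 10 ]; then
        # Assumption: exactly 10 OSDs is treated as part of the 5-10 range.
        echo 512
    elif [ "$osds" -le 50 ]; then
        echo 4096
    else
        echo "more than 50 OSDs: calculate pg_num manually" >&2
        return 1
    fi
}

# The three-node cluster in this article has 3 OSDs.
pg_num_for_osds 3    # prints 128
```

The result can be passed straight to pool creation, for example `ceph osd pool create cephfs_data "$(pg_num_for_osds 3)"`.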

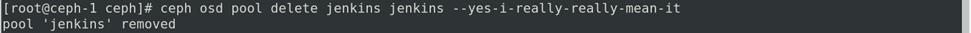

Delete a storage pool
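No command is given in the text for this step. As a hedged sketch, the standard CLI form repeats the pool name twice and requires a confirmation flag, and it only works when monitors allow pool deletion (the mon allow pool delete setting). The `delete_pool` wrapper and its dry-run default are illustrative additions, not something from the original.

```shell
delete_pool() {
    # $1 = pool name, $2 = "run" to execute; otherwise only print the command
    pool="$1"
    mode="${2:-dry-run}"
    if [ -z "$pool" ]; then
        echo "usage: delete_pool POOL [run]" >&2
        return 2
    fi
    # The pool name is given twice on purpose; Ceph requires this repetition.
    cmd="ceph osd pool delete $pool $pool --yes-i-really-really-mean-it"
    if [ "$mode" = "run" ]; then
        $cmd
    else
        echo "$cmd"
    fi
}

# Dry run: prints the command for a hypothetical pool named test_pool.
delete_pool test_pool
```

Deleting a pool destroys its data permanently, which is why the sketch prints the command by default.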

2. Create the file system

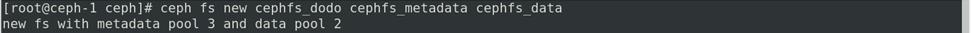

[root@ceph-1 ceph]# ceph fs new cephfs_dodo cephfs_metadata cephfs_data

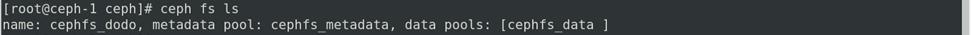

View the newly created CephFS

[root@ceph-1 ceph]# ceph fs ls

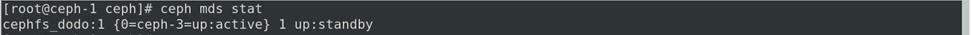

Check the MDS node status

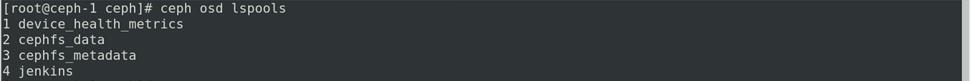

List the storage pools

[root@ceph-1 ceph]# rados lspools

or

[root@ceph-1 ceph]# ceph osd lspools

[root@ceph-1 ceph]# rados df

3. Mount the Ceph file system

(1) Create the mount point

[root@client ~]# mkdir /dodo

(2) Mount the Ceph file system with the kernel driver

Prepare the key

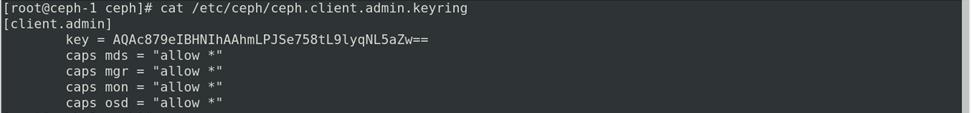

[root@ceph-1 ceph]# cat /etc/ceph/ceph.client.admin.keyring

Copy the key from the Ceph server to the client.

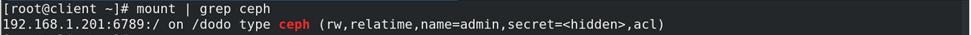

[root@client ~]# mkdir /etc/ceph/

[root@client ~]# vim /etc/ceph/admin.secret

Add:

    AQAc879eIBHNIhAAhmLPJSe758tL9lyqNL5aZw==

Mount

[root@client ~]# mount -t ceph 192.168.1.201:6789:/ /dodo/ -o name=admin,secret=AQAc879eIBHNIhAAhmLPJSe758tL9lyqNL5aZw==

or

[root@client ~]# mount -t ceph 192.168.1.201:6789:/ /dodo -o name=admin,secretfile=/etc/ceph/admin.secret

[root@client ~]# mount | grep ceph
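Instead of pasting the key by hand, the secret file can be derived from the keyring. The sketch below assumes the usual keyring layout, in which the key sits on a line of the form `key = <value>`. The helper names `extract_key` and `write_secret_file` are hypothetical. Using secretfile rather than secret= also keeps the key out of the shell history.

```shell
extract_key() {
    # Print the value of the first "key = ..." line in a keyring file.
    awk '$1 == "key" && $2 == "=" { print $3; exit }' "$1"
}

write_secret_file() {
    # $1 = keyring path, $2 = destination secret file.
    # The subshell limits the restrictive umask to this one write,
    # so the secret file is readable by its owner only.
    ( umask 077 && extract_key "$1" > "$2" )
}
```

For example, `write_secret_file /etc/ceph/ceph.client.admin.keyring /etc/ceph/admin.secret` produces the file used by the secretfile mount option.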

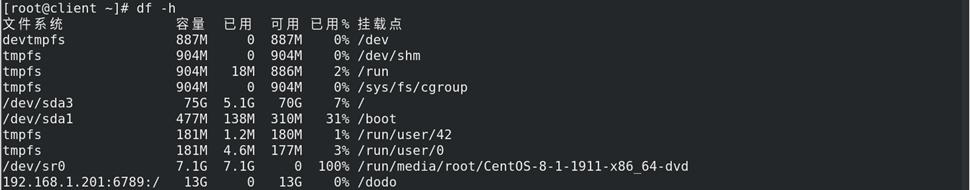

[root@client ~]# df -h

Unmount

[root@client ~]# umount /dodo

(3) Mount the Ceph file system in user space

Install ceph-fuse

[root@client ~]# yum install epel-release -y

[root@client ~]# yum install -y ceph-fuse

Copy the Ceph configuration and keyring files

[root@ceph-1 ~]# scp /etc/ceph/ceph.* client:/etc/ceph/

Note: set the permissions of the Ceph configuration and keyring files to 644.

[root@client ~]# chmod 644 /etc/ceph/*

Mount

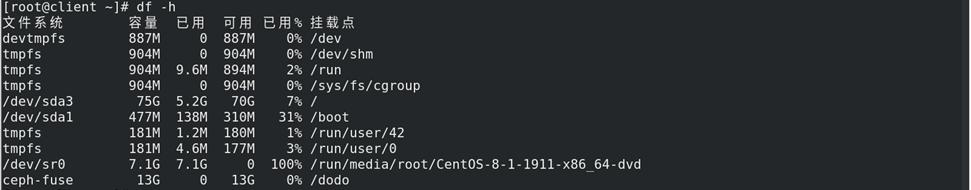

[root@client ~]# ceph-fuse -m 192.168.1.201:6789 /dodo

[root@client ~]# df -h

Unmount

[root@client ~]# fusermount -u /dodo

IV. Using Ceph from Kubernetes

(I) Prepare the Ceph storage

1. Create the storage pool

[root@ceph-1 ceph]# ceph osd pool create dodo-cephfs 64

2. Prepare a Kubernetes client account on Ceph

[root@ceph-1 ceph]# ceph auth get-or-create client.dodo mon 'allow r' osd 'allow rwx pool=dodo-cephfs' -o dodo-cephfs.k8s.keyring

3. Get the account's key

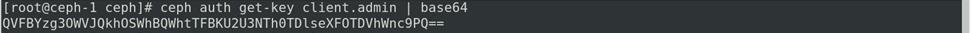

[root@ceph-1 ceph]# ceph auth get-key client.admin | base64

The encoded key is:

QVFBYzg3OWVJQkhOSWhBQWhtTFBKU2U3NTh0TDlseXFOTDVhWnc9PQ==
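When the key is encoded from a string rather than piped from ceph auth get-key, a trailing newline can slip into the encoded value and produce a Secret that does not match the real key. The following sketch shows a way to avoid that. The `encode_key` function name is an assumption of this example, and the key is the one shown earlier in this article.

```shell
encode_key() {
    # printf '%s' writes the key without the trailing newline that a plain
    # echo would append and that base64 would otherwise encode as well.
    printf '%s' "$1" | base64
}

# Encodes the admin key used in this article; the result matches the
# encoded value shown above.
encode_key 'AQAc879eIBHNIhAAhmLPJSe758tL9lyqNL5aZw=='
```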

(II) Install Ceph on the clients (k8s-node-1, k8s-node-2, and k8s-master)

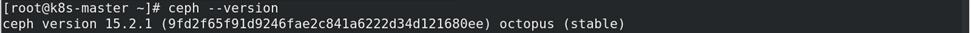

Note: the client version should match the version of the Ceph cluster.

1. Install Ceph

[root@k8s-master ~]# vim /etc/yum.repos.d/ceph.repo

Add:

    [Ceph]
    name=Ceph packages for $basearch
    baseurl=https://mirrors.aliyun.com/ceph/rpm-octopus/el7/x86_64/
    enabled=1
    gpgcheck=1
    type=rpm-md
    gpgkey=https://mirrors.aliyun.com/ceph/keys/release.asc

    [Ceph-noarch]
    name=Ceph noarch packages
    baseurl=https://mirrors.aliyun.com/ceph/rpm-octopus/el7/noarch/
    enabled=1
    gpgcheck=1
    type=rpm-md
    gpgkey=https://mirrors.aliyun.com/ceph/keys/release.asc

    [ceph-source]
    name=Ceph source packages
    baseurl=https://mirrors.aliyun.com/ceph/rpm-octopus/el7/SRPMS/
    enabled=1
    gpgcheck=1
    type=rpm-md
    gpgkey=https://mirrors.aliyun.com/ceph/keys/release.asc

[root@k8s-master ~]# scp /etc/yum.repos.d/ceph.repo 192.168.1.1:/etc/yum.repos.d/

[root@k8s-master ~]# scp /etc/yum.repos.d/ceph.repo 192.168.1.2:/etc/yum.repos.d/

[root@k8s-master ~]# yum install epel-release -y

[root@k8s-master ~]# yum -y install ceph-common

[root@k8s-master ~]# ceph --version
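This repository file differs from the one on the Ceph nodes only in the distribution tag (el7 here, el8 there), so both can be generated from one template. The sketch below is one way to do that; the function names `repo_section` and `ceph_repo` are hypothetical, and the mirror URLs are the ones used in this article.

```shell
repo_section() {
    # $1 = section id, $2 = display name, $3 = baseurl
    cat <<EOF
[$1]
name=$2
baseurl=$3
enabled=1
gpgcheck=1
type=rpm-md
gpgkey=https://mirrors.aliyun.com/ceph/keys/release.asc

EOF
}

ceph_repo() {
    # $1 = Ceph release name, $2 = distribution tag (el7 or el8)
    release="${1:-octopus}"
    dist="${2:-el8}"
    base="https://mirrors.aliyun.com/ceph/rpm-${release}/${dist}"
    # Single quotes keep $basearch literal so that yum expands it later.
    repo_section Ceph 'Ceph packages for $basearch' "${base}/x86_64/"
    repo_section Ceph-noarch 'Ceph noarch packages' "${base}/noarch/"
    repo_section ceph-source 'Ceph source packages' "${base}/SRPMS/"
}
```

On a Kubernetes node this could be used as `ceph_repo octopus el7 > /etc/yum.repos.d/ceph.repo`.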

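2. Copy the Ceph cluster's configuration file ceph.conf to the /etc/ceph directory on all nodes

[root@ceph-1 ~]# scp /etc/ceph/ceph.conf 192.168.1.1:/etc/ceph/

[root@ceph-1 ~]# scp /etc/ceph/ceph.conf 192.168.1.2:/etc/ceph/

[root@ceph-1 ~]# scp /etc/ceph/ceph.conf 192.168.1.3:/etc/ceph/

3. Copy the Ceph cluster's keyring file to the /etc/ceph directory on the Kubernetes nodes

Note: the commands below copy the dodo-cephfs.k8s.keyring created earlier; the admin keyring, ceph.client.admin.keyring, is the one whose key is used for the Secret in the following steps.

[root@ceph-1 ~]# scp /etc/ceph/dodo-cephfs.k8s.keyring 192.168.1.1:/etc/ceph/

[root@ceph-1 ~]# scp /etc/ceph/dodo-cephfs.k8s.keyring 192.168.1.2:/etc/ceph/

[root@ceph-1 ~]# scp /etc/ceph/dodo-cephfs.k8s.keyring 192.168.1.3:/etc/ceph/

4. Generate the encoded key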

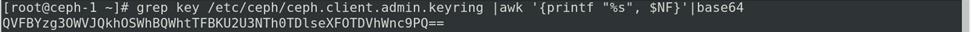

[root@ceph-1 ~]# grep key /etc/ceph/ceph.client.admin.keyring | awk '{printf "%s", $NF}' | base64

QVFBYzg3OWVJQkhOSWhBQWhtTFBKU2U3NTh0TDlseXFOTDVhWnc9PQ==

5. Create the Ceph secret

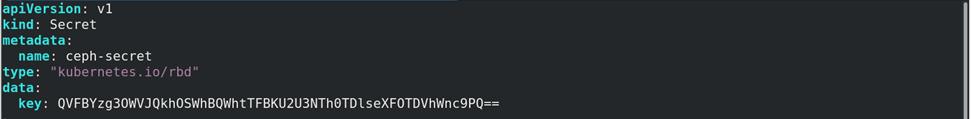

[root@k8s-master ~]# vim ceph-secret.yaml

Add:

    apiVersion: v1
    kind: Secret
    metadata:
      name: ceph-secret
    type: "kubernetes.io/rbd"
    data:
      key: QVFBYzg3OWVJQkhOSWhBQWhtTFBKU2U3NTh0TDlseXFOTDVhWnc9PQ==
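The Secret manifest can also be generated from the raw key, which avoids copying the encoded string by hand. This is a minimal sketch, assuming the same Secret name and type as in this article; the `ceph_secret_yaml` function is a hypothetical helper.

```shell
ceph_secret_yaml() {
    # $1 = Secret name, $2 = raw (not yet encoded) Ceph key
    encoded="$(printf '%s' "$2" | base64)"
    cat <<EOF
apiVersion: v1
kind: Secret
metadata:
  name: $1
type: kubernetes.io/rbd
data:
  key: $encoded
EOF
}
```

On a host that has both the ceph and kubectl commands available, the output could be applied directly with `ceph_secret_yaml ceph-secret "$(ceph auth get-key client.admin)" | kubectl apply -f -`.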

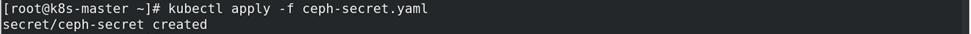

[root@k8s-master ~]# kubectl apply -f ceph-secret.yaml

[root@k8s-master ~]# kubectl get secrets ceph-secret

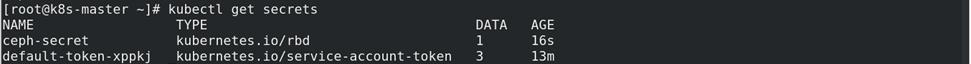

6. Create the storage class

[root@k8s-master ~]# vim ceph-class.yaml

Add:

    apiVersion: storage.k8s.io/v1
    kind: StorageClass
    metadata:
      name: test-ceph
    provisioner: kubernetes.io/rbd
    parameters:
      monitors: 192.168.1.201:6789
      adminId: admin
      adminSecretName: ceph-secret
      adminSecretNamespace: default
      pool: dodo-cephfs
      userId: admin
      userSecretName: ceph-secret

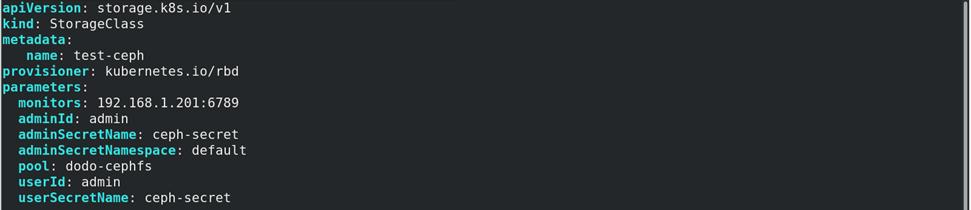

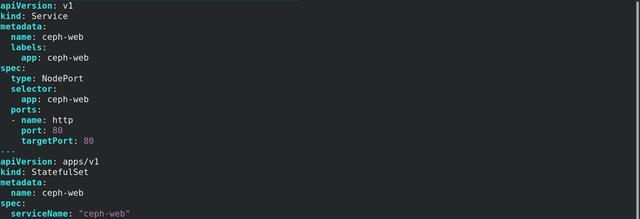

7. Use Ceph

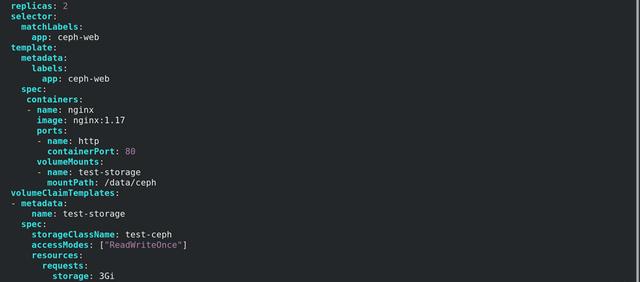

[root@k8s-master ~]# vim ceph-web.yaml

Add:

    apiVersion: v1
    kind: Service
    metadata:
      name: ceph-web
      labels:
        app: ceph-web
    spec:
      type: NodePort
      selector:
        app: ceph-web
      ports:
      - name: http
        port: 80
        targetPort: 80
    ---
    apiVersion: apps/v1
    kind: StatefulSet
    metadata:
      name: ceph-web
    spec:
      serviceName: "ceph-web"
      replicas: 2
      selector:
        matchLabels:
          app: ceph-web
      template:
        metadata:
          labels:
            app: ceph-web
        spec:
          containers:
          - name: nginx
            image: nginx:1.17
            ports:
            - name: http
              containerPort: 80
            volumeMounts:
            - name: test-storage
              mountPath: /usr/share/nginx/html
      volumeClaimTemplates:
      - metadata:
          name: test-storage
        spec:
          storageClassName: test-ceph
          accessModes: ["ReadWriteOnce"]
          resources:
            requests:
              storage: 3Gi

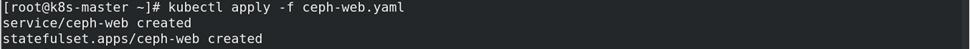

[root@k8s-master ~]# kubectl apply -f ceph-web.yaml

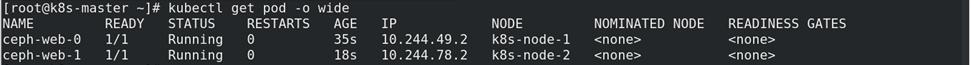

[root@k8s-master ~]# kubectl get pod -o wide

[root@k8s-master ~]# kubectl get pv

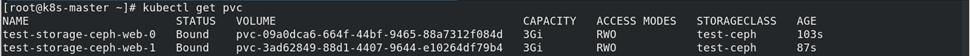

[root@k8s-master ~]# kubectl get pvc

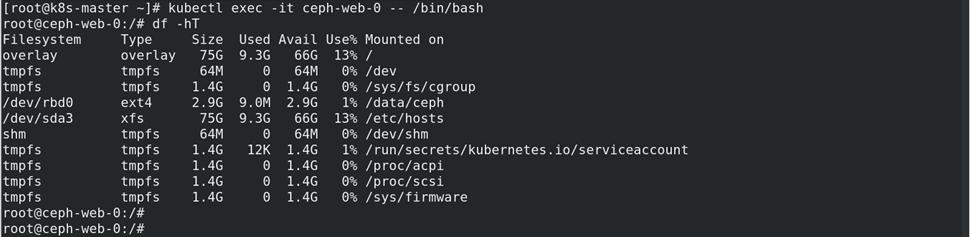

Attach to the containers and check the mounts

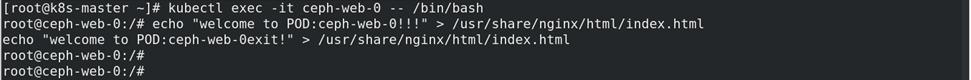

Add test files

[root@k8s-master ~]# kubectl exec -it ceph-web-0 -- /bin/bash

root@ceph-web-0:/# echo 'welcome to POD:ceph-web-0!!!' > /usr/share/nginx/html/index.html

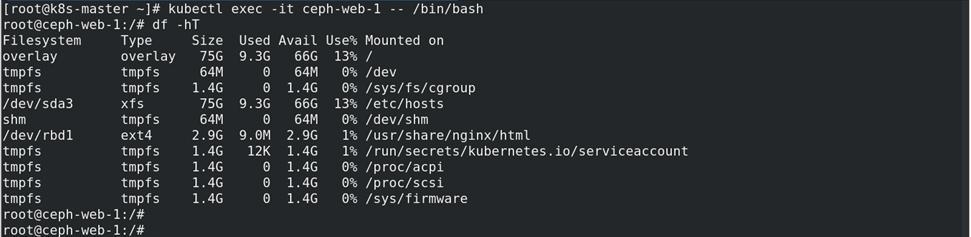

[root@k8s-master ~]# kubectl exec -it ceph-web-1 -- /bin/bash

root@ceph-web-1:/# df -hT

[root@k8s-master ~]# kubectl exec -it ceph-web-1 -- /bin/bash

root@ceph-web-1:/# echo 'welcome to POD:ceph-web-1!!!' > /usr/share/nginx/html/index.html

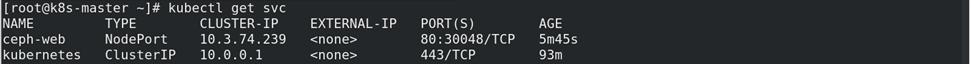

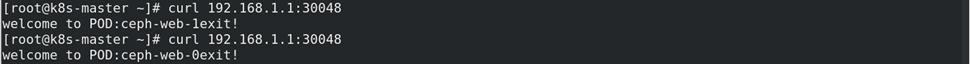

Access:

Note: the responses from the two pods are served in round-robin fashion.